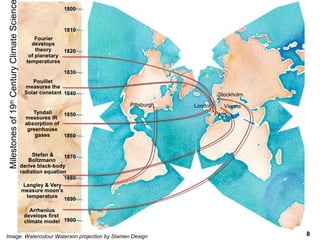

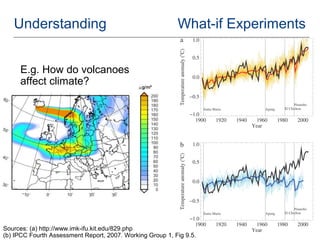

The document discusses climate modeling and its parallels with software engineering, highlighting how models are used to understand climate systems, facilitate communication, and improve decision-making. It outlines the history and evolution of climate models, the engineering challenges involved, and the importance of interdisciplinary collaboration. Key observations underline both the limitations and the strengths of models: they are inherently incomplete, and integration is needed to enhance their value.