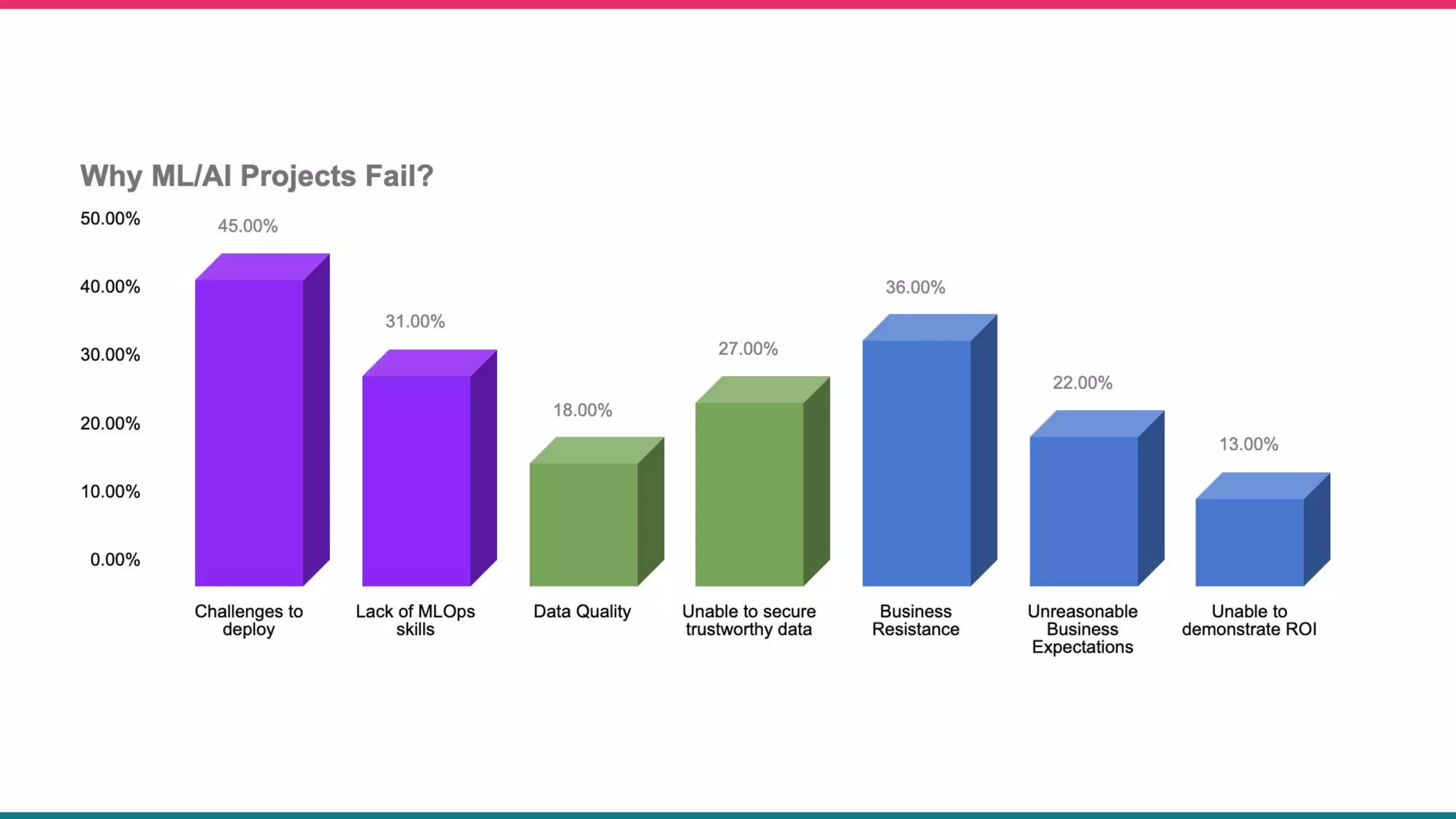

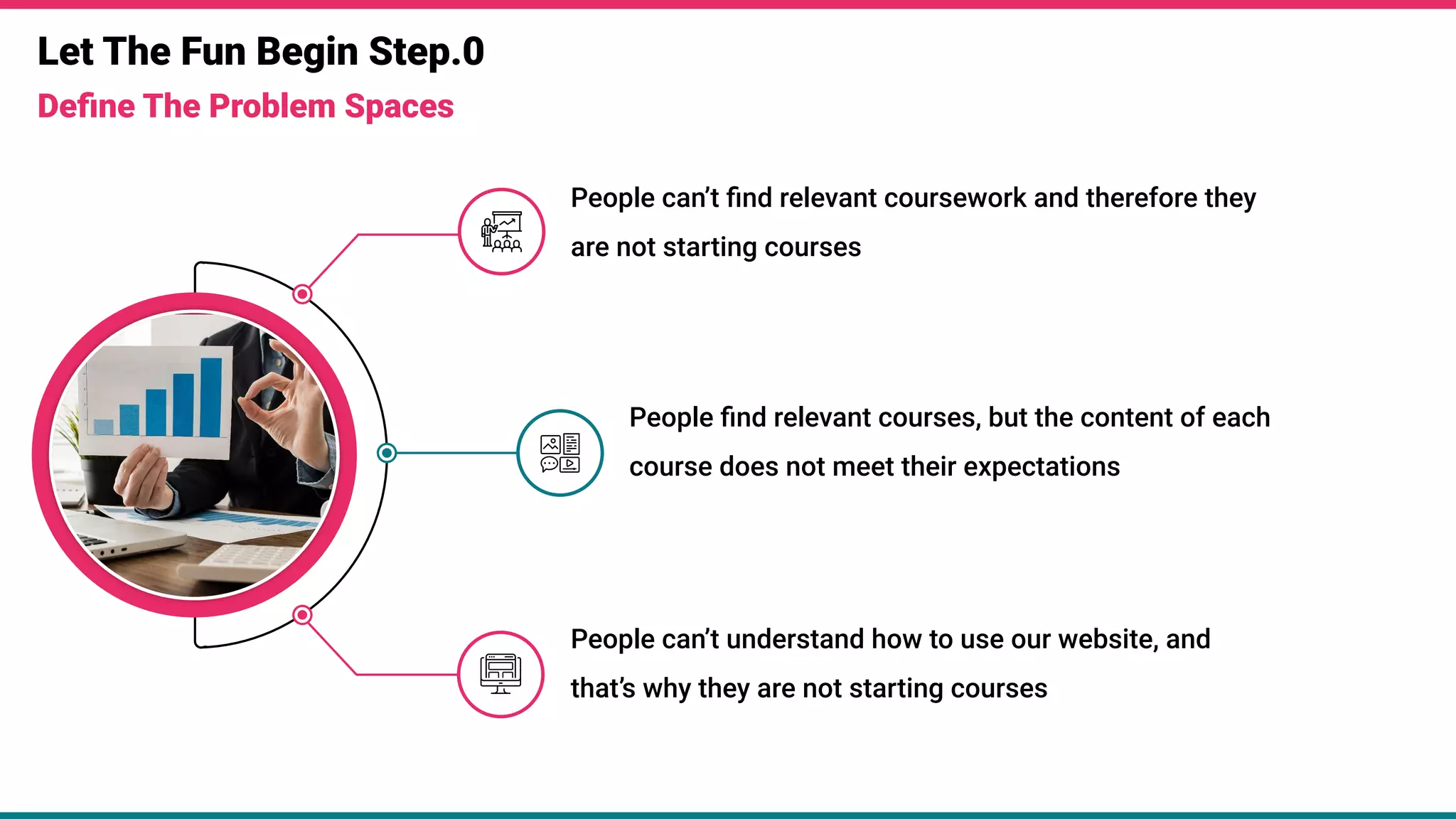

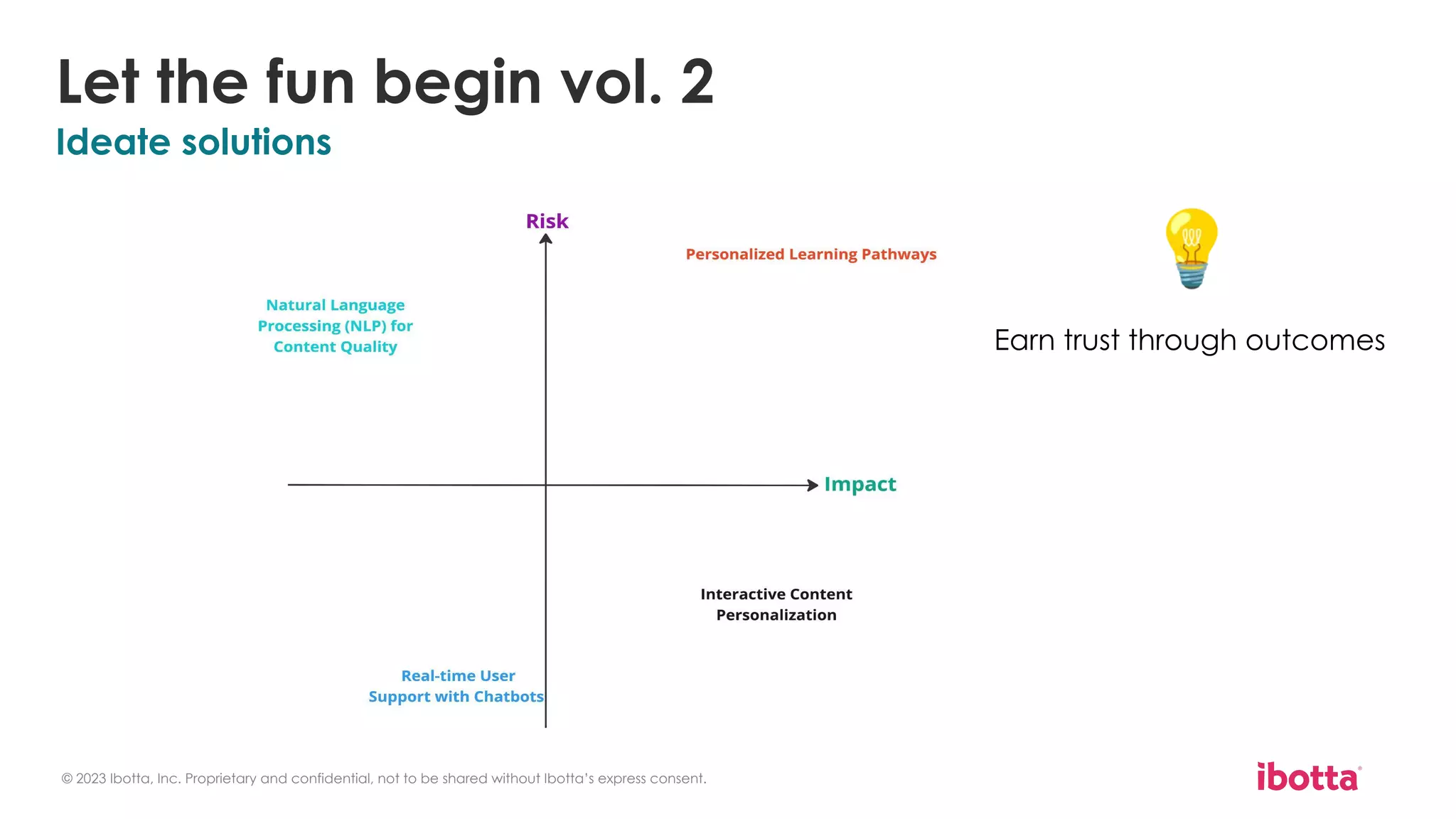

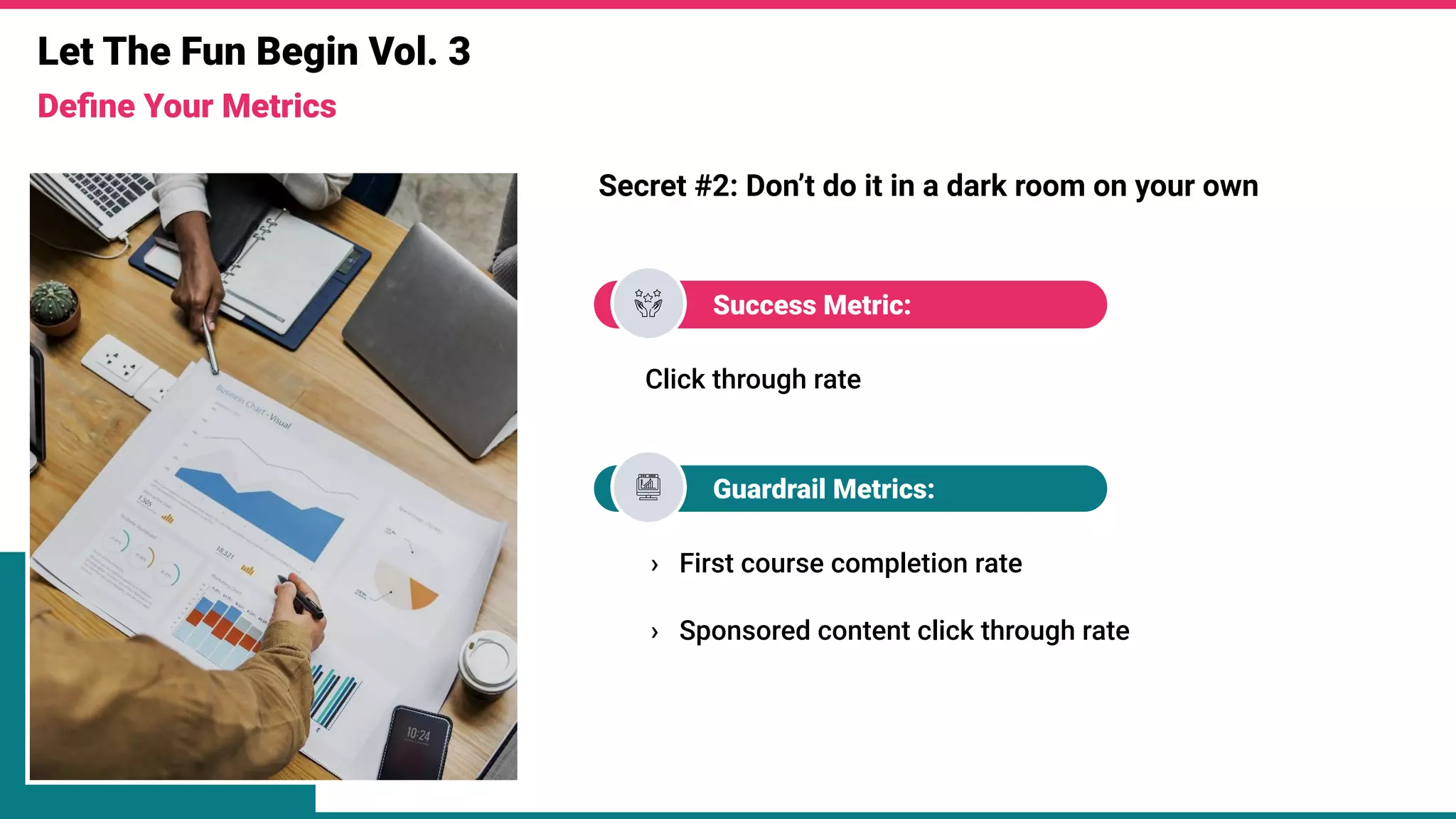

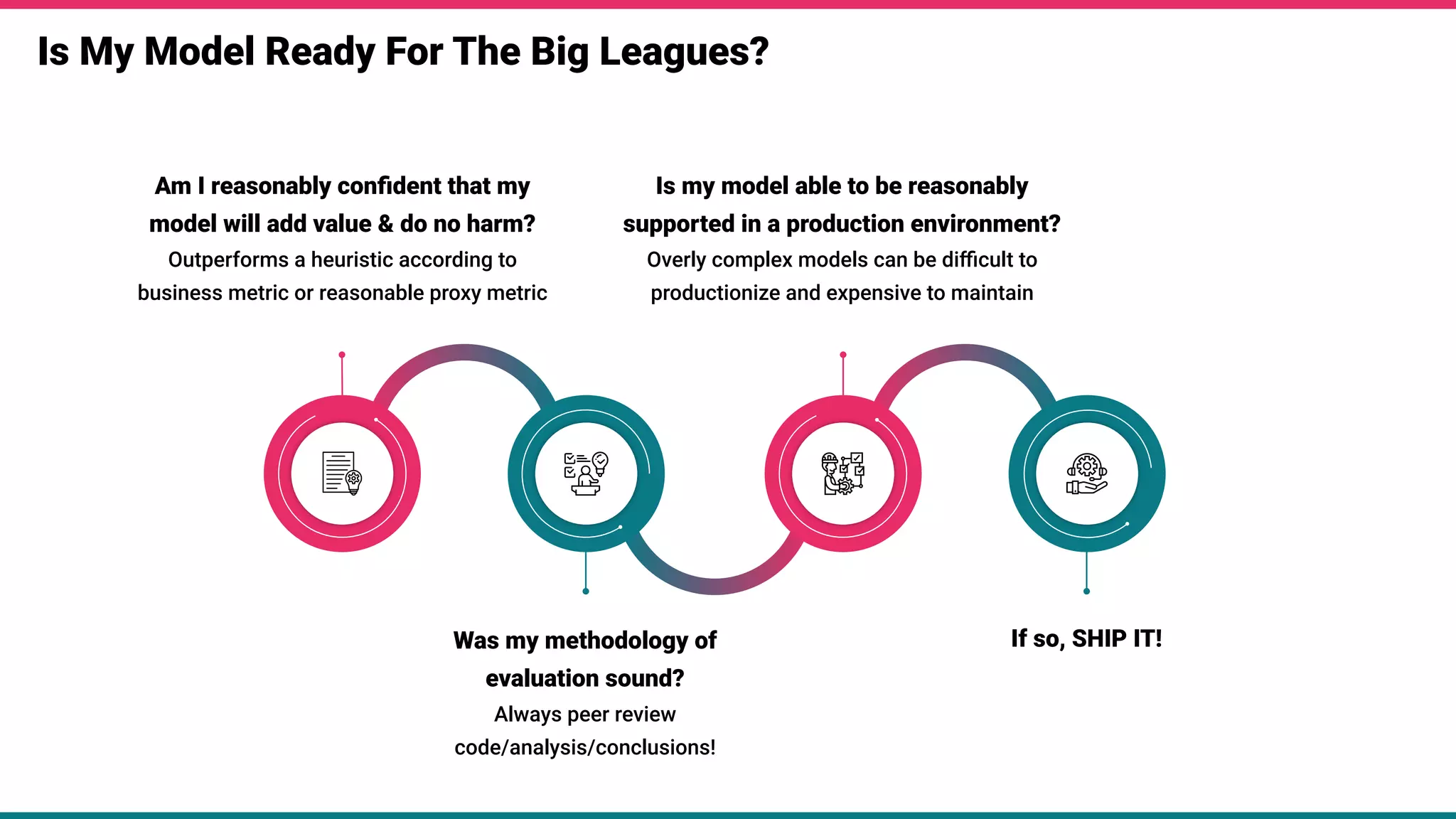

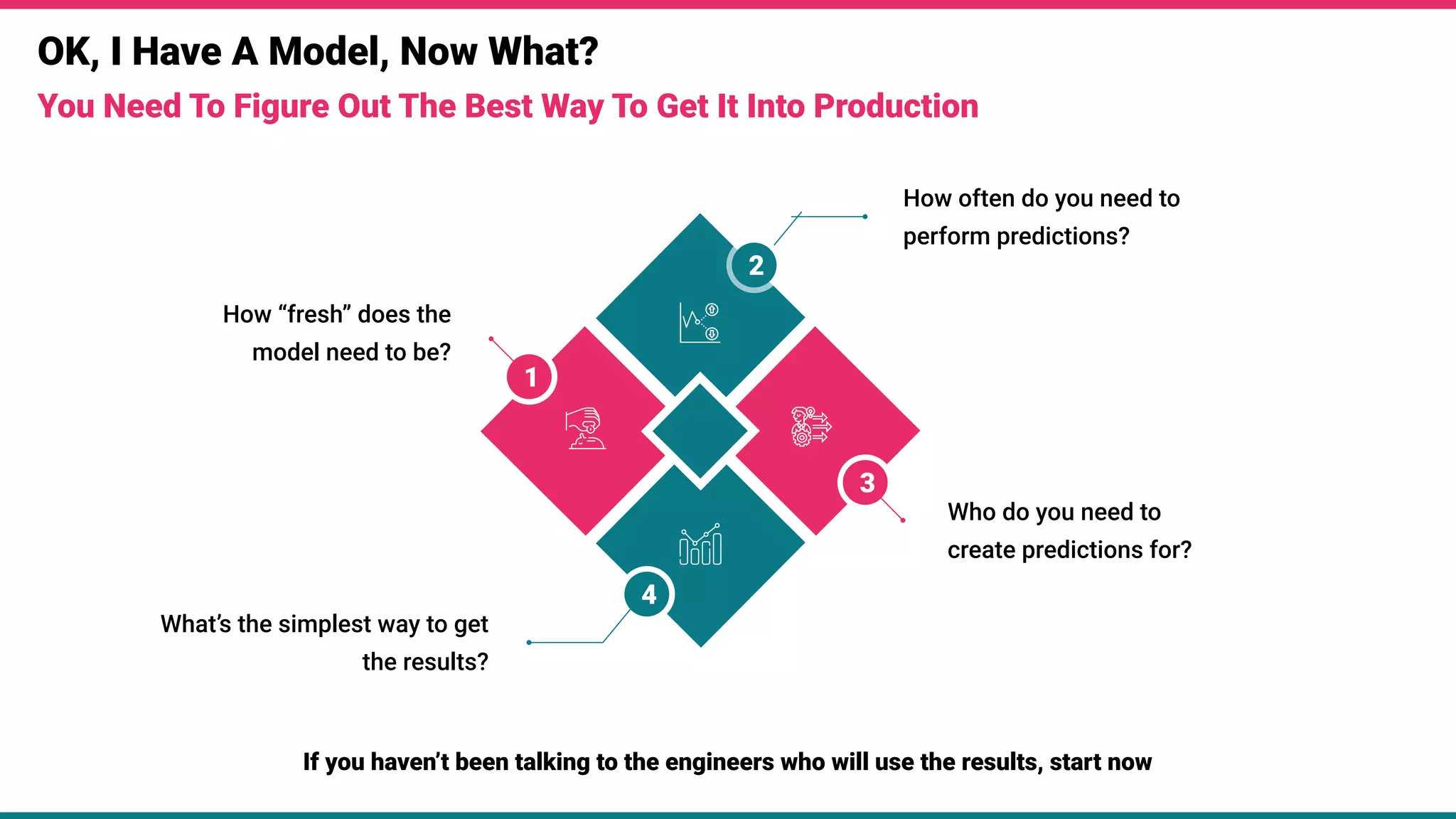

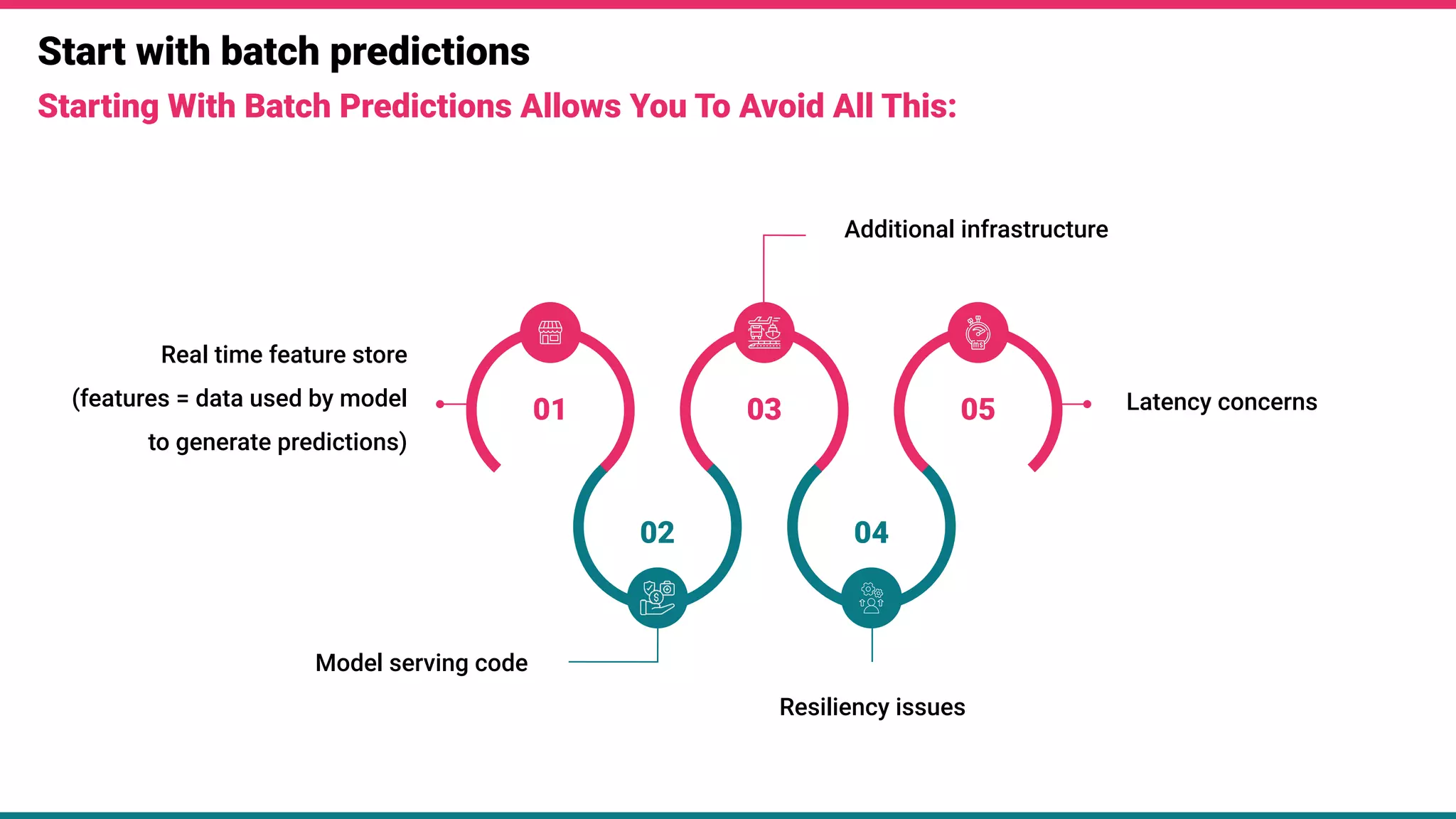

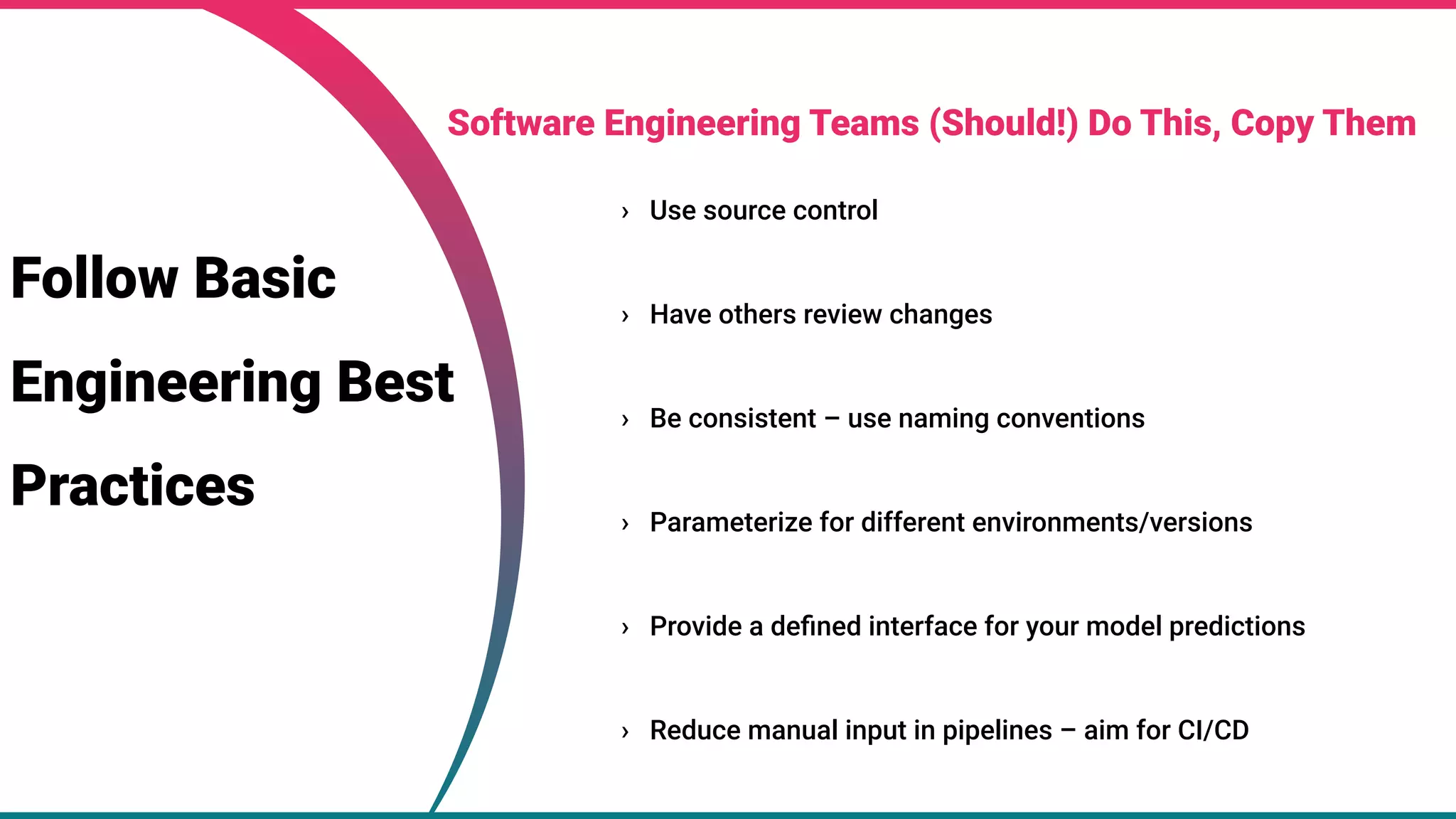

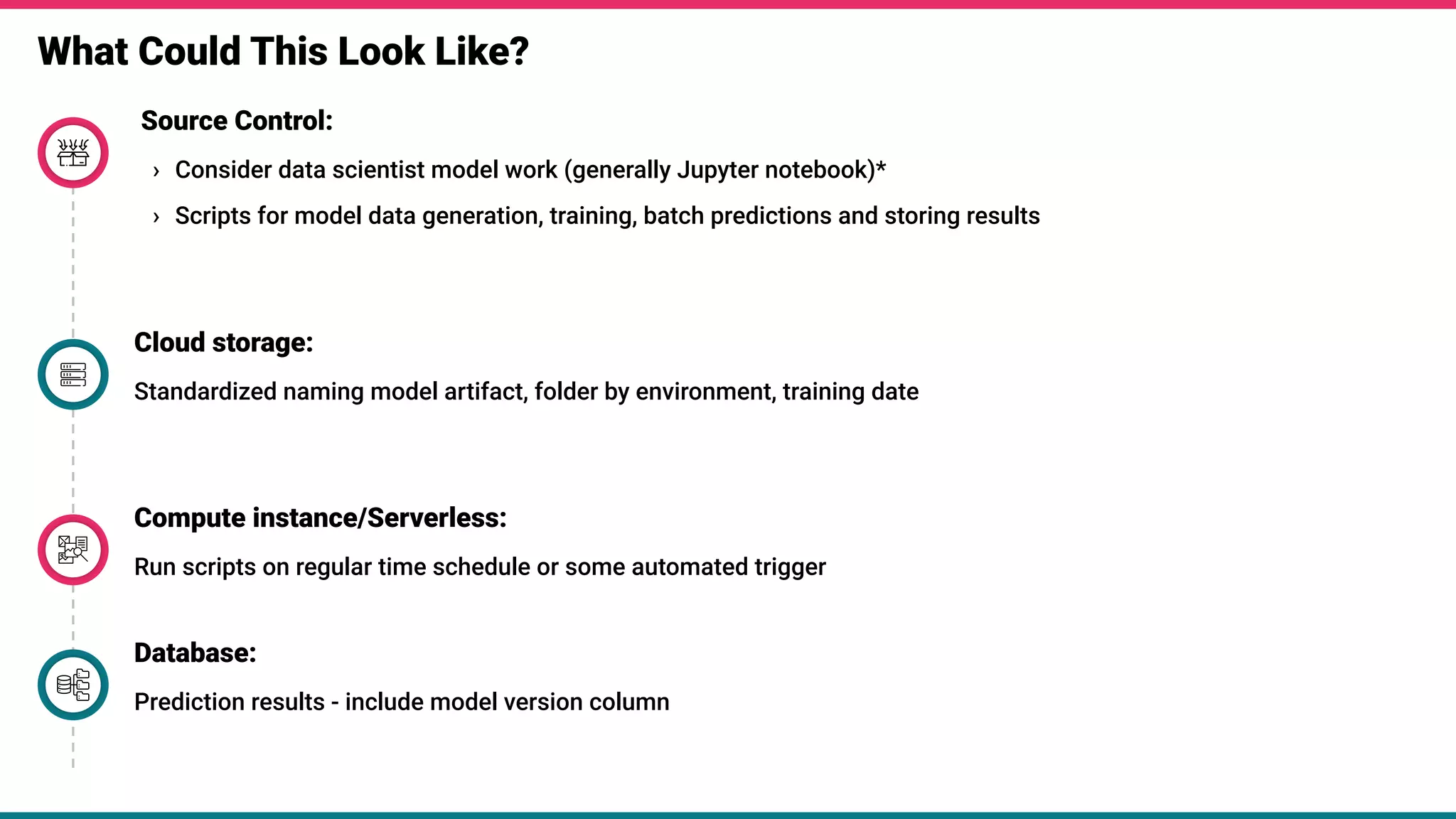

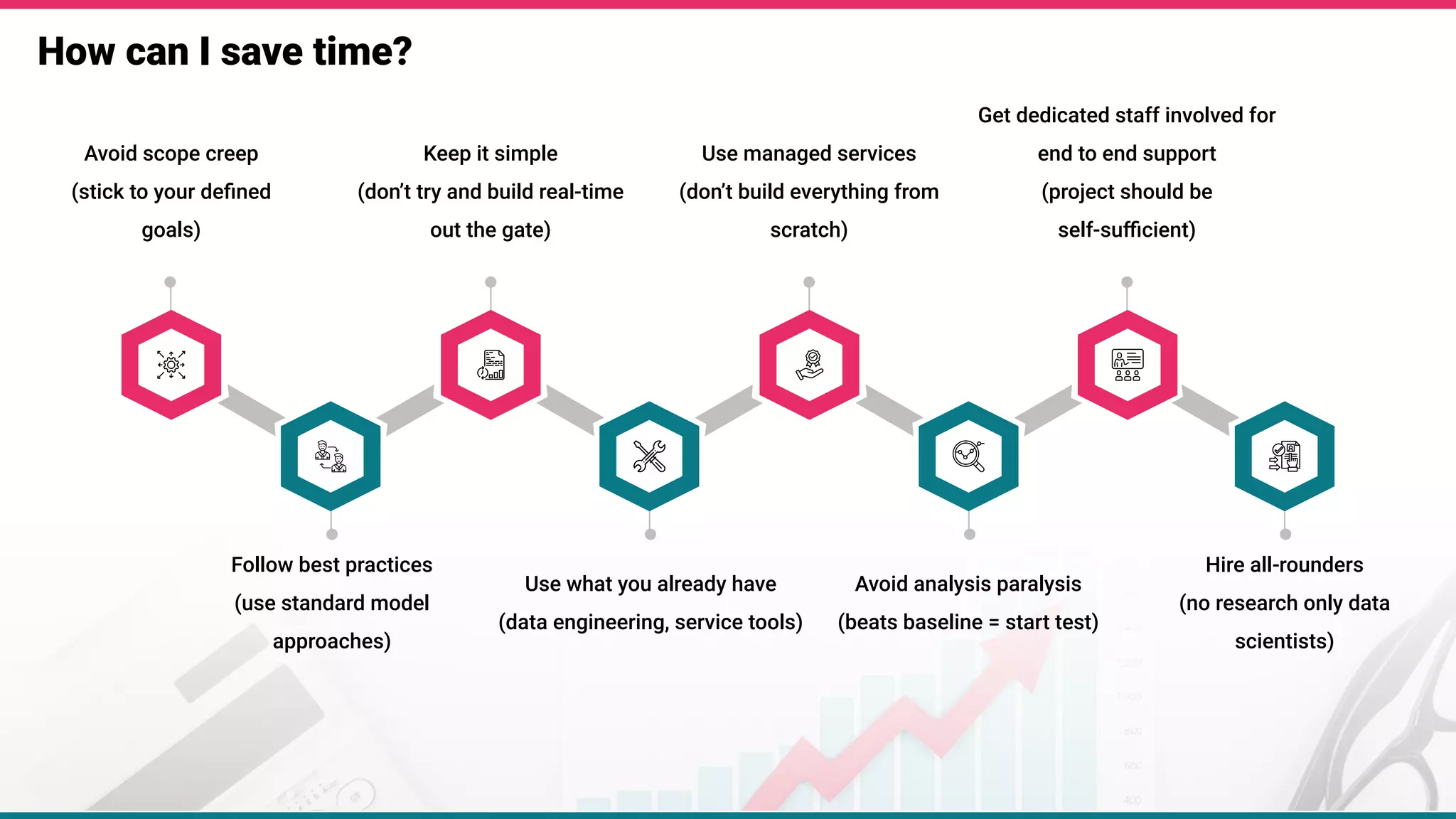

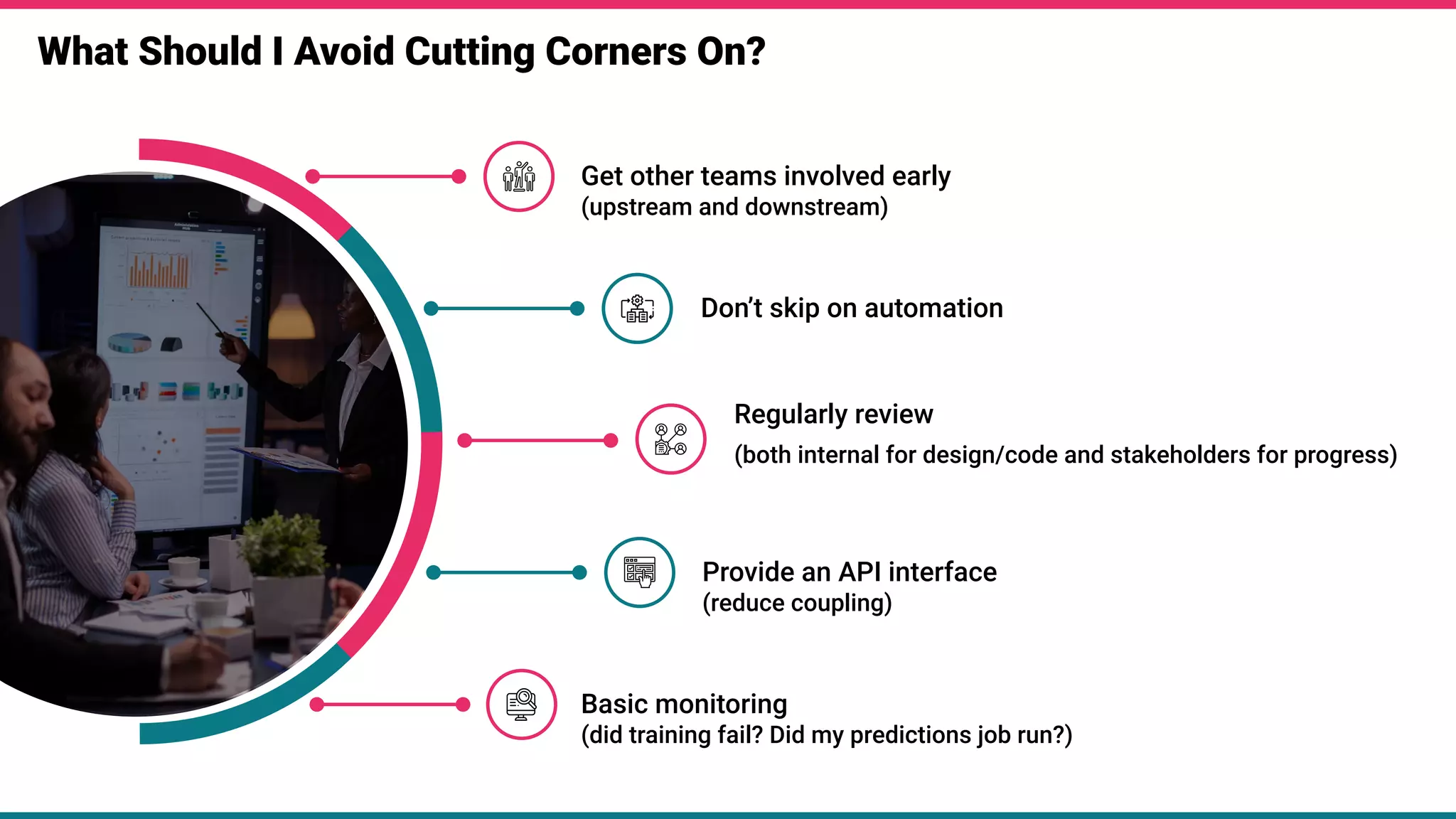

The document is a practical guide for startups on using machine learning (ML) and AI to drive growth and innovation. It walks through the essential steps, such as defining the problem, forming hypotheses, and collaborating across teams. It also highlights the challenges and considerations involved in experimenting with models and productionizing ML solutions. Key takeaways: use clean, representative data; perform offline testing; and follow engineering best practices to ensure a successful implementation.