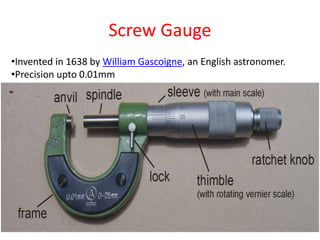

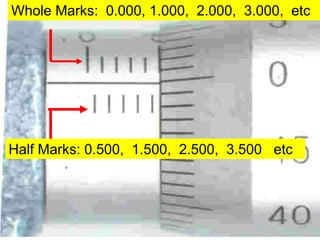

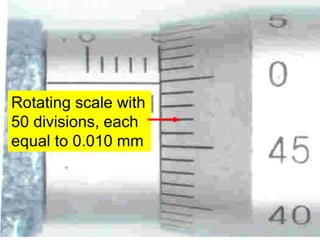

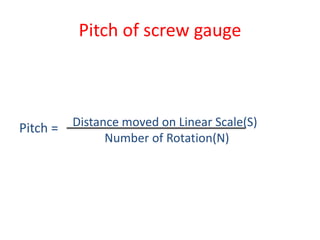

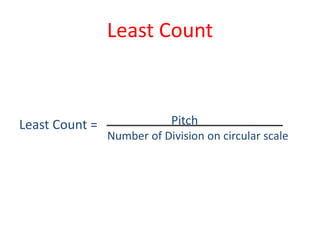

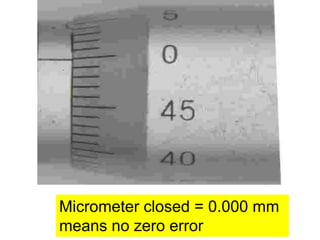

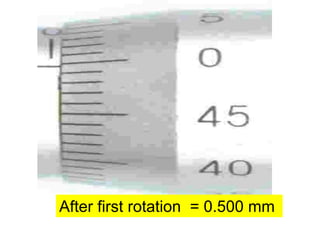

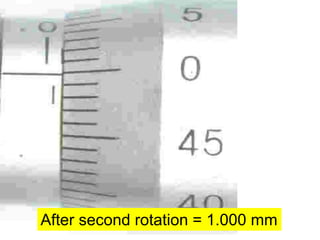

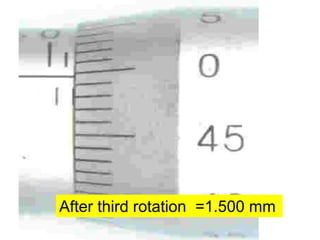

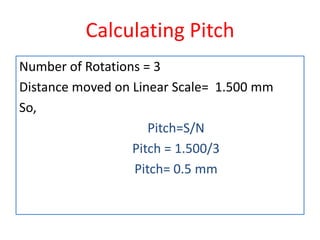

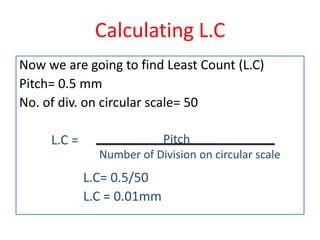

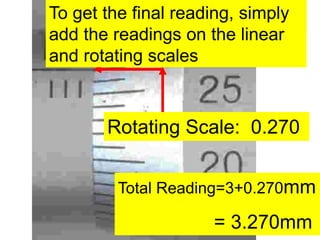

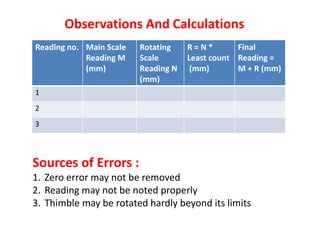

The document describes the micrometer, or screw gauge, a precision measuring instrument invented in 1638. It has two scales: a linear main scale graduated in 0.5 mm marks, and a rotating circular (thimble) scale with 50 divisions, each worth 0.01 mm. To take a measurement, turn the spindle until it just touches the object being measured. The reading has two parts. The first is the main-scale value. The second is the number of the circular-scale division that lines up with the reference line, multiplied by 0.01 mm. This 0.01 mm is the least count, or precision. It is the pitch (the distance the spindle advances in one full rotation, here 0.5 mm) divided by the number of circular-scale divisions: 0.5 mm / 50 = 0.01 mm. Potential sources of error include failing to correct for zero error and misreading the scales.
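The arithmetic described above can be sketched in a few lines of Python. This is an illustrative sketch rather than a standard procedure. The function names are hypothetical. The defaults assume the 0.5 mm pitch and 50-division scale described above. Zero error is taken to be the signed reading the gauge shows when the spindle and anvil touch with nothing between them, and it is subtracted from the observed reading.

```python
def least_count(pitch_mm: float, divisions: int) -> float:
    """Least count = pitch (spindle advance per rotation) / circular-scale divisions."""
    if divisions <= 0:
        raise ValueError("divisions must be positive")
    return pitch_mm / divisions


def micrometer_reading(main_scale_mm: float, circular_division: int,
                       pitch_mm: float = 0.5, divisions: int = 50,
                       zero_error_mm: float = 0.0) -> float:
    """Corrected reading in mm (hypothetical helper).

    main_scale_mm     -- last main-scale mark visible beside the thimble
    circular_division -- circular-scale division aligned with the reference line
    zero_error_mm     -- signed reading with the jaws closed (assumed convention)
    """
    if not 0 <= circular_division < divisions:
        raise ValueError("circular_division must be in [0, divisions)")
    observed = main_scale_mm + circular_division * least_count(pitch_mm, divisions)
    # Remove the zero error so the result reflects only the object's size.
    return observed - zero_error_mm


if __name__ == "__main__":
    # Hypothetical readings: 5.5 mm on the main scale, division 28 aligned,
    # and a +0.02 mm zero error measured beforehand.
    print(f"least count: {least_count(0.5, 50):.2f} mm")
    print(f"reading: {micrometer_reading(5.5, 28, zero_error_mm=0.02):.2f} mm")
```

With these made-up values, the observed reading is 5.5 + 28 × 0.01 = 5.78 mm. Removing the +0.02 mm zero error gives 5.76 mm. The results are formatted to two decimal places because that matches the 0.01 mm least count. Showing more digits would claim more precision than the instrument provides.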