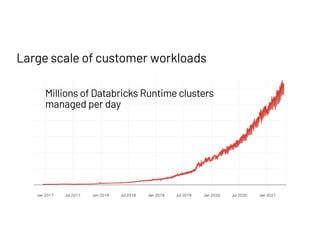

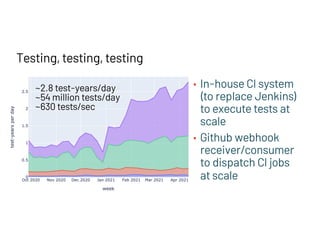

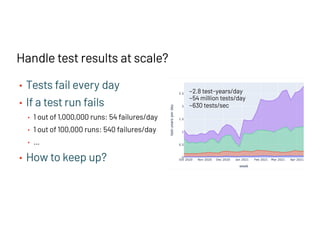

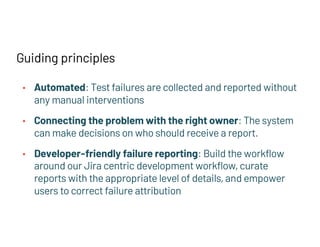

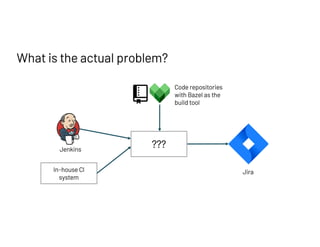

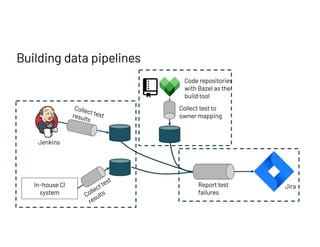

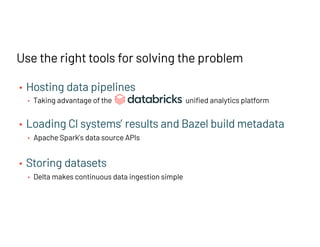

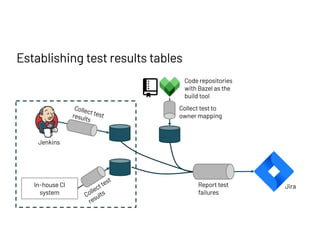

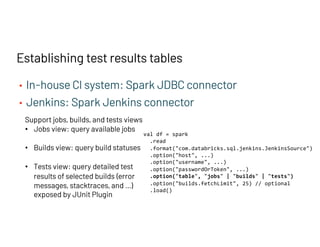

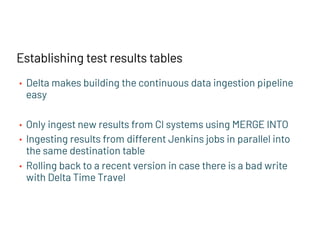

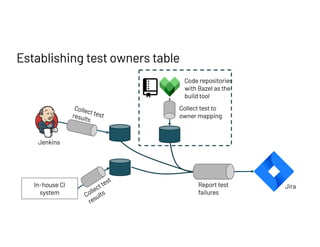

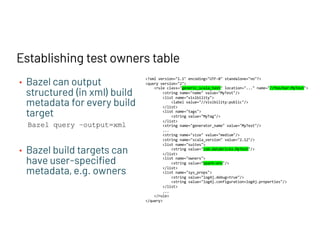

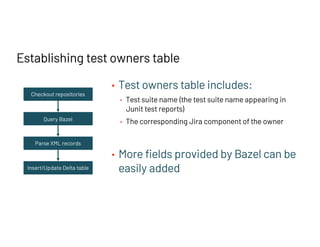

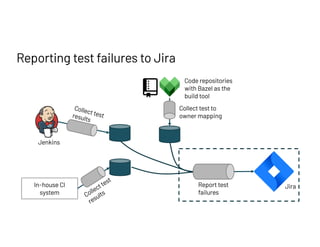

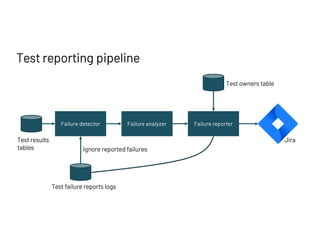

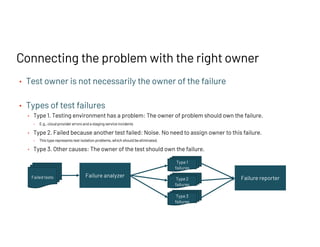

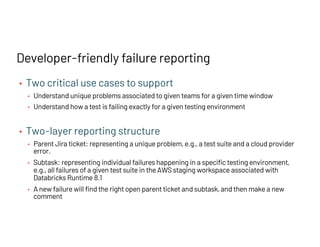

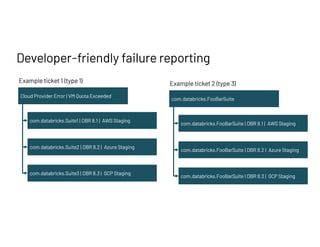

This presentation describes how Databricks manages millions of tests. It covers the CI system that automates test execution and reporting at scale, stresses the importance of triaging test failures to the correct owners in a developer-friendly way, and outlines the technologies and methodologies used to improve testing and reporting. It closes with future goals, such as building holistic views of CI/CD activity and using CI/CD insights to improve engineering practices.