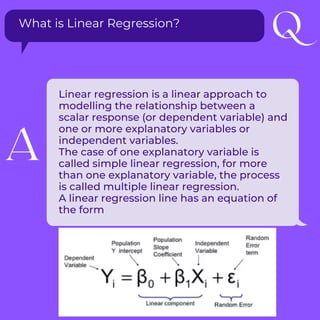

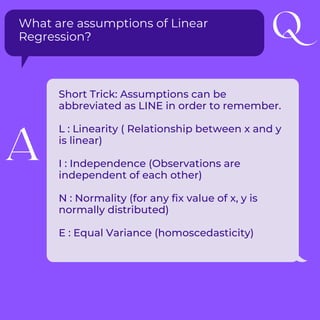

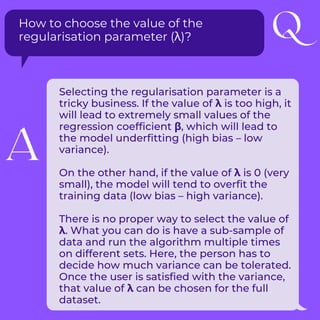

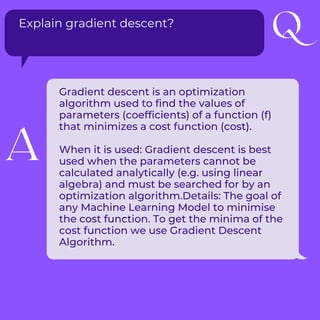

This document covers core topics in machine learning: linear regression, its assumptions, and regularization. It explains simple and multiple linear regression, the main types of regularization (L1, L2, and elastic net), and why the regularization parameter must be chosen carefully: too large a penalty leads to underfitting, while too small a penalty leaves the model prone to overfitting. It also discusses gradient descent as an optimization algorithm for minimizing the cost function of a machine learning model.
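To make the link between these topics concrete, the following is a minimal sketch (not taken from the document itself) of gradient descent minimizing a mean-squared-error cost with an L2 penalty for a multiple linear regression. The function name `ridge_gradient_descent`, the parameters `lam`, `lr`, and `n_iters`, the toy data, and the choice to leave the intercept unpenalized are all assumptions made for illustration.

```python
def ridge_gradient_descent(X, y, lam=0.0, lr=0.1, n_iters=2000):
    """Hypothetical helper: minimize
    (1 / (2n)) * sum((w . x_i + b - y_i)^2) + (lam / 2) * sum(w_j^2)
    with plain batch gradient descent. The intercept b is not penalized."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(n_iters):
        # Prediction error for every training example.
        residuals = [
            sum(wj * xj for wj, xj in zip(w, row)) + b - yi
            for row, yi in zip(X, y)
        ]
        # Gradient of the cost with respect to each weight, plus the L2 term.
        grad_w = [
            sum(r * row[j] for r, row in zip(residuals, X)) / n + lam * w[j]
            for j in range(d)
        ]
        grad_b = sum(residuals) / n
        # Step against the gradient.
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy, noise-free data generated from y = 2*x1 - 1*x2 + 0.5 (illustrative only).
X = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [2.0, 1.0], [0.5, 2.0], [1.5, 0.5]]
y = [2.0 * x1 - 1.0 * x2 + 0.5 for x1, x2 in X]

w_fit, b_fit = ridge_gradient_descent(X, y, lam=0.0)     # no regularization
w_shrunk, b_shrunk = ridge_gradient_descent(X, y, lam=5.0)  # strong L2 penalty

print("lam=0:", w_fit, b_fit)
print("lam=5:", w_shrunk, b_shrunk)
```

With `lam=0.0` the fit should recover the generating coefficients closely, while a large `lam` shrinks the weights toward zero, which is the underfitting side of the trade-off described above. An L1 or elastic net penalty would need a different update (for example a subgradient or proximal step), which this sketch does not cover.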