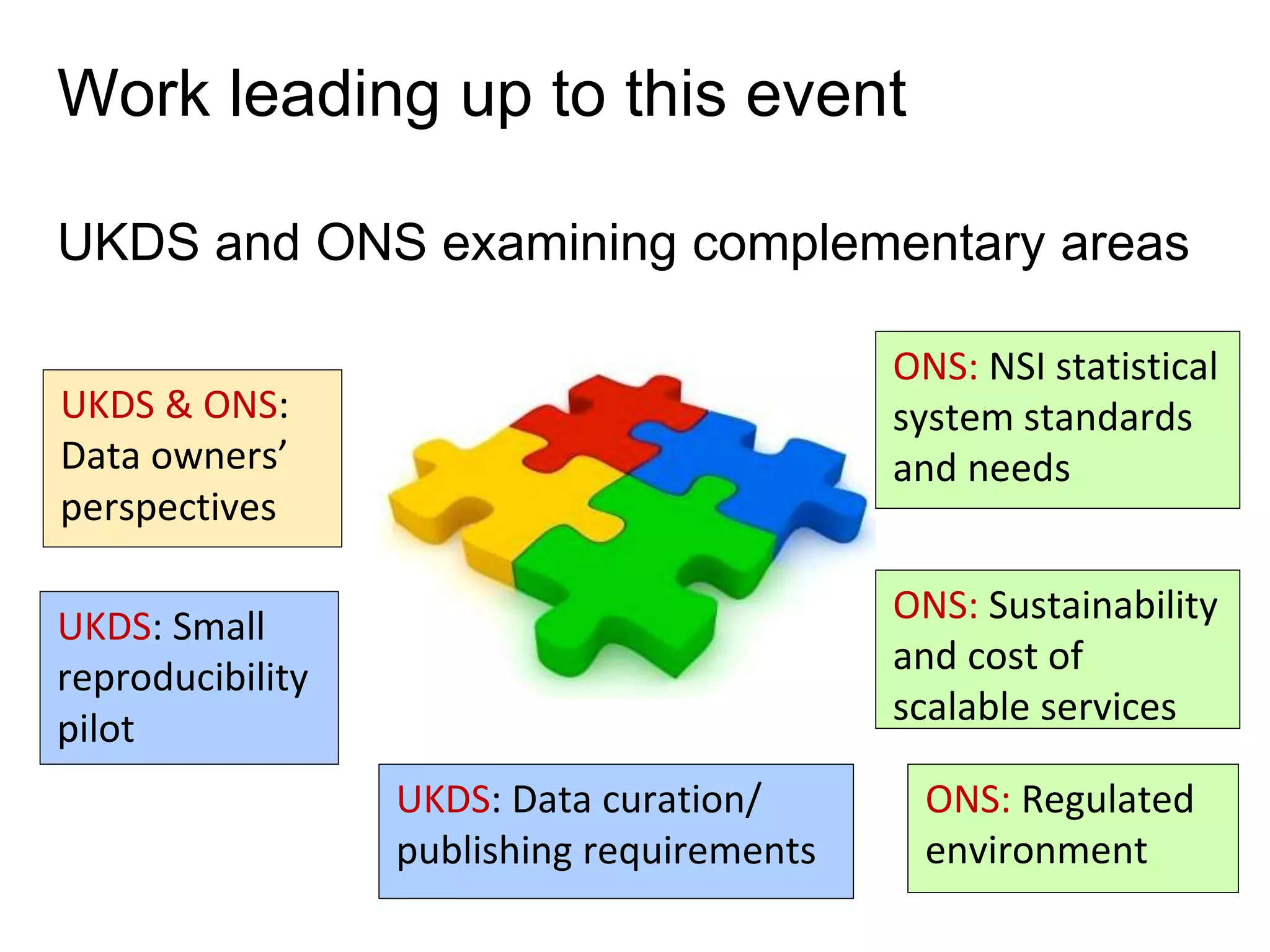

The document discusses the actors in the reproducibility landscape, including data owners, regulatory bodies, journals, and researchers, and emphasizes their roles and responsibilities. It explores the benefits and challenges of reproducibility in research and defines best-practice recommendations for improving data transparency and integrity. It also addresses the need for collaboration among stakeholders to make research outputs more reproducible.