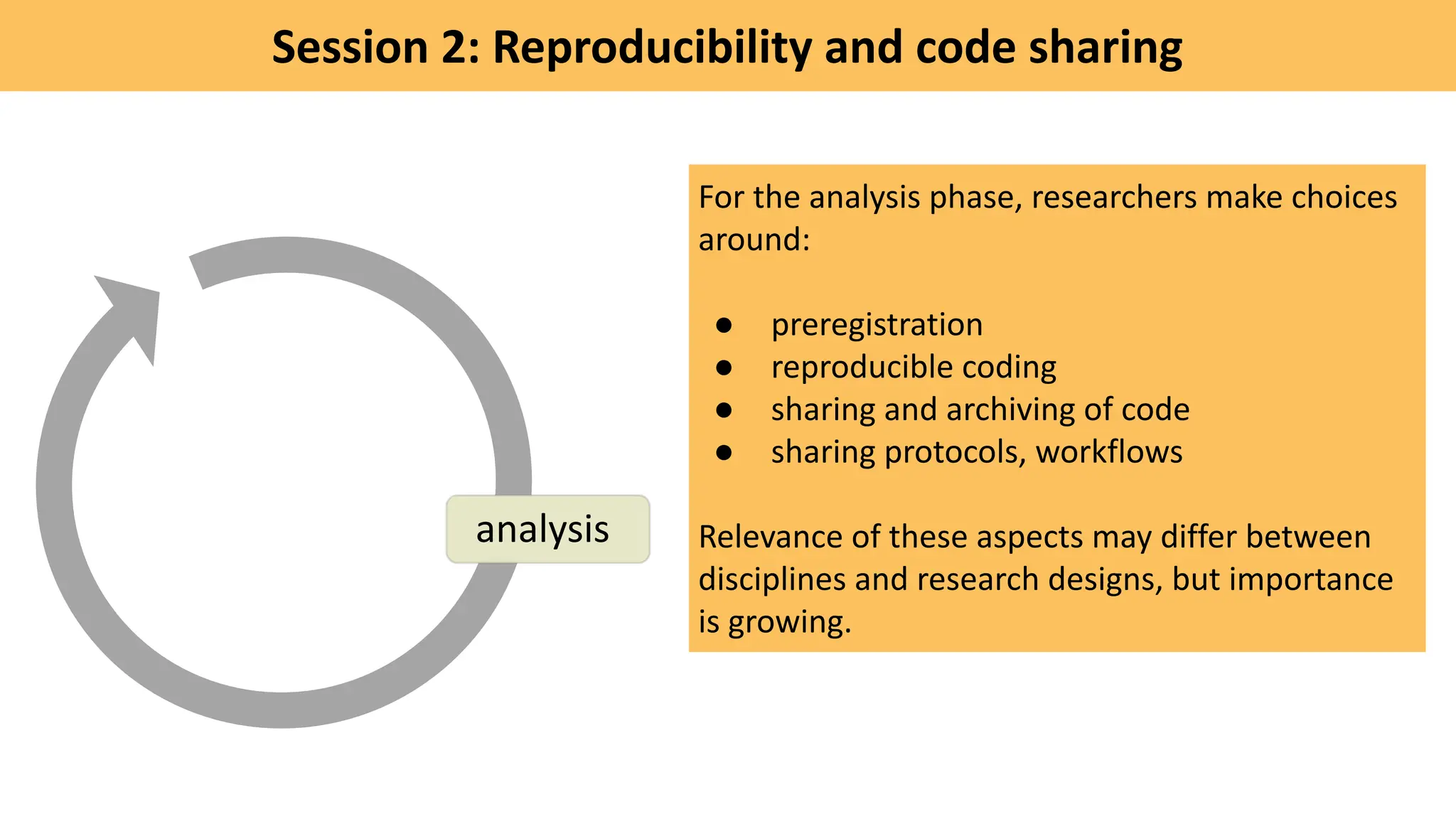

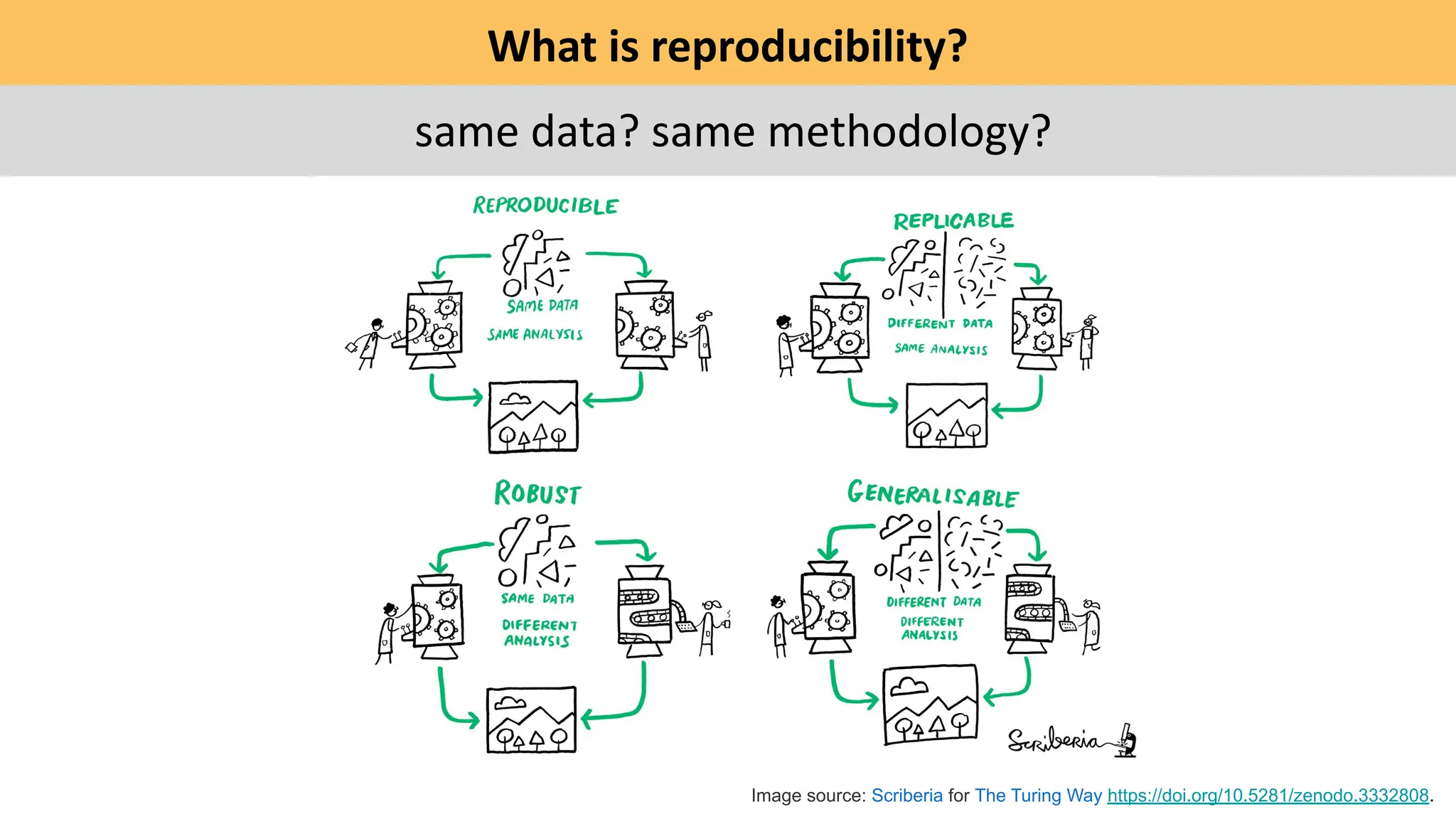

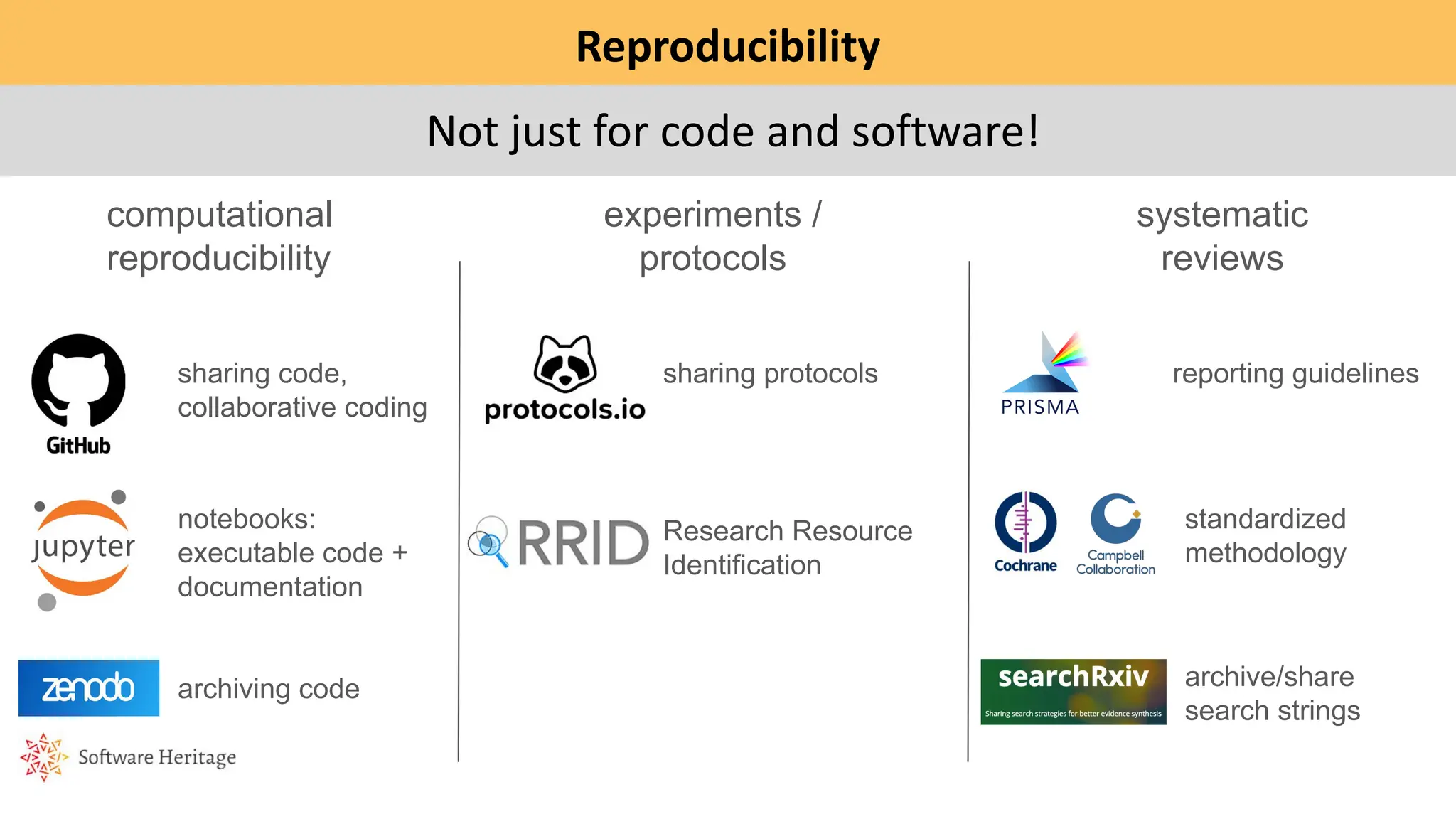

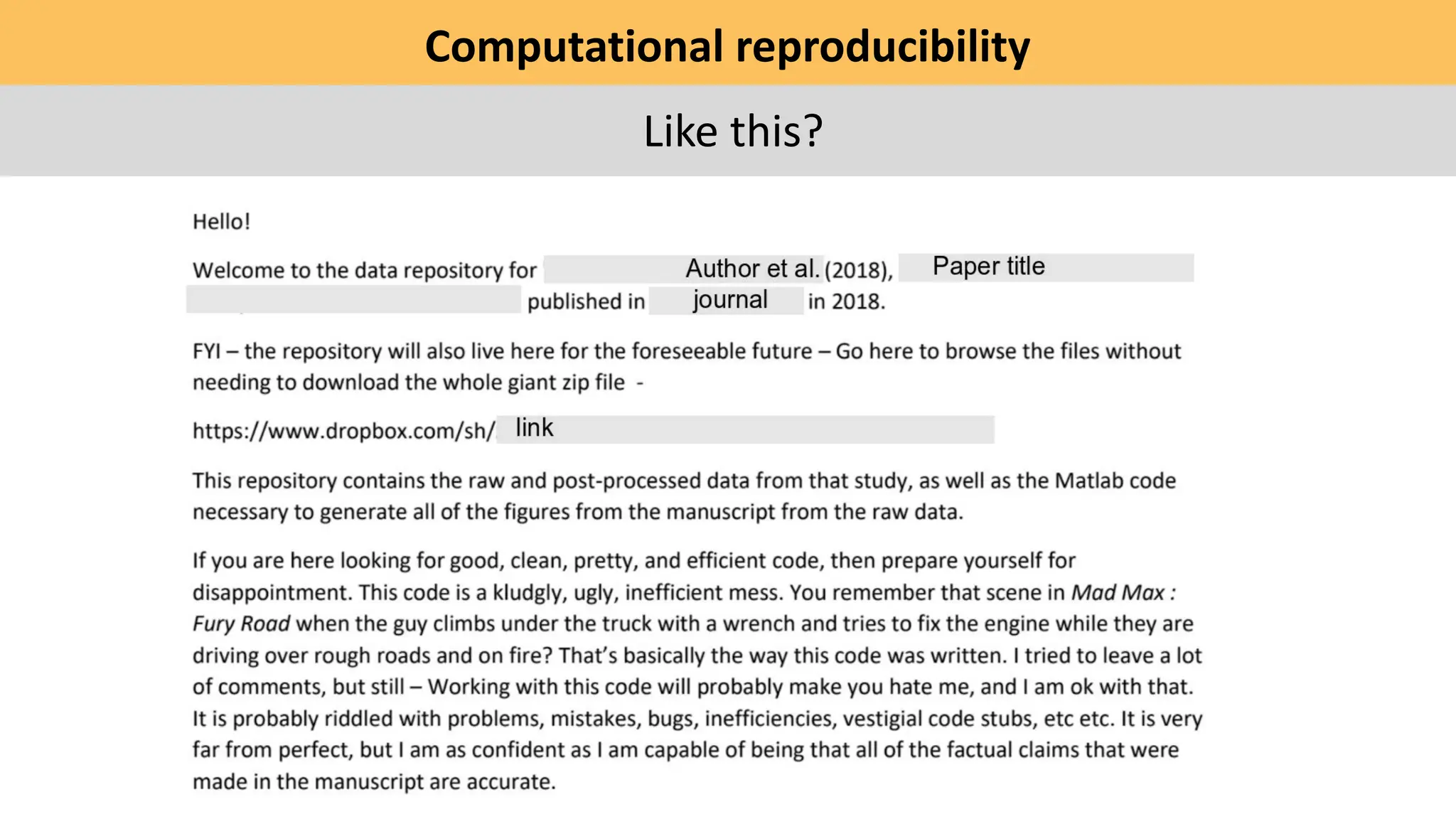

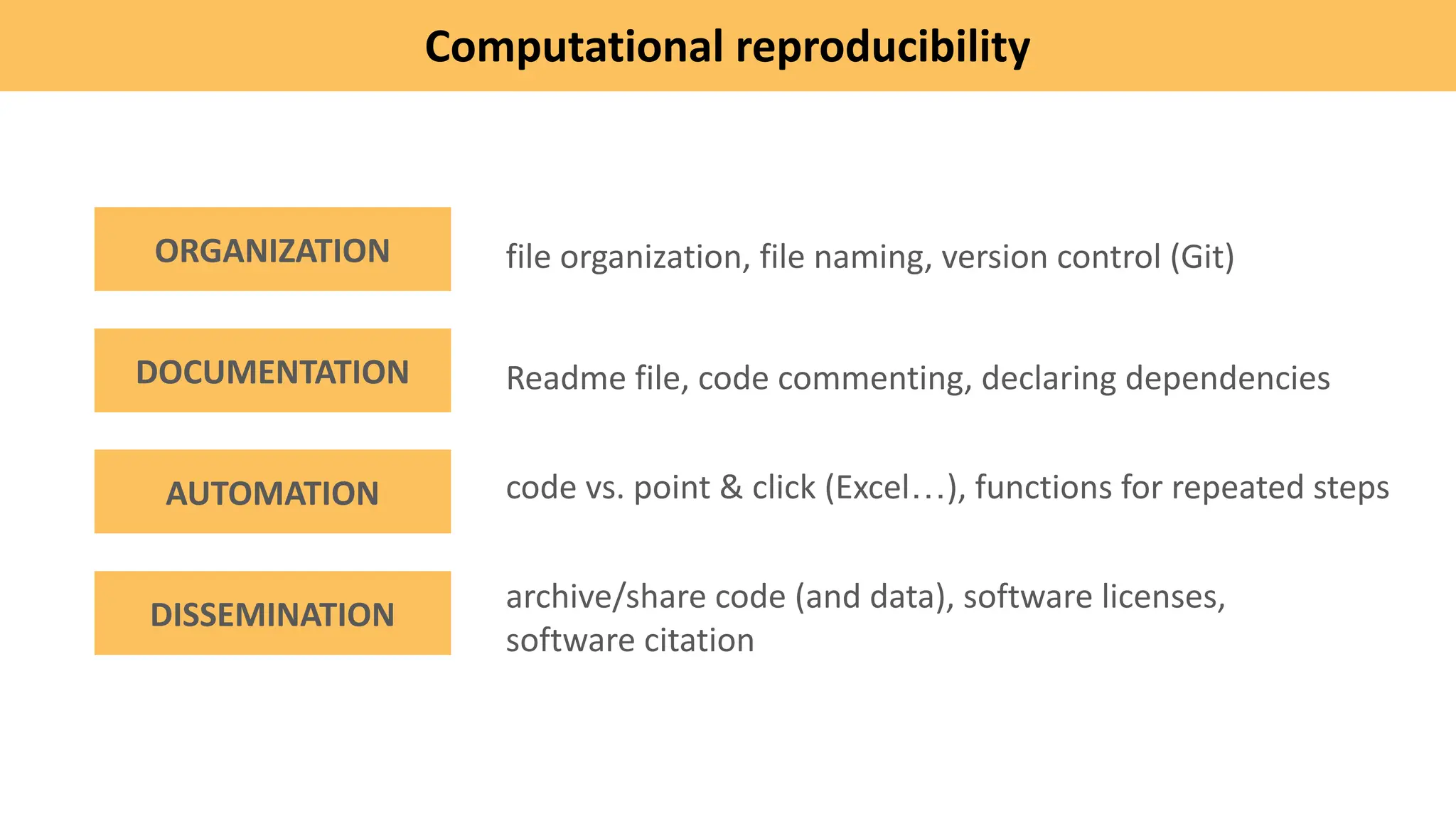

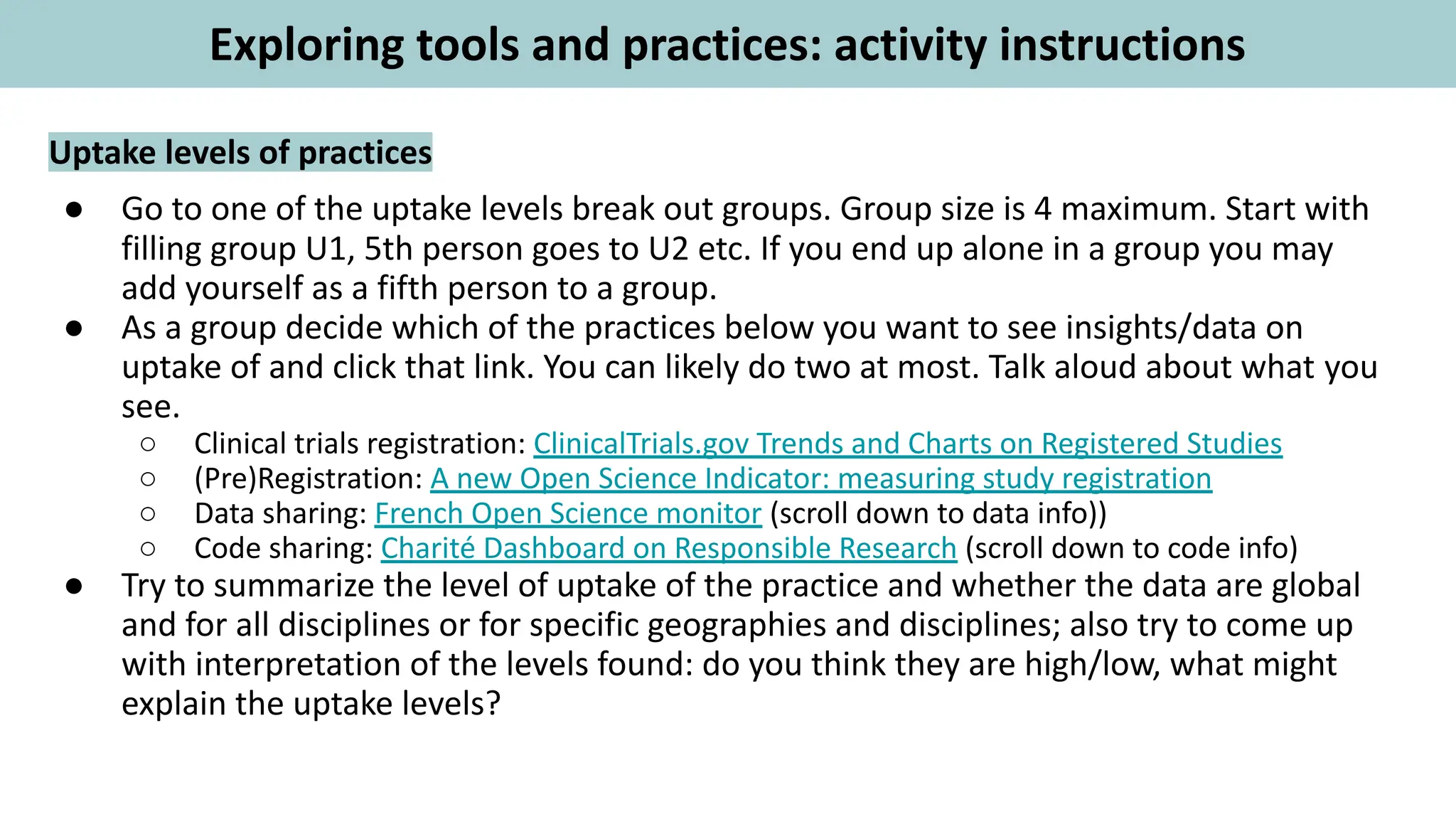

The document outlines a training session on open research, focused on reproducibility and code sharing and scheduled for October 10, 2024. It aims to teach participants about the importance of open research practices and the related tools and policies, and it culminates in hands-on activities and discussions. It also asks participants to formulate actionable plans within their organizations to enhance reproducibility and code sharing among researchers.

![Home assignment](https://image.slidesharecdn.com/openresearch-nisotrainingfall2024-session3-forsharing-241011150352-d2b57b97/75/Bosman-and-Kramer-Open-Research-A-2024-NISO-Training-Series-Session-Three-Reproducibility-and-code-sharing-27-2048.jpg)

Home assignment

Before our next session, formulate one or more potential actions at your organization to facilitate ‘reproducibility and code sharing’. These can be things that are being considered already, or fully new ideas.

Try to use the SMART rubric, identifying actions that are specific, measurable, achievable, relevant and time-bounded.

Formulate actions along the lines of: “[Actor] will [action] for [audience]”

For example:

“Our graduate school will start a ReproducibiliTEA journal club”

“The library will organize a workshop on preregistration for ECRs”

“The library, together with the computer science department, will host Software Carpentry workshops”