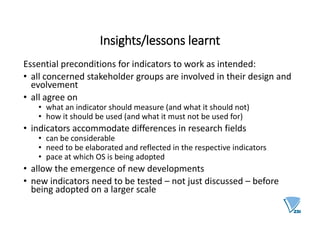

The document discusses the development of indicators for monitoring research policy in the context of open science and big data. It describes a process that combined literature reviews, workshops, and stakeholder engagement and produced more than 60 categorized indicators covering the scientific process and system-level dimensions. Key insights include the importance of involving stakeholders in indicator design and the need for flexibility to accommodate differences across research fields.