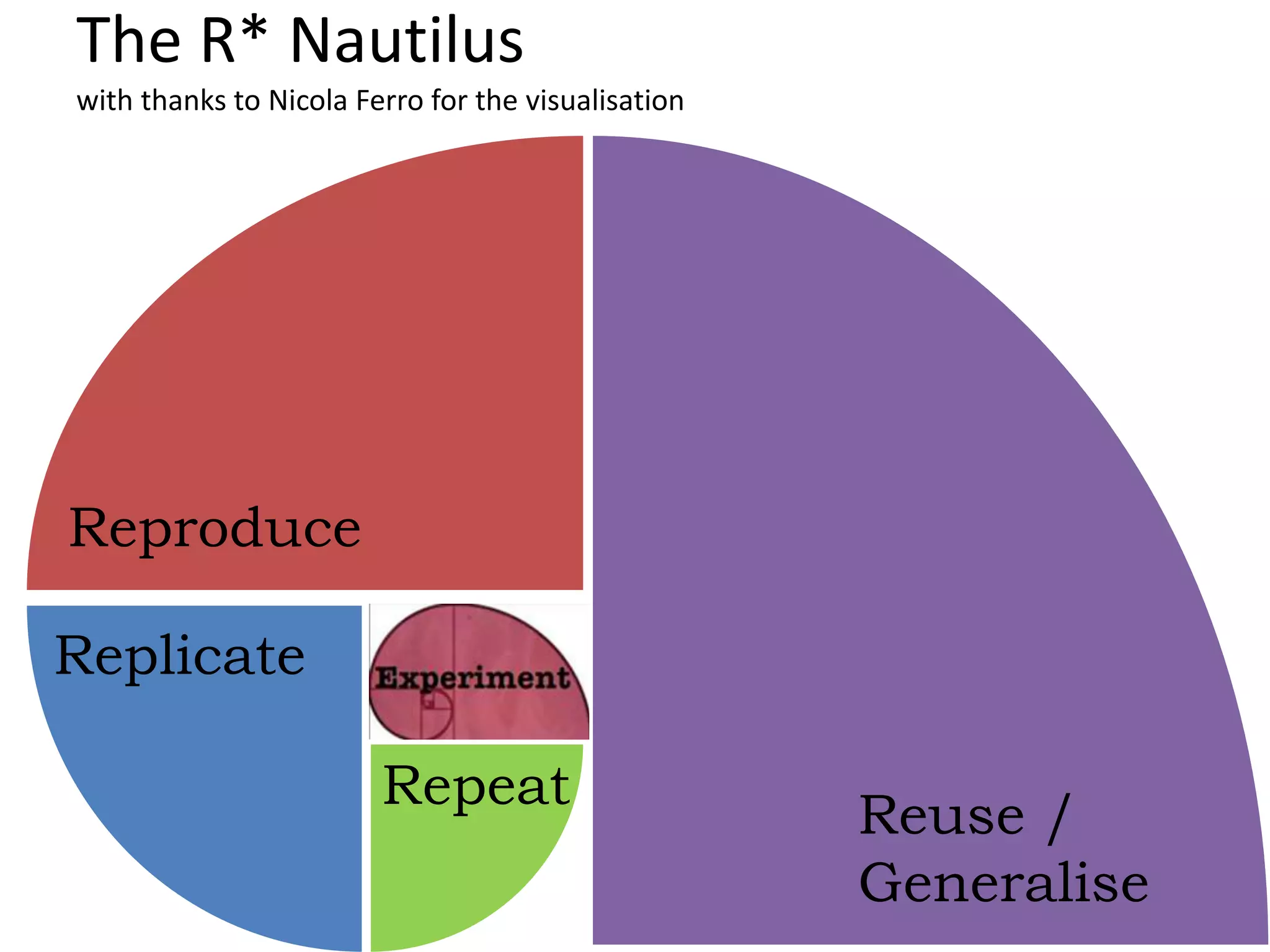

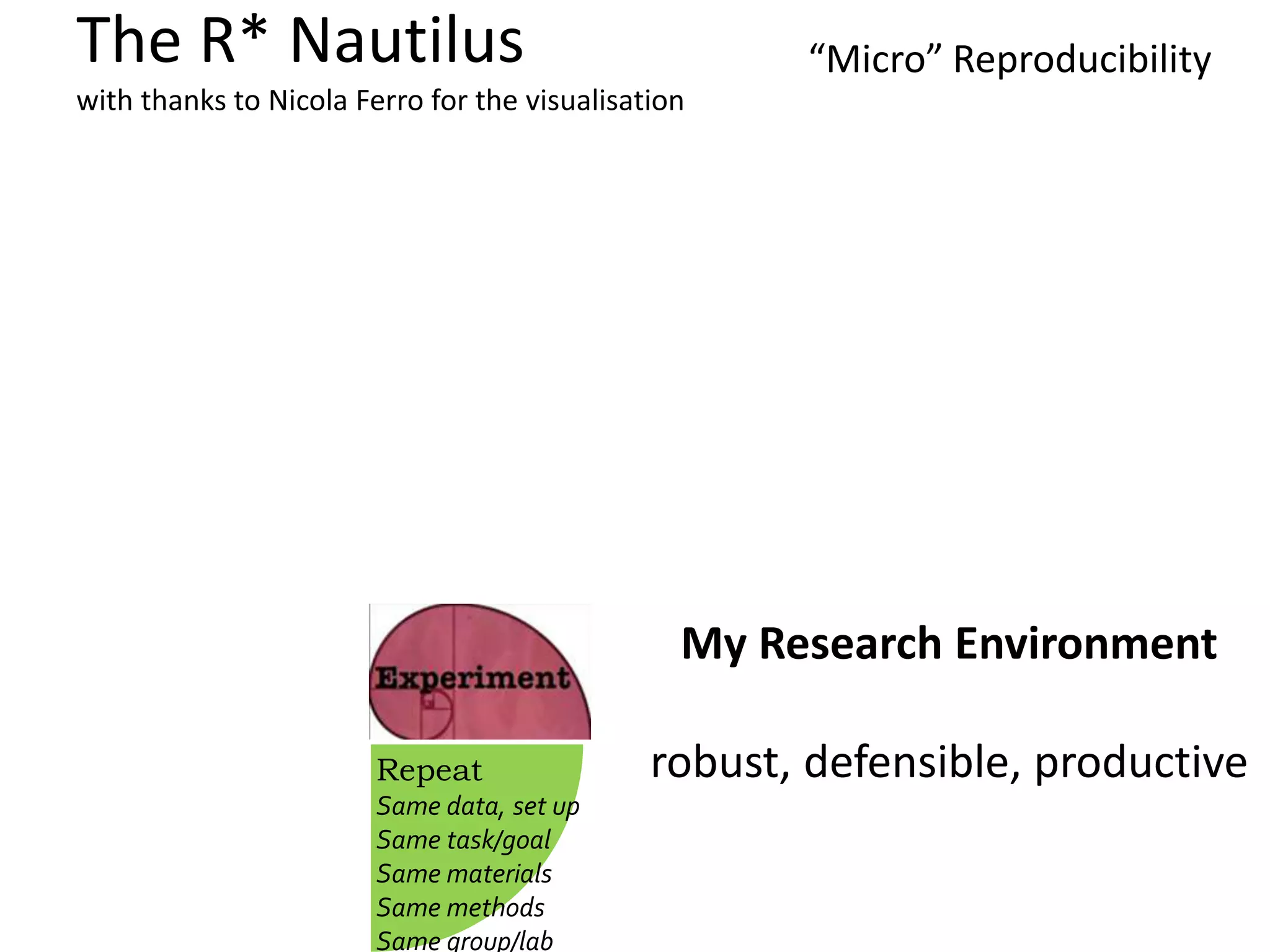

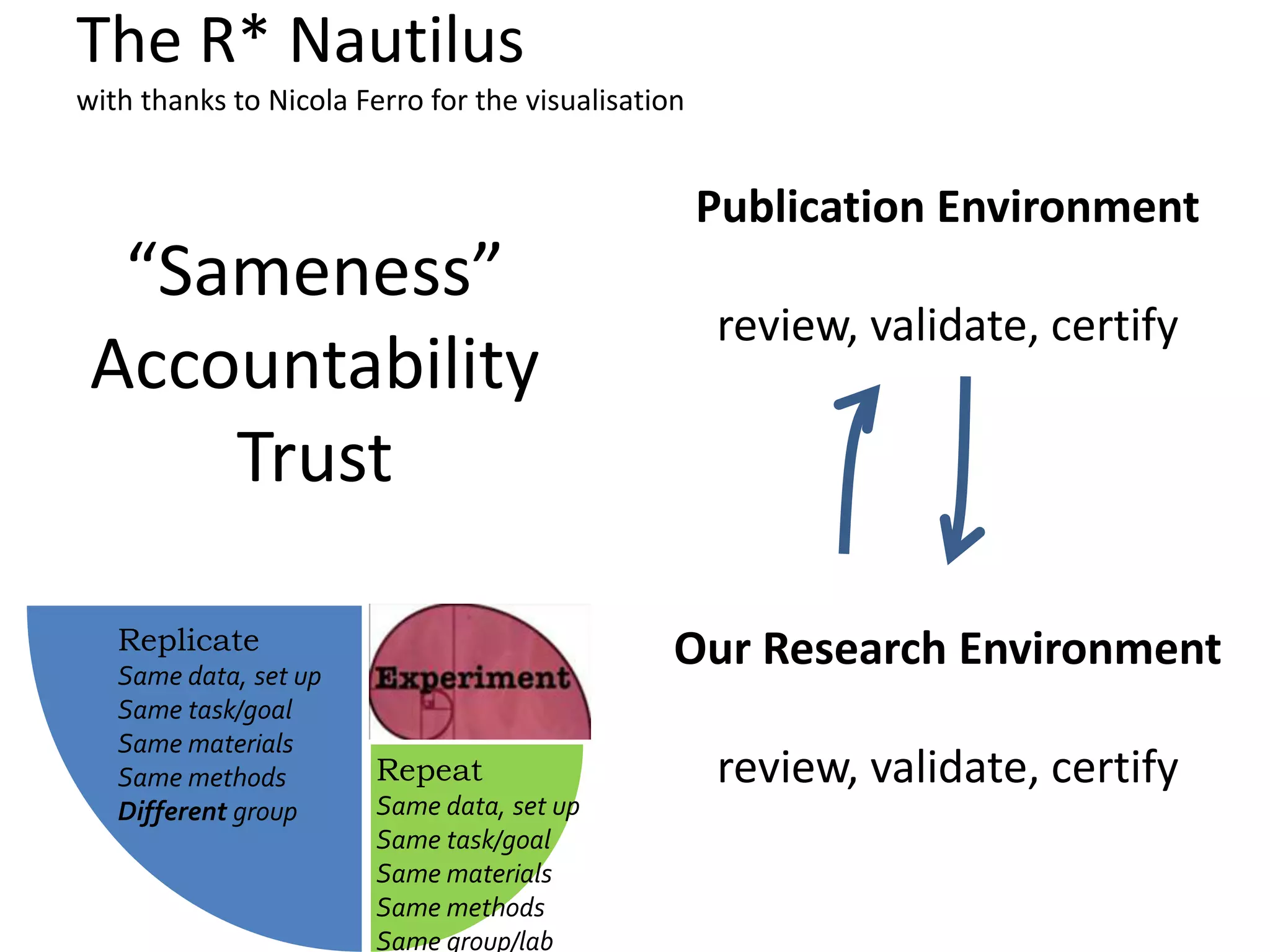

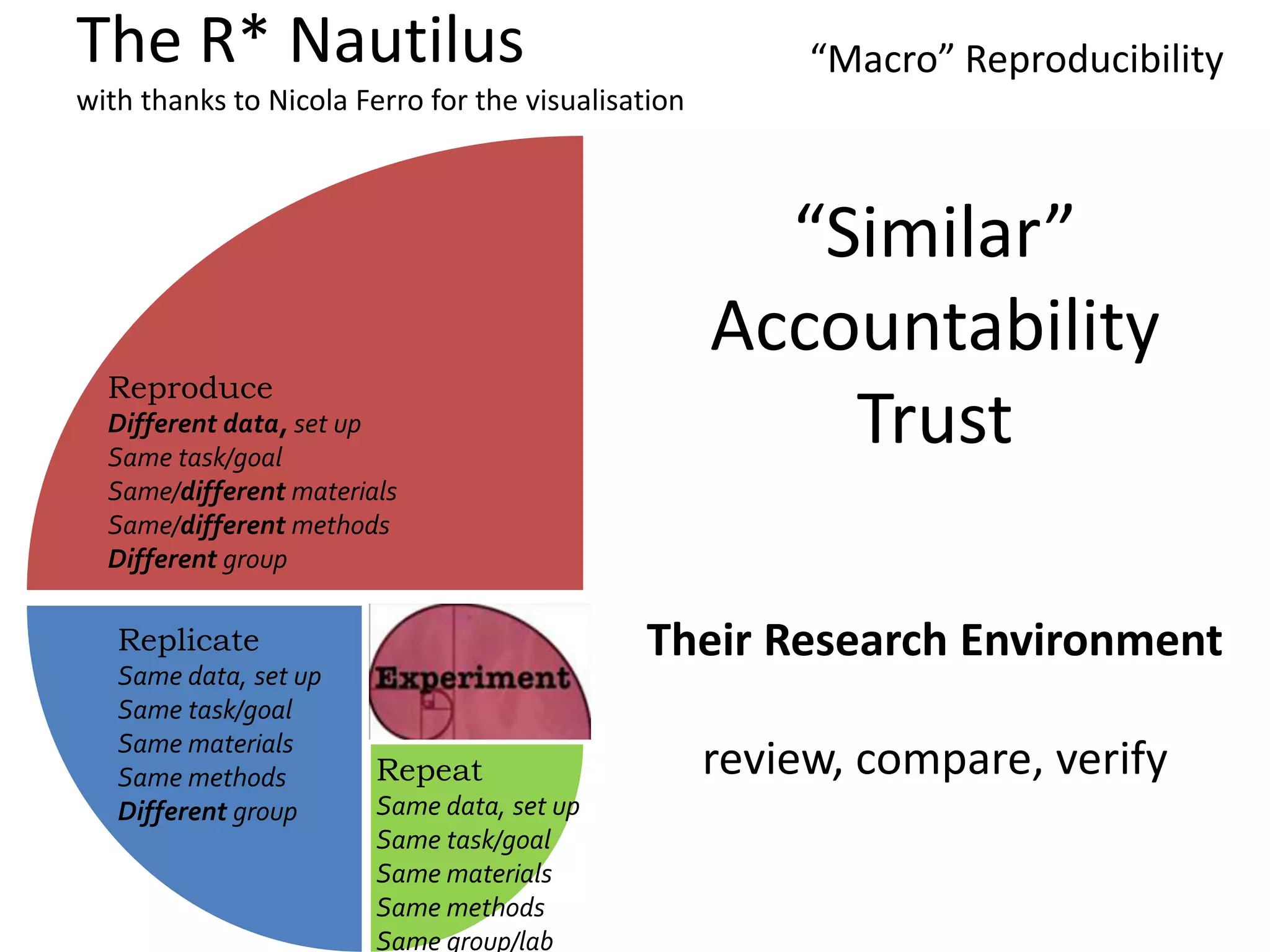

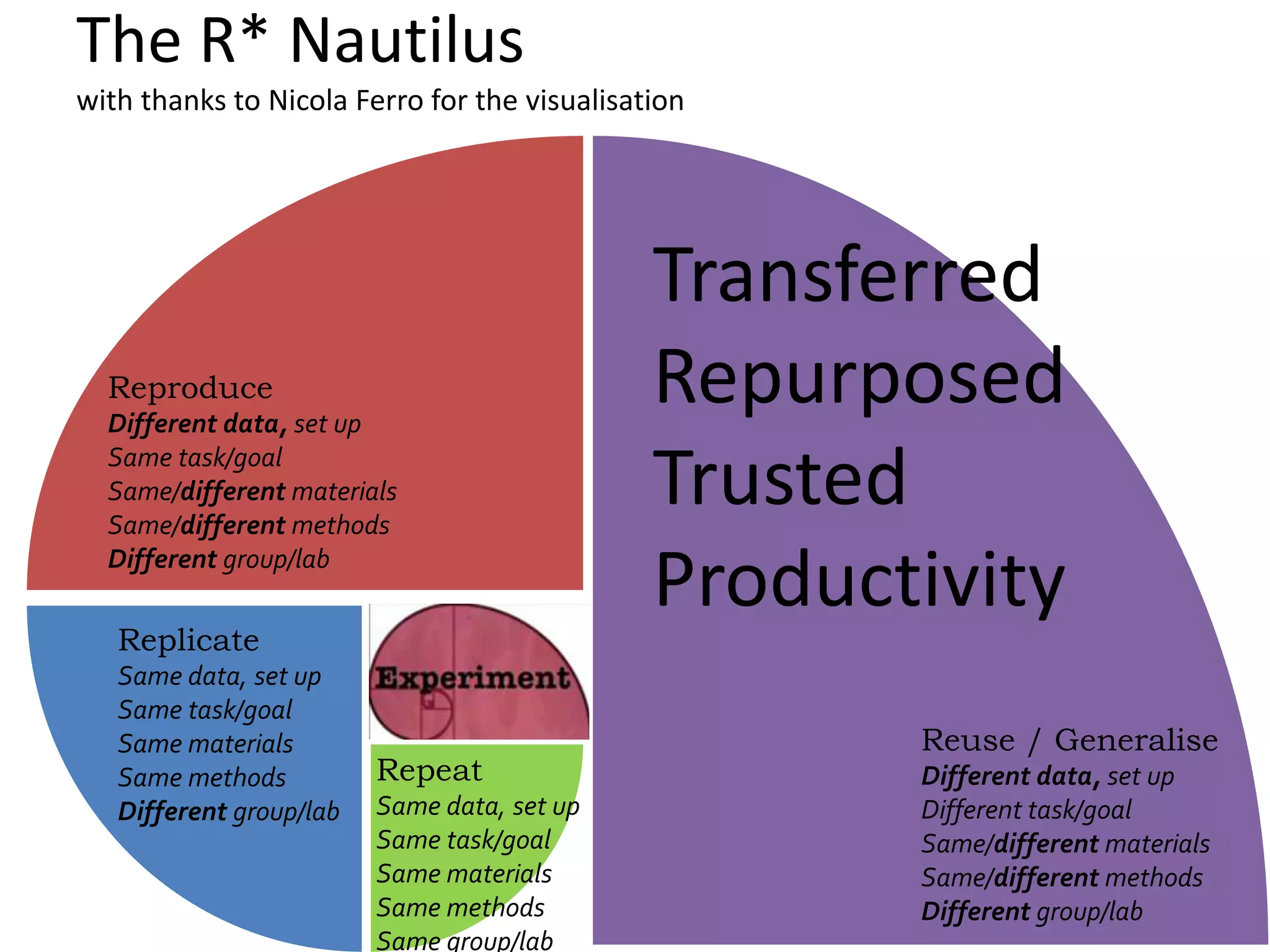

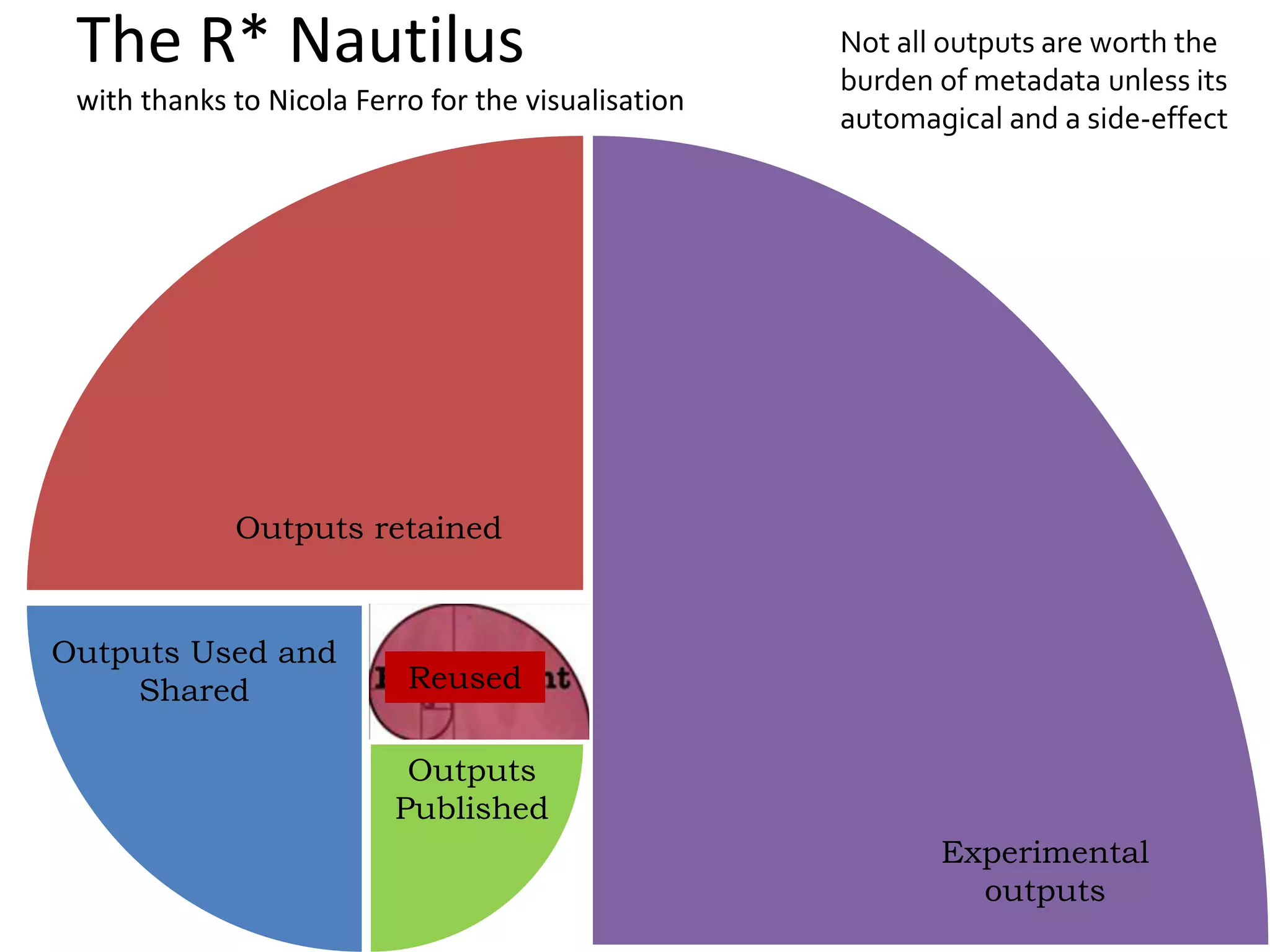

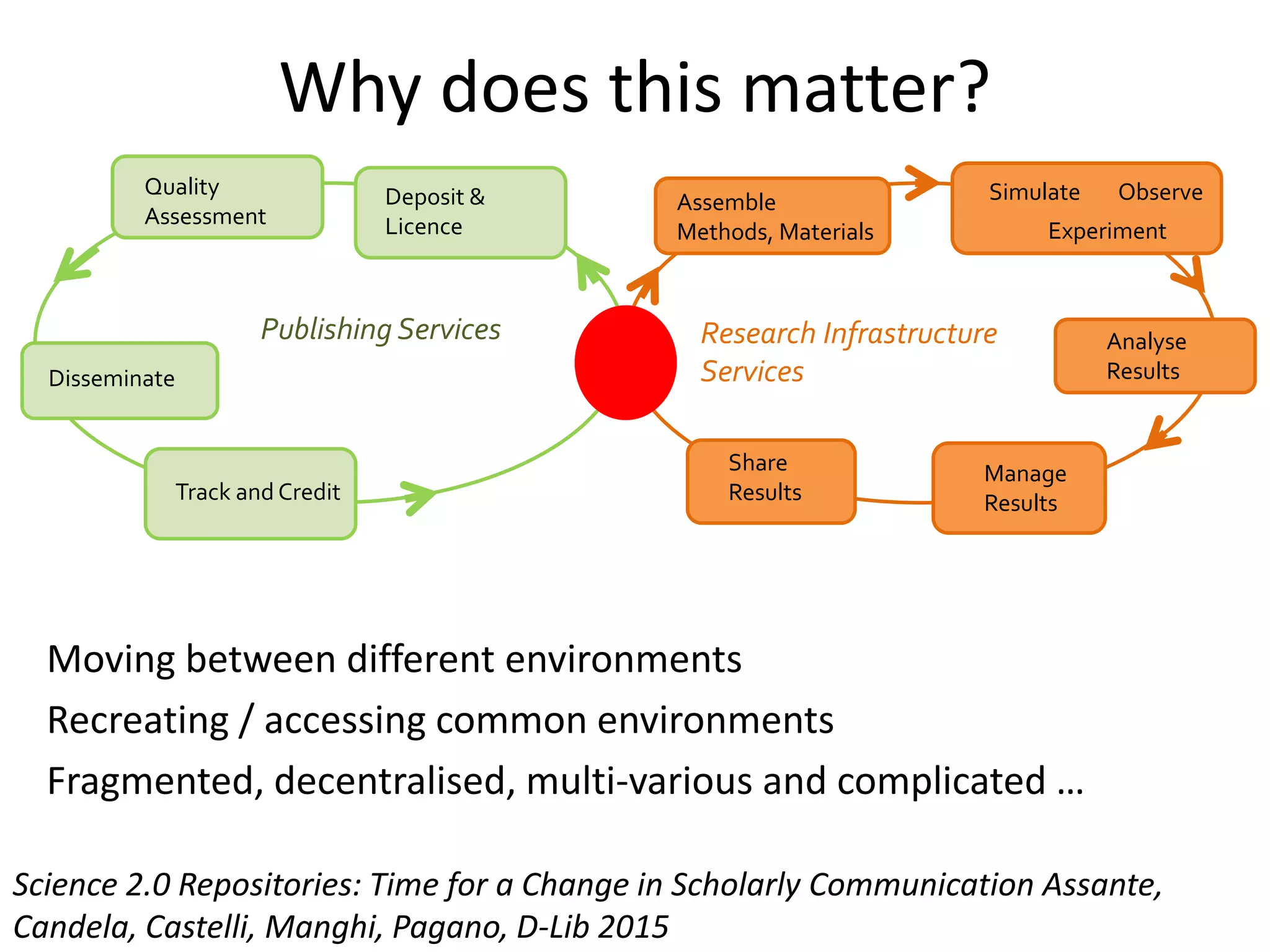

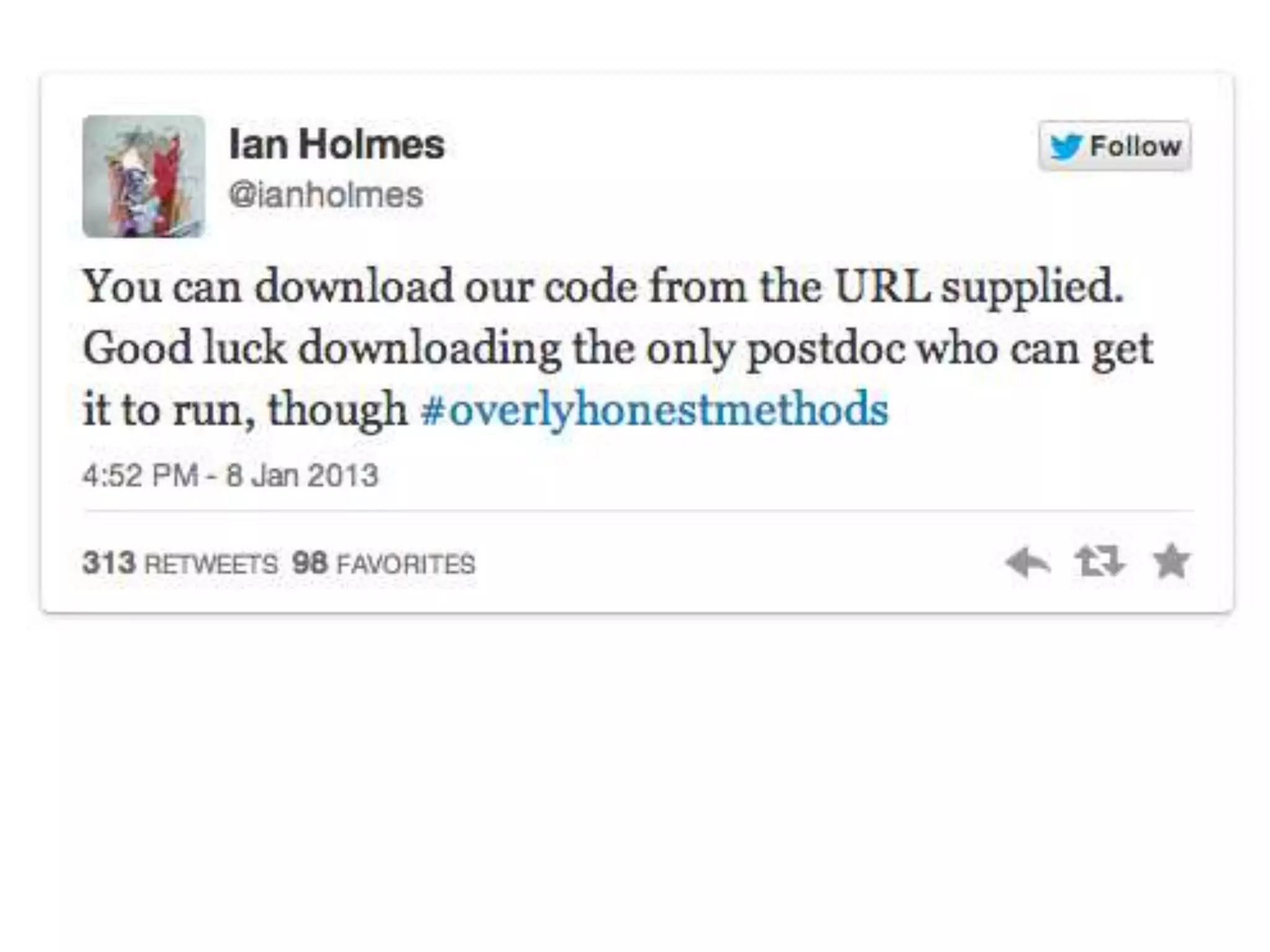

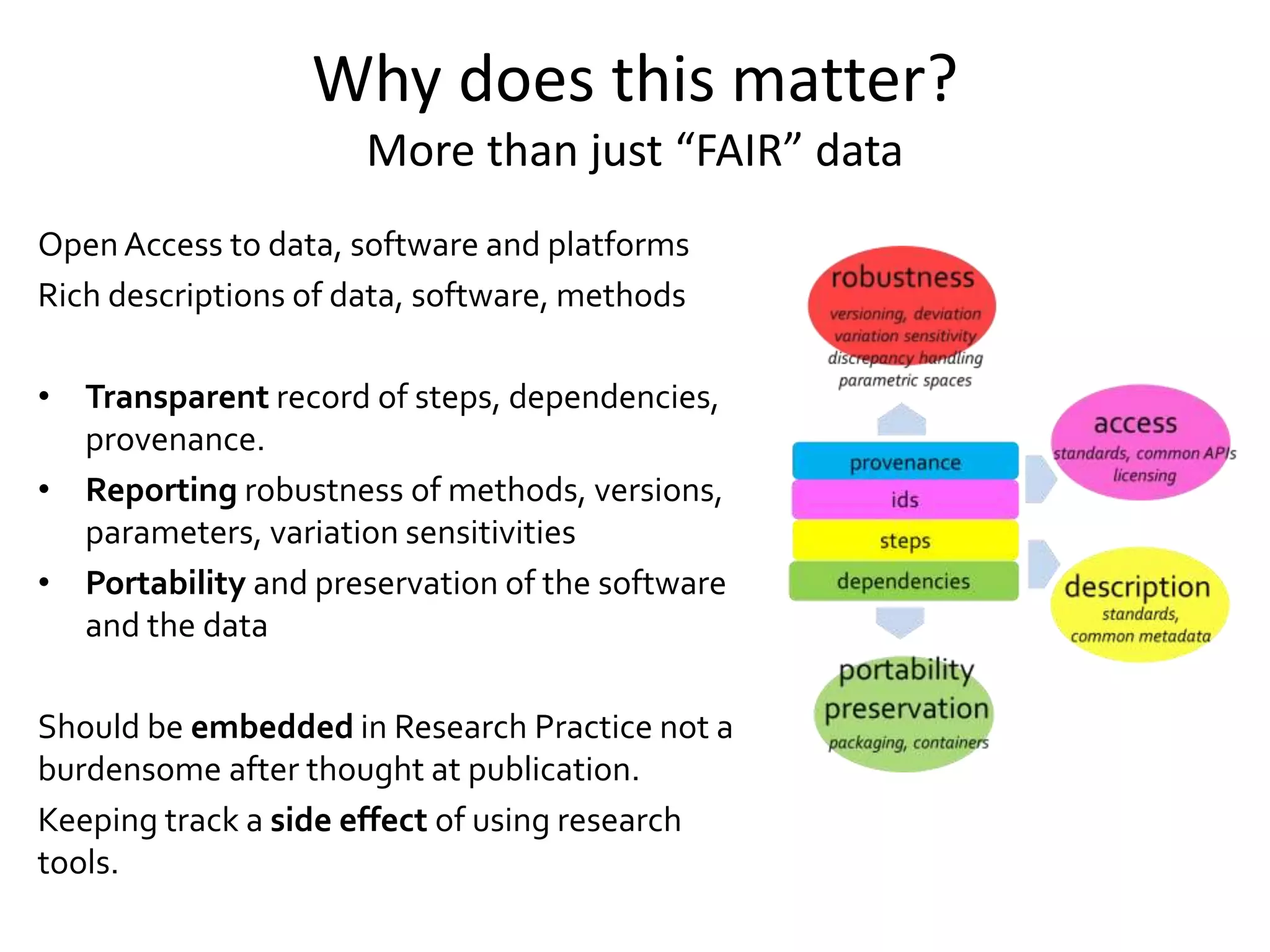

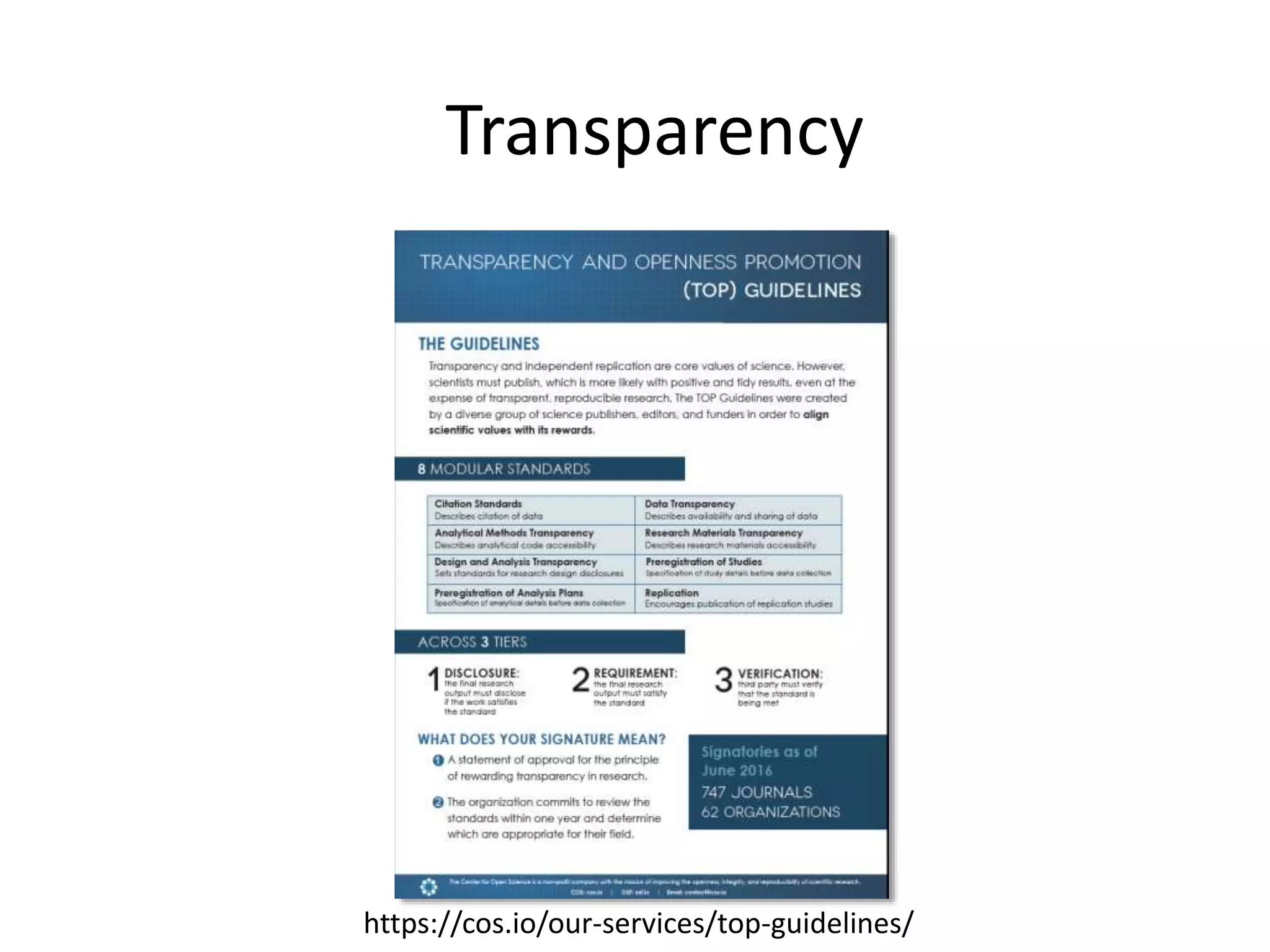

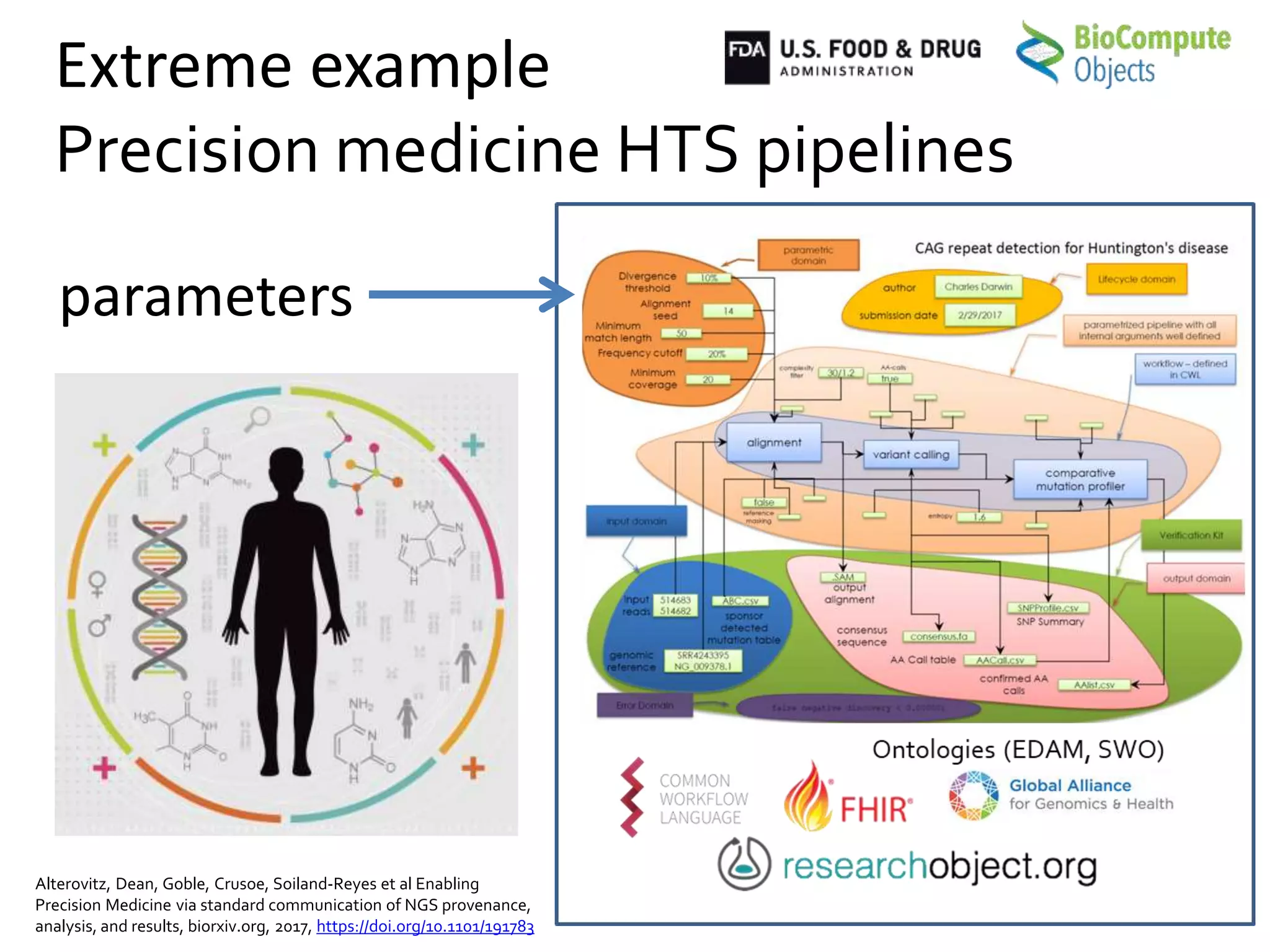

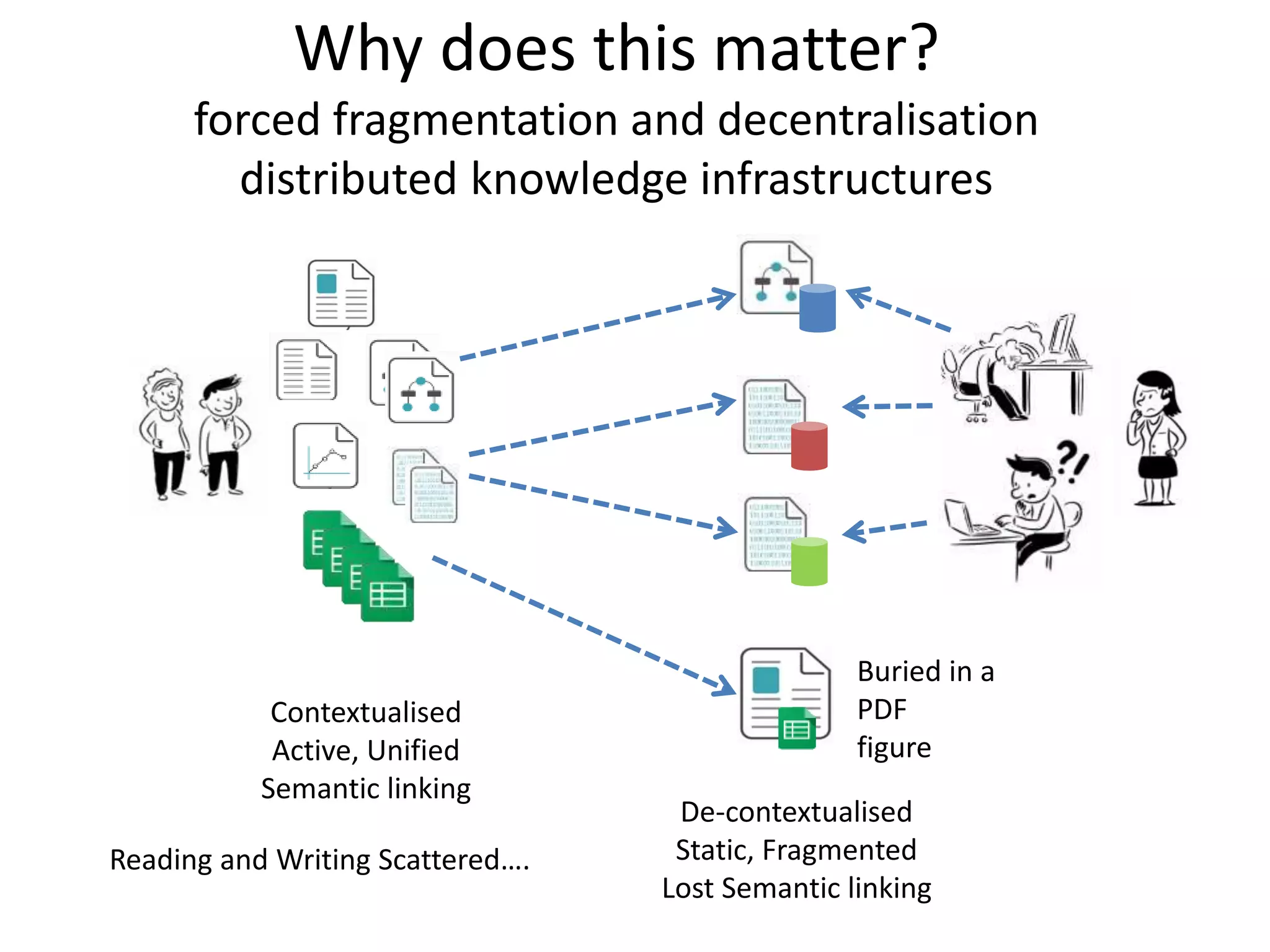

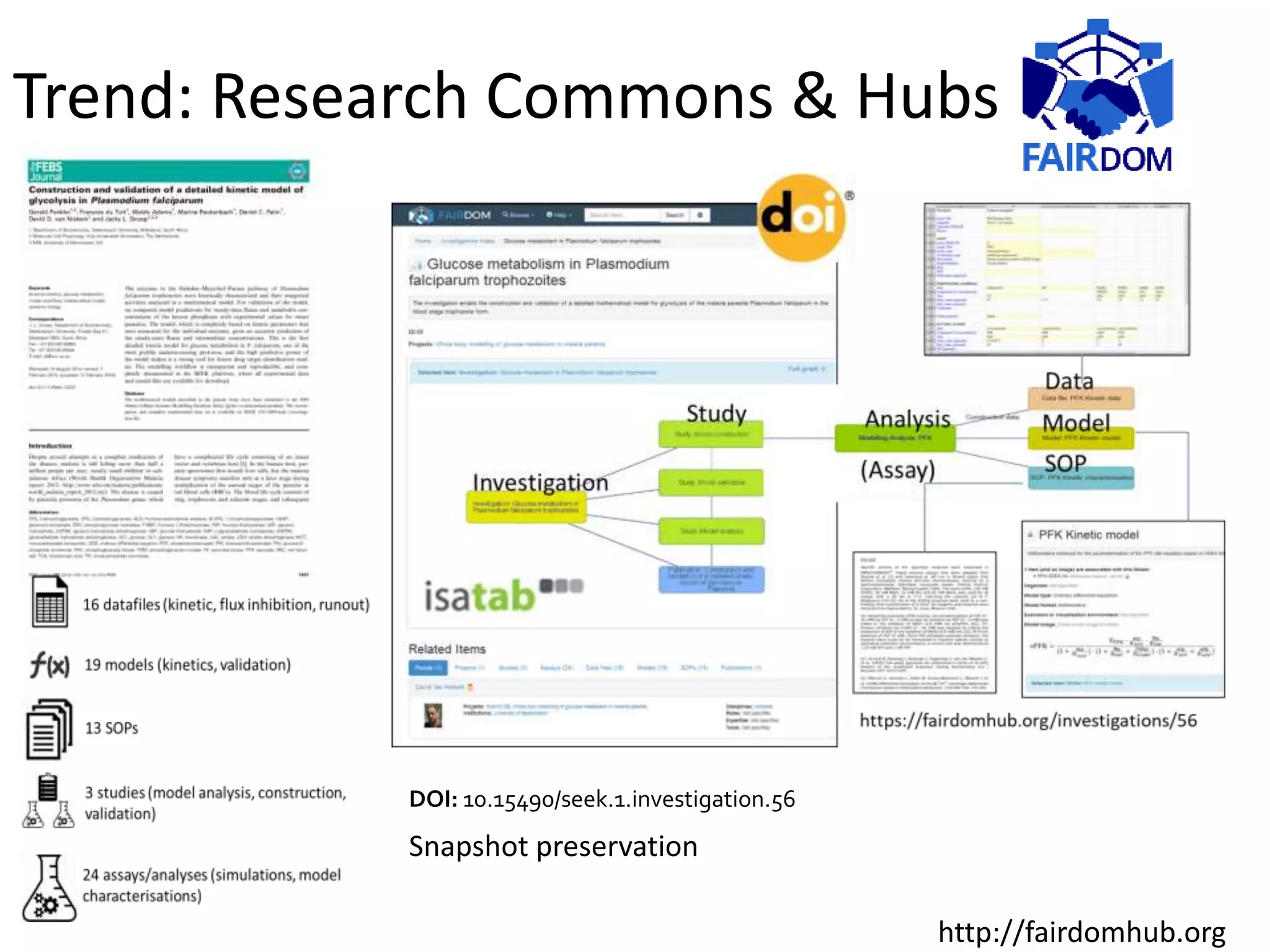

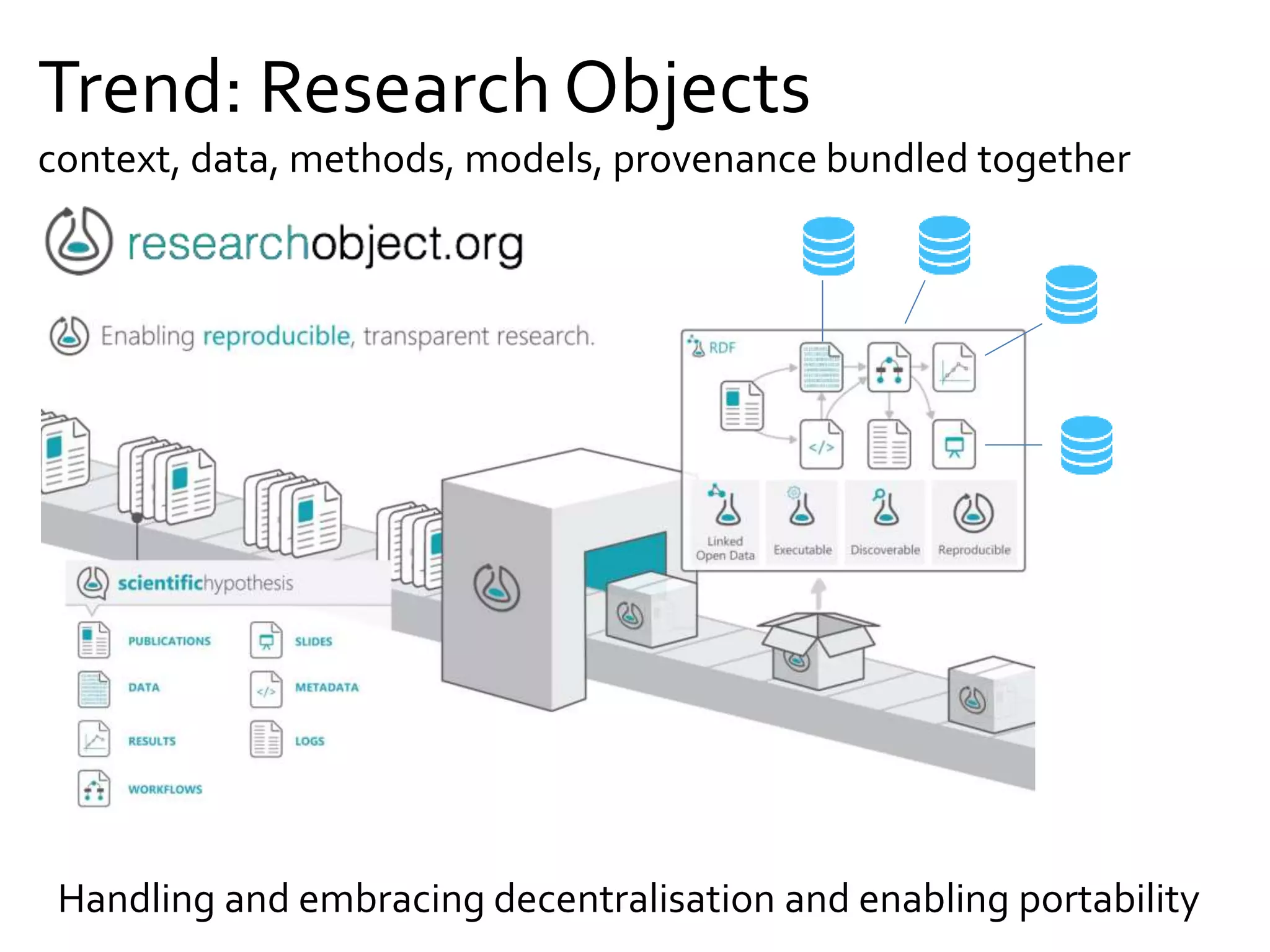

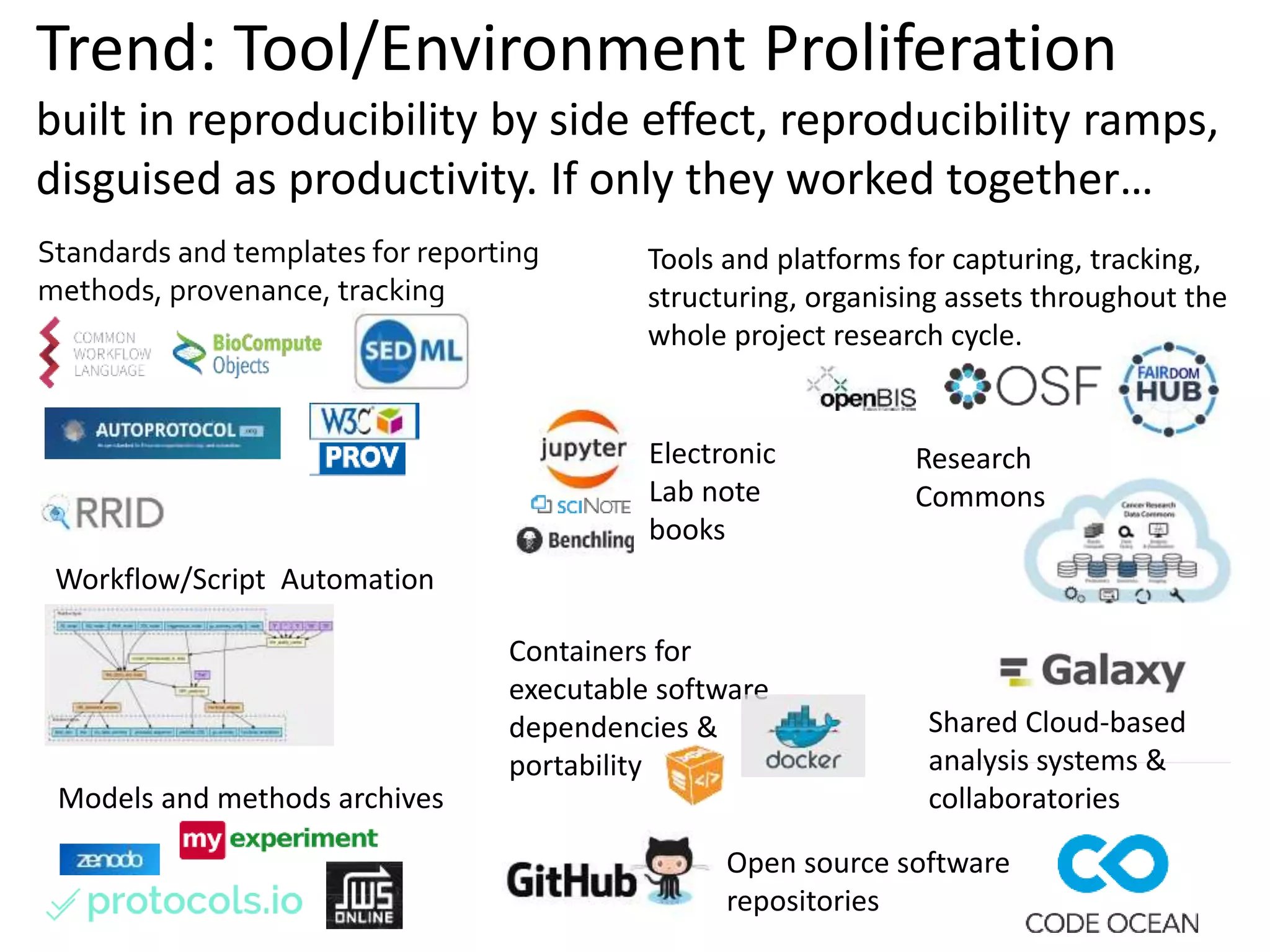

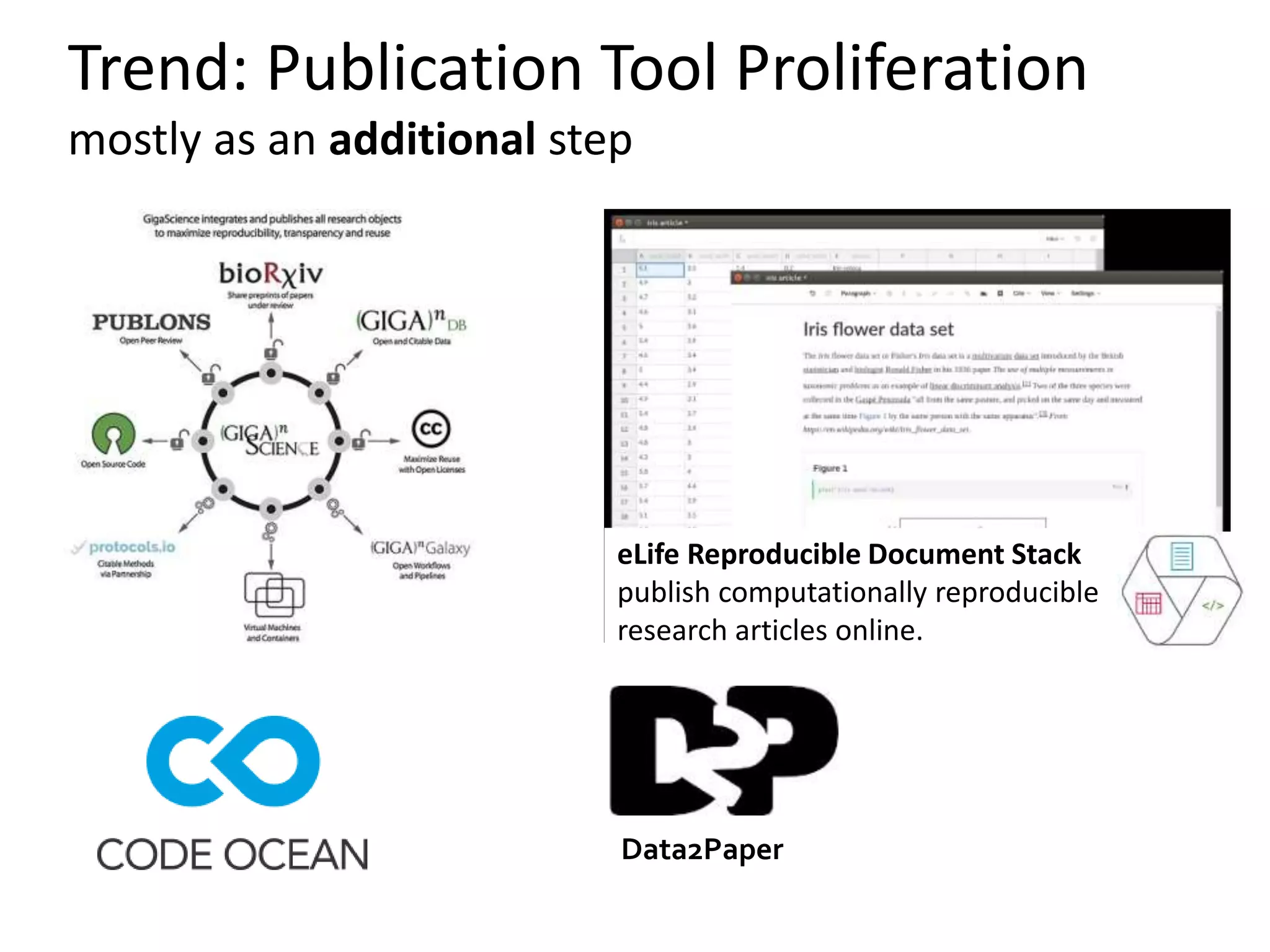

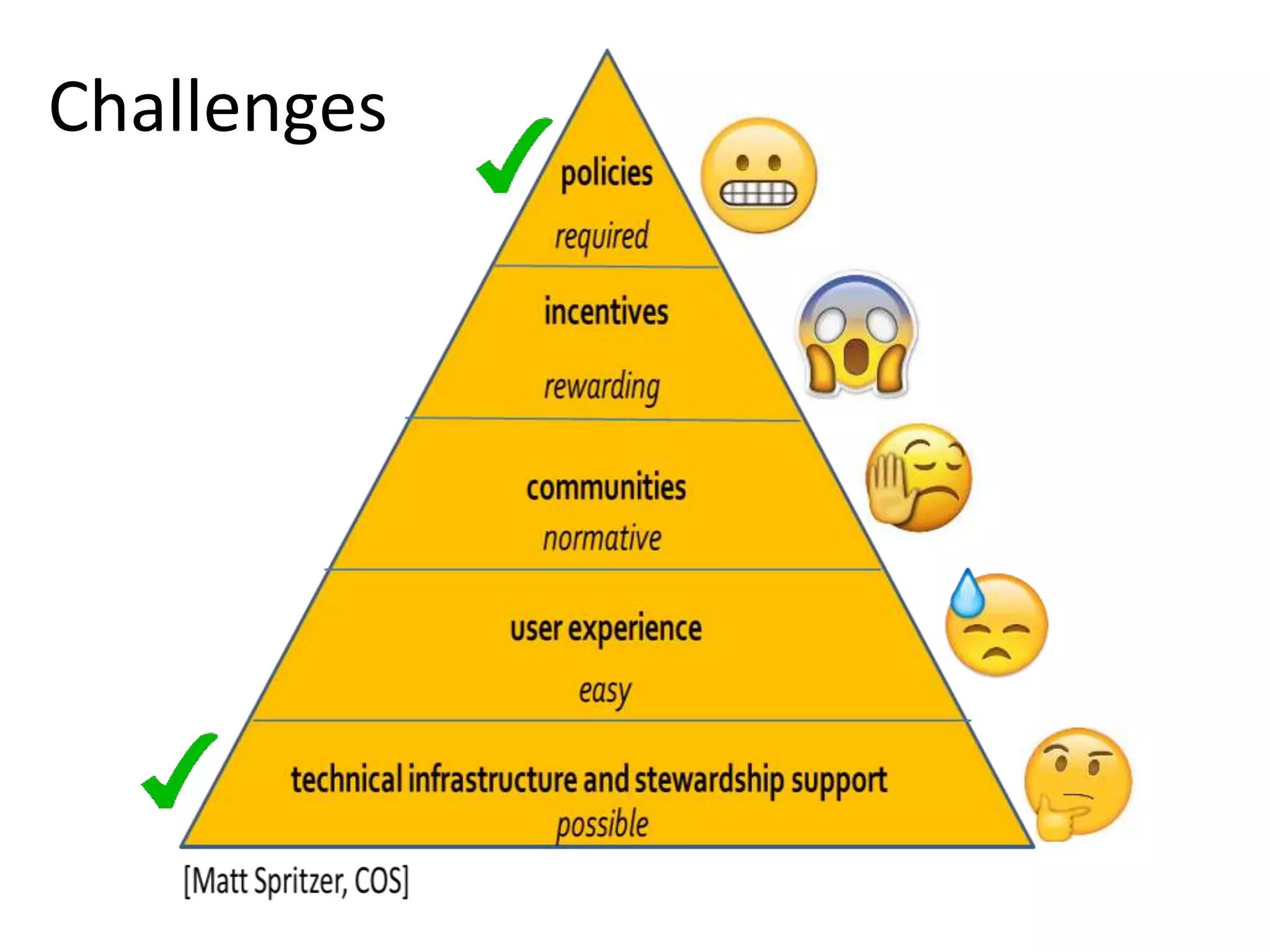

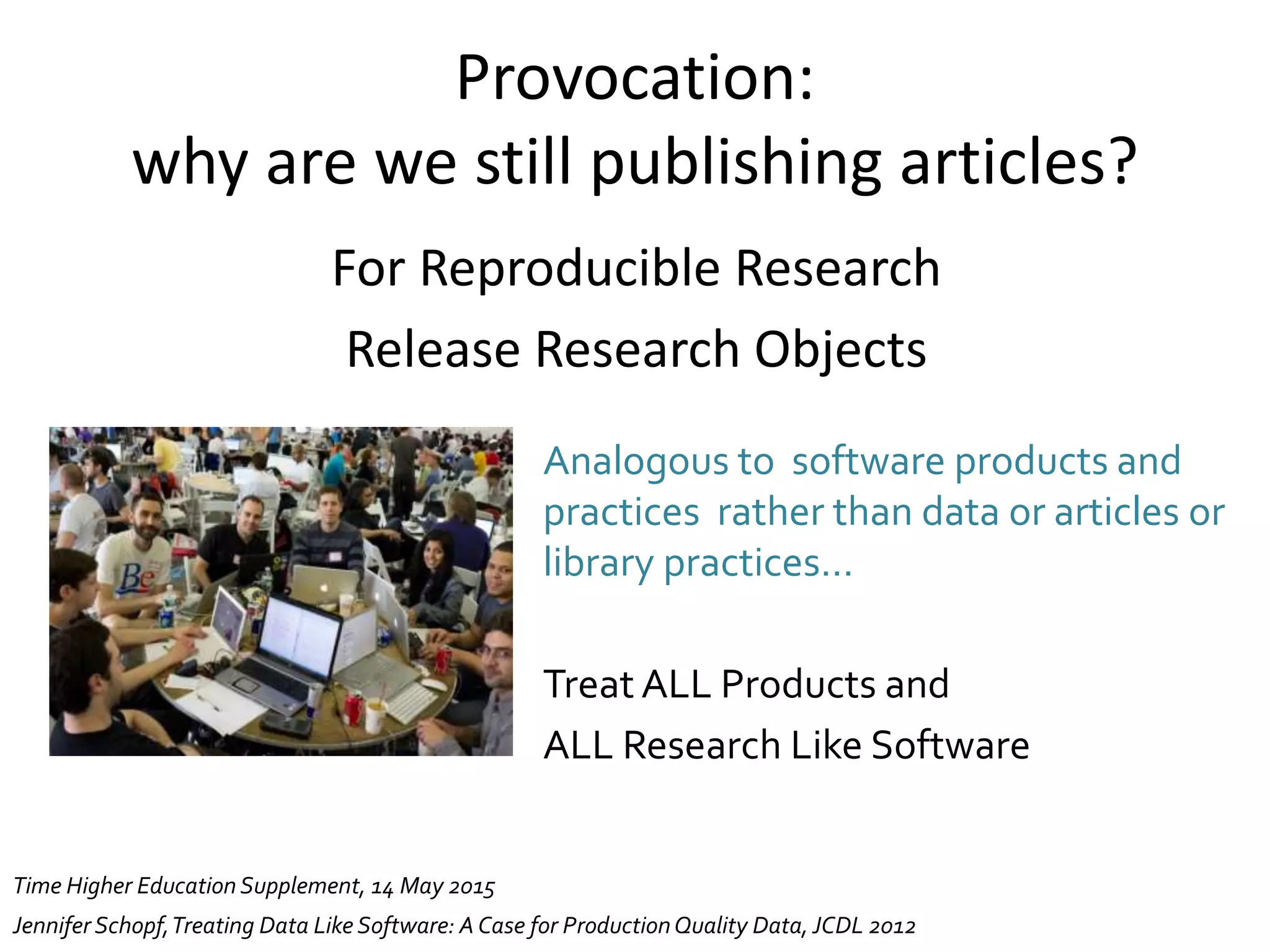

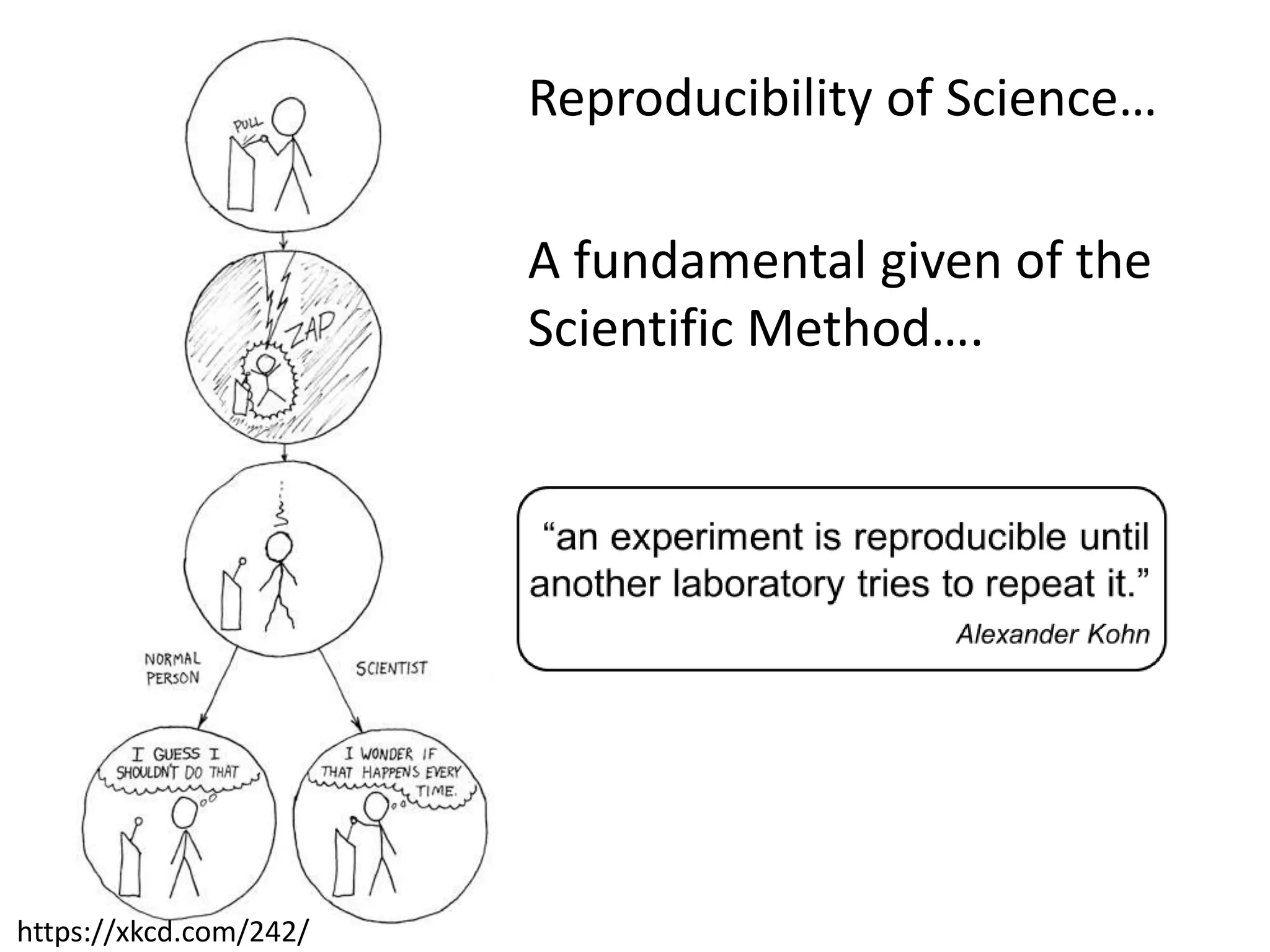

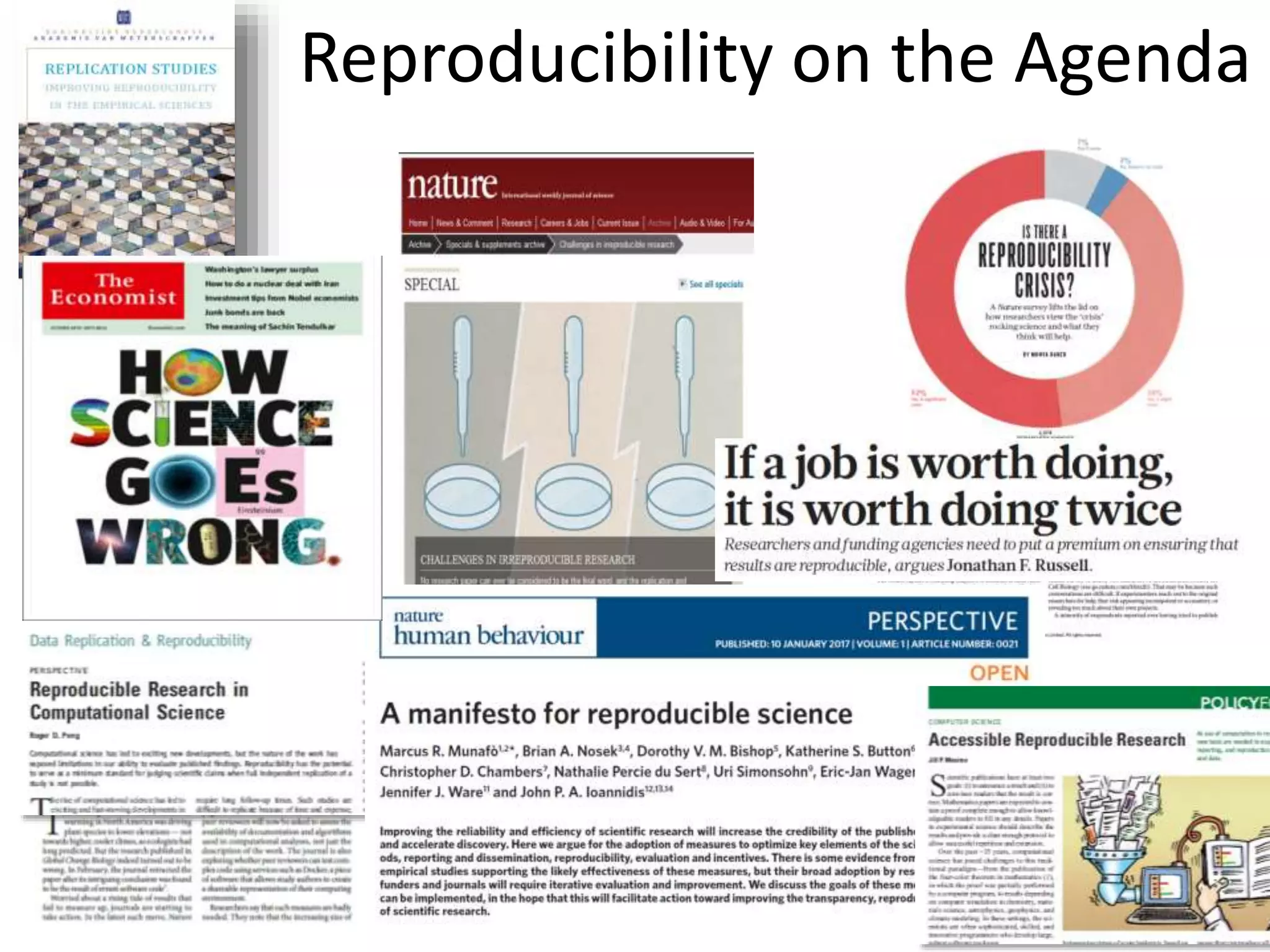

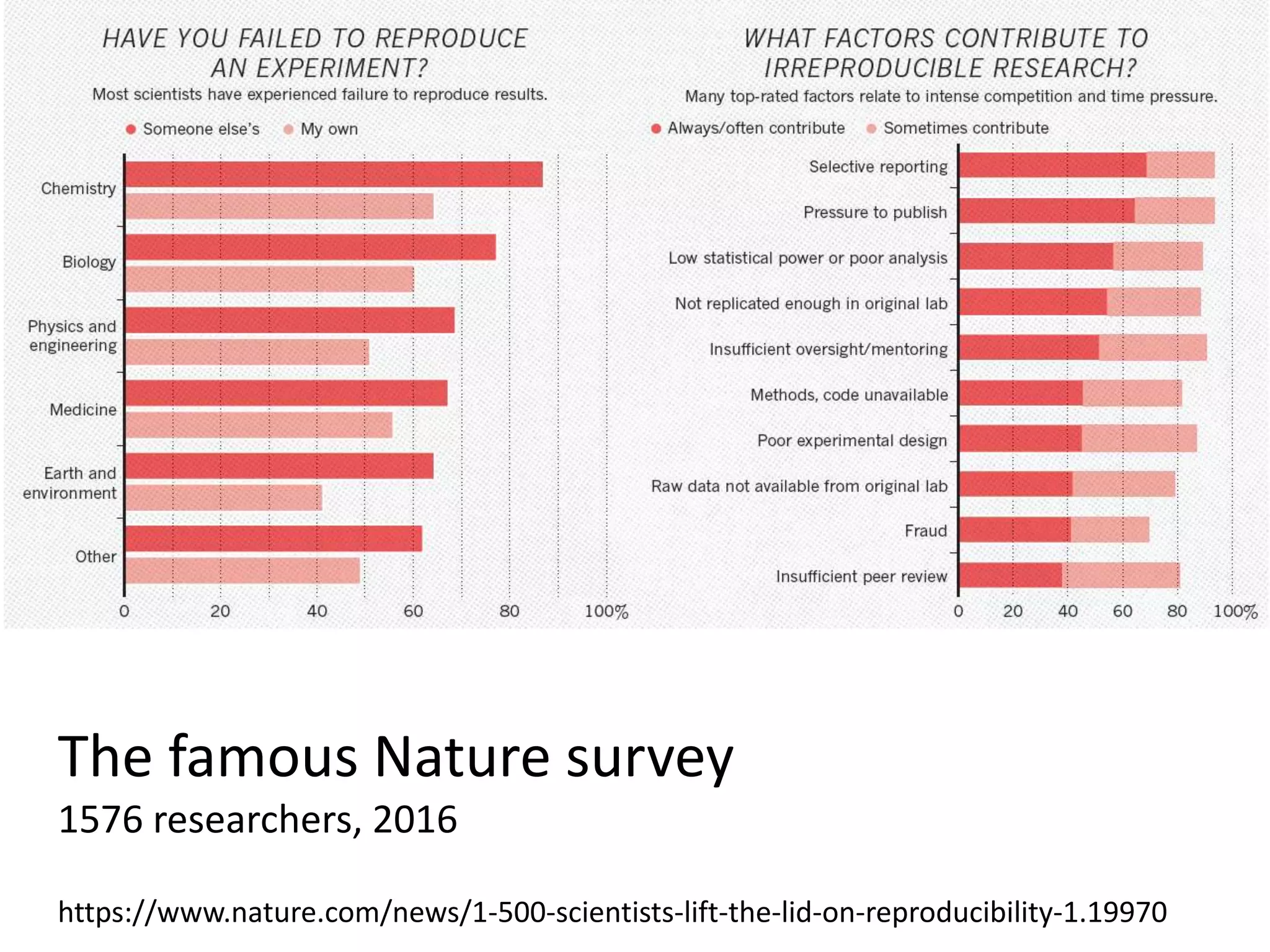

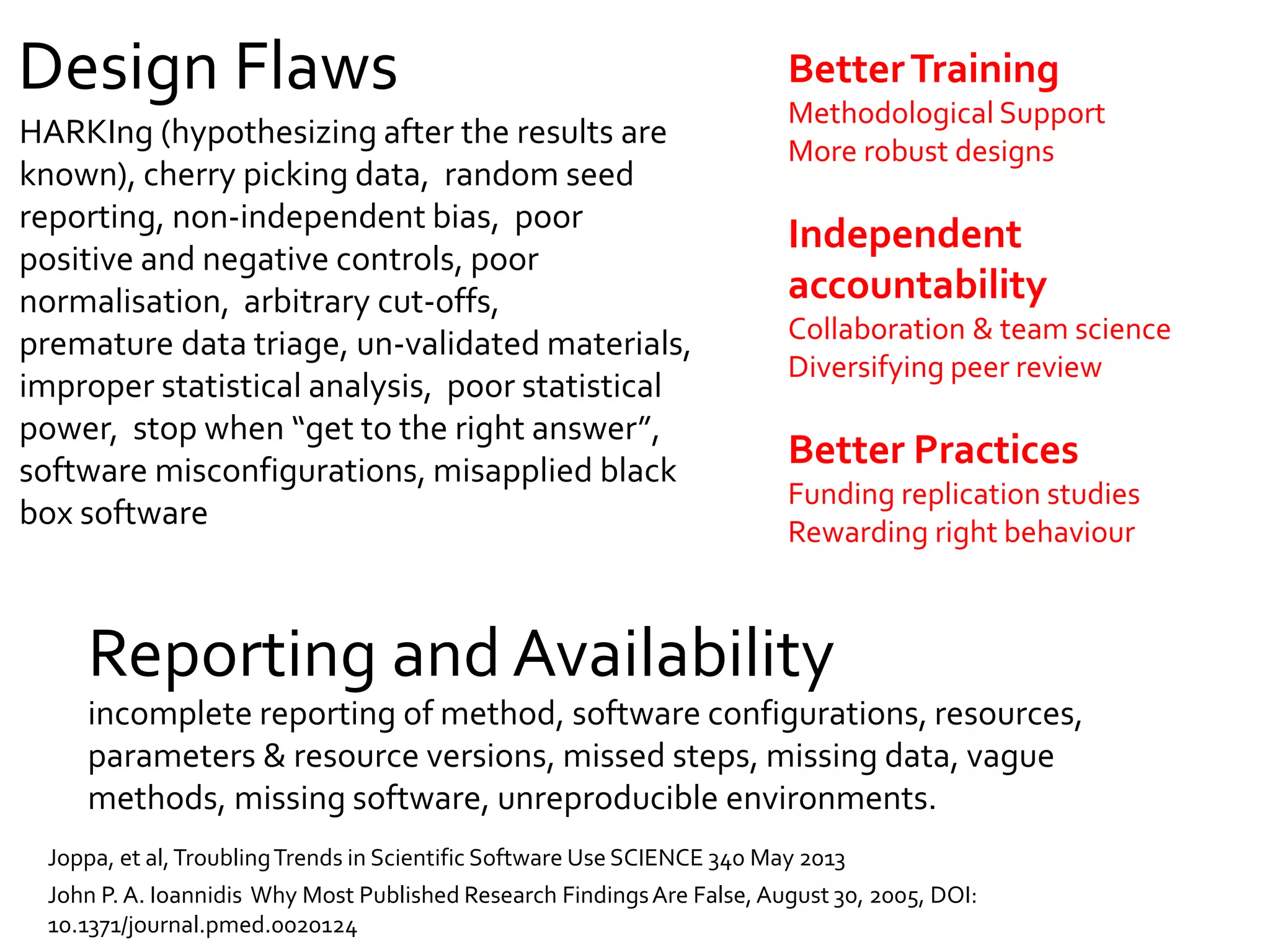

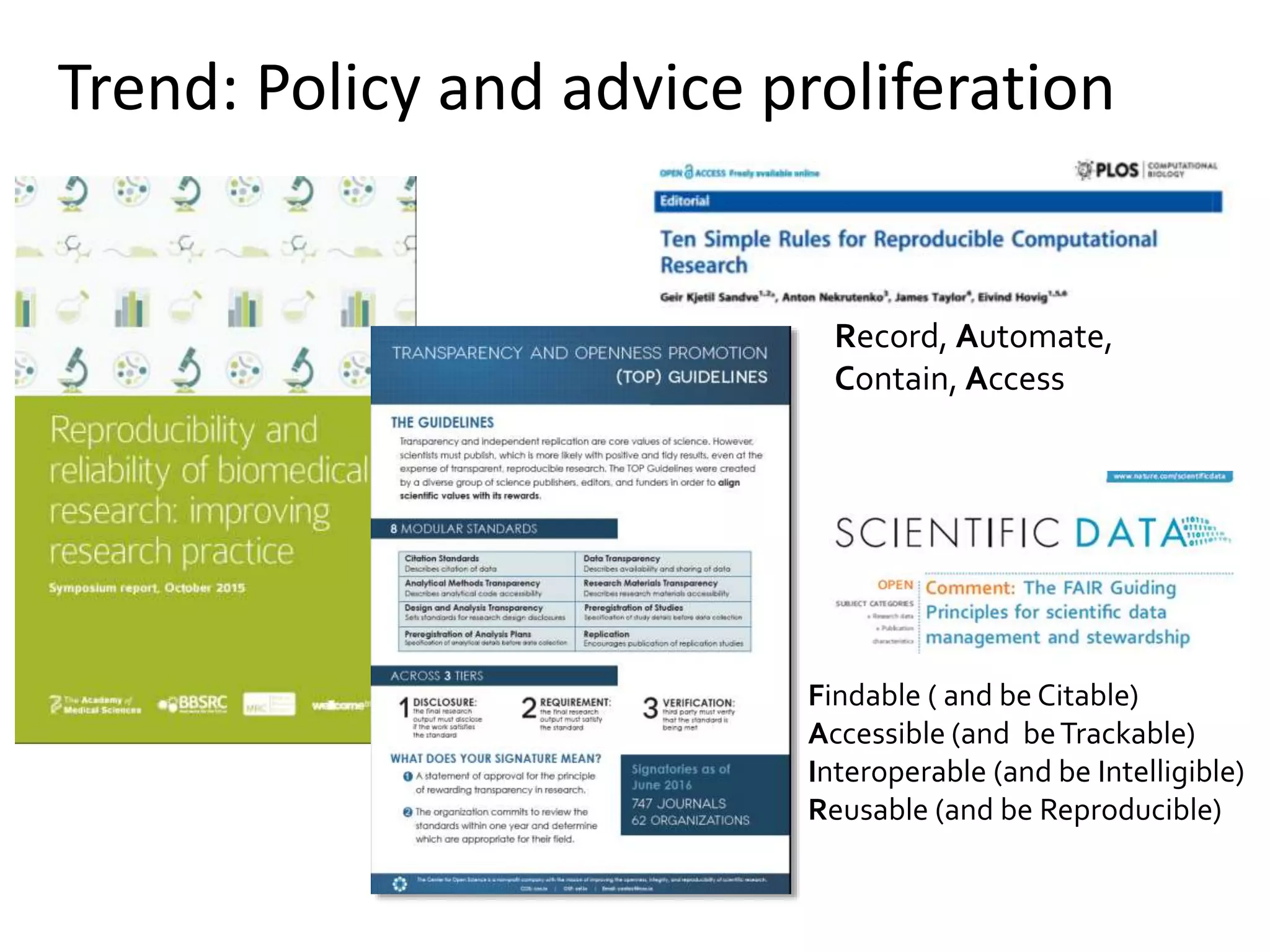

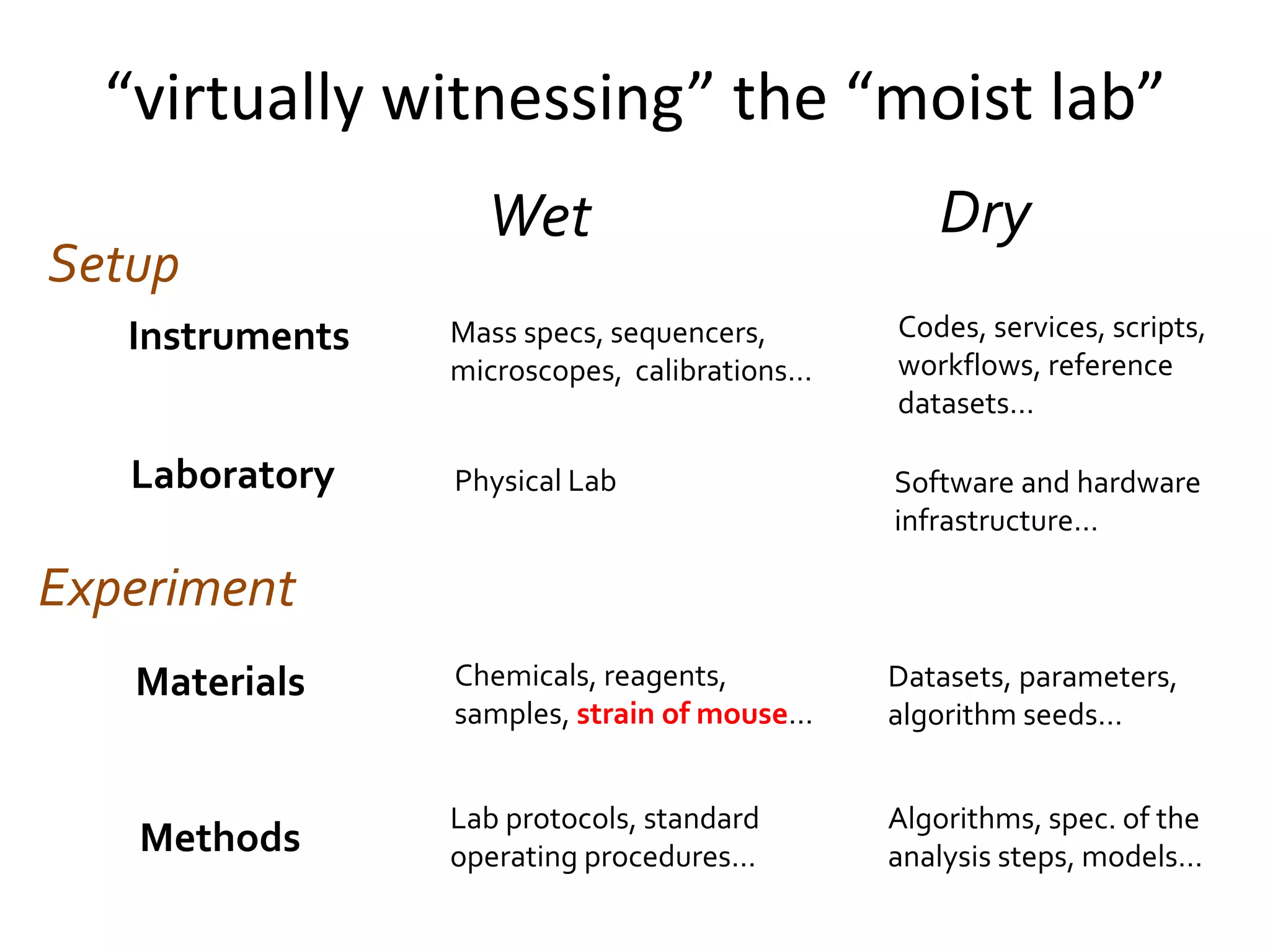

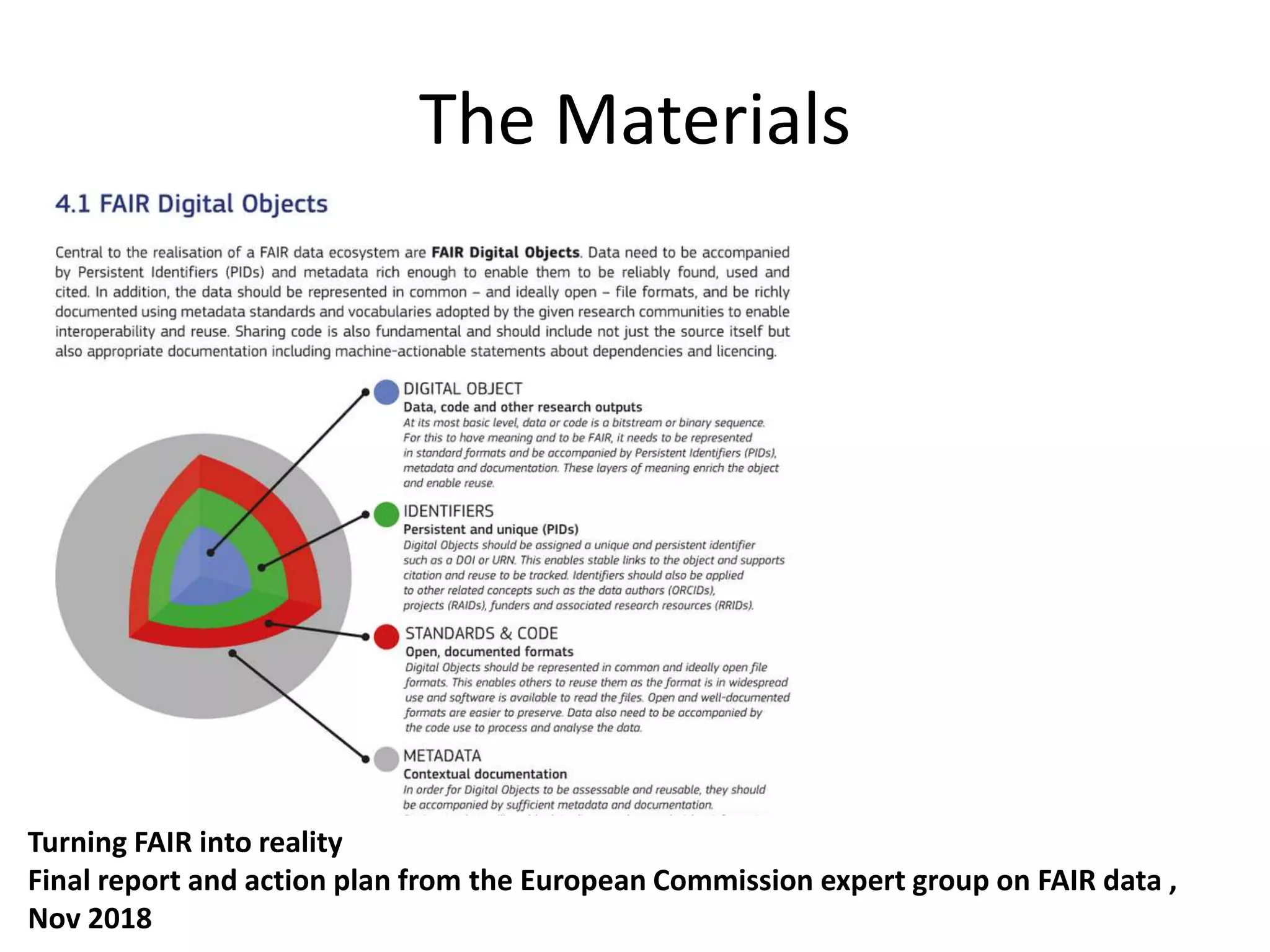

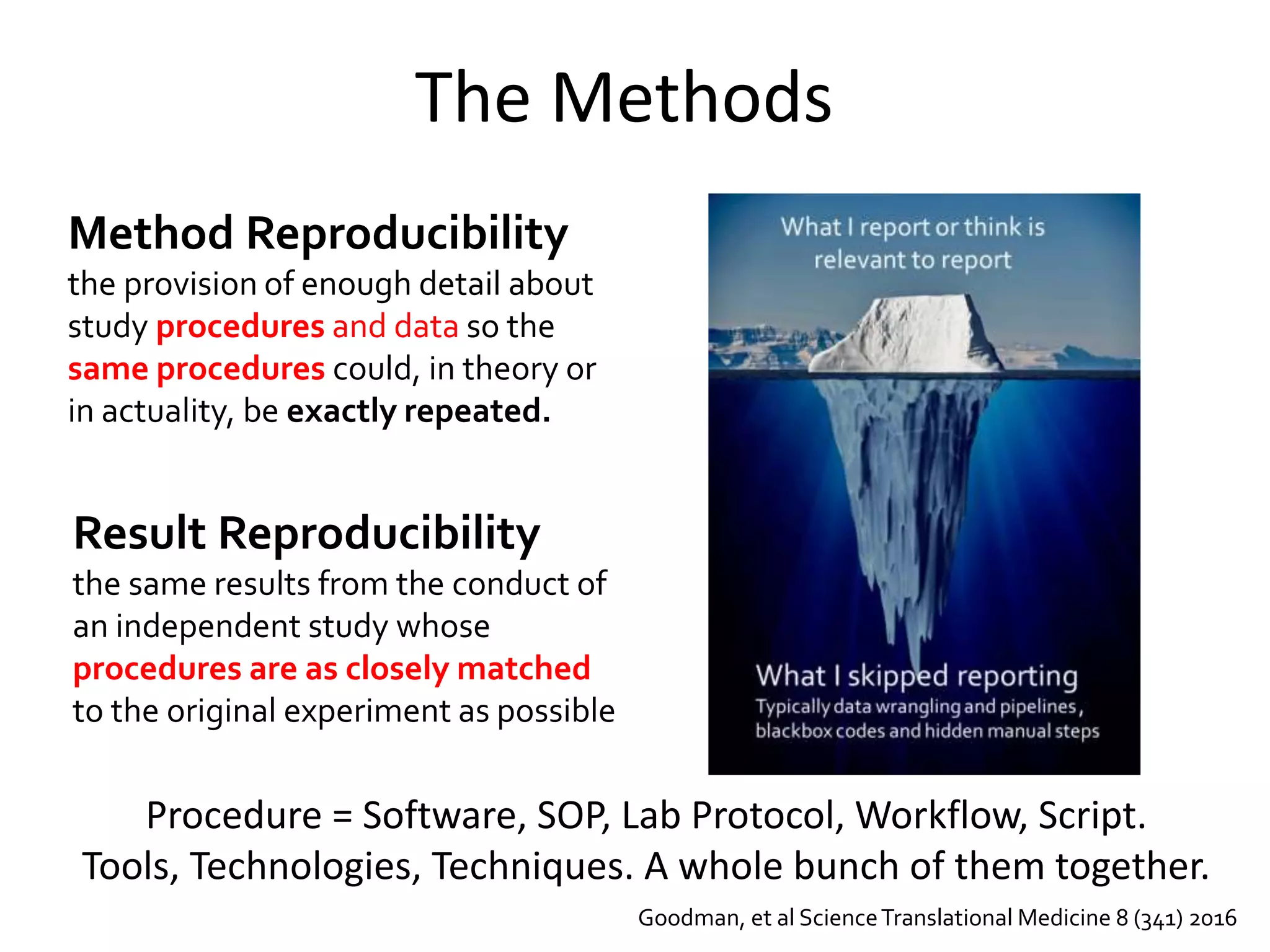

The presentation discusses why reproducibility matters in scientific research and surveys the motivations, challenges, and trends surrounding it. It stresses transparent reporting of methods, data, software, and protocols so that experiments can be repeated and their results validated. It also outlines practices that support reproducibility, such as better training, robust study designs, and closer collaboration within the scientific community.

![The R* Brouhaha: a word cloud of the many "R" terms used for reproducibility](https://image.slidesharecdn.com/goble-ircdlpisa-2019-190301143017/75/Reproducibility-and-the-R-of-Science-motivations-challenges-and-trends-15-2048.jpg)

The R* Brouhaha: re-compute, replicate, rerun, repeat, re-examine, repurpose, recreate, reuse, restore, reconstruct, review, regenerate, revise, recycle, redo, remix, repair, robustness, tolerance, verify, compliance, validate, assurance. Conceptually replicate: "show A is true by doing B rather than doing A again" [Yong, Nature 485, 2012]. Verify but not falsify.