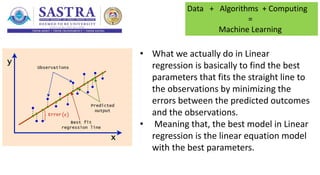

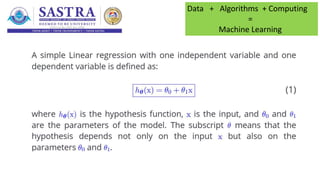

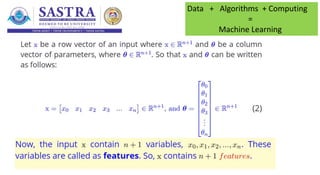

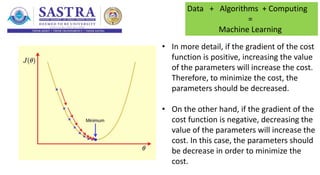

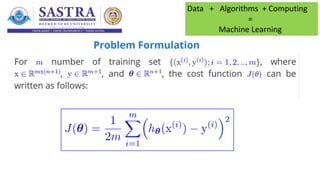

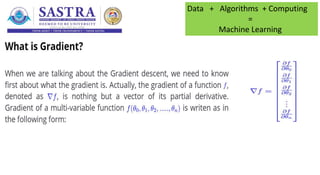

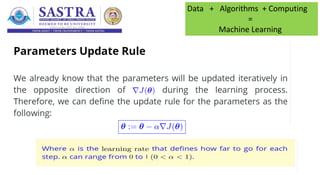

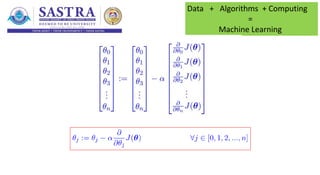

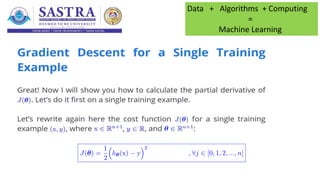

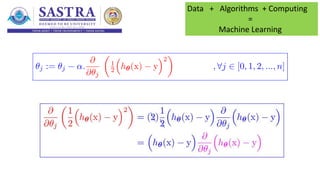

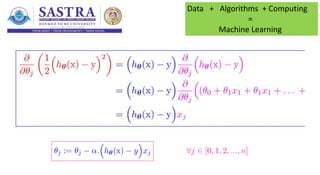

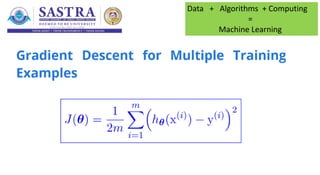

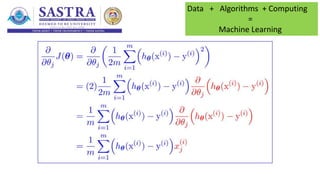

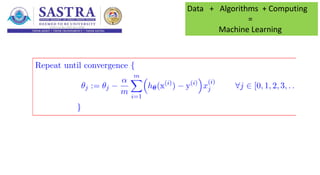

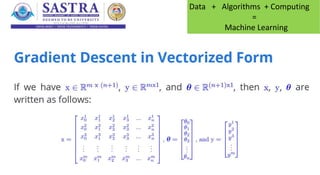

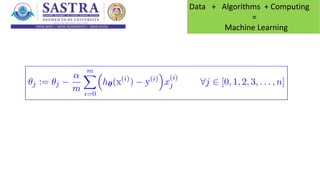

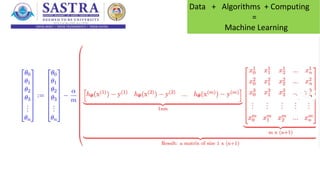

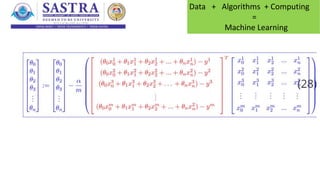

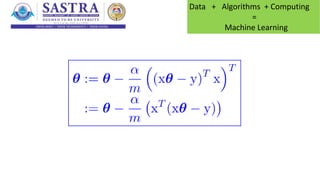

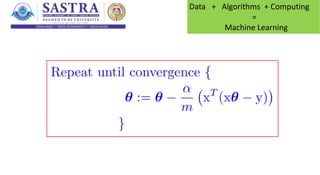

Gradient descent is an iterative optimization algorithm commonly used to fit linear regression models. The goal of linear regression is to find the linear equation that best describes the relationship between the independent and dependent variables, meaning the one that minimizes the error between predicted and observed values as measured by a cost function (typically the mean squared error). Gradient descent searches for those parameters by starting from an initial guess and repeatedly moving each parameter a small step in the direction opposite the gradient of the cost function with respect to that parameter, until the cost stops decreasing.
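
The update loop can be illustrated with a minimal sketch in plain Python. It assumes a single input variable, a mean squared error cost, and batch updates over the whole dataset; the function name `fit_line`, the learning rate, the iteration count, and the toy data are all illustrative choices, not values taken from the text above.

```python
def fit_line(xs, ys, learning_rate=0.05, n_iters=2000):
    """Fit y ~ w * x + b by batch gradient descent on mean squared error.

    Hypothetical helper for illustration; learning_rate and n_iters are
    assumed defaults that happen to work for the small example below.
    """
    n = len(xs)
    w, b = 0.0, 0.0  # initial guess for slope and intercept

    for _ in range(n_iters):
        # Prediction error for every sample under the current parameters.
        errors = [w * x + b - y for x, y in zip(xs, ys)]

        # Gradient of the cost (1/n) * sum(error^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)

        # Step against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b

    return w, b


# Toy data generated from y = 2x + 1 with no noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)
print(w, b)  # should be close to 2 and 1
```

The learning rate controls the step size: too large a value can make the updates diverge, while too small a value makes convergence slow, so in practice it is usually tuned for the dataset at hand.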