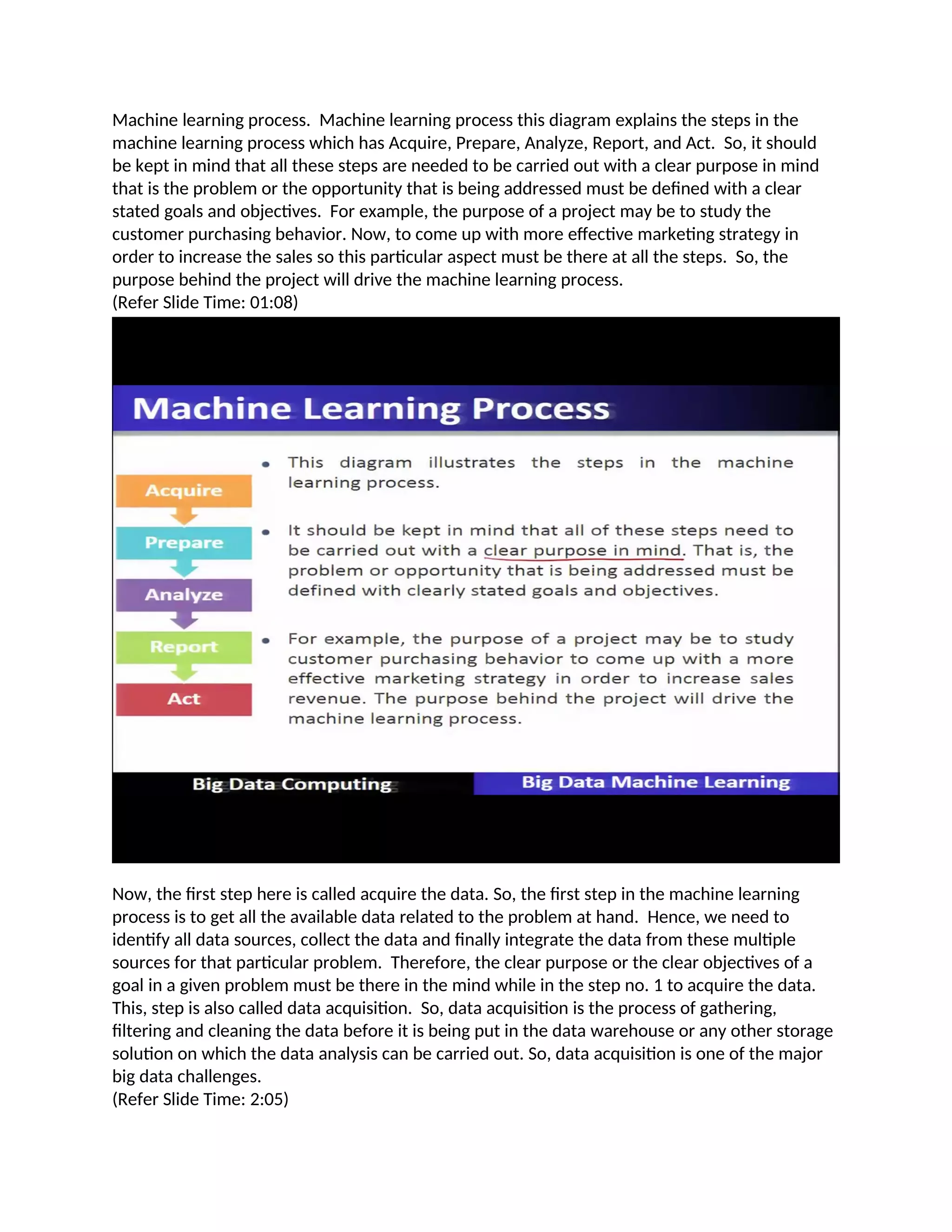

The document describes the machine learning process, which consists of 5 steps: 1) Acquire data from various sources and integrate it. 2) Prepare the data by exploring, cleaning, and preprocessing it. 3) Analyze the data by selecting a machine learning technique, building a model, and evaluating results. 4) Report results through visualizations and insights. 5) Act on insights by implementing recommendations to improve processes. The goal is to define a problem, acquire related data, analyze it to gain insights, and take actions to address the problem.