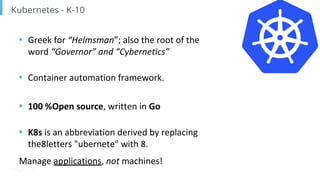

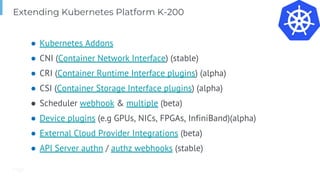

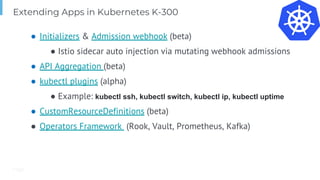

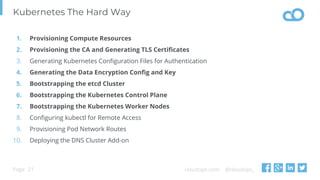

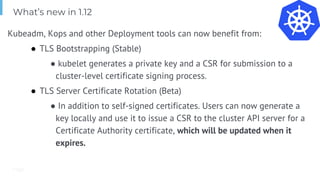

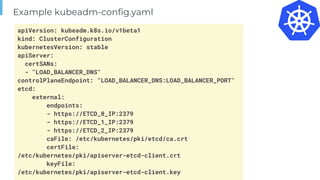

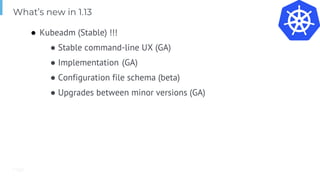

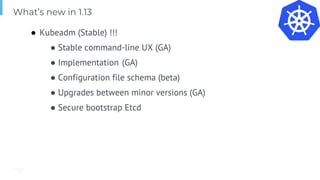

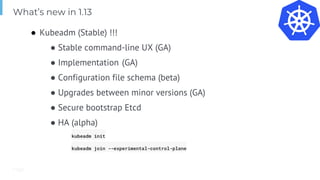

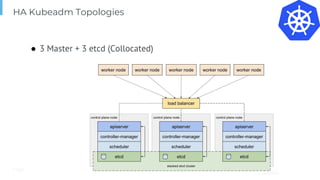

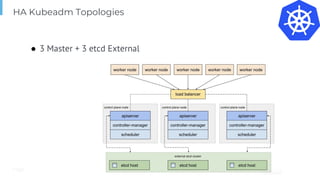

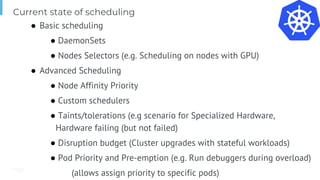

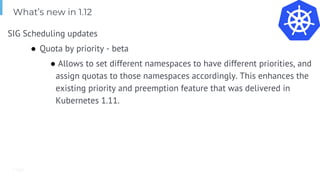

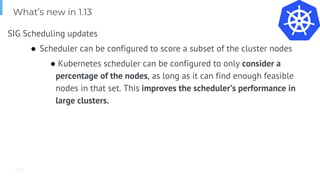

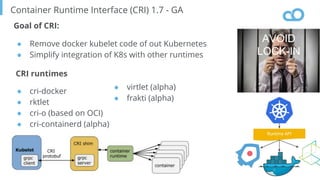

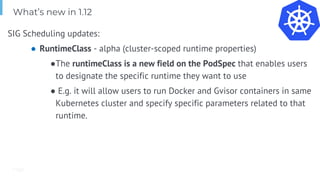

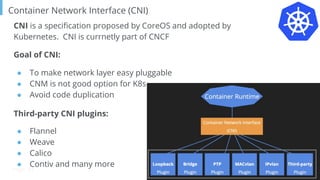

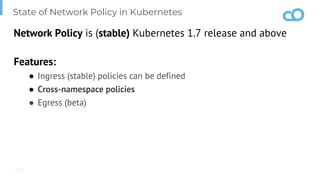

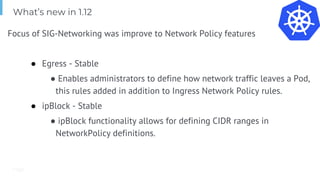

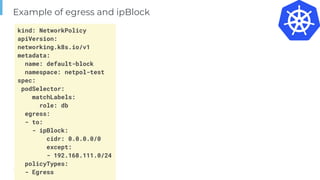

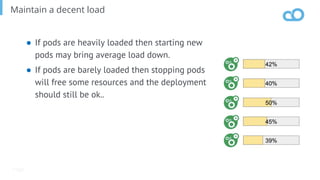

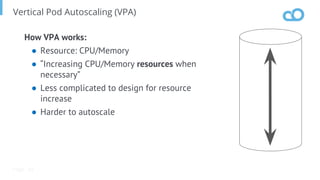

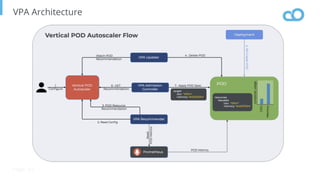

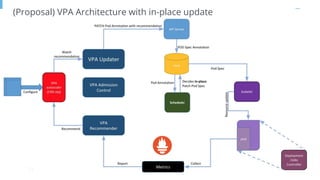

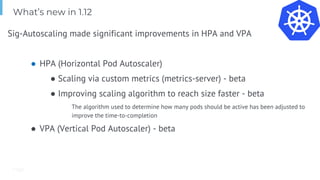

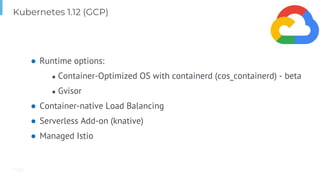

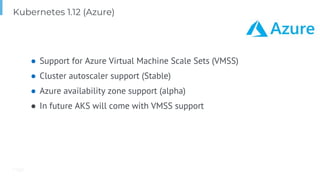

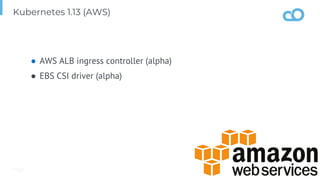

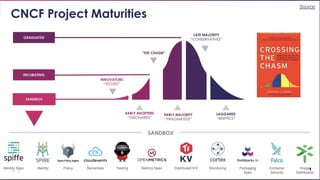

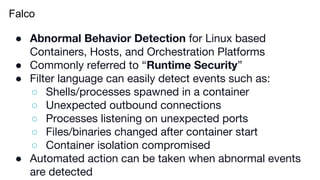

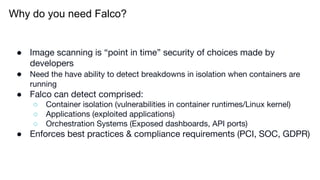

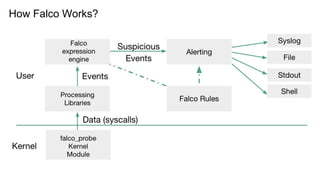

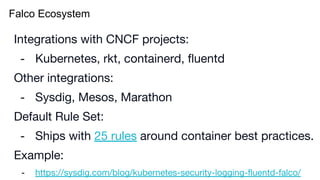

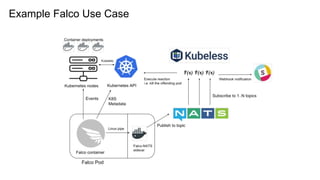

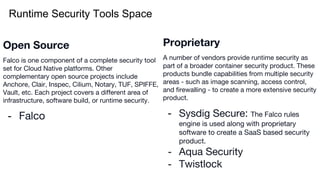

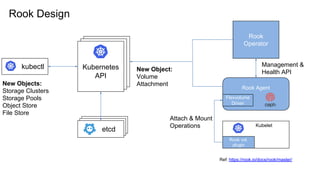

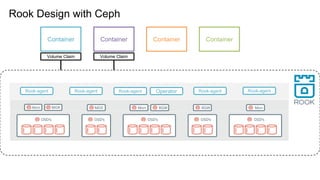

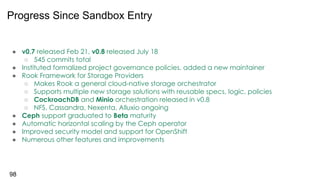

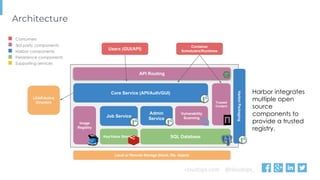

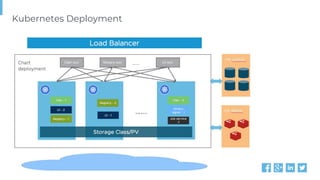

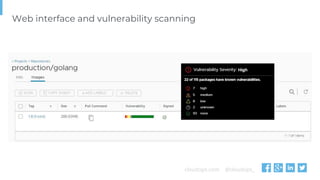

The document outlines topics in Kubernetes and cloud-native computing, including upcoming events, educational workshops, and new features in Kubernetes versions 1.12 and 1.13. It discusses advancements in the Container Runtime Interface (CRI), scheduling enhancements, and storage solutions through the Rook project. It also highlights security tools such as Falco for runtime threat detection, and it mentions community engagement through meetups and sponsorship opportunities.