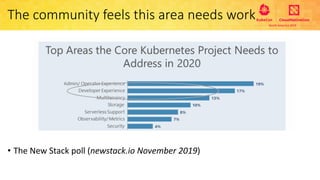

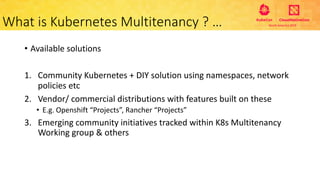

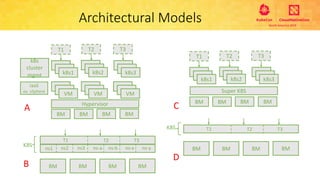

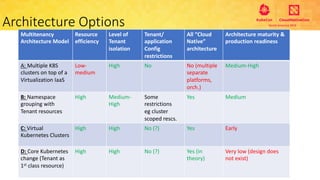

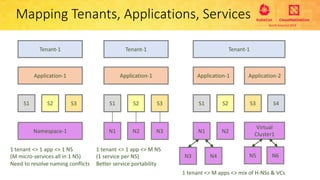

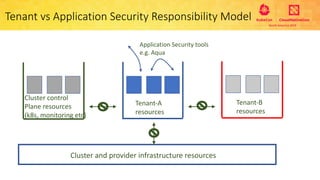

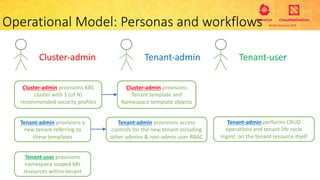

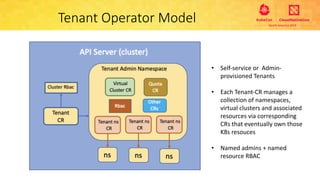

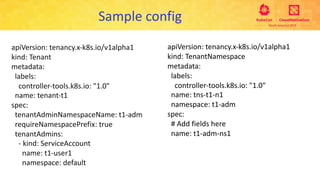

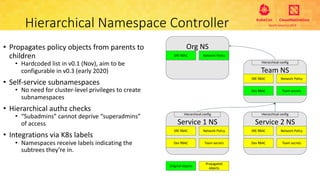

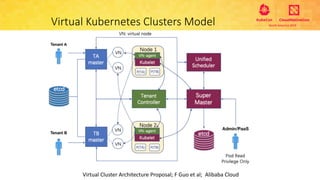

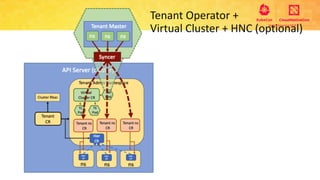

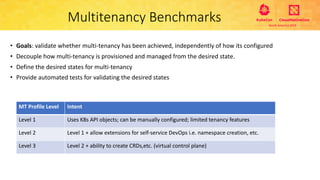

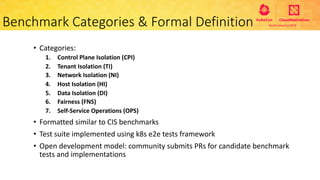

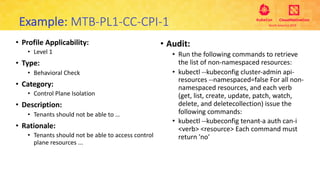

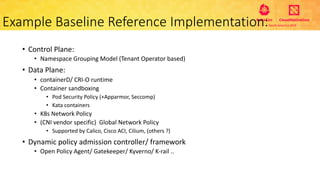

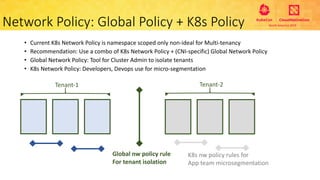

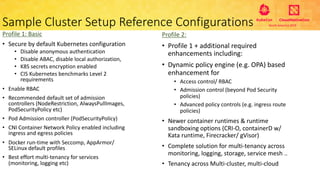

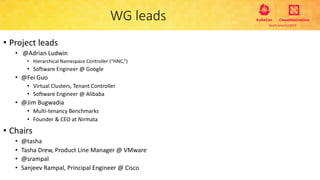

This document provides an overview and agenda for a presentation on secure multitenancy in Kubernetes. It discusses what Kubernetes multitenancy is, the solutions currently available, and architectural models for multitenancy, including namespace grouping and virtual Kubernetes clusters. It also covers community initiatives for the multitenancy control plane, including tenant controllers and hierarchical namespaces. Finally, it outlines benchmarking categories and a proposed baseline reference implementation for multitenancy, covering control plane, data plane, and network isolation techniques.