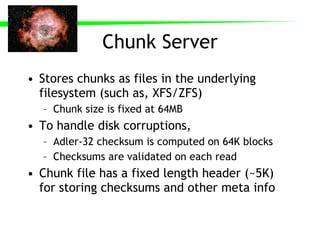

The Kosmos Filesystem (KFS) is a scalable distributed storage system designed for large datasets. It runs on commodity hardware and tolerates failures by replicating and versioning file chunks across multiple servers. The system consists of a metadata server and a set of chunkservers; client libraries provide a POSIX-like interface.
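
To make the division of labor concrete, here is a minimal in-memory sketch of the idea, not KFS code. It assumes a metadata server that records which chunkservers hold each versioned chunk, and a read path that falls back to any live replica. The class names (`ChunkServer`, `MetadataServer`), the round-robin placement, the replica count of 3, and the tiny 4-byte chunk size are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ChunkServer:
    """Hypothetical stand-in for a chunkserver: stores versioned chunks in memory."""
    name: str
    alive: bool = True
    # Maps (path, chunk_index) -> (version, chunk_bytes).
    chunks: dict = field(default_factory=dict)


class MetadataServer:
    """Hypothetical stand-in for the metadata server: tracks chunk locations only."""

    def __init__(self, servers, replicas=3, chunk_size=4):
        self.servers = servers
        self.replicas = replicas      # assumed replication factor
        self.chunk_size = chunk_size  # tiny on purpose, for illustration
        self.locations = {}           # (path, index) -> (version, [ChunkServer])
        self.chunk_counts = {}        # path -> number of chunks

    def write(self, path, data):
        live = [s for s in self.servers if s.alive]
        if not live:
            raise IOError("no live chunkservers")
        pieces = [data[i:i + self.chunk_size]
                  for i in range(0, len(data), self.chunk_size)]
        for index, piece in enumerate(pieces):
            key = (path, index)
            # Bump the version on every write so stale copies can be detected.
            version = self.locations.get(key, (0, []))[0] + 1
            # Round-robin placement: rotate the server list per chunk.
            start = index % len(live)
            targets = (live[start:] + live[:start])[:self.replicas]
            for server in targets:
                server.chunks[key] = (version, piece)
            self.locations[key] = (version, targets)
        self.chunk_counts[path] = len(pieces)

    def read(self, path):
        result = b""
        for index in range(self.chunk_counts[path]):
            key = (path, index)
            version, holders = self.locations[key]
            for server in holders:
                stored = server.chunks.get(key)
                # Accept only a live replica holding the current version.
                if server.alive and stored is not None and stored[0] == version:
                    result += stored[1]
                    break
            else:
                raise IOError(f"no live replica for chunk {index} of {path}")
        return result


servers = [ChunkServer(f"cs{i}") for i in range(4)]
meta = MetadataServer(servers)
meta.write("/demo", b"hello kosmos")

# Simulate two chunkserver failures; every chunk still has a live replica.
servers[0].alive = False
servers[1].alive = False
print(meta.read("/demo"))
```

In this toy model the data never passes through the metadata server's lookup table, which holds only locations and versions; a real deployment would also need re-replication after failures, which the sketch omits.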