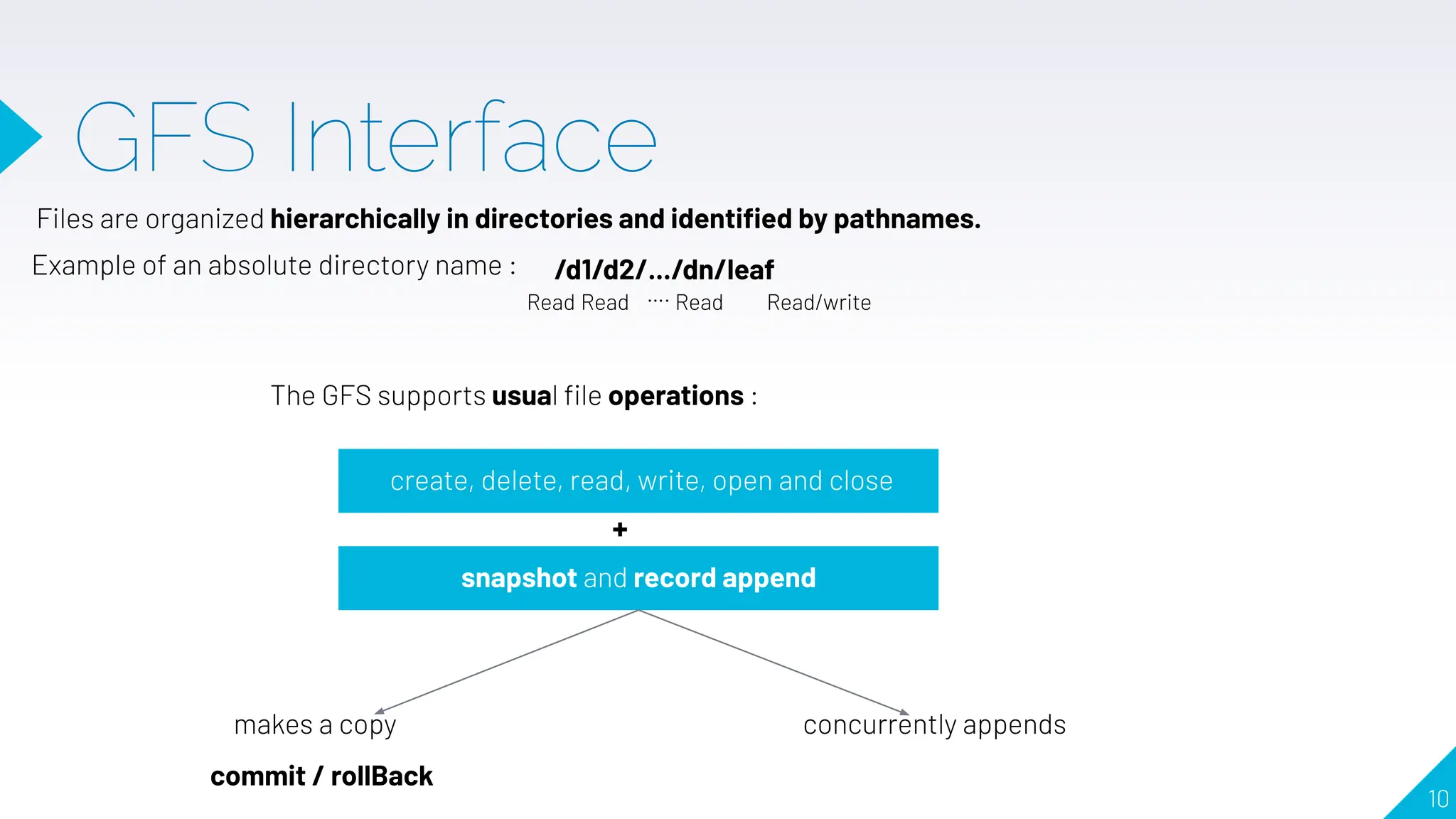

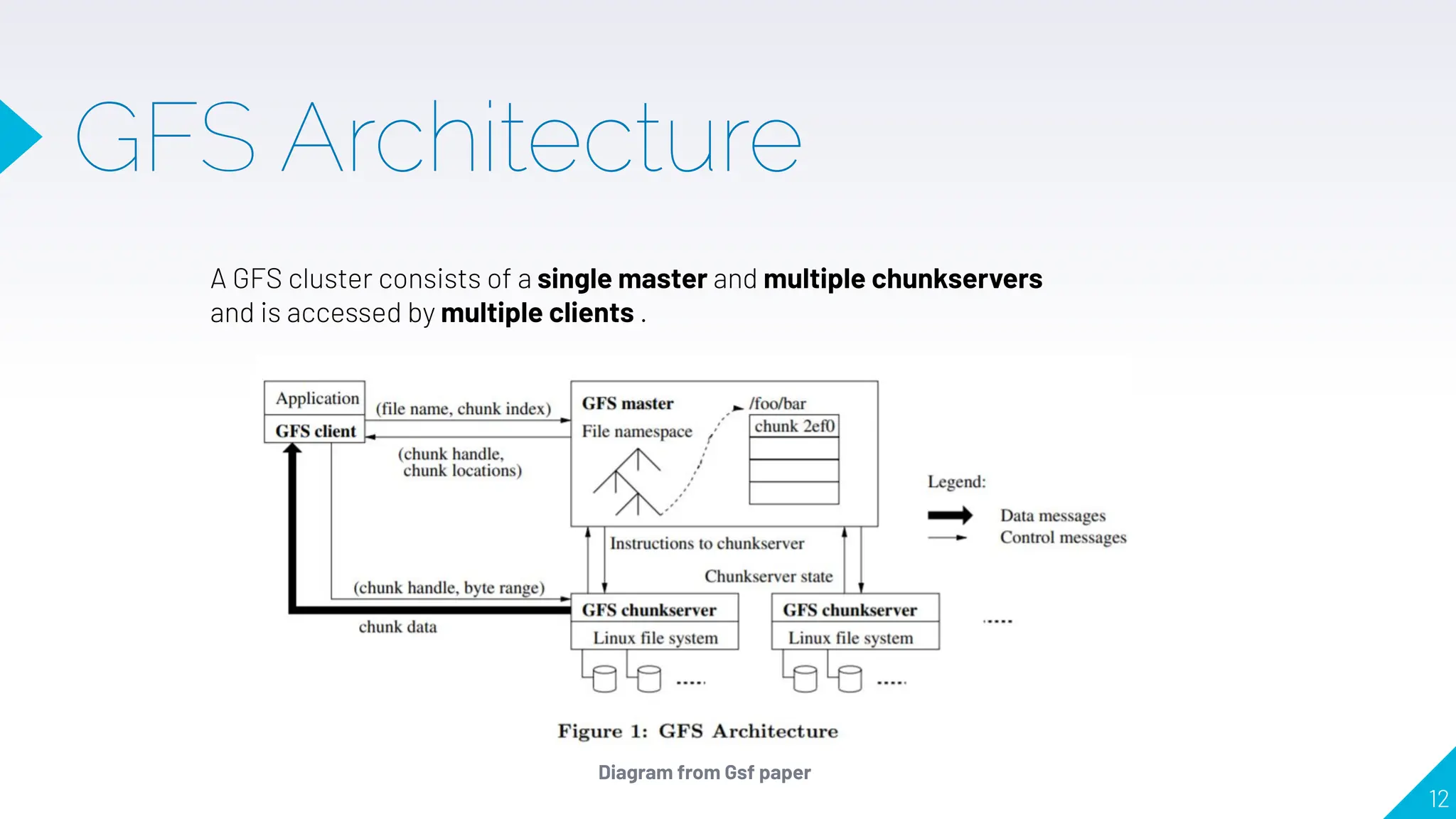

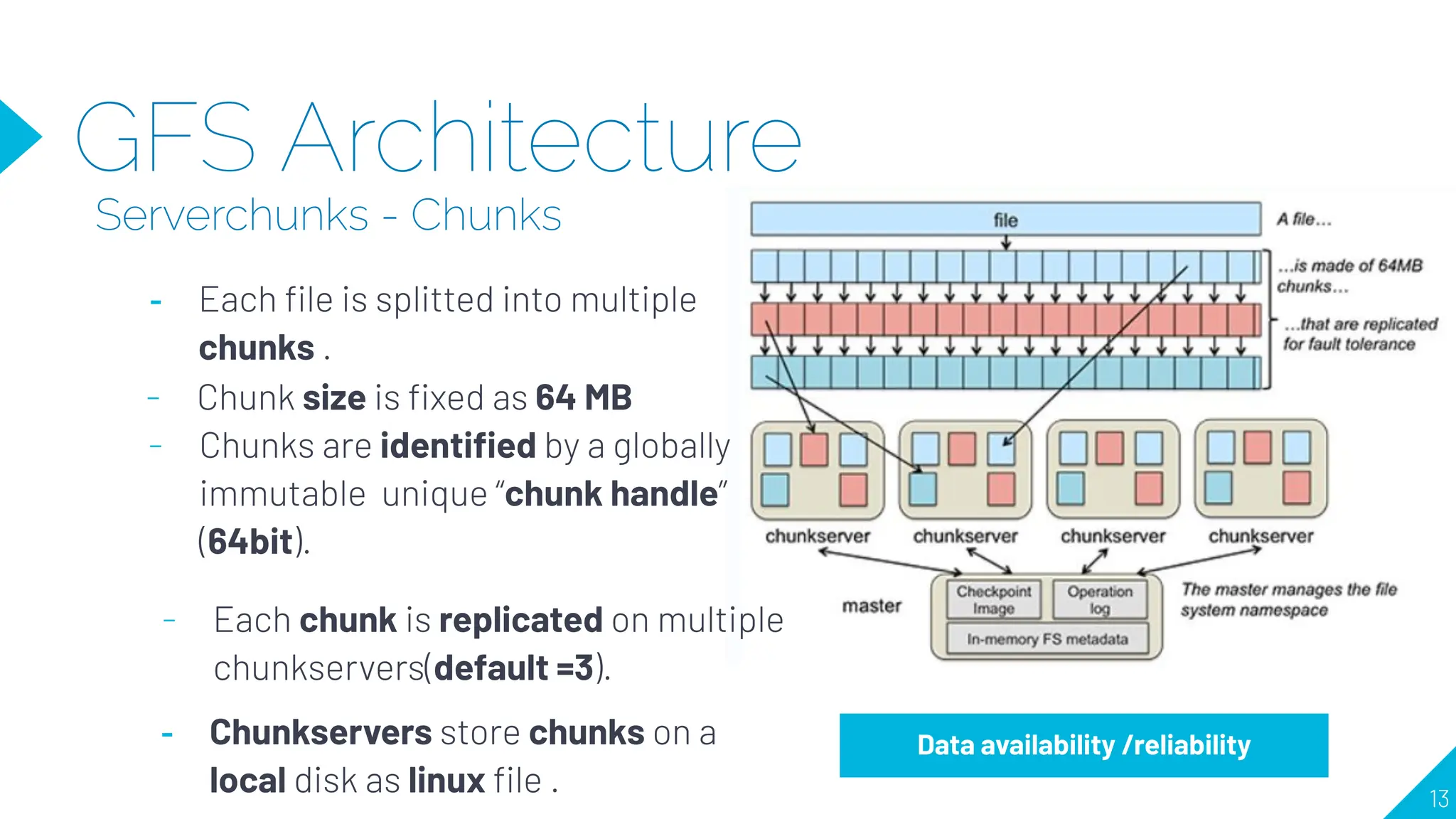

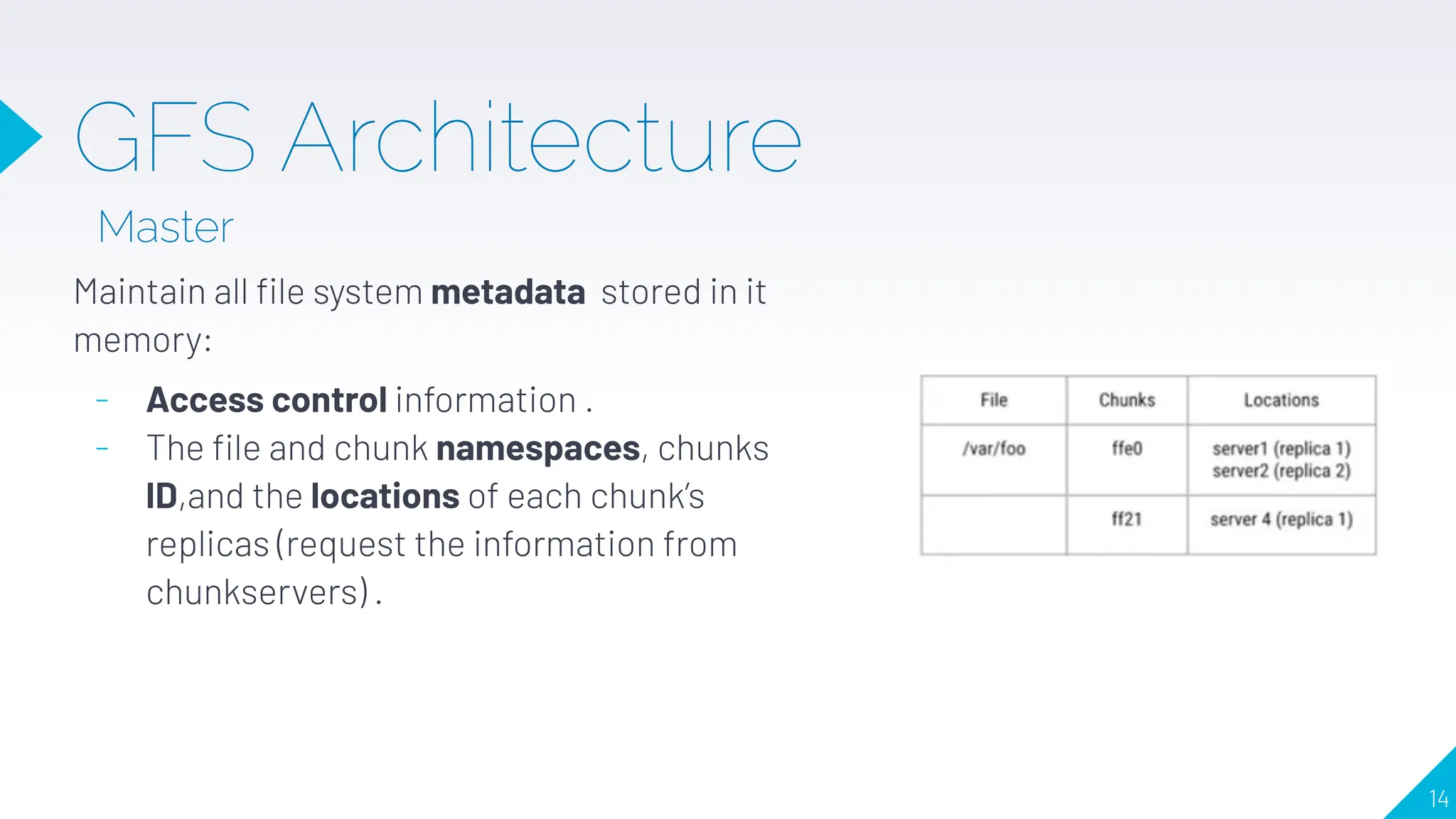

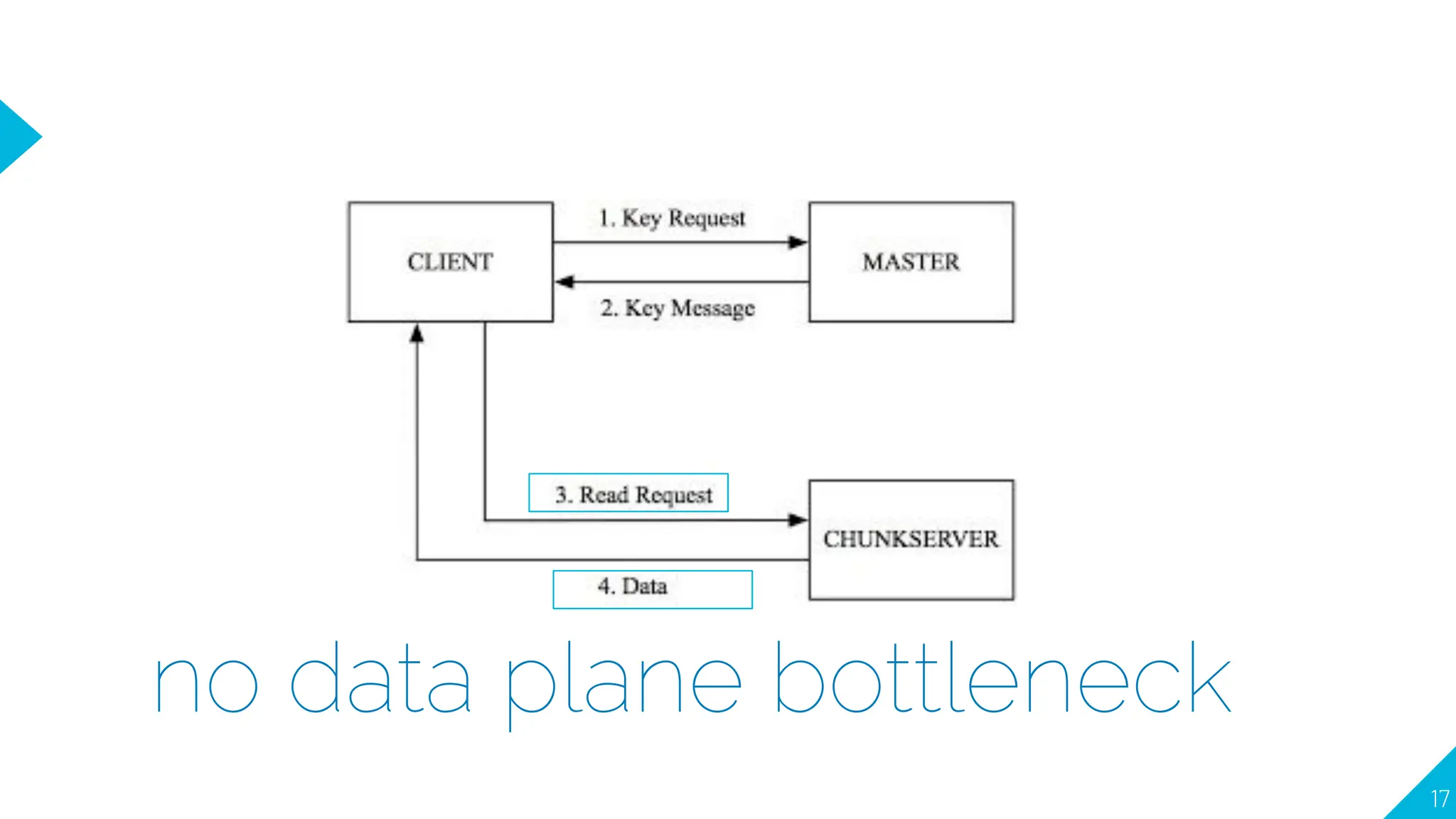

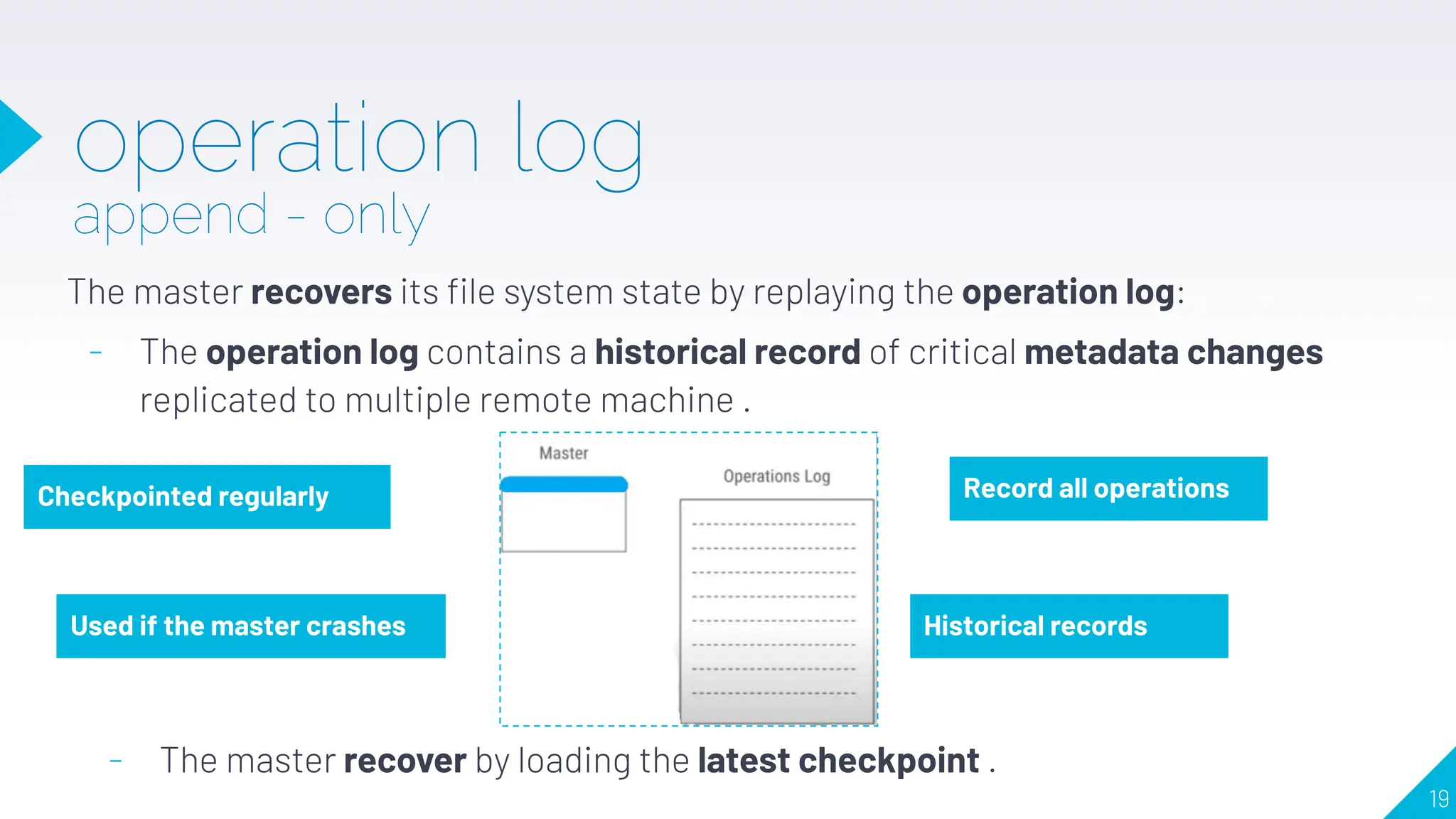

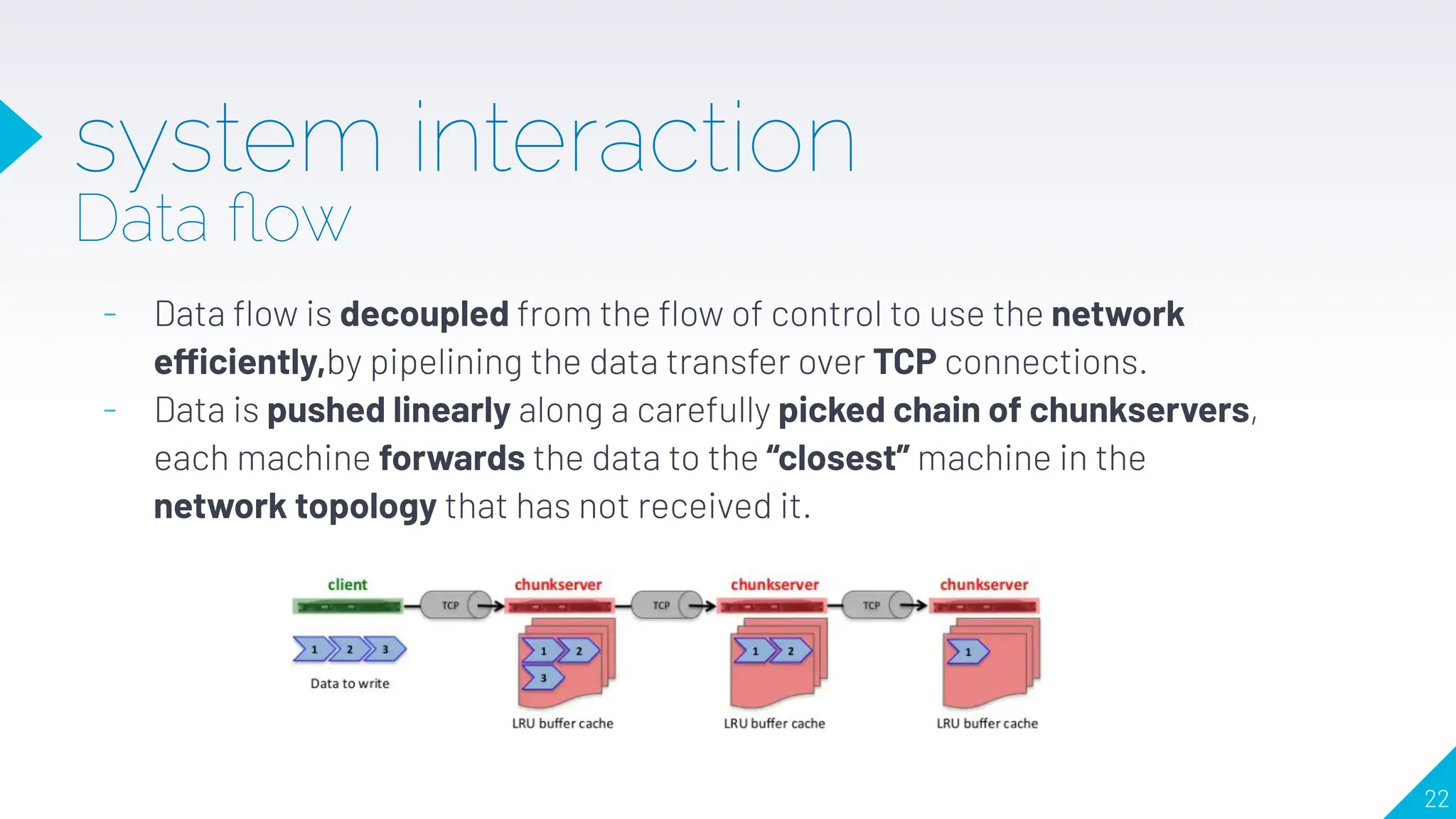

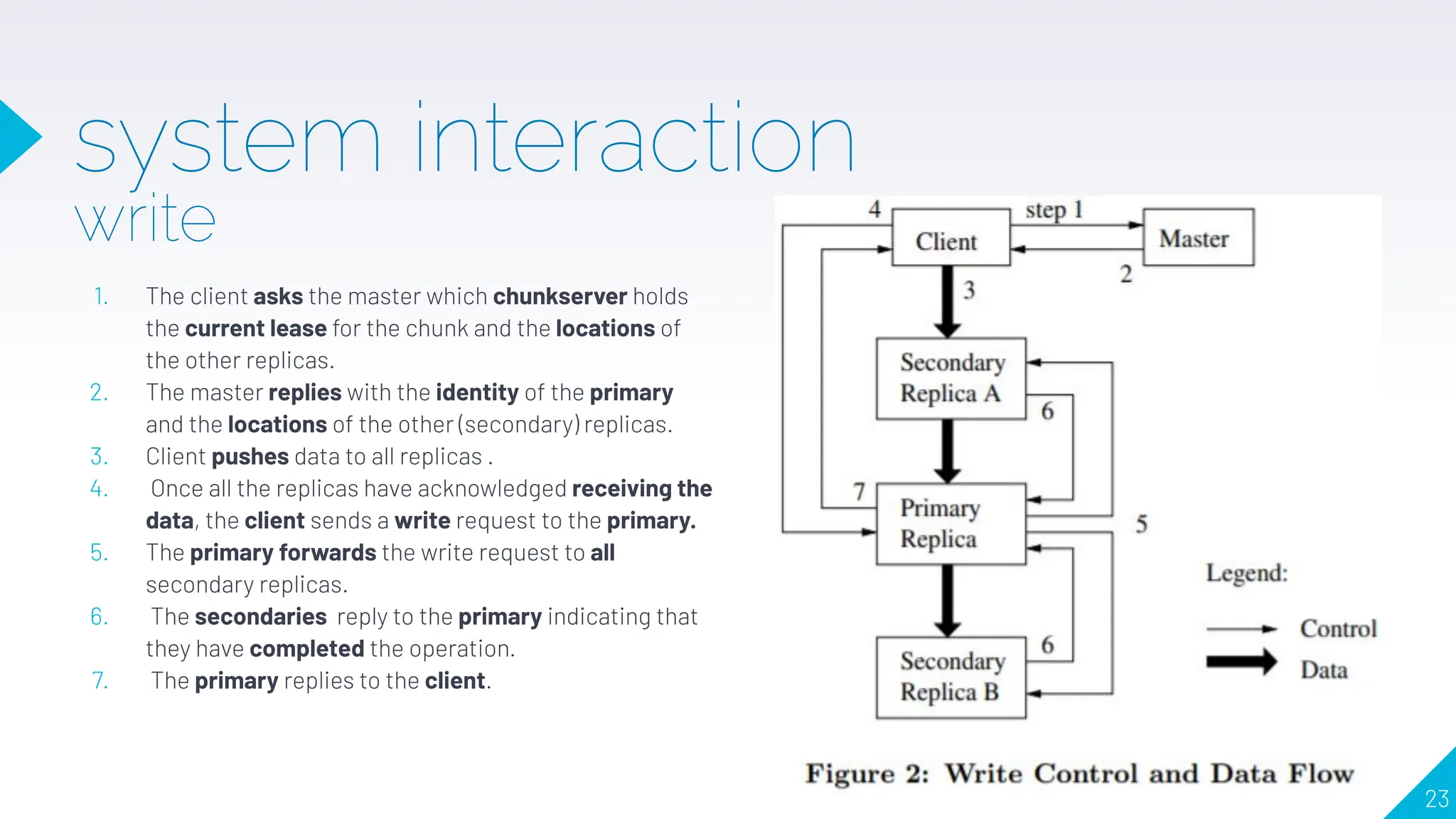

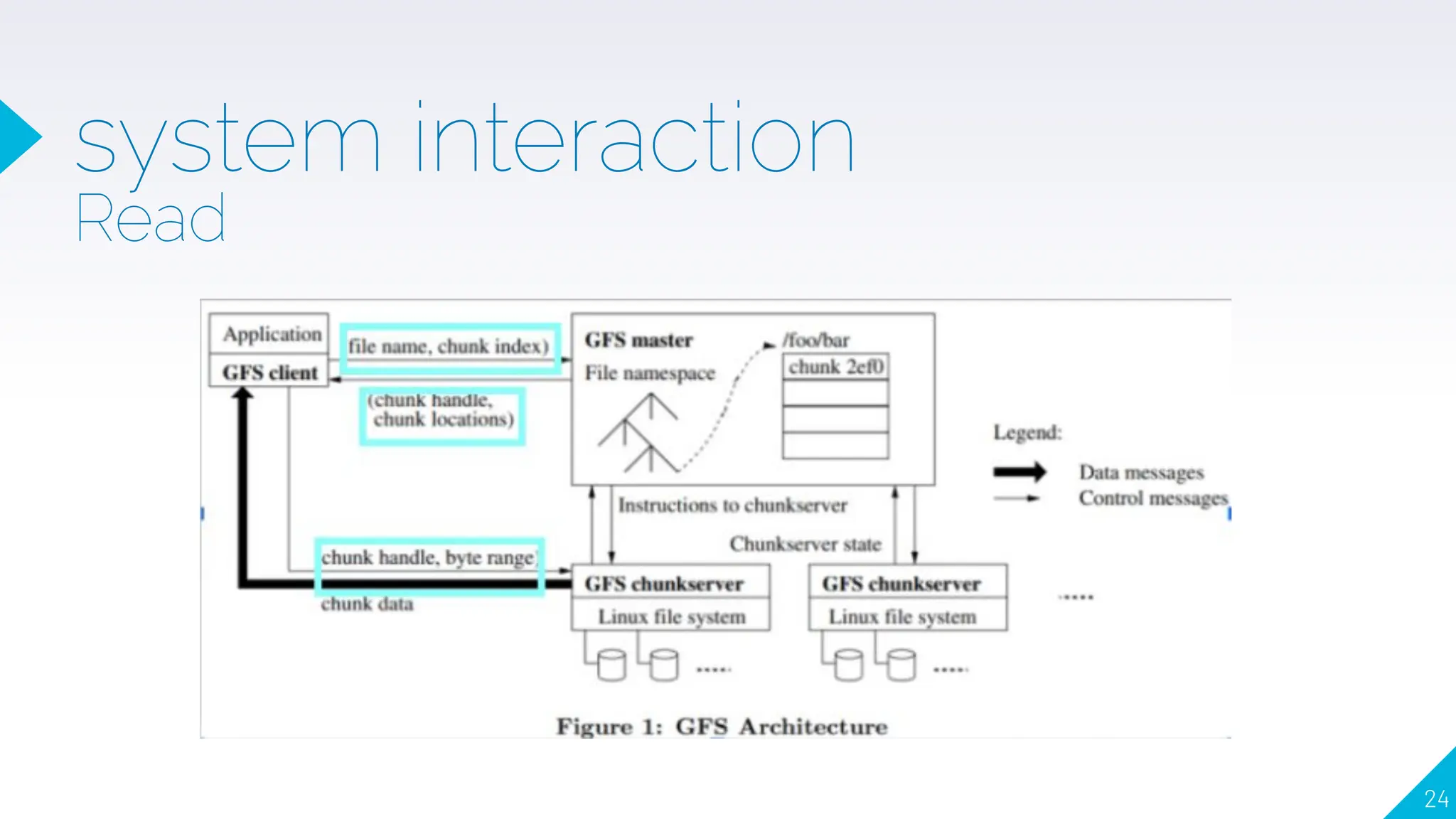

The document outlines the Google File System (GFS), a scalable distributed file system built for large, data-intensive applications running on inexpensive commodity hardware. It details the architecture, which consists of a single master and multiple chunkservers, and describes key features such as fault tolerance, chunk replication, and data integrity checks. The system treats hardware failure as routine rather than exceptional and maintains high availability, favoring large chunks and high sustained throughput over low latency.
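
To make the chunk and integrity ideas in the summary concrete, here is a minimal sketch rather than GFS's actual implementation. It assumes the 64 MB chunk size and 64 KB checksum block size given in the published GFS paper; the function names are hypothetical, and CRC32 is an assumption standing in for whatever 32-bit checksum the real system uses.

```python
import zlib

# Sizes taken from the published GFS paper; everything else is illustrative.
CHUNK_SIZE = 64 * 1024 * 1024  # fixed chunk size: 64 MB
BLOCK_SIZE = 64 * 1024         # checksum block size: 64 KB


def chunk_location(byte_offset: int) -> tuple[int, int]:
    """Translate a file byte offset into (chunk index, offset within chunk)."""
    if byte_offset < 0:
        raise ValueError("byte_offset must be non-negative")
    return divmod(byte_offset, CHUNK_SIZE)


def block_checksums(data: bytes) -> list[int]:
    """Compute one checksum per 64 KB block (CRC32 is an assumed stand-in)."""
    return [
        zlib.crc32(data[start:start + BLOCK_SIZE])
        for start in range(0, len(data), BLOCK_SIZE)
    ]


def find_corrupt_blocks(data: bytes, expected: list[int]) -> list[int]:
    """Return the indices of blocks whose checksum differs from the stored one."""
    actual = block_checksums(data)
    if len(actual) != len(expected):
        raise ValueError("block count does not match stored checksum count")
    return [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]
```

A client would use something like `chunk_location` to decide which chunk to ask the master about, while a chunkserver would run a check like `find_corrupt_blocks` before returning data, so that corruption on one replica is caught and the data can be read from another.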