Embed presentation

Downloaded 28 times

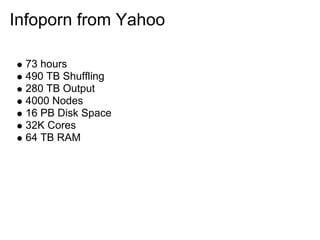

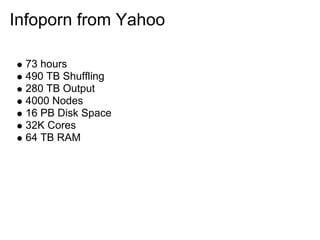

This presentation gives an overview of Hadoop, covering:

1. The problem Hadoop solves: analyzing very large datasets by distributing both storage and analysis across many machines, so that the system keeps working when individual nodes fail.
2. How it solves it: HDFS provides distributed storage by splitting large files into blocks spread across DataNodes and replicating each block for fault tolerance. MapReduce provides distributed analysis by sending the code to the nodes that hold the data, rather than moving the data to the code.
3. A walkthrough of the MapReduce concepts of map and reduce, and how they compose, using an example job.
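To make the map and reduce composition concrete, here is a minimal single-process sketch of a MapReduce job. It assumes a word-count job, which is a common teaching example and not necessarily the job the presentation uses. The functions `map_phase`, `shuffle`, `reduce_phase` and `run_job` are hypothetical names for illustration, not Hadoop's API (real Hadoop jobs implement Java `Mapper`/`Reducer` classes or use Hadoop Streaming). The sketch also runs everything locally, whereas Hadoop would run mappers on the nodes holding each HDFS block.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Hypothetical mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group intermediate values by key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Hypothetical reducer: sum the counts collected for one word."""
    return key, sum(values)


def run_job(lines: Iterable[str]) -> Dict[str, int]:
    """Compose map -> shuffle -> reduce over all input lines in a single process."""
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(intermediate)
    # Sorting keys mimics the sorted order in which reducers receive their keys.
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    docs = ["the quick brown fox", "the lazy dog", "The fox"]
    print(run_job(docs))
    # prints {'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}
```

Because each mapper call depends only on its own line and each reducer call depends only on one key's values, both phases can be spread across machines independently; only the shuffle step needs to move data between them.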