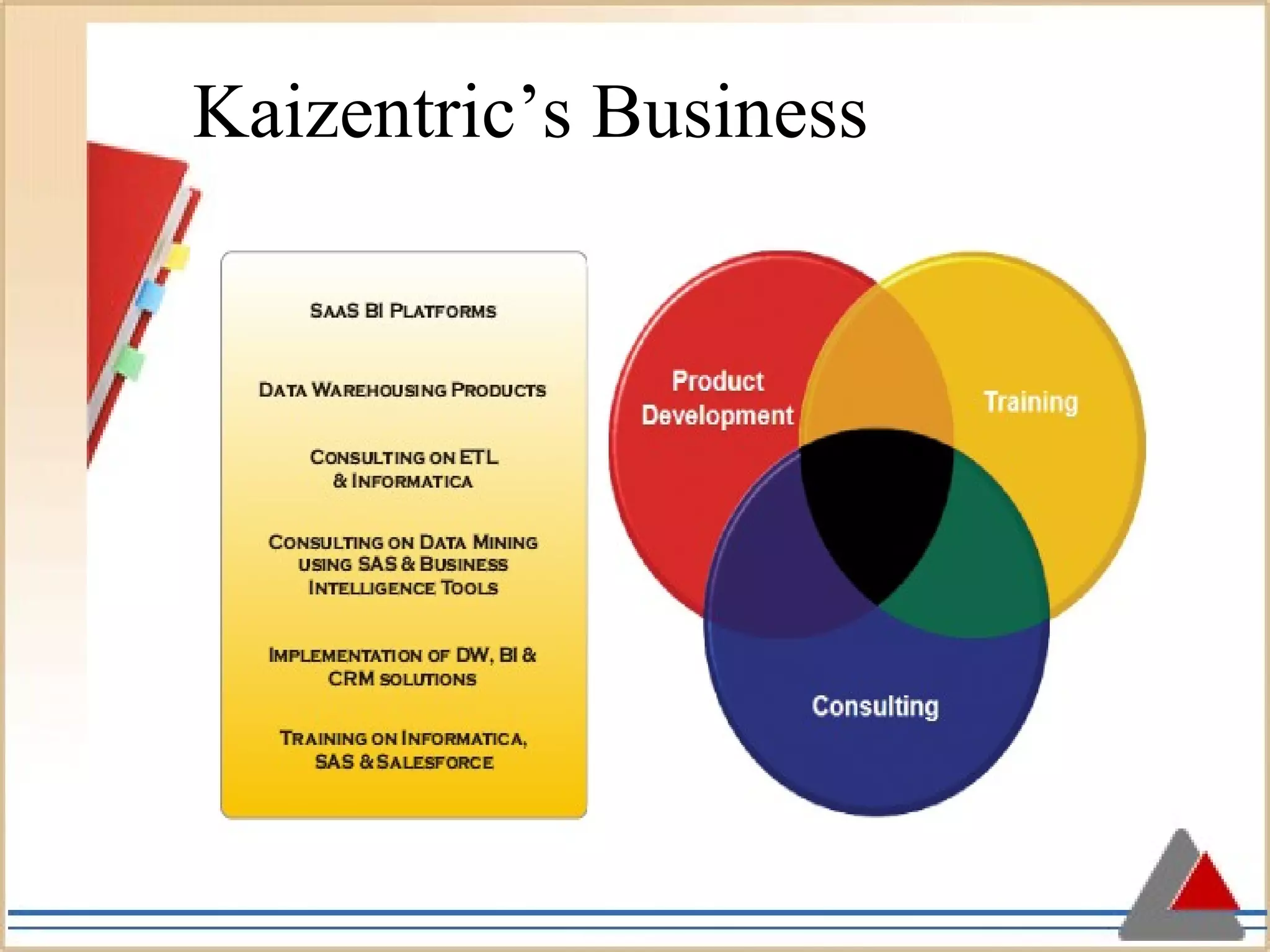

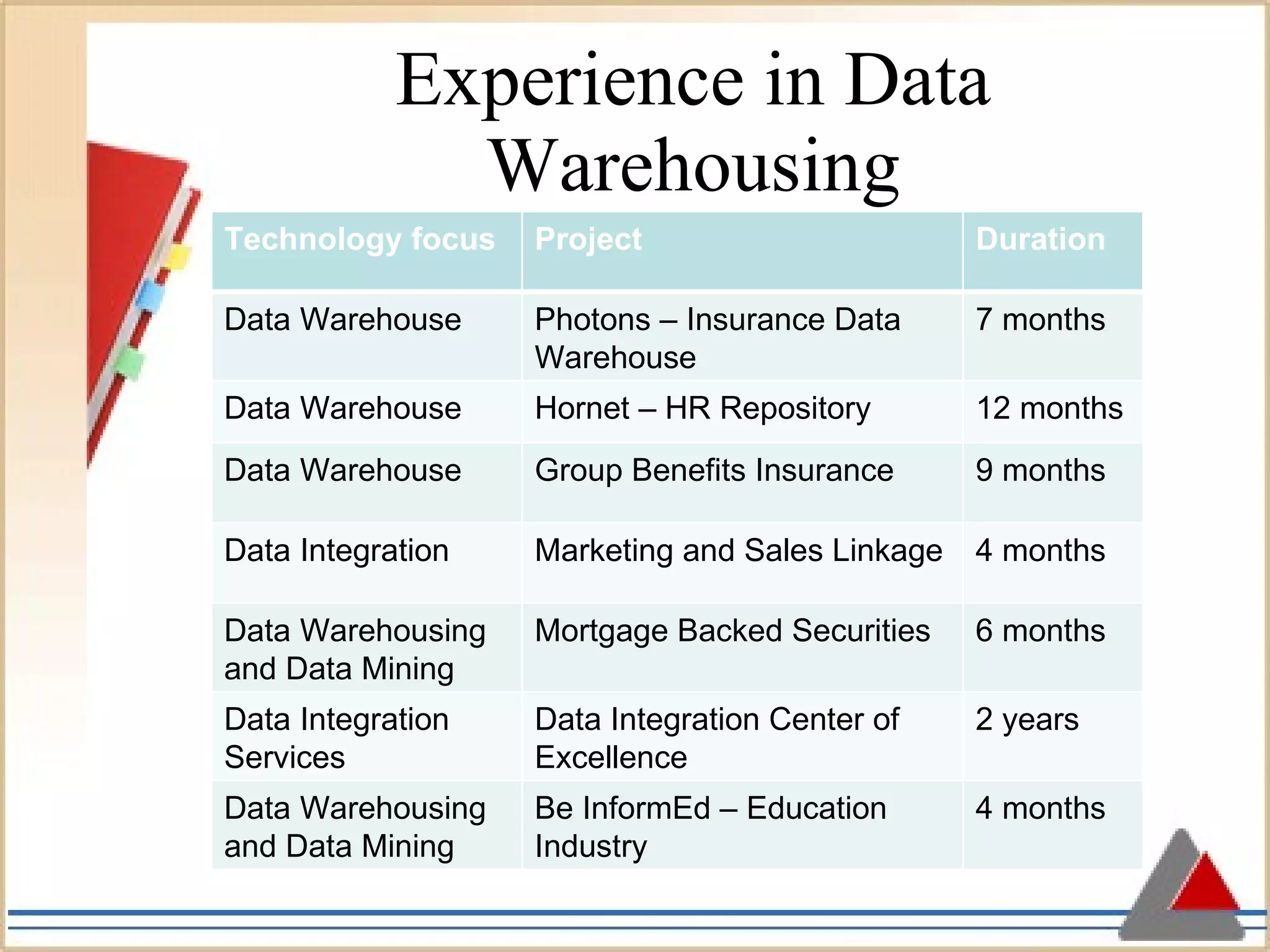

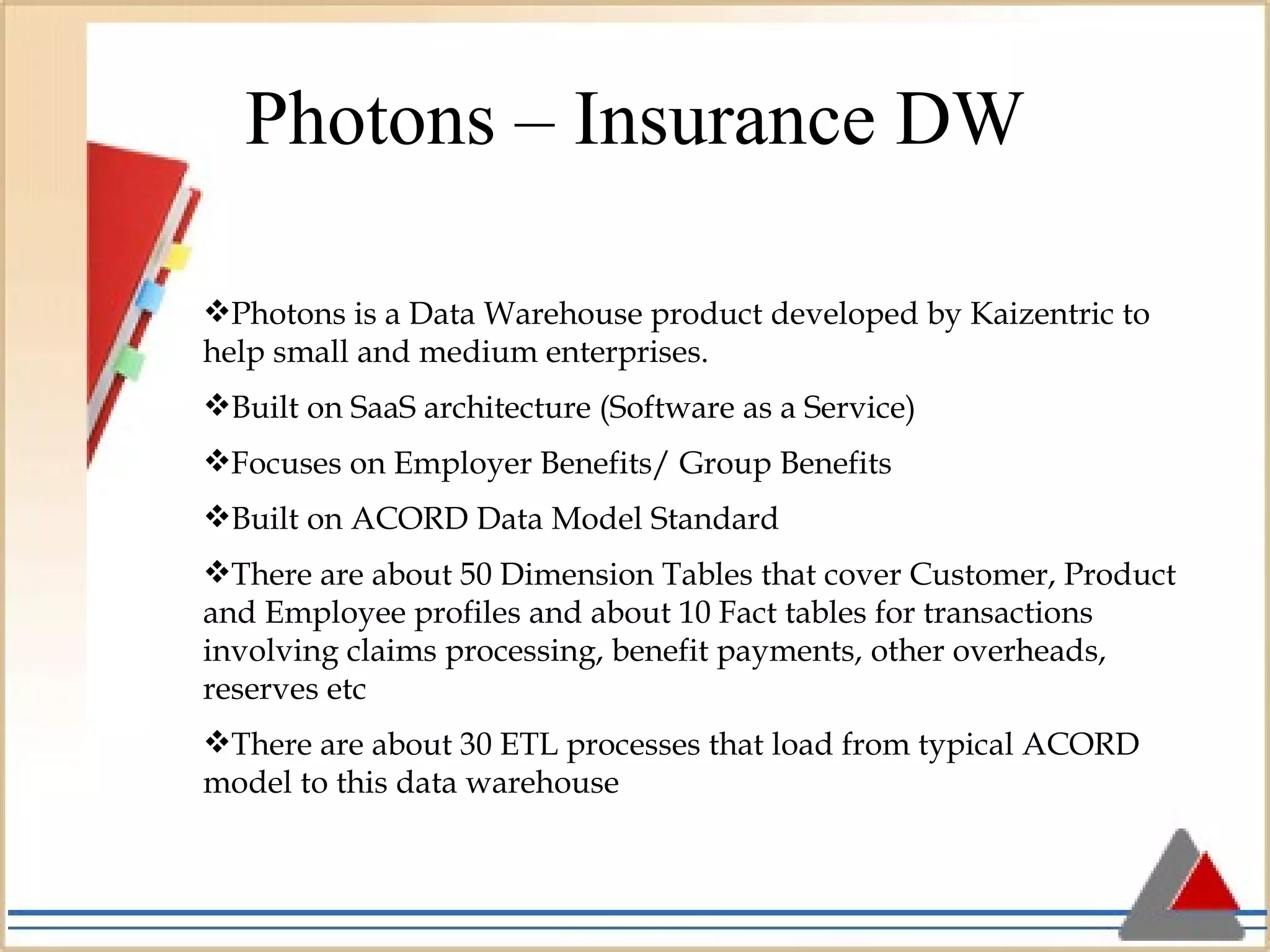

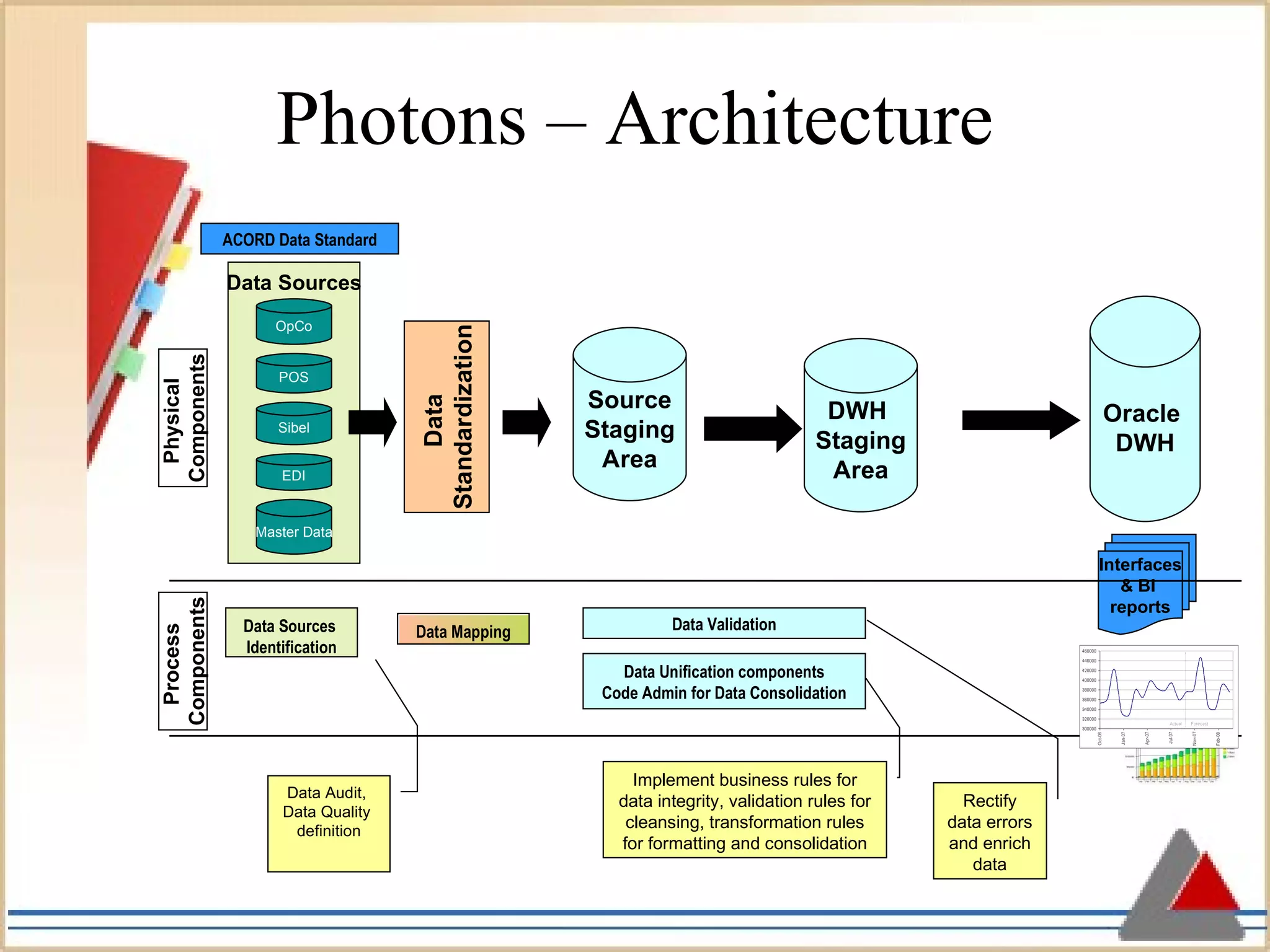

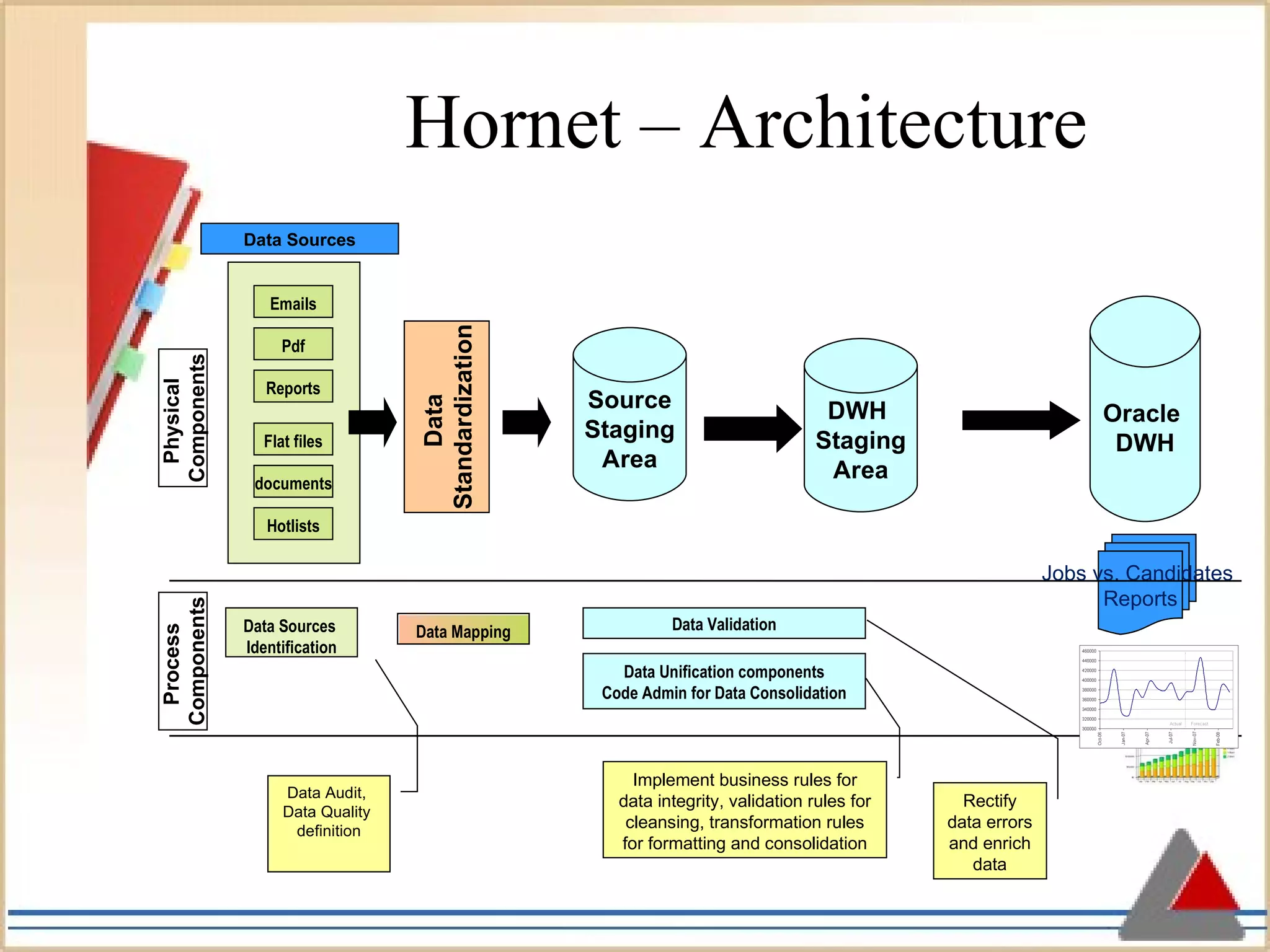

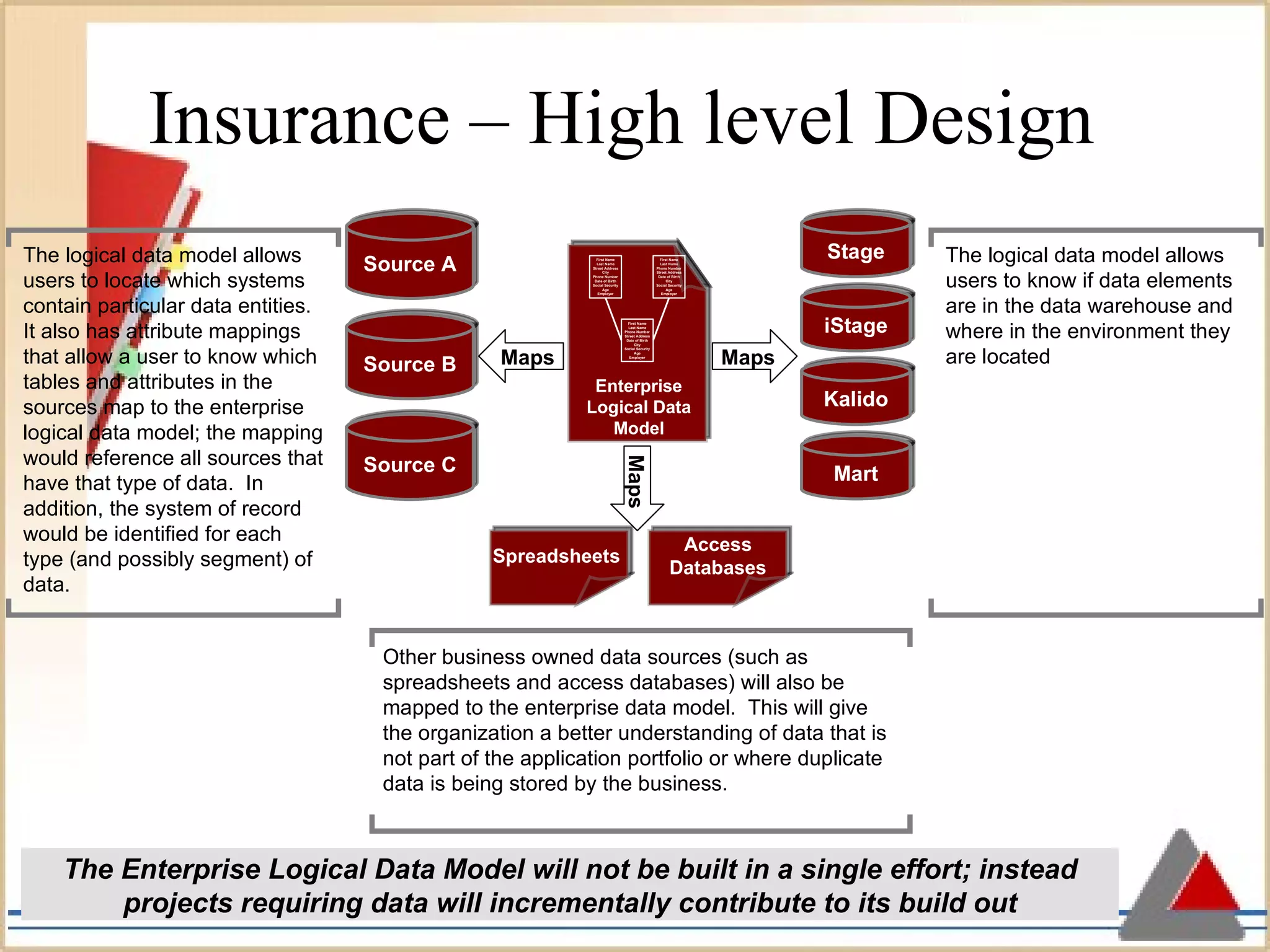

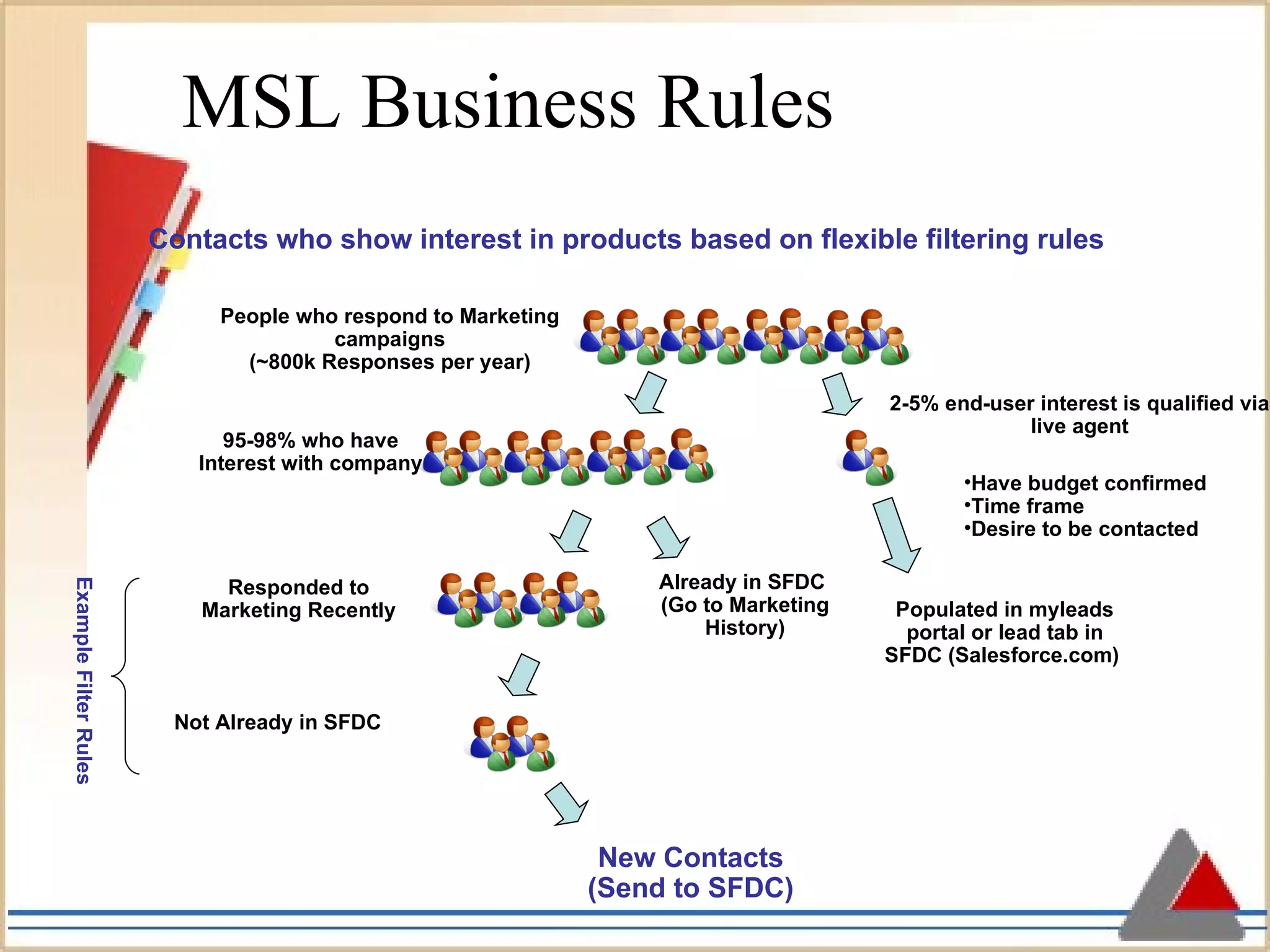

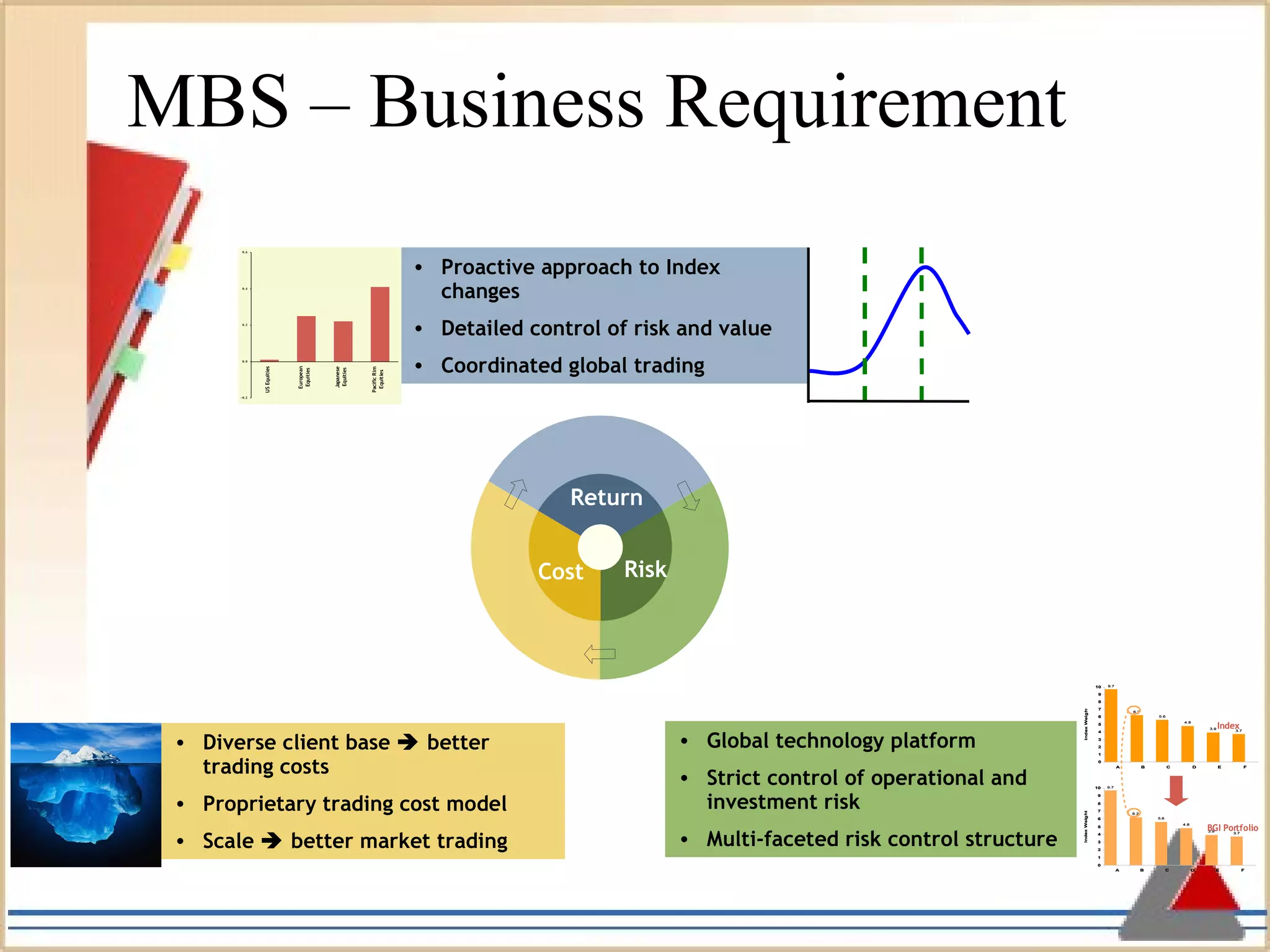

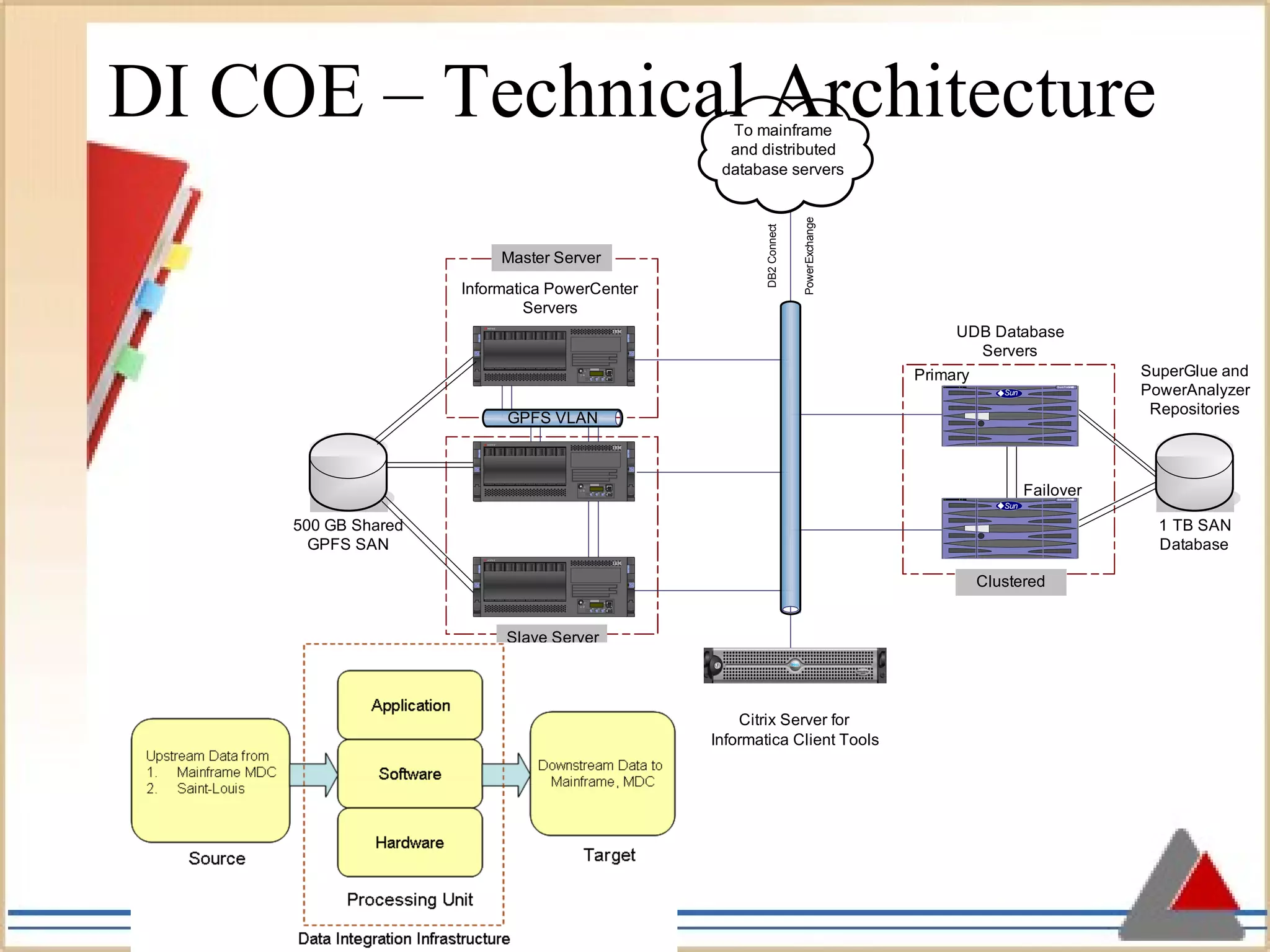

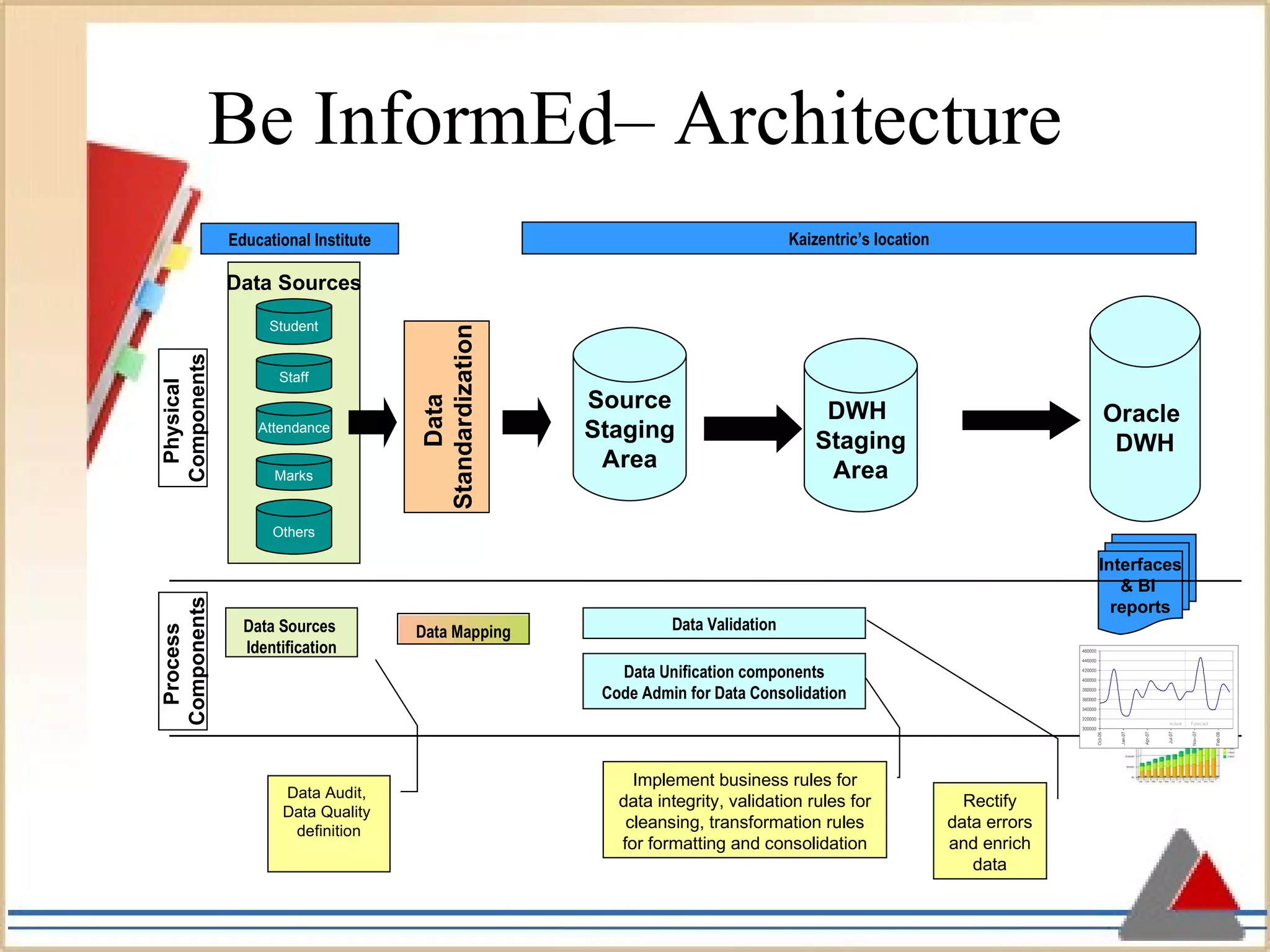

Kaizentric Technologies, founded in February 2008, specializes in data warehousing and business intelligence, primarily for the insurance sector, where it offers solutions designed to scale rapidly. Its data warehouse products, which include 'Photons' for insurance and 'Hornet' for HR recruitment, use ETL processes and a SaaS architecture. The company also conducts research and has developed data integration and data mining systems for several industries, working across multiple database and reporting tools.