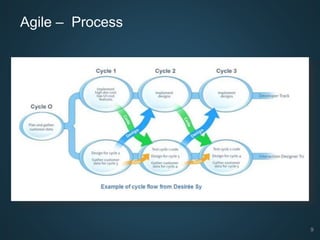

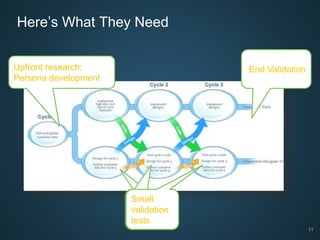

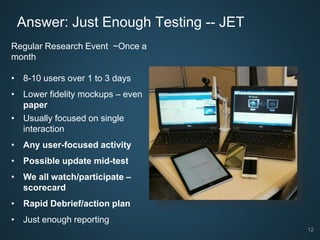

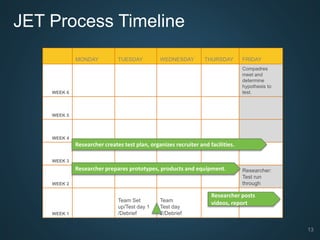

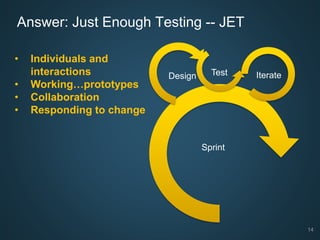

The document discusses how to conduct user experience research in an agile development environment. It proposes a method called Just Enough Testing (JET), in which user research runs in short, monthly testing cycles. Each cycle tests low-fidelity prototypes with 8-10 users over 1-3 days and ends with a rapid debrief and action plan. This lets iterative user testing inform product design while keeping pace with agile development.