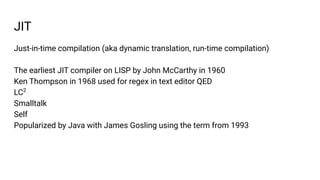

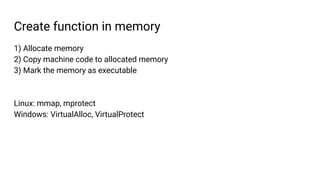

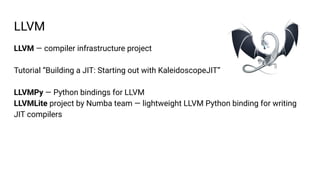

The document discusses JIT compilation in CPython. It begins with a brief history of JIT compilation, including early implementations in LISP and Smalltalk. The author then describes their experience with JIT compilation in CPython, including converting Python code to IL assembly and machine code. Benchmarks show the JIT compiled Fibonacci function is around 8 times faster than the unoptimized version. Finally, the document briefly mentions the Numba project, which uses JIT compilation to accelerate Python code.

![AST

Module(body=[

FunctionDef(name='fibonacci', args=arguments(args=[Name(id='n', ctx=Param())],

vararg=None, kwarg=None, defaults=[]), body=[

Expr(value=Str(s='Returns n-th Fibonacci number')),

Assign(targets=[Name(id='a', ctx=Store())], value=Num(n=0)),

Assign(targets=[Name(id='b', ctx=Store())], value=Num(n=1)),

If(test=Compare(left=Name(id='n', ctx=Load()), ops=[Lt()], comparators=[Num(n=1)]), body=[

Return(value=Name(id='a', ctx=Load()))

], orelse=[]),

Assign(targets=[Name(id='i', ctx=Store())], value=Num(n=0)),

While(test=Compare(left=Name(id='i', ctx=Load()), ops=[Lt()], comparators=[Name(id='n', ctx=Load())]), body=[

Assign(targets=[Name(id='temp', ctx=Store())], value=Name(id='a', ctx=Load())),

Assign(targets=[Name(id='a', ctx=Store())], value=Name(id='b', ctx=Load())),

Assign(targets=[Name(id='b', ctx=Store())], value=BinOp(

left=Name(id='temp', ctx=Load()), op=Add(), right=Name(id='b', ctx=Load()))),

AugAssign(target=Name(id='i', ctx=Store()), op=Add(), value=Num(n=1))

], orelse=[]),

Return(value=Name(id='a', ctx=Load()))

], decorator_list=[Name(id='jit', ctx=Load())])

])](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-18-320.jpg)

![AST to IL ASM

class Visitor(ast.NodeVisitor):

def __init__(self):

self.ops = []

...

...

def visit_Assign(self, node):

if isinstance(node.value, ast.Num):

self.ops.append('MOV <{}>, {}'.format(node.targets[0].id, node.value.n))

elif isinstance(node.value, ast.Name):

self.ops.append('MOV <{}>, <{}>'.format(node.targets[0].id, node.value.id))

elif isinstance(node.value, ast.BinOp):

self.ops.extend(self.visit_BinOp(node.value))

self.ops.append('MOV <{}>, <{}>'.format(node.targets[0].id, node.value.left.id))

...](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-19-320.jpg)

![AST to IL ASM

class Visitor(ast.NodeVisitor):

def __init__(self):

self.ops = []

...

...

def visit_Assign(self, node):

if isinstance(node.value, ast.Num):

self.ops.append('MOV <{}>, {}'.format(node.targets[0].id, node.value.n))

elif isinstance(node.value, ast.Name):

self.ops.append('MOV <{}>, <{}>'.format(node.targets[0].id, node.value.id))

elif isinstance(node.value, ast.BinOp):

self.ops.extend(self.visit_BinOp(node.value))

self.ops.append('MOV <{}>, <{}>'.format(node.targets[0].id, node.value.left.id))

...

...

Assign(

targets=[Name(id='i', ctx=Store())],

value=Num(n=0)

),

Assign(

targets=[Name(id='a', ctx=Store())],

value=Name(id='b', ctx=Load())

),

...

...

MOV <i>, 0

...](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-20-320.jpg)

![AST to IL ASM

class Visitor(ast.NodeVisitor):

def __init__(self):

self.ops = []

...

...

def visit_Assign(self, node):

if isinstance(node.value, ast.Num):

self.ops.append('MOV <{}>, {}'.format(node.targets[0].id, node.value.n))

elif isinstance(node.value, ast.Name):

self.ops.append('MOV <{}>, <{}>'.format(node.targets[0].id, node.value.id))

elif isinstance(node.value, ast.BinOp):

self.ops.extend(self.visit_BinOp(node.value))

self.ops.append('MOV <{}>, <{}>'.format(node.targets[0].id, node.value.left.id))

...

...

Assign(

targets=[Name(id='i', ctx=Store())],

value=Num(n=0)

),

Assign(

targets=[Name(id='a', ctx=Store())],

value=Name(id='b', ctx=Load())

),

...

...

MOV <i>, 0

MOV <a>, <b>

...](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-21-320.jpg)

![IL ASM to ASM

MOV <a>, 0

MOV <b>, 1

CMP <n>, 1

JNL label0

RET

label0:

MOV <i>, 0

loop0:

MOV <temp>, <a>

MOV <a>, <b>

ADD <temp>, <b>

MOV <b>, <temp>

INC <i>

CMP <i>, <n>

JL loop0

RET

# for x64 system

args_registers = ['rdi', 'rsi', 'rdx', ...]

registers = ['rax', 'rbx', 'rcx', ...]

# return register: rax

def fibonacci(n): n ⇔ rdi

...

return a a ⇔ rax](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-23-320.jpg)

![Create function in memory

mmap_func = libc.mmap

mmap_func.argtype = [ctypes.c_void_p, ctypes.c_size_t, ctypes.c_int,

ctypes.c_int, ctypes.c_int, ctypes.c_size_t]

mmap_func.restype = ctypes.c_void_p

memcpy_func = libc.memcpy

memcpy_func.argtypes = [ctypes.c_void_p, ctypes.c_void_p, ctypes.c_size_t]

memcpy_func.restype = ctypes.c_char_p](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-34-320.jpg)

![Create function in memory

machine_code = 'x48xc7xc0x00x00x00x00x48xc7xc3x01x00x00x00x48

x83xffx01x7dx01xc3x48xc7xc1x00x00x00x00x48x89xc2x48x89xd8

x48x01xdax48x89xd3x48xffxc1x48x39xf9x7cxecxc3'

machine_code_size = len(machine_code)

addr = mmap_func(None, machine_code_size, PROT_READ | PROT_WRITE | PROT_EXEC,

MAP_ANONYMOUS | MAP_PRIVATE, -1, 0)

memcpy_func(addr, machine_code, machine_code_size)

func = ctypes.CFUNCTYPE(ctypes.c_uint64)(addr)

func.argtypes = [ctypes.c_uint32]](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-35-320.jpg)

![from numba import jit

import numpy as np

@jit(nopython=True) # Set "nopython" mode for best performance, equivalent to @njit

def go_fast(a): # Function is compiled to machine code when called the first time

trace = 0

for i in range(a.shape[0]): # Numba likes loops

trace += np.tanh(a[i, i]) # Numba likes NumPy functions

return a + trace # Numba likes NumPy broadcasting

@cuda.jit

def matmul(A, B, C):

"""Perform square matrix multiplication of C = A * B

"""

i, j = cuda.grid(2)

if i < C.shape[0] and j < C.shape[1]:

tmp = 0.

for k in range(A.shape[1]):

tmp += A[i, k] * B[k, j]

C[i, j] = tmp](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-43-320.jpg)

![x86-64 assembler embedded in Python

Portable Efficient Assembly Code-generator in Higher-level Python

PeachPy

from peachpy.x86_64 import *

ADD(eax, 5).encode()

# bytearray(b'x83xc0x05')

MOVAPS(xmm0, xmm1).encode_options()

# [bytearray(b'x0f(xc1'), bytearray(b'x0f)xc8')]

VPSLLVD(ymm0, ymm1, [rsi + 8]).encode_length_options()

# {6: bytearray(b'xc4xe2uGFx08'),

# 7: bytearray(b'xc4xe2uGD&x08'),

# 9: bytearray(b'xc4xe2uGx86x08x00x00x00')}](https://image.slidesharecdn.com/jitforcpython-191218222831/85/JIT-compilation-for-CPython-45-320.jpg)