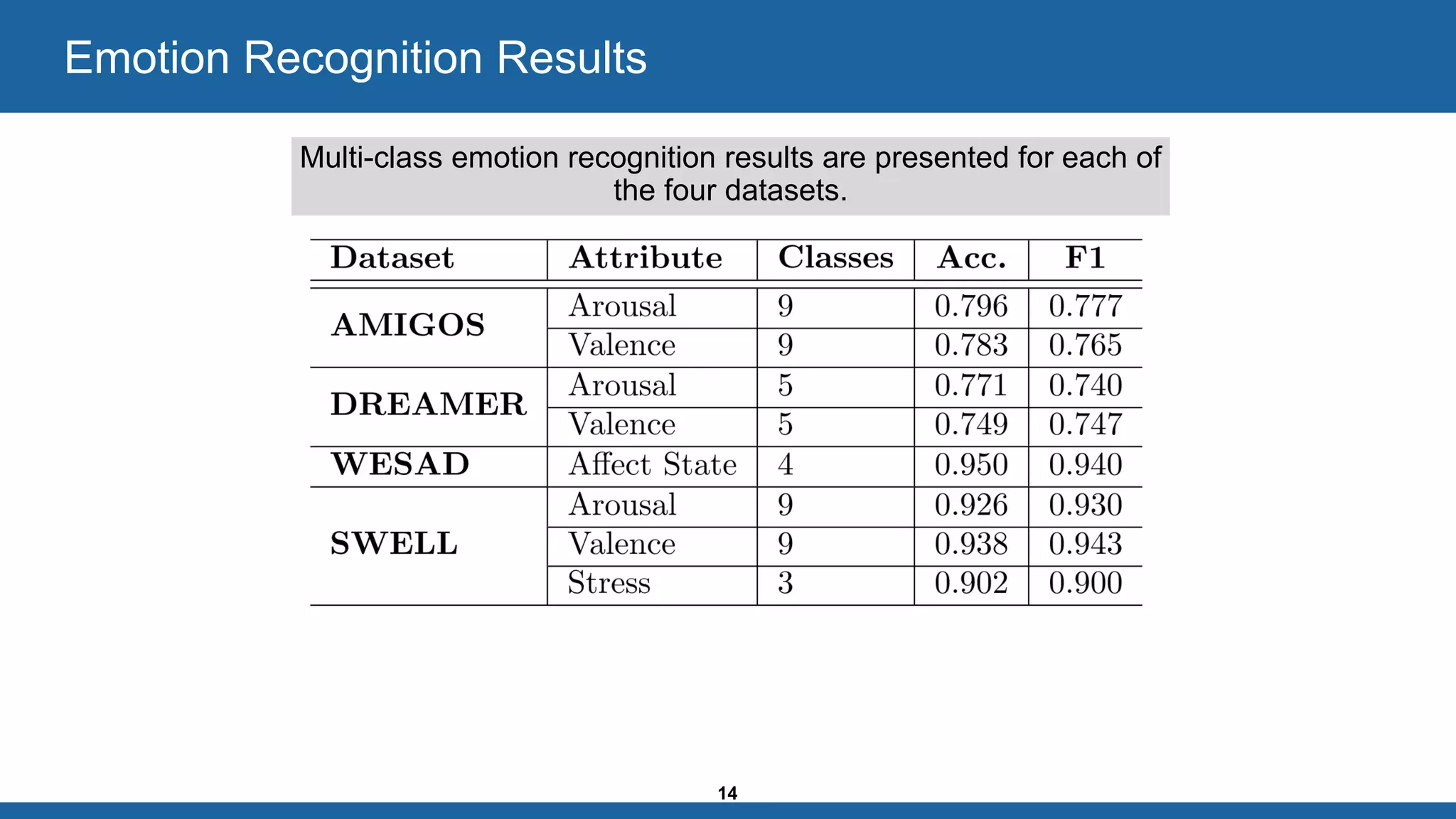

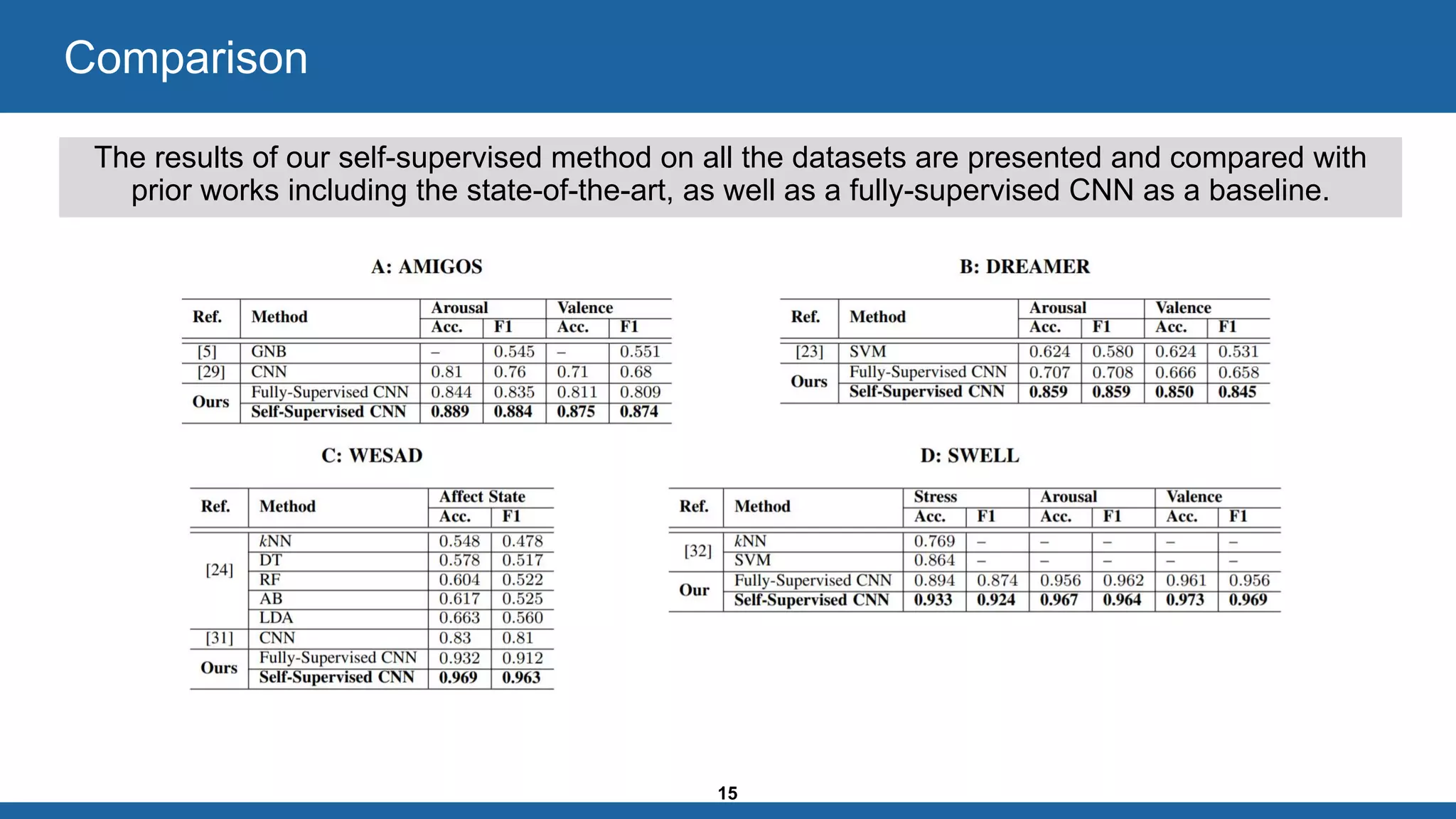

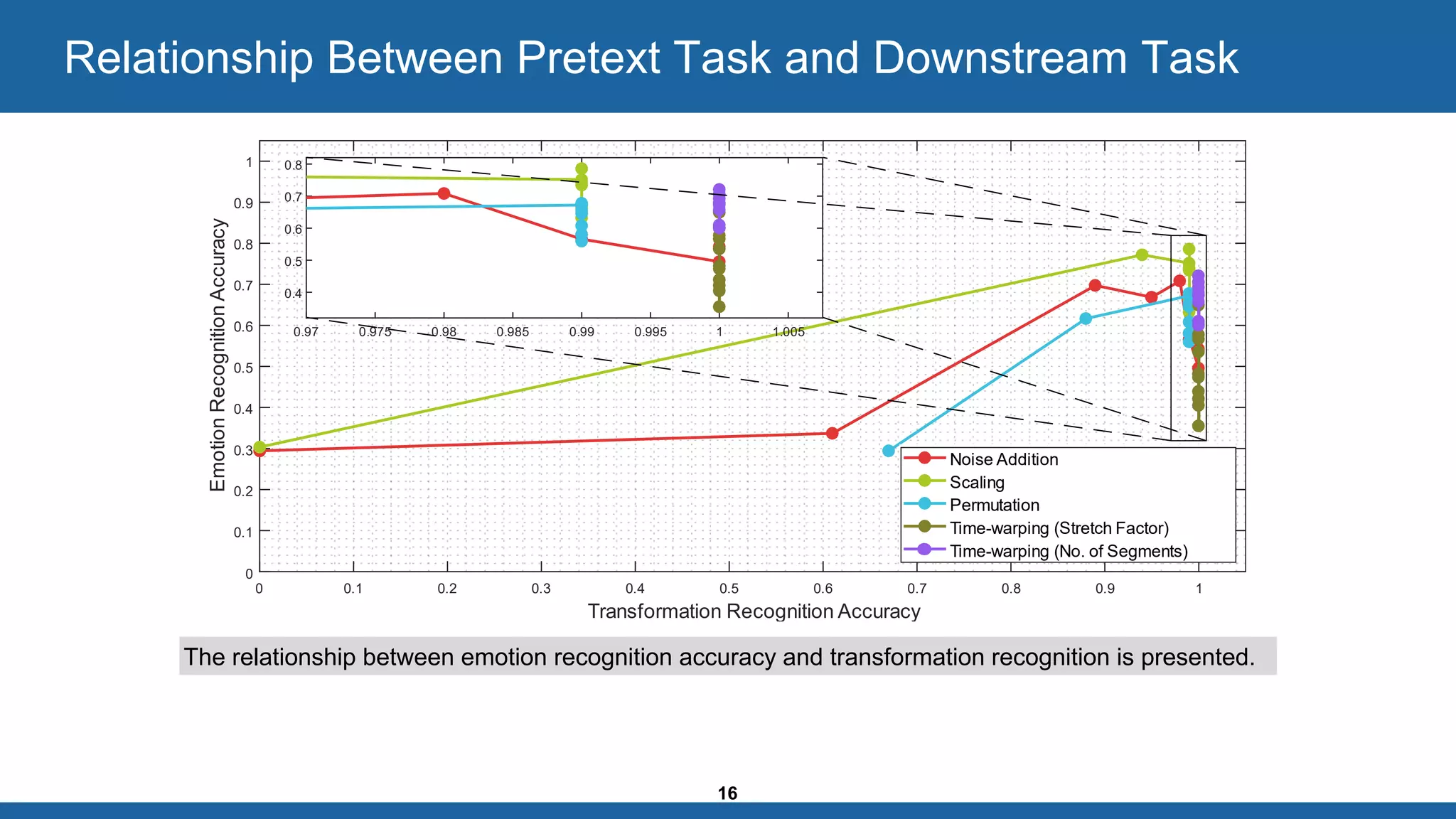

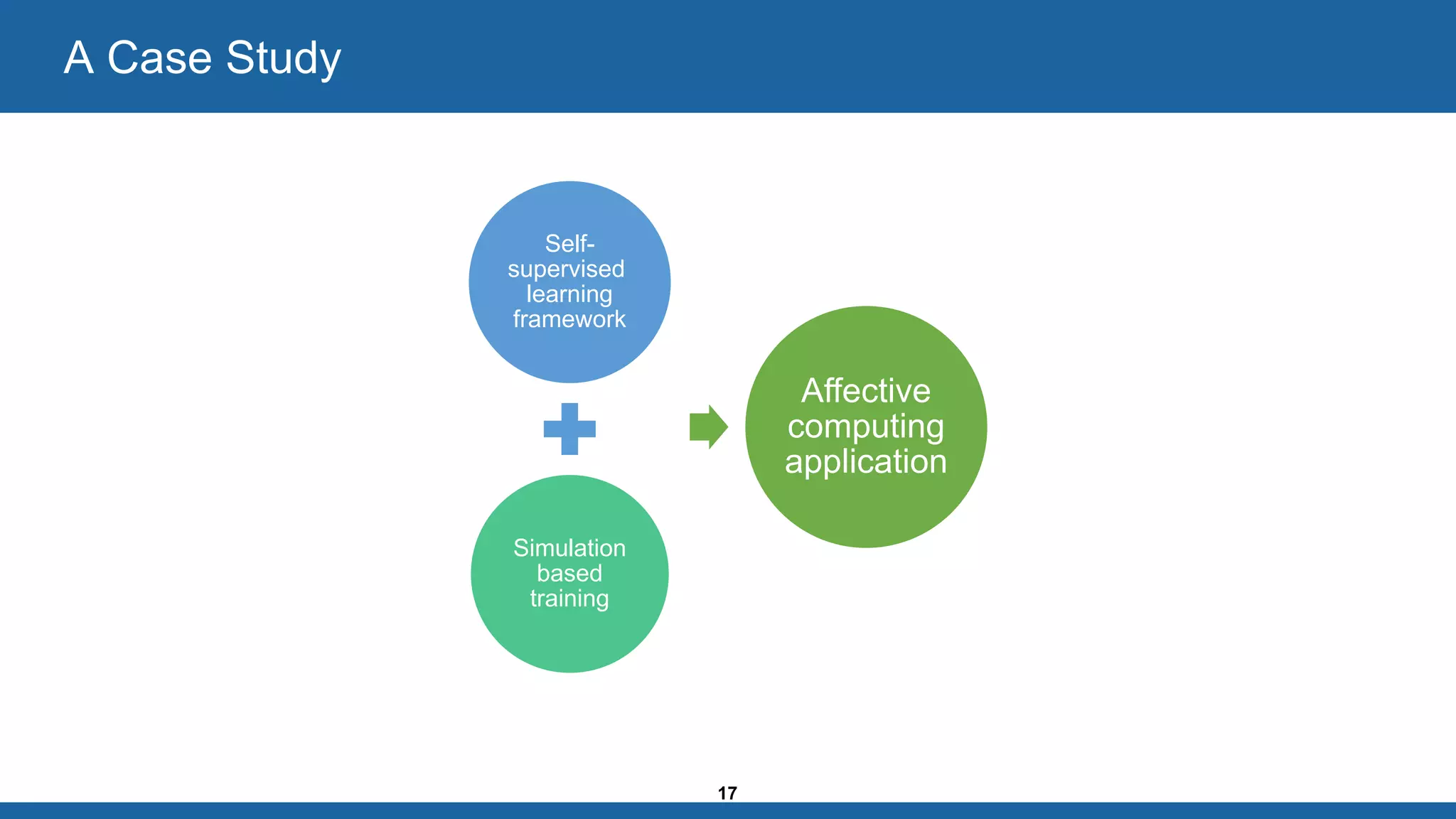

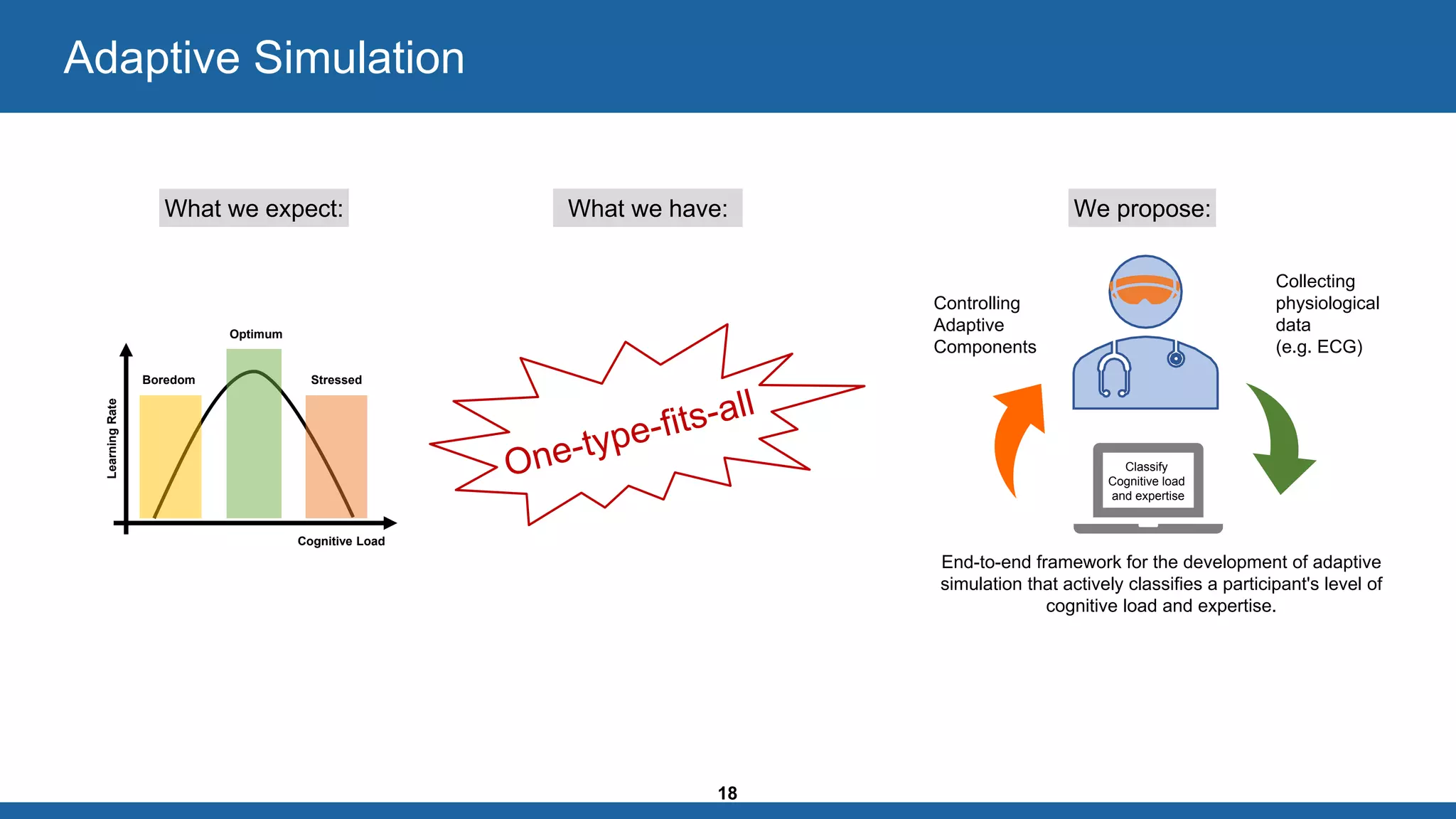

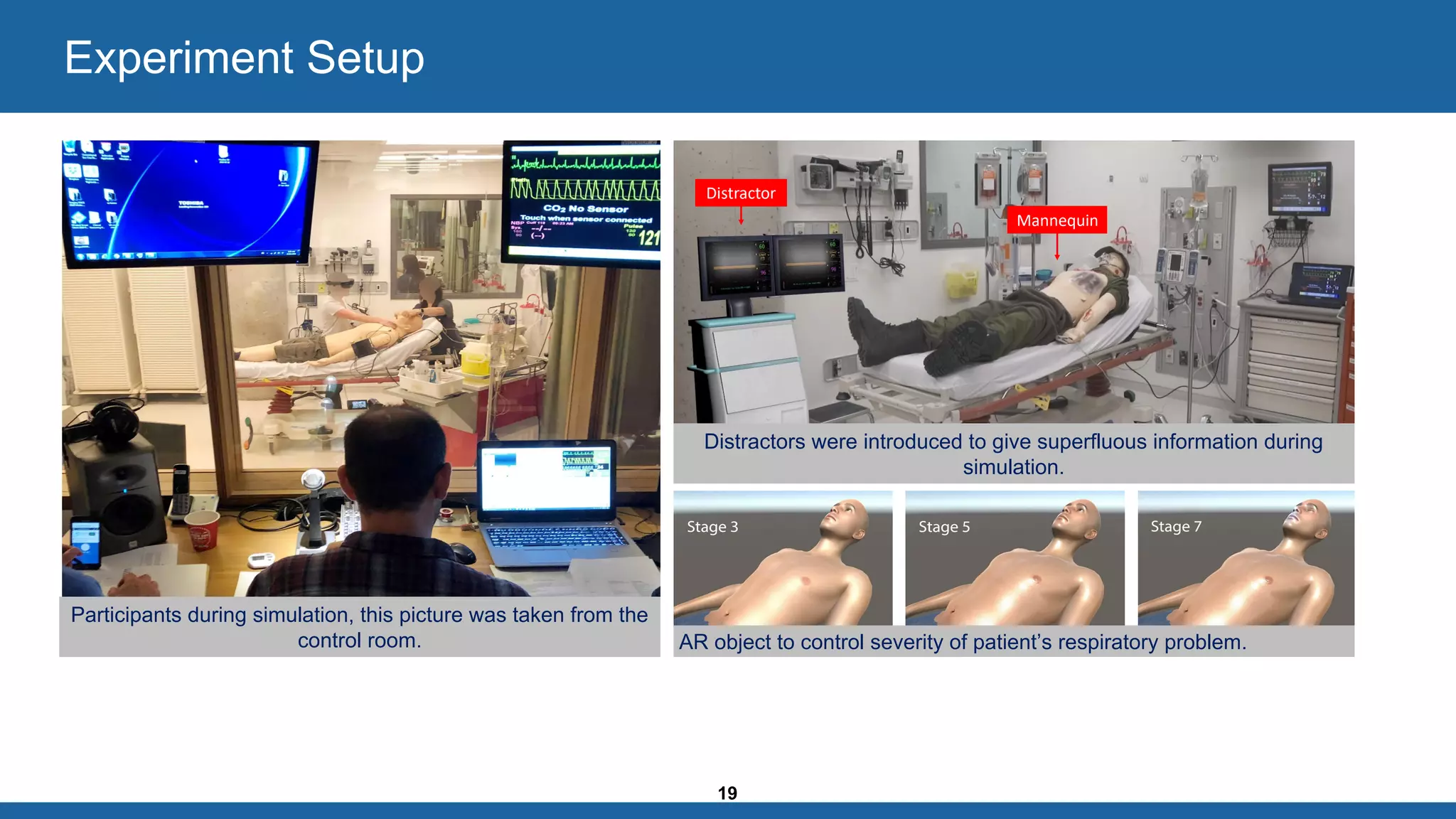

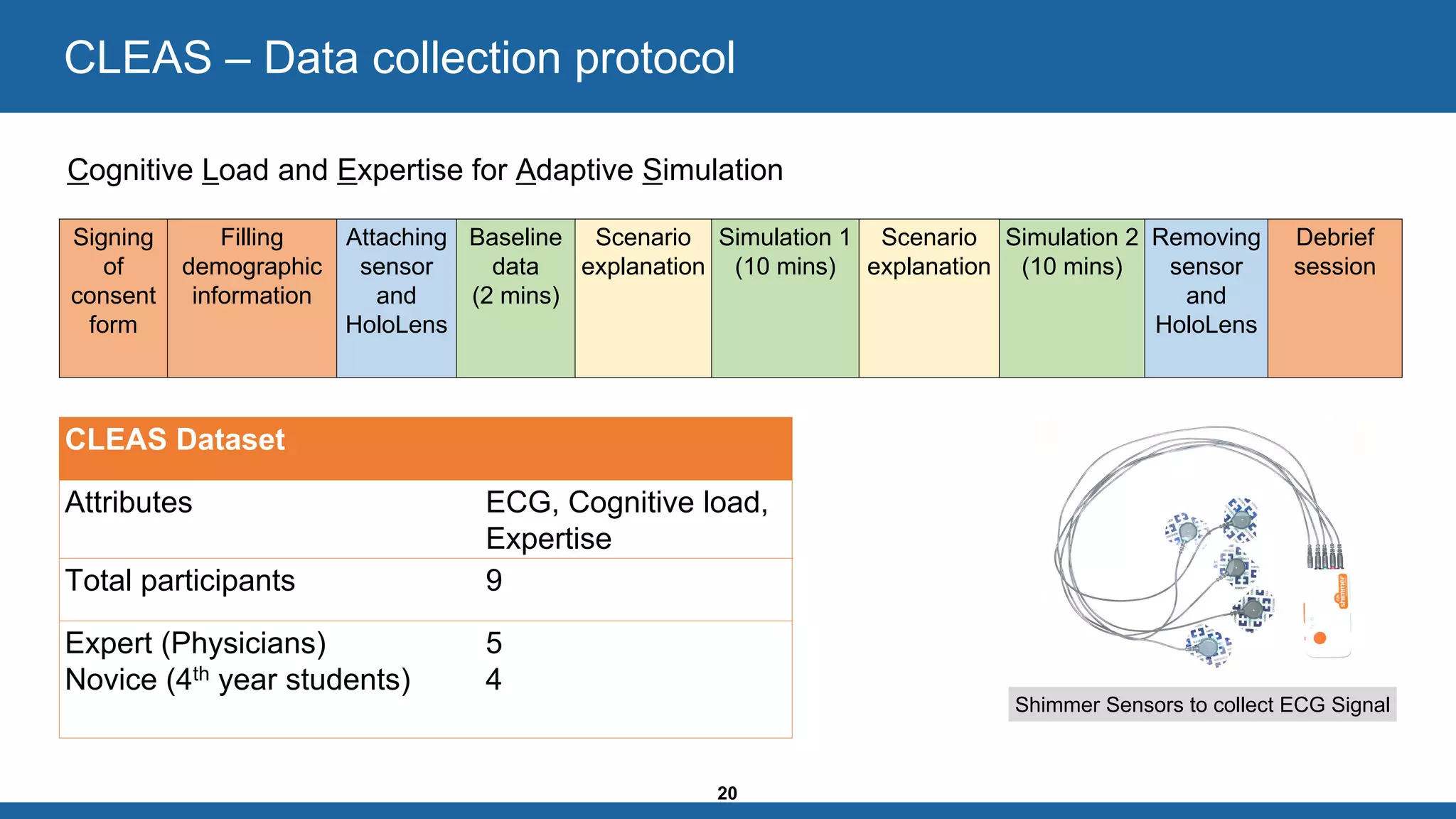

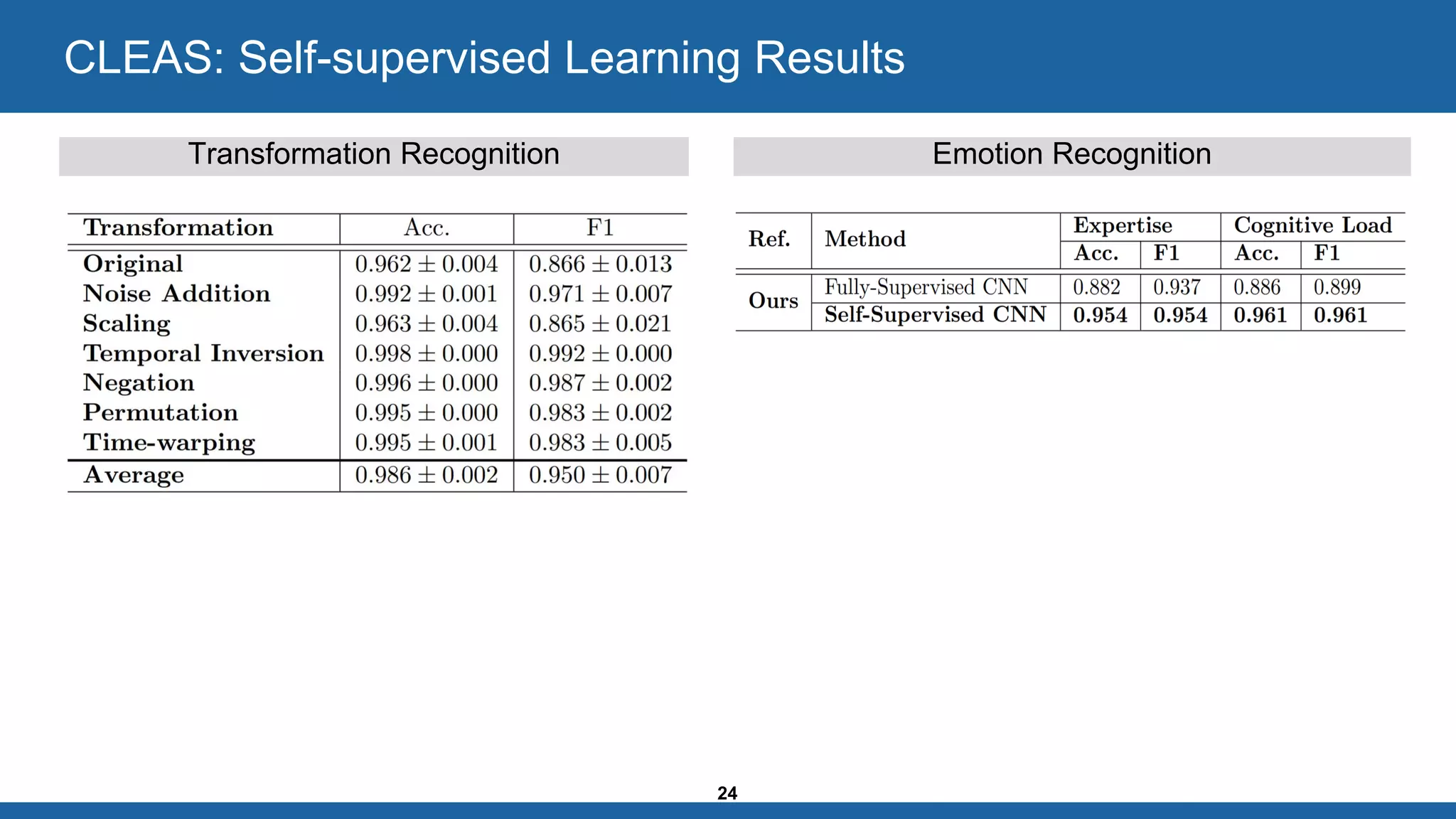

The document presents a self-supervised framework for learning ECG representations for emotion recognition, and reports state-of-the-art results on four public datasets. It contrasts this with fully-supervised learning, highlighting its limitations, and emphasizes the advantages of the self-supervised approach, such as learning high-level, generalizable representations from automatically generated labels. The document also discusses a novel adaptive simulation framework for training trauma responders, which adjusts dynamically to the trainee's cognitive load and expertise.

![9

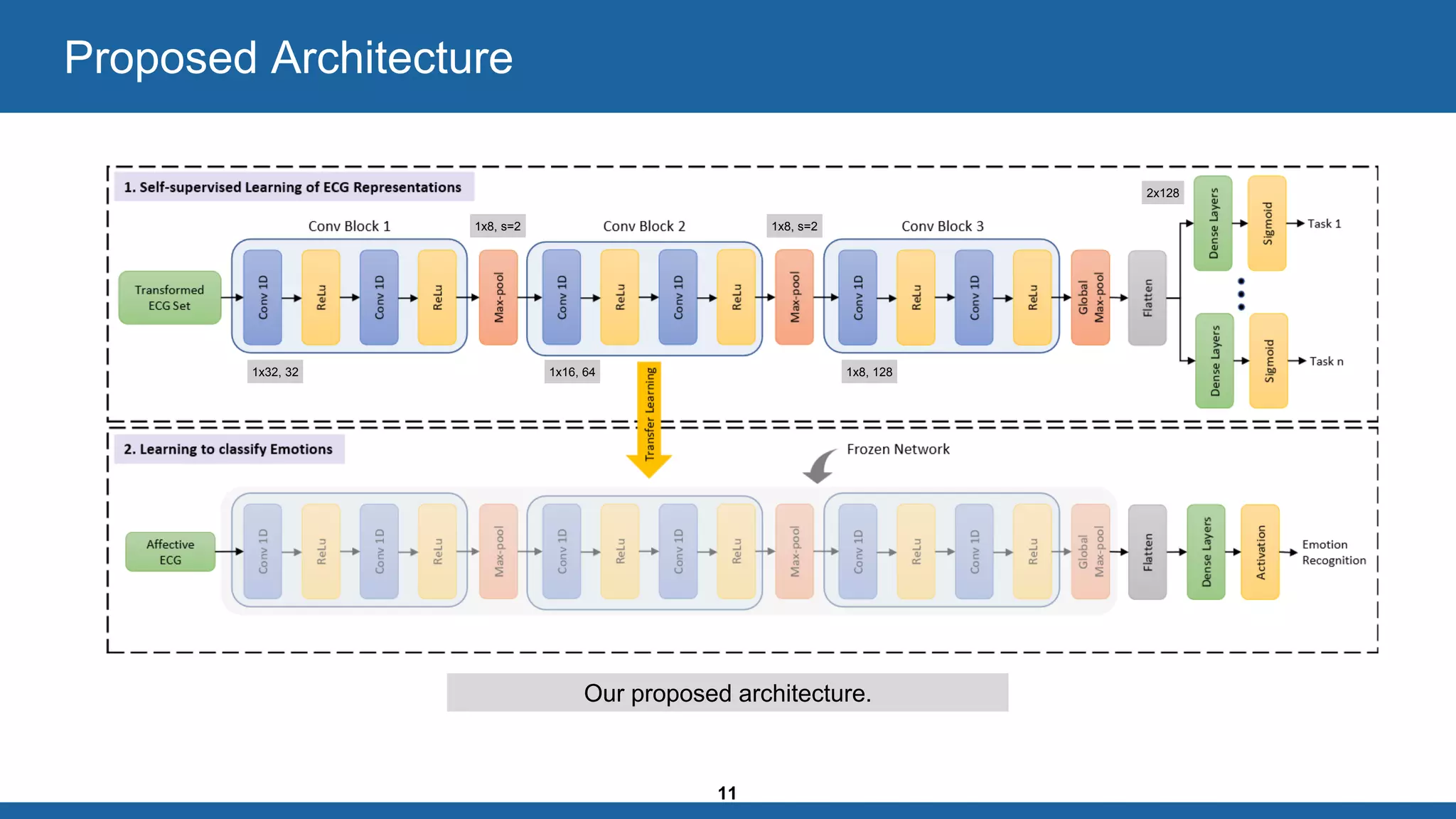

Proposed Framework

Stage 1: Pretext Task

Stage 2: Downstream Task

Transformation

Multi-task Self-supervised Network

[Xj, Pj]

Emotion Recognition

[RECG,yi]

Pseudo

Labels

Learned ECG Representation

Unlabelled

ECG

Transformed

ECG

ECG

Our proposed framework.

Affective

ECG](https://image.slidesharecdn.com/pritamsarkarmascthesispresentationaiim-200505021103/75/Self-supervised-ECG-Representation-Learning-for-Affective-Computing-9-2048.jpg)
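As a rough illustration of Stage 1, the sketch below shows one way the pseudo-labelled pairs [Xj, Pj] could be produced from an unlabelled signal. The function name `make_pretext_pairs`, the integer label coding (0 for the original, k for the k-th transformation), and the sine wave standing in for an ECG window are all assumptions made for this example; the slides do not specify how the labels are encoded.

```python
import numpy as np


def make_pretext_pairs(signal, transforms):
    """Return (X, P): the original plus transformed signals, and pseudo labels.

    Assumed label coding: 0 marks the untouched signal, k marks the
    output of the k-th transformation in `transforms`.
    """
    signals = [signal] + [transform(signal) for transform in transforms]
    labels = np.arange(len(signals))
    return np.stack(signals), labels


def negate(x):
    # Flip the sign of every sample.
    return -x


def invert_time(x):
    # Reverse the order of the samples.
    return x[::-1]


# Synthetic stand-in for one unlabelled ECG window (not real data).
ecg = np.sin(np.linspace(0.0, 4.0 * np.pi, 256))
X, P = make_pretext_pairs(ecg, [negate, invert_time])
```

No manual annotation is involved at any point: the labels follow directly from which transformation was applied, which is what lets the pretext task use unlabelled ECG.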

![10

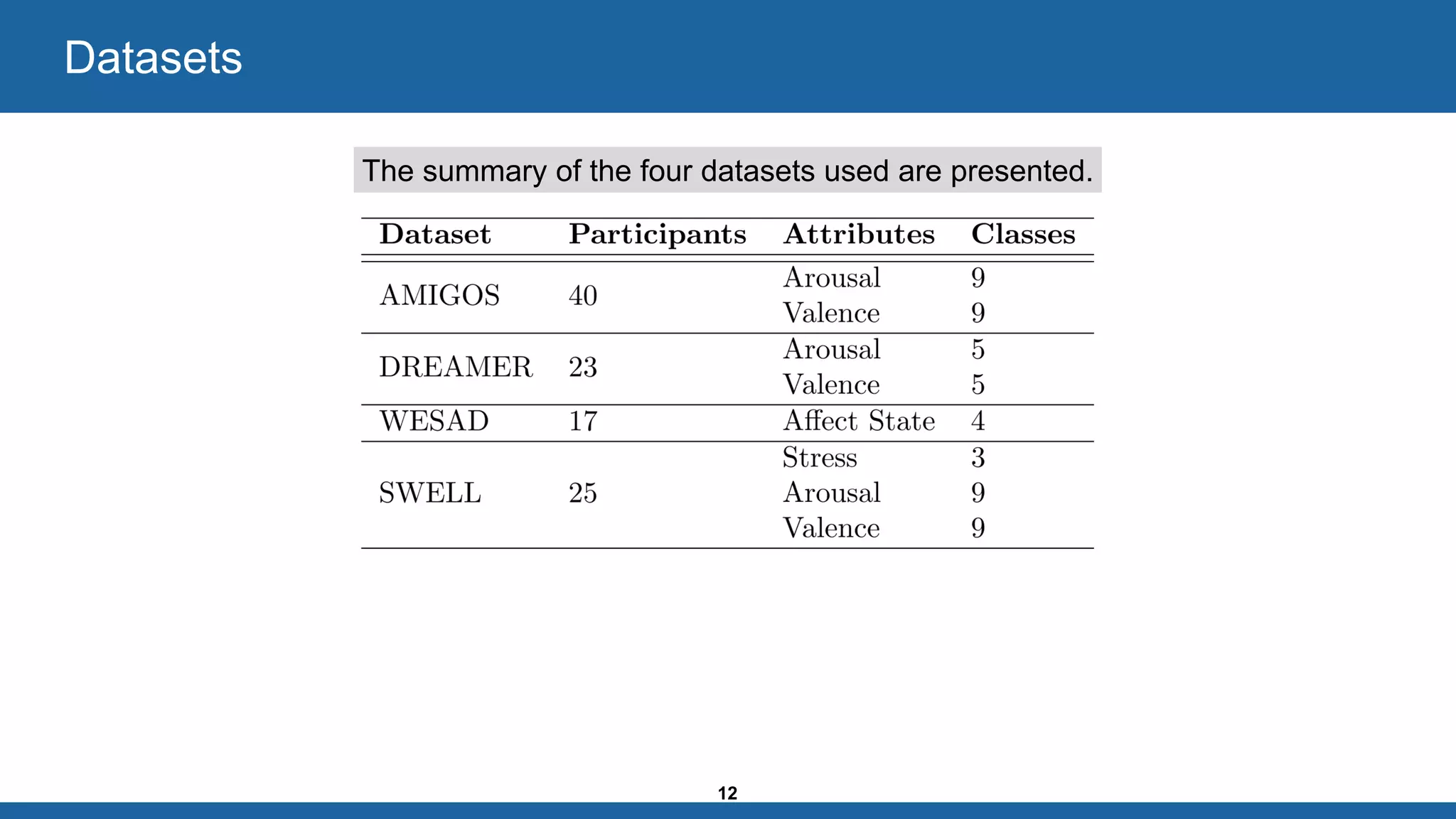

❑ Noise Addition [SNR = 15]

❑ Scaling [scaling factor = 0.9]

❑ Negation

❑ Temporal Inversion

❑ Permutation [no. of segments = 20]

❑ Time-warping [no. of segments=9,

stretching factor = 1.05]

Transformations

A sample of an original ECG signal with the six transformed

signals along with automatically generated labels are presented.](https://image.slidesharecdn.com/pritamsarkarmascthesispresentationaiim-200505021103/75/Self-supervised-ECG-Representation-Learning-for-Affective-Computing-10-2048.jpg)
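The six transformations named on this slide can be sketched in NumPy as follows. This is an illustrative reading of the slide, not the author's implementation: the function names are hypothetical, the SNR is assumed to be in decibels, the noise is assumed to be Gaussian, and time-warping is assumed to alternate between stretching and squeezing segments before resampling to the original length. The default parameter values are the ones given on the slide.

```python
import numpy as np

rng = np.random.default_rng(0)


def add_noise(x, snr_db=15.0):
    # Gaussian noise whose power gives the requested SNR (assumed to be in dB).
    noise_power = np.mean(x ** 2) / (10.0 ** (snr_db / 10.0))
    return x + rng.normal(0.0, np.sqrt(noise_power), size=x.shape)


def scale(x, factor=0.9):
    # Multiply the amplitude by a constant factor.
    return x * factor


def negate(x):
    # Flip the signal about the time axis.
    return -x


def invert_time(x):
    # Reverse the sample order.
    return x[::-1].copy()


def permute(x, n_segments=20):
    # Split into segments and concatenate them in a random order.
    segments = np.array_split(x, n_segments)
    order = rng.permutation(n_segments)
    return np.concatenate([segments[i] for i in order])


def time_warp(x, n_segments=9, stretch=1.05):
    # Assumption: even-indexed segments are stretched, odd-indexed squeezed.
    warped = []
    for i, seg in enumerate(np.array_split(x, n_segments)):
        factor = stretch if i % 2 == 0 else 1.0 / stretch
        new_len = max(2, int(round(len(seg) * factor)))
        old_t = np.linspace(0.0, 1.0, len(seg))
        new_t = np.linspace(0.0, 1.0, new_len)
        warped.append(np.interp(new_t, old_t, seg))
    out = np.concatenate(warped)
    # Resample so the result has the same length as the input.
    return np.interp(np.linspace(0.0, 1.0, len(x)),
                     np.linspace(0.0, 1.0, len(out)), out)


# Synthetic stand-in for an ECG window (not real data).
ecg = np.sin(np.linspace(0.0, 8.0 * np.pi, 2560))
transformed = [f(ecg) for f in
               (add_noise, scale, negate, invert_time, permute, time_warp)]
```

Each function returns an array of the same length as its input, so the outputs can be stacked with the original signal and paired with labels identifying the transformation applied.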