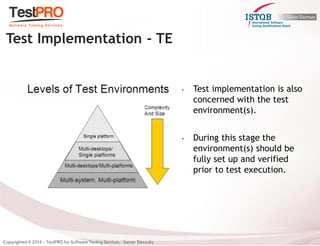

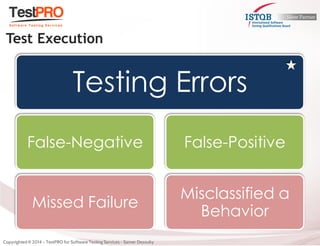

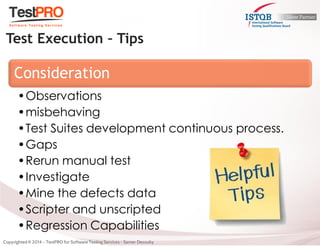

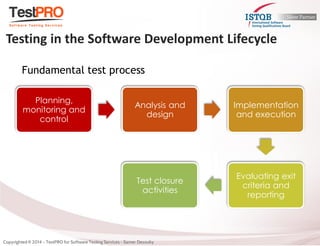

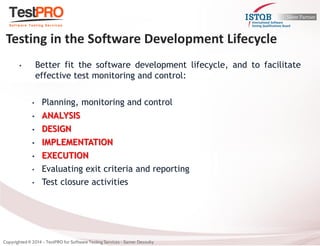

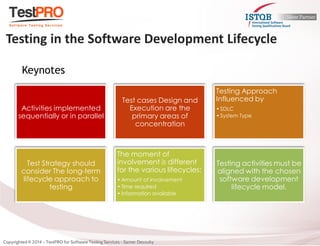

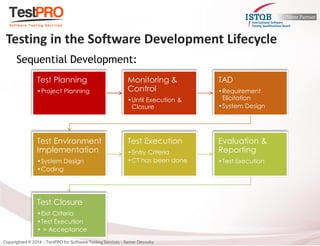

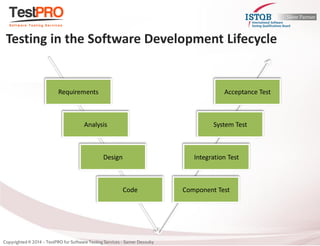

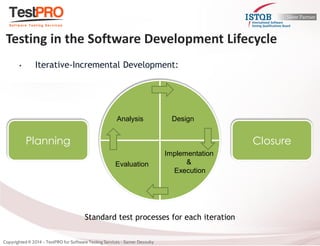

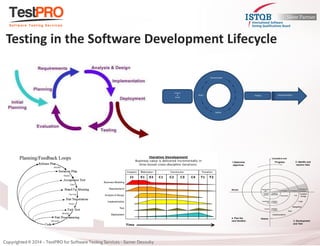

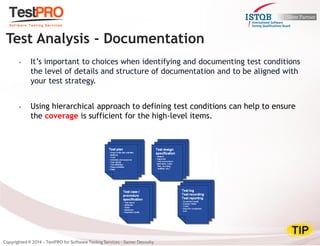

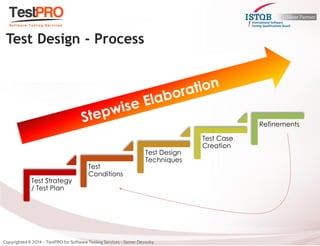

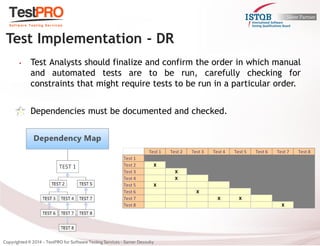

The document discusses test planning, analysis, design, implementation, and execution, and describes the roles and responsibilities of test analysts in each of these phases. Their activities include defining test conditions and creating test cases, designing test suites, implementing test data and environments, executing tests, and logging test results. Test implementation is influenced by factors such as the development lifecycle model, quality characteristics, test infrastructure, and exit criteria.

![Test Implementation - DR](https://image.slidesharecdn.com/01-testprocess-demo-140930015322-phpapp02/85/ISTQB-CTAL-Test-Analyst-49-320.jpg)

• The level of detail and associated complexity of the work done during test implementation may be influenced by the detail of the test cases and test conditions.

• In some cases regulatory rules apply, and tests should provide evidence of compliance with applicable standards, such as the United States Federal Aviation Administration’s DO-178B/ED-12B [RTCA DO-178B/ED-12B], "Software Considerations in Airborne Systems and Equipment Certification", a document dealing with the safety of software used in certain airborne systems, or the SWIFT book.

http://www.davi.ws/avionics/TheAvionicsHandbook_Cap_27.pdf

http://www.swift.com/resources/documents/standards_inventory_of_messages.pdf