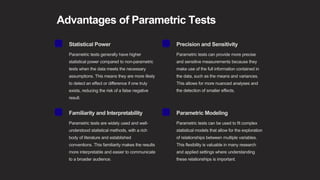

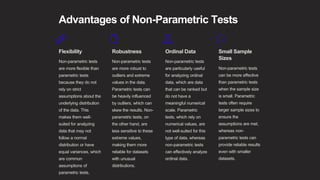

This document introduces parametric and non-parametric statistical tests. Parametric tests assume a particular form for the underlying data distribution, most commonly normality, while non-parametric tests do not rely on such distributional assumptions. Understanding this difference helps researchers select the analysis method that fits their research questions and data.
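
As a rough illustration of why the distributional assumption matters, the sketch below computes one parametric statistic (Welch's t, which compares means) and one non-parametric statistic (Mann-Whitney U, which uses only the ordering of values) on the same two small samples, with and without a single extreme value. The data and the helper names `welch_t` and `mann_whitney_u` are hypothetical and chosen for this example; only the test statistics are computed, not p-values.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic: difference in means over its standard error.

    Parametric: its usual interpretation assumes roughly normal data.
    """
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))


def mann_whitney_u(a, b):
    """Mann-Whitney U statistic for sample a.

    Non-parametric: counts pairs (x, y) with x > y, ties counted as 0.5,
    so only the relative order of the values matters.
    """
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)


# Hypothetical measurements, made up for illustration only.
control = [4.1, 4.5, 4.8, 5.0, 5.2]
treated = [5.5, 5.9, 6.1, 6.4, 6.8]
treated_outlier = [5.5, 5.9, 6.1, 6.4, 68.0]  # same sample with one extreme value

t_clean = welch_t(treated, control)
t_outlier = welch_t(treated_outlier, control)
u_clean = mann_whitney_u(treated, control)
u_outlier = mann_whitney_u(treated_outlier, control)

print(f"Welch t:        clean={t_clean:.2f}  with outlier={t_outlier:.2f}")
print(f"Mann-Whitney U: clean={u_clean:.1f}  with outlier={u_outlier:.1f}")
```

In this made-up data every treated value exceeds every control value, so U is 25 in both cases, while the t statistic shrinks sharply once the outlier inflates the sample variance. In practice a library routine (for example in SciPy) would normally be used instead of hand-written helpers.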