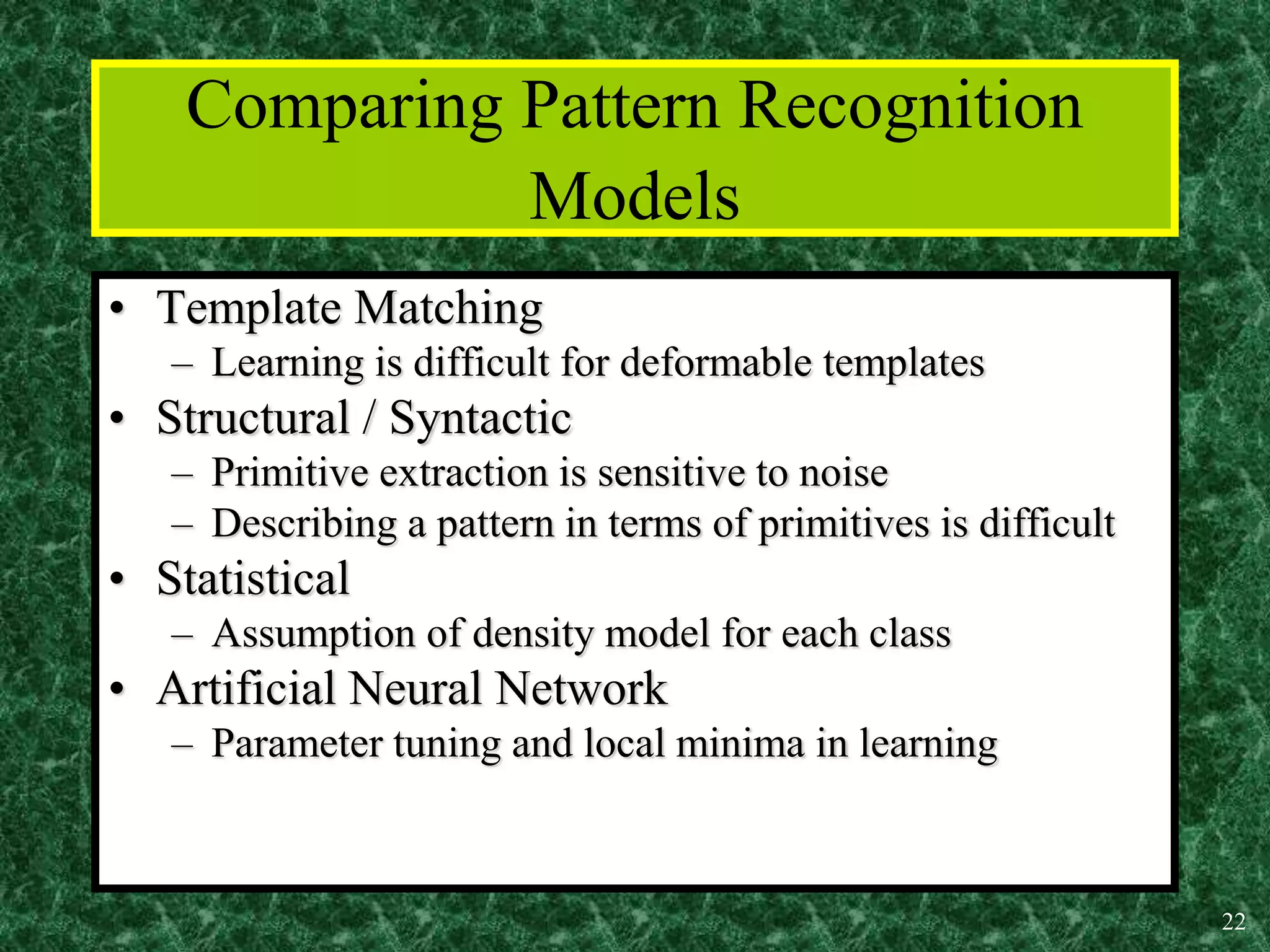

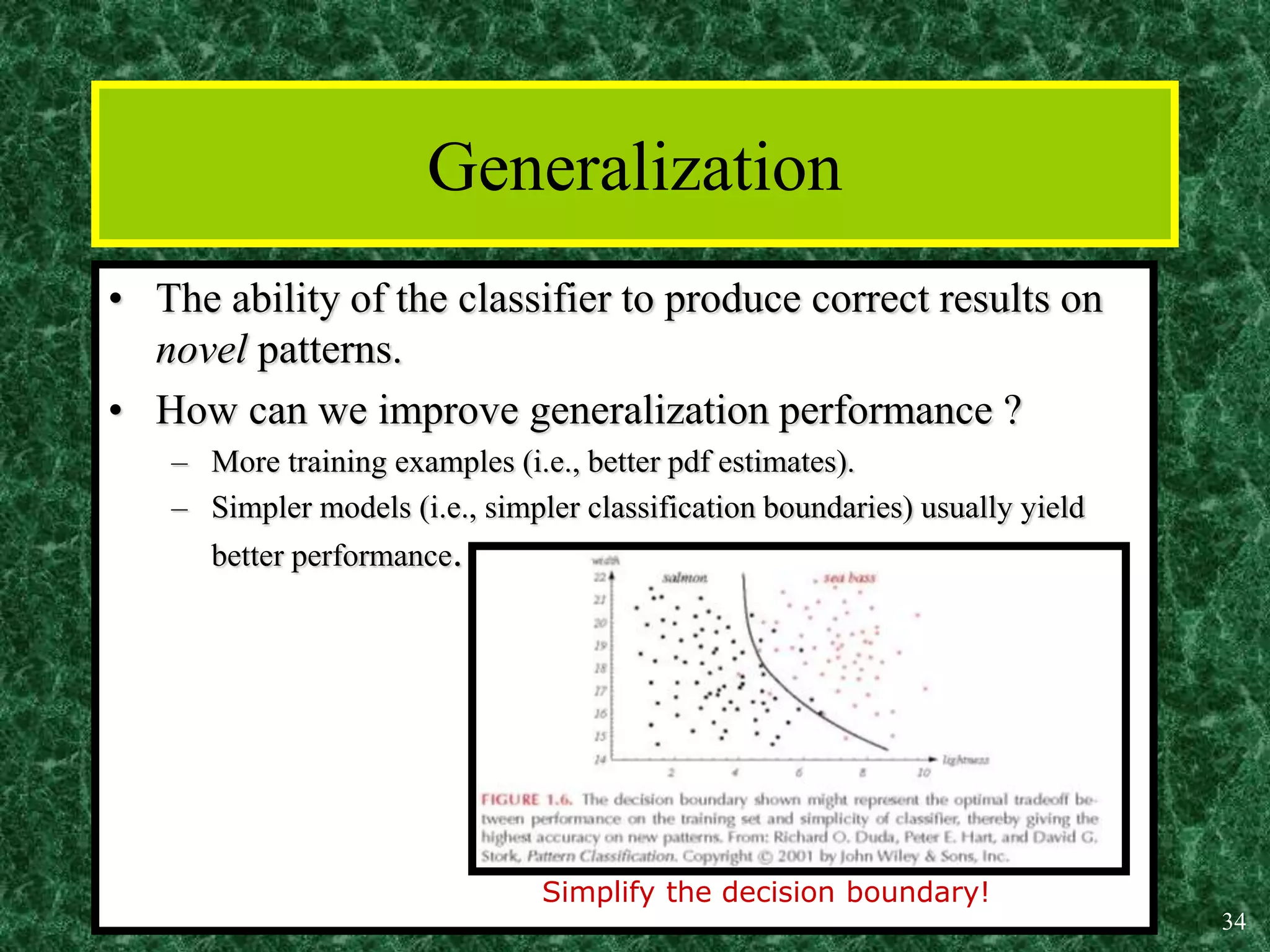

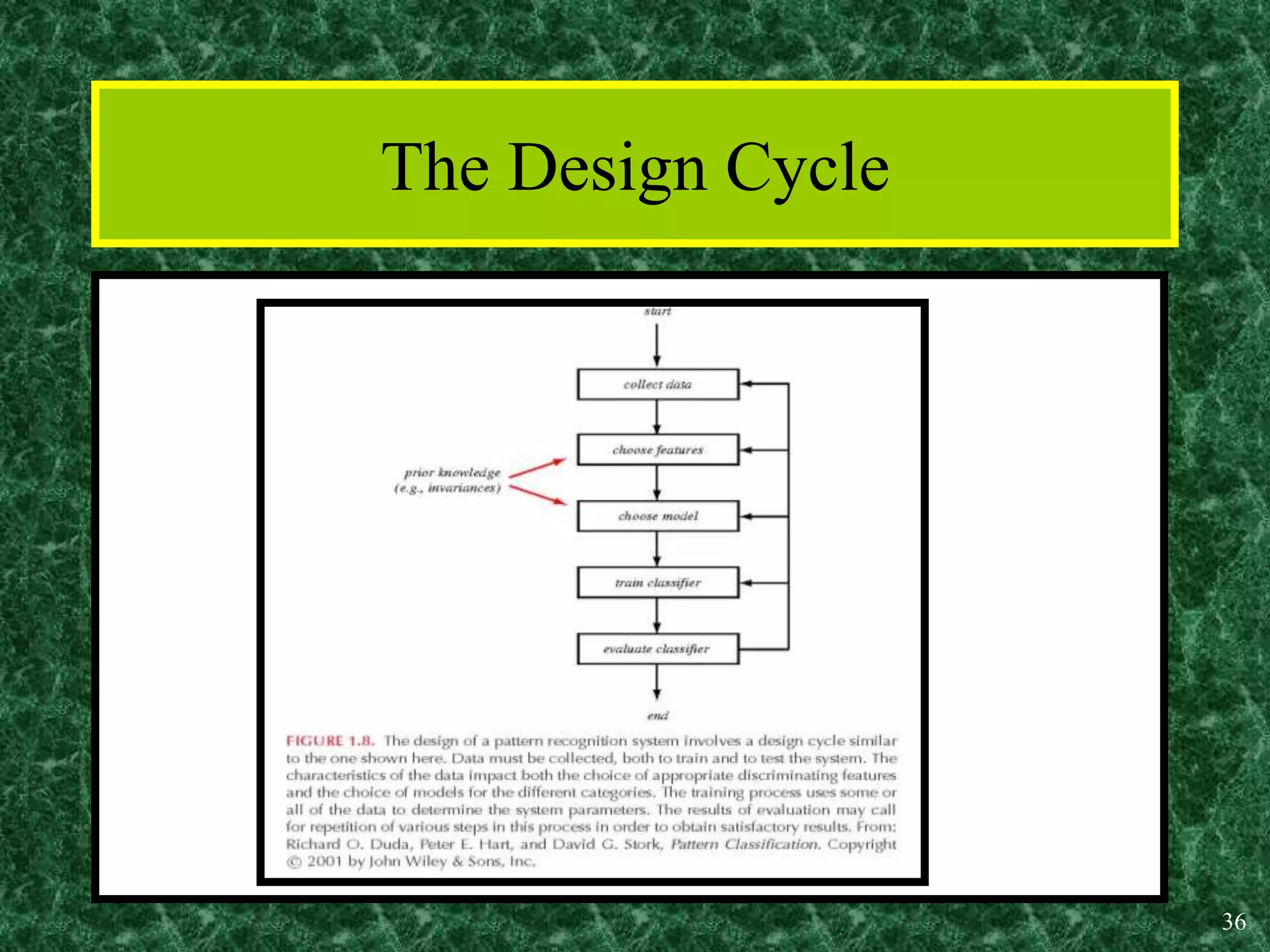

The document provides an overview of pattern recognition (PR), defining it as the study of how machines can observe, learn, and make decisions about patterns. It explores types of pattern classes, applications in fields like speech recognition and medical diagnosis, and challenges such as noise, feature extraction, and model complexity. It also covers the main models of PR, including template matching, statistical techniques, and artificial neural networks, and emphasizes the importance of generalization and classifier combination.

![Slide 3: What is a pattern?](https://image.slidesharecdn.com/chapter1-230904175726-49dc5a0b/75/introduction-to-pattern-recognition-3-2048.jpg)

What is a pattern?

• Watanabe [163] defines a pattern as “the opposite of a chaos; it is an entity, vaguely defined, that could be given a name”