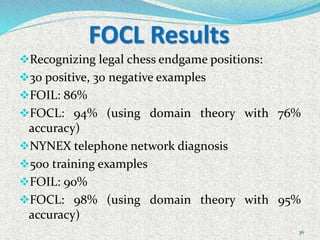

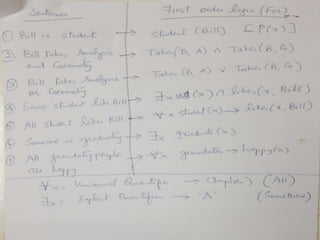

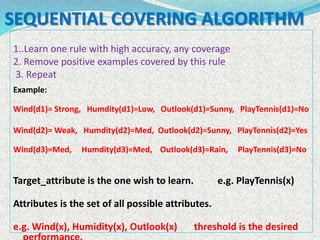

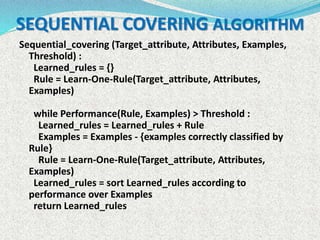

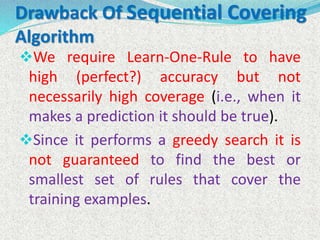

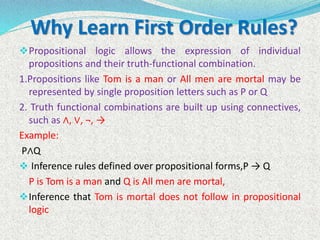

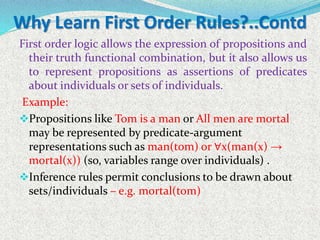

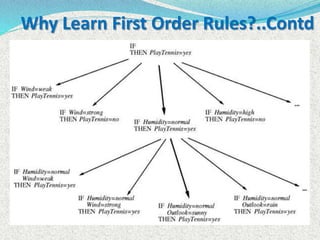

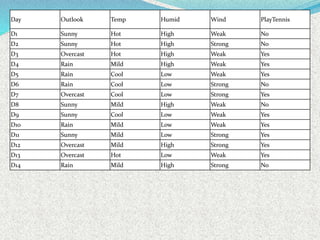

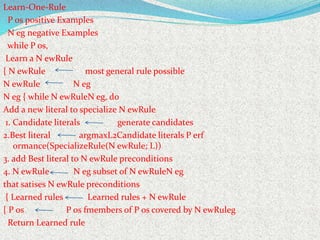

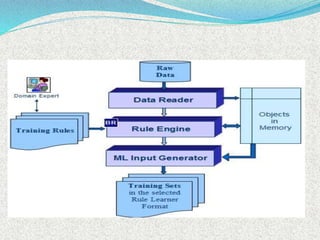

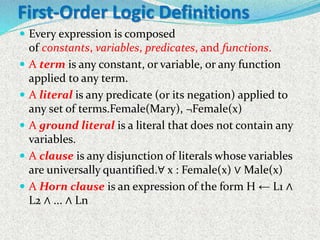

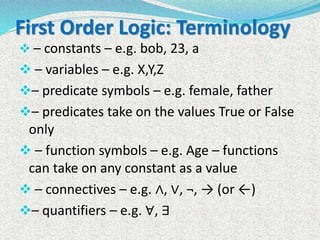

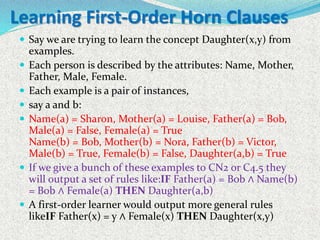

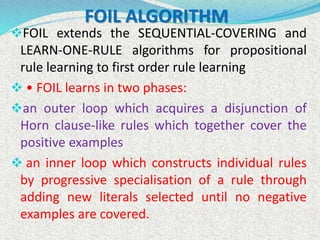

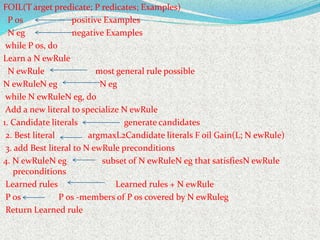

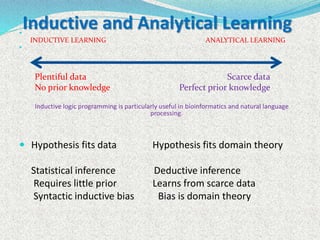

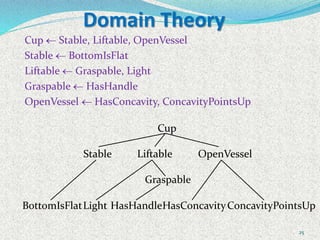

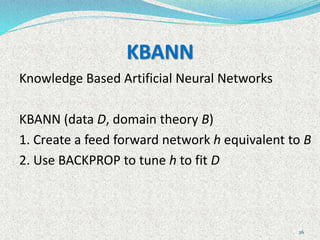

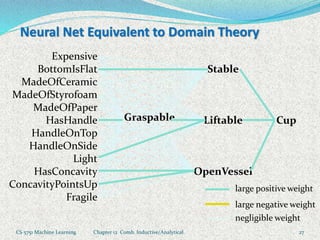

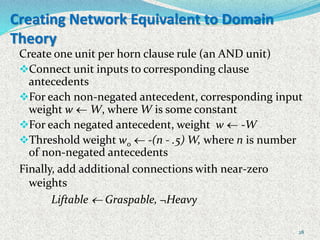

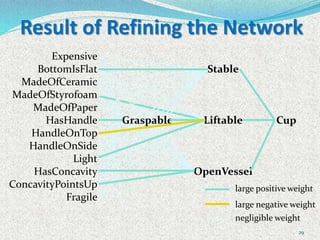

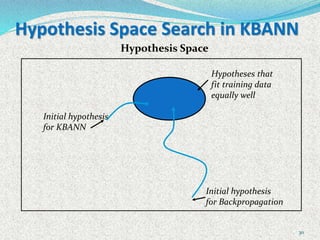

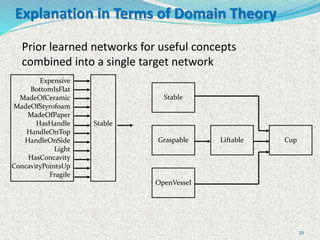

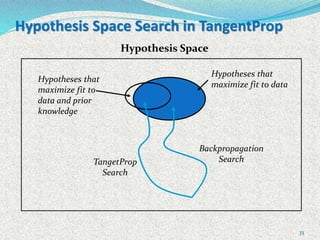

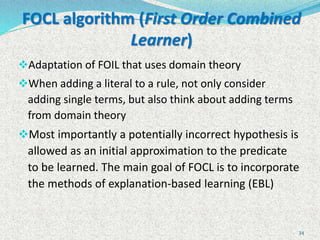

This document covers machine learning algorithms that learn first-order logic rules from examples. It introduces FOIL, which extends propositional sequential-covering rule learners to the more expressive setting of first-order Horn clauses. FOIL searches from general to specific: it starts with the most general rule and greedily adds the literal that best separates the positive examples the rule still covers from the negative ones, continuing until the rule covers no negative examples. The document also discusses how combining inductive learning with a prior domain theory can improve learning, as in KBANN, where a neural network is initialized from the domain theory and then refined by training on examples.
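The greedy specialization loop described above can be sketched in a few lines. This is a minimal propositional sketch, not FOIL itself: it assumes boolean attributes instead of first-order literals with variables, so the number of positive bindings that survive a specialization is simply the number of positive examples still covered. The function names and the toy examples are hypothetical and chosen only for illustration.

```python
import math

def foil_gain(p0, n0, p1, n1):
    """FOIL-style gain for a propositional literal.

    p0/n0: positives/negatives covered before adding the literal,
    p1/n1: positives/negatives covered after adding it.
    Assumption: no new variables, so t (surviving positive bindings) = p1.
    """
    if p1 == 0:
        return 0.0  # the log term is undefined; treat the candidate as useless
    return p1 * (math.log2(p1 / (p1 + n1)) - math.log2(p0 / (p0 + n0)))

def covers(body, example):
    """A rule body is a list of (attribute, required_value) literals."""
    return all(example[attr] == val for attr, val in body)

def learn_one_rule(examples, attributes, target):
    """Start with an empty body and greedily add the highest-gain literal
    until the rule covers no negative examples (or nothing improves it)."""
    body = []
    while True:
        covered = [e for e in examples if covers(body, e)]
        p0 = sum(e[target] for e in covered)
        n0 = len(covered) - p0
        if p0 == 0 or n0 == 0:
            return body
        used = {attr for attr, _ in body}
        best, best_gain = None, 0.0
        for attr in attributes:
            if attr in used:
                continue
            for val in (True, False):
                kept = [e for e in covered if e[attr] == val]
                p1 = sum(e[target] for e in kept)
                n1 = len(kept) - p1
                gain = foil_gain(p0, n0, p1, n1)
                if gain > best_gain:
                    best, best_gain = (attr, val), gain
        if best is None:
            return body  # no literal has positive gain
        body.append(best)

# Hypothetical toy data: 2 positive and 3 negative examples of Cup.
examples = [
    {"Light": True,  "HasHandle": True,  "ConcavityPointsUp": True,  "Cup": True},
    {"Light": True,  "HasHandle": False, "ConcavityPointsUp": True,  "Cup": True},
    {"Light": True,  "HasHandle": True,  "ConcavityPointsUp": False, "Cup": False},
    {"Light": False, "HasHandle": True,  "ConcavityPointsUp": True,  "Cup": False},
    {"Light": False, "HasHandle": False, "ConcavityPointsUp": False, "Cup": False},
]
attributes = ["Light", "HasHandle", "ConcavityPointsUp"]

rule = learn_one_rule(examples, attributes, "Cup")
print("Cup <-", ", ".join(a if v else "not " + a for a, v in rule))
```

On this toy data the loop adds `Light` first and then `ConcavityPointsUp`, at which point the rule covers both positives and none of the negatives. A full sequential-covering learner would then remove the covered positives and repeat to learn further rules.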

![35

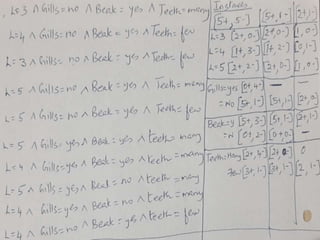

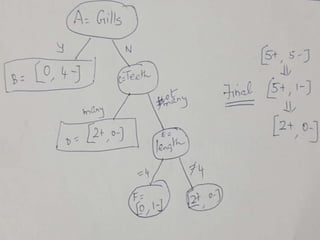

Search in FOCL

Cup HasHandle

[2+,3-]

Cup

Cup ¬HasHandle

[2+,3-]

Cup Fragile

[2+,4-]

Cup BottomIsFlat,

Light,

HasConcavity,

ConcavityPointsUp

[4+,2-]

...

Cup BottomIsFlat,

Light,

HasConcavity,

ConcavityPointsUp,

HandleOnTop

[0+,2-]

Cup BottomIsFlat,

Light,

HasConcavity,

ConcavityPointsUp,

¬ HandleOnTop

[4+,0-]

Cup BottomIsFlat,

Light,

HasConcavity,

ConcavityPointsUp,

HandleOnSide

[2+,0-]

...](https://image.slidesharecdn.com/fdponmachinelearningu-learningrule-201018095147/85/MACHINE-LEARNING-LEARNING-RULE-35-320.jpg)
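As a worked check on the counts in this slide, the last level of the search can be scored with FOIL's information gain. This assumes that FOCL ranks candidate specializations with the same gain measure as FOIL, and that $t = p_1$ because these propositional literals introduce no new variables. Here $p_0, n_0$ are the positive and negative examples covered by the parent rule, and $p_1, n_1$ those covered after adding the literal $L$:

$$
\mathrm{FoilGain}(L, R) = t\left(\log_2\frac{p_1}{p_1+n_1} - \log_2\frac{p_0}{p_0+n_0}\right)
$$

With the parent rule covering $[4+,2-]$, so that $\log_2\frac{4}{6} \approx -0.585$:

$$
\begin{aligned}
\neg\mathit{HandleOnTop}\ [4+,0-]:&\quad 4\left(\log_2\tfrac{4}{4} - \log_2\tfrac{4}{6}\right) \approx 4 \times 0.585 = 2.34\\
\mathit{HandleOnSide}\ [2+,0-]:&\quad 2\left(\log_2\tfrac{2}{2} - \log_2\tfrac{4}{6}\right) \approx 2 \times 0.585 = 1.17\\
\mathit{HandleOnTop}\ [0+,2-]:&\quad t = 0,\ \text{so the gain is taken to be } 0
\end{aligned}
$$

Under these assumptions, adding ¬HandleOnTop scores highest: it keeps all four positive examples while excluding both negatives.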