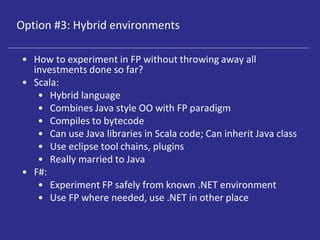

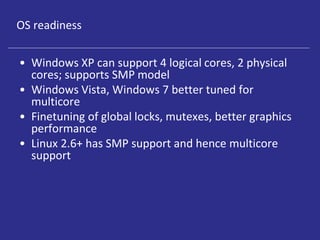

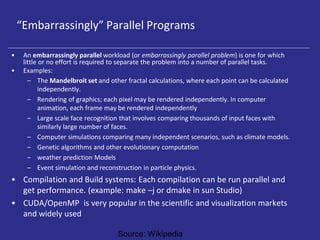

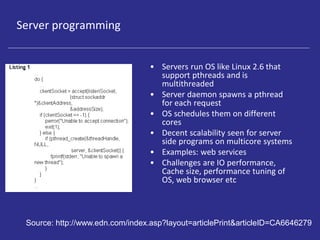

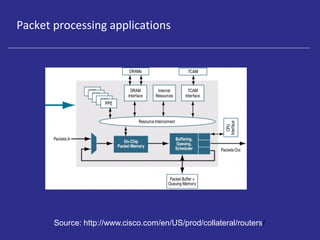

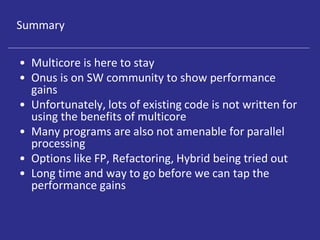

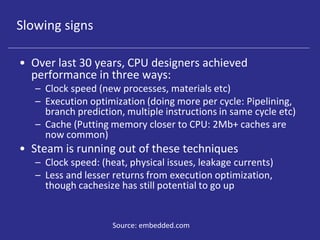

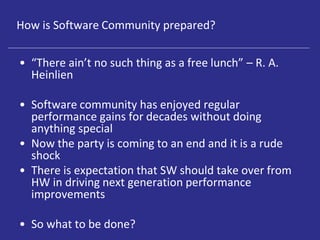

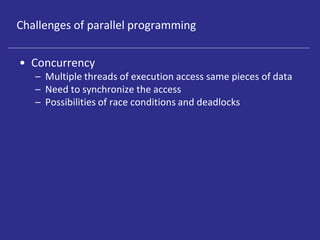

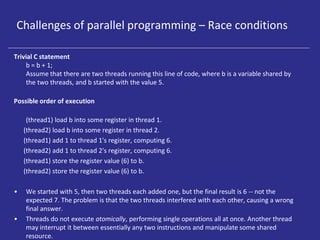

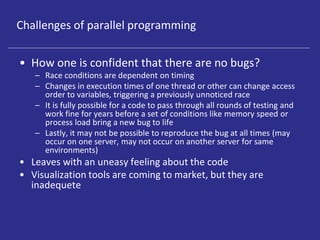

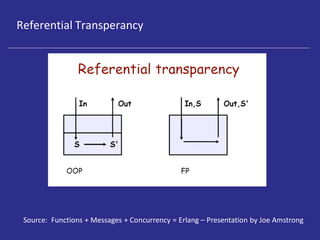

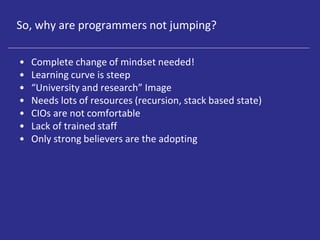

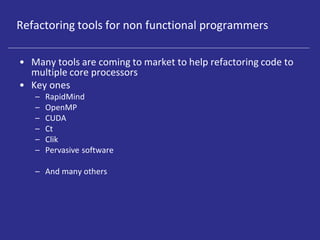

Multicore processors are becoming prevalent because single-core clock speeds can no longer be raised much further. This makes it challenging for software to use multiple cores effectively. Functional programming is one option: it avoids shared mutable state and the concurrent-access problems that come with it, but it requires a significant shift in mindset. Another option is tool-assisted refactoring of existing code to introduce parallelism incrementally. Hybrid approaches that combine paradigms may also ease the transition. Application areas already benefiting include servers, scientific computing, and packet processing. However, much existing code is not easily parallelized, and the potential performance gains have yet to be fully realized.

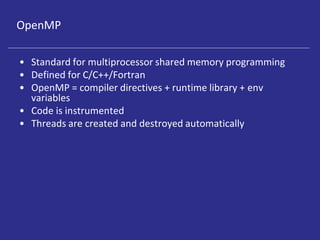

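![OpenMP example code](https://image.slidesharecdn.com/d840e0d0-7830-49a9-978b-28d9520404ce-160913150510/85/Introduction-to-multicore-ppt-34-320.jpg)

```c
int main(int argc, char **argv)
{
    const int N = 100000;
    int i, a[N];

    /* Split the loop iterations across the available threads
       (compile with -fopenmp). Each iteration writes a distinct
       element of a, so no synchronization is needed. */
    #pragma omp parallel for
    for (i = 0; i < N; i++)
        a[i] = 2 * i;

    return 0;
}
```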
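In the OpenMP example above, every iteration writes a different element of `a`, so the threads share no mutable state. Many loops instead combine their results into a single variable, and a plain `sum += ...` inside a `parallel for` would be a data race. The minimal sketch below uses OpenMP's `reduction` clause, which gives each thread a private copy of the accumulator and combines the copies when the loop ends. The function name `sum_of_doubles` is hypothetical and was chosen only for illustration.

```c
/* Sum 2*i for i in [0, n) in parallel.
 * reduction(+:sum) gives each thread its own private `sum`,
 * initialized to 0, and adds the private copies together after
 * the loop, so the shared variable is never updated concurrently.
 * Compile with -fopenmp (GCC/Clang). Without that flag the pragma
 * is ignored and the loop simply runs sequentially. */
long long sum_of_doubles(int n)
{
    long long sum = 0;

    #pragma omp parallel for reduction(+:sum)
    for (int i = 0; i < n; i++)
        sum += 2LL * i;   /* 64-bit arithmetic avoids int overflow */

    return sum;
}
```

For example, `sum_of_doubles(4)` returns 0 + 2 + 4 + 6 = 12. For N = 100000, the result is N(N - 1) = 9999900000, which is the same value the sequential loop produces.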