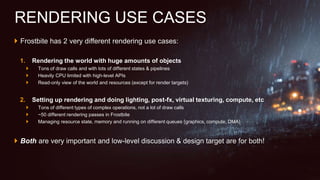

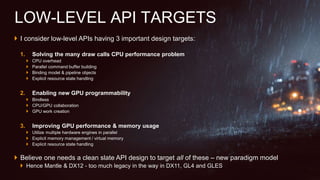

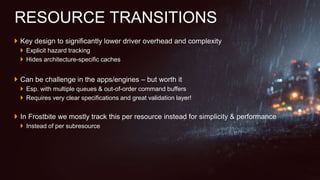

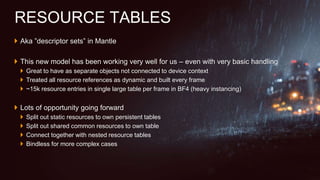

The document discusses low-level graphics rendering in the Frostbite engine and highlights two main use cases: rendering large worlds with many objects, and performing complex operations such as lighting and compute tasks. It argues for a clean-slate API design that addresses CPU overhead, GPU programmability, and performance, and it outlines the challenges and optimization opportunities that come with explicit resource management and closer collaboration between CPU and GPU. It also notes that future low-level APIs need clear state management and efficient memory handling to improve rendering performance.