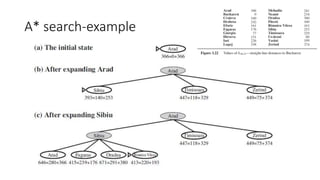

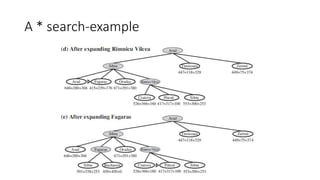

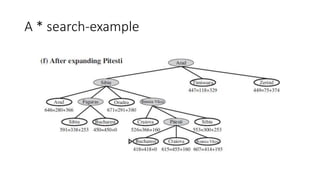

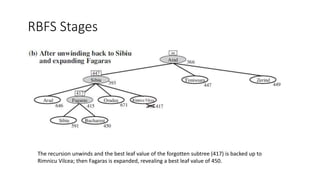

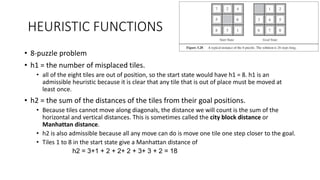

This document surveys informed (heuristic) search algorithms: best-first search, greedy best-first search, A* search, and the memory-bounded variants IDA* and RBFS. It explains that A* is optimal when the heuristic is admissible (for tree search) and consistent (for graph search), and it compares how different heuristics perform on problems such as the 8-puzzle. The memory-bounded algorithms make large problems practical by using far less memory than A*, typically at the cost of re-expanding nodes and therefore extra running time. The document also discusses learning search control knowledge to guide the search.
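To make the heuristic comparison concrete, here is a minimal sketch of A* graph search on the 8-puzzle, run with two common admissible heuristics: the number of misplaced tiles and the Manhattan distance. The goal layout, the names `GOAL`, `START`, `neighbors`, `misplaced`, `manhattan`, and `astar`, and the start state are assumptions chosen for illustration; they are not taken from the document itself.

```python
import heapq
from itertools import count

# Assumed goal layout, read row by row; 0 stands for the blank.
GOAL = (0, 1, 2, 3, 4, 5, 6, 7, 8)

def neighbors(state):
    """Yield every state reachable by sliding one tile into the blank."""
    i = state.index(0)
    row, col = divmod(i, 3)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < 3 and 0 <= c < 3:
            j = r * 3 + c
            s = list(state)
            s[i], s[j] = s[j], s[i]
            yield tuple(s)

def misplaced(state):
    """Count tiles (not the blank) that are out of place."""
    return sum(1 for i, t in enumerate(state) if t != 0 and t != GOAL[i])

def manhattan(state):
    """Sum of horizontal plus vertical distances of each tile from its goal cell."""
    total = 0
    for i, t in enumerate(state):
        if t != 0:
            g = GOAL.index(t)
            total += abs(i // 3 - g // 3) + abs(i % 3 - g % 3)
    return total

def astar(start, h):
    """A* graph search with unit step costs.

    Returns (solution cost, nodes expanded), or (None, nodes expanded)
    if the goal cannot be reached from start.
    """
    tie = count()  # tie-breaker so the heap never compares states
    frontier = [(h(start), next(tie), 0, start)]
    best_g = {start: 0}
    expanded = 0
    while frontier:
        _, _, g, state = heapq.heappop(frontier)
        if g > best_g[state]:
            continue  # stale entry: a cheaper path to this state was found later
        if state == GOAL:
            return g, expanded
        expanded += 1
        for nxt in neighbors(state):
            ng = g + 1
            if ng < best_g.get(nxt, float("inf")):
                best_g[nxt] = ng
                heapq.heappush(frontier, (ng + h(nxt), next(tie), ng, nxt))
    return None, expanded

# Illustrative start state, four moves away from GOAL.
START = (1, 4, 2, 3, 5, 8, 6, 7, 0)

if __name__ == "__main__":
    for name, h in (("misplaced tiles", misplaced), ("Manhattan distance", manhattan)):
        cost, expanded = astar(START, h)
        print(f"{name}: cost={cost}, nodes expanded={expanded}")
```

Both heuristics never overestimate the remaining moves, so both runs return the same optimal cost (4 for this start state). The number of expanded nodes is the quantity to compare: on harder start states, Manhattan distance usually expands far fewer nodes because it is never smaller than the misplaced-tiles count.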