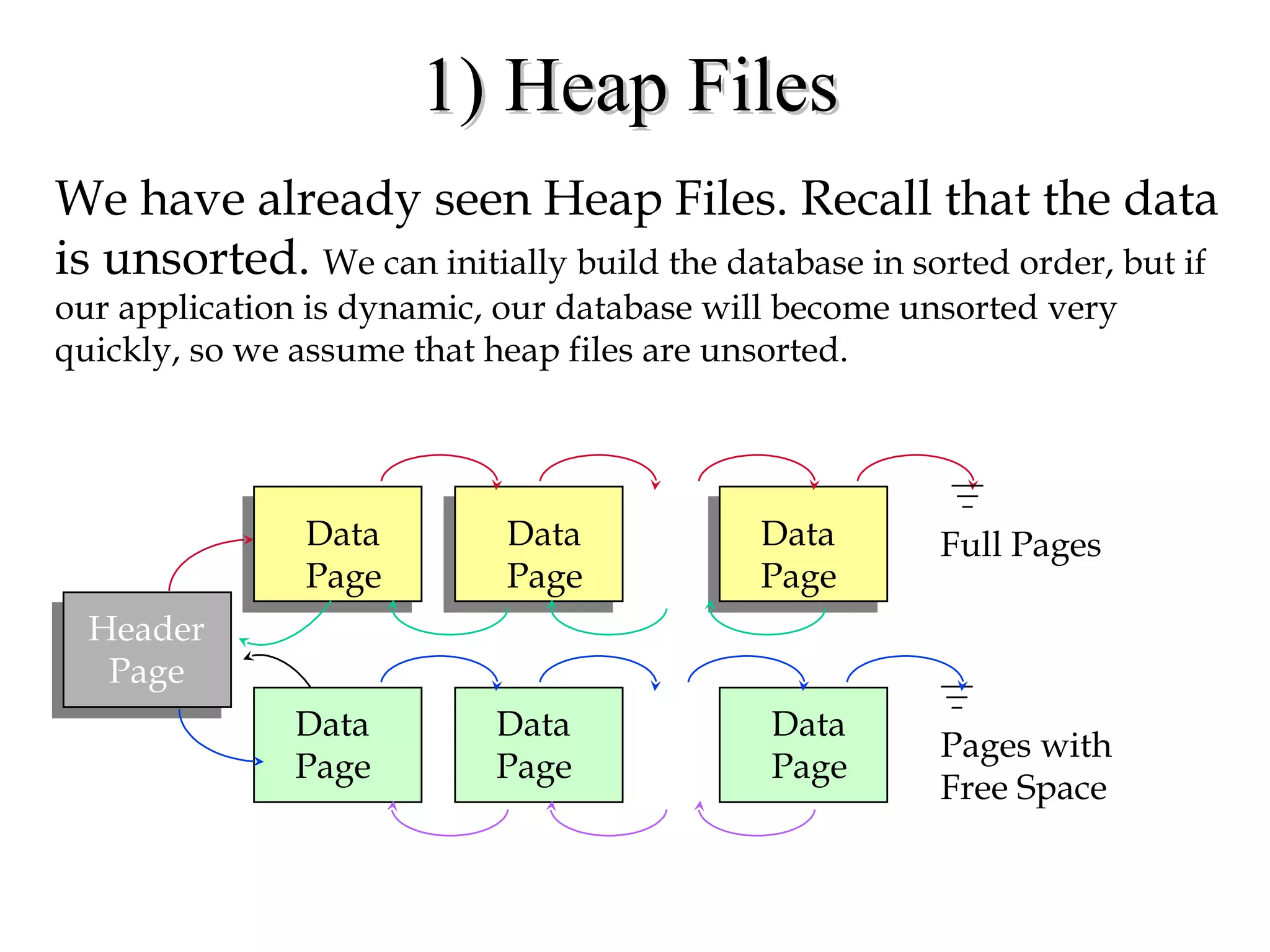

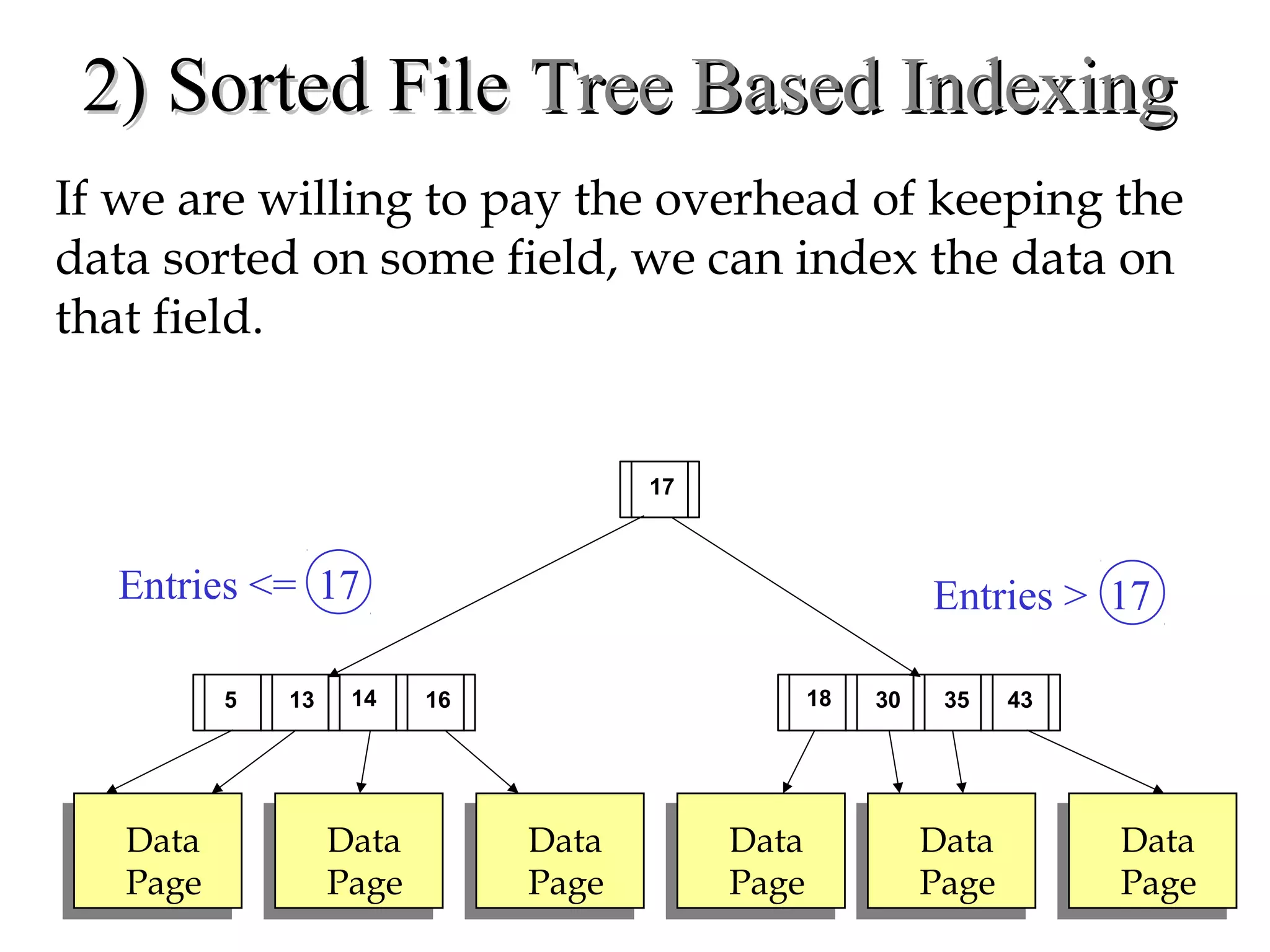

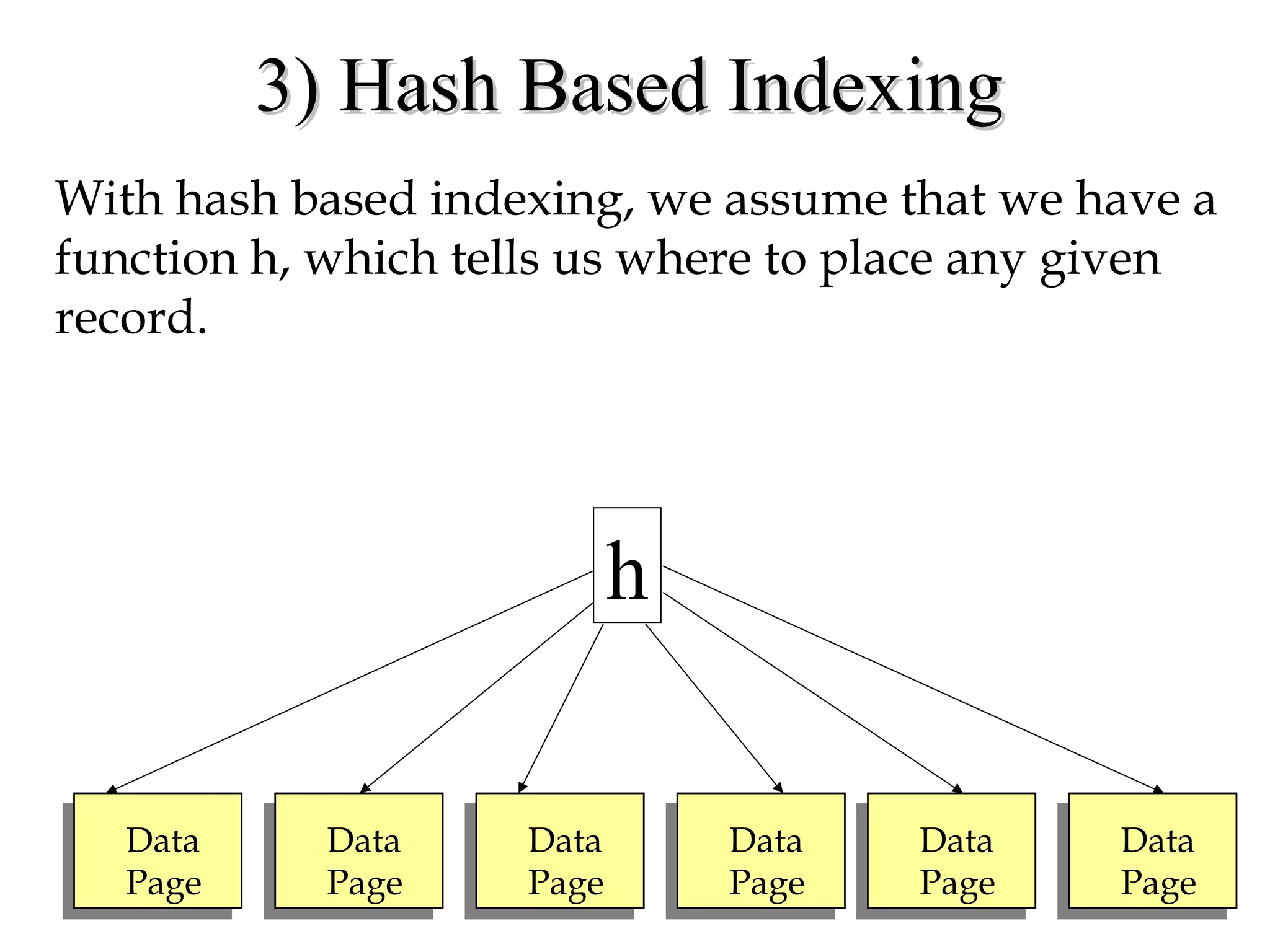

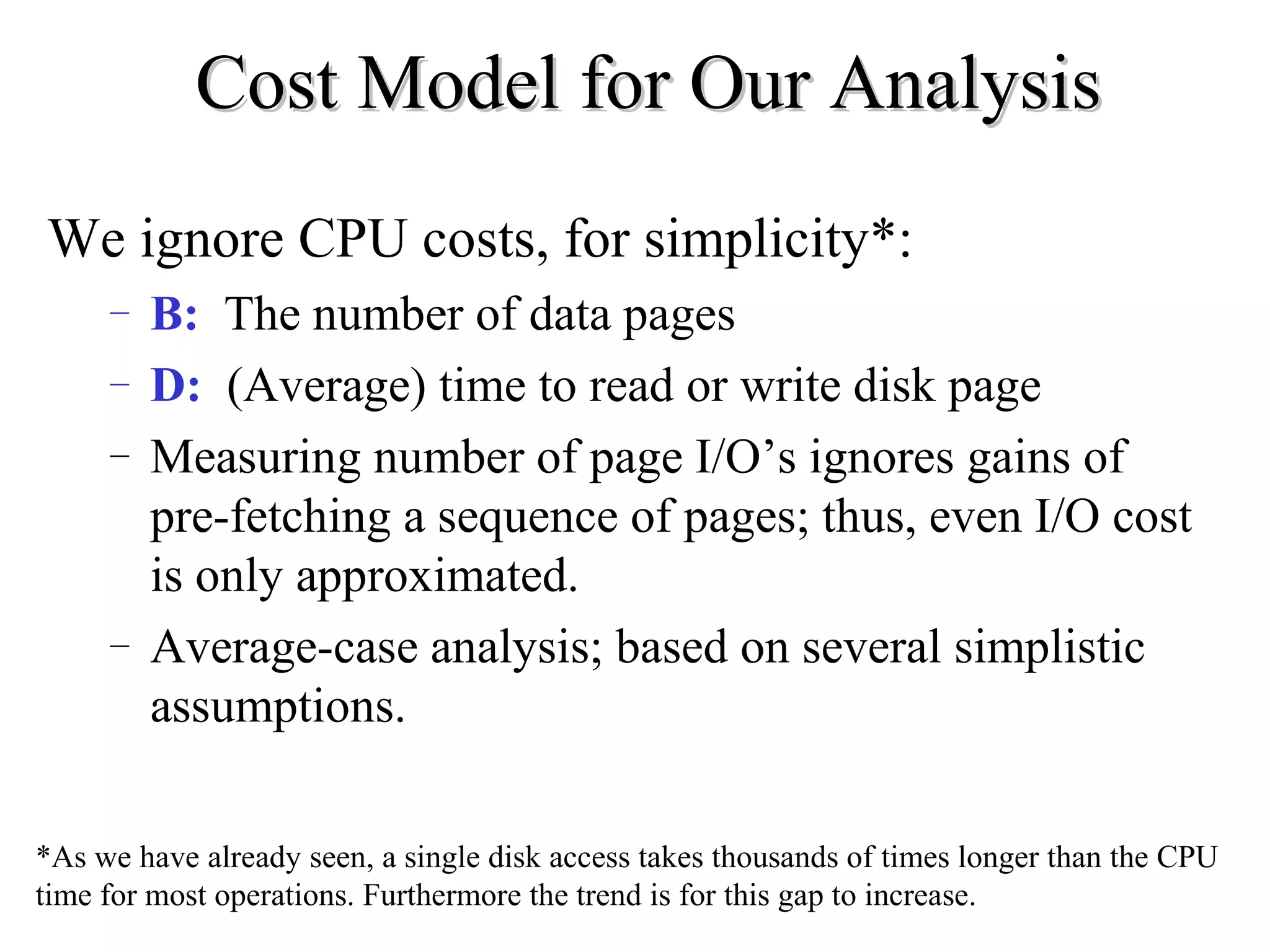

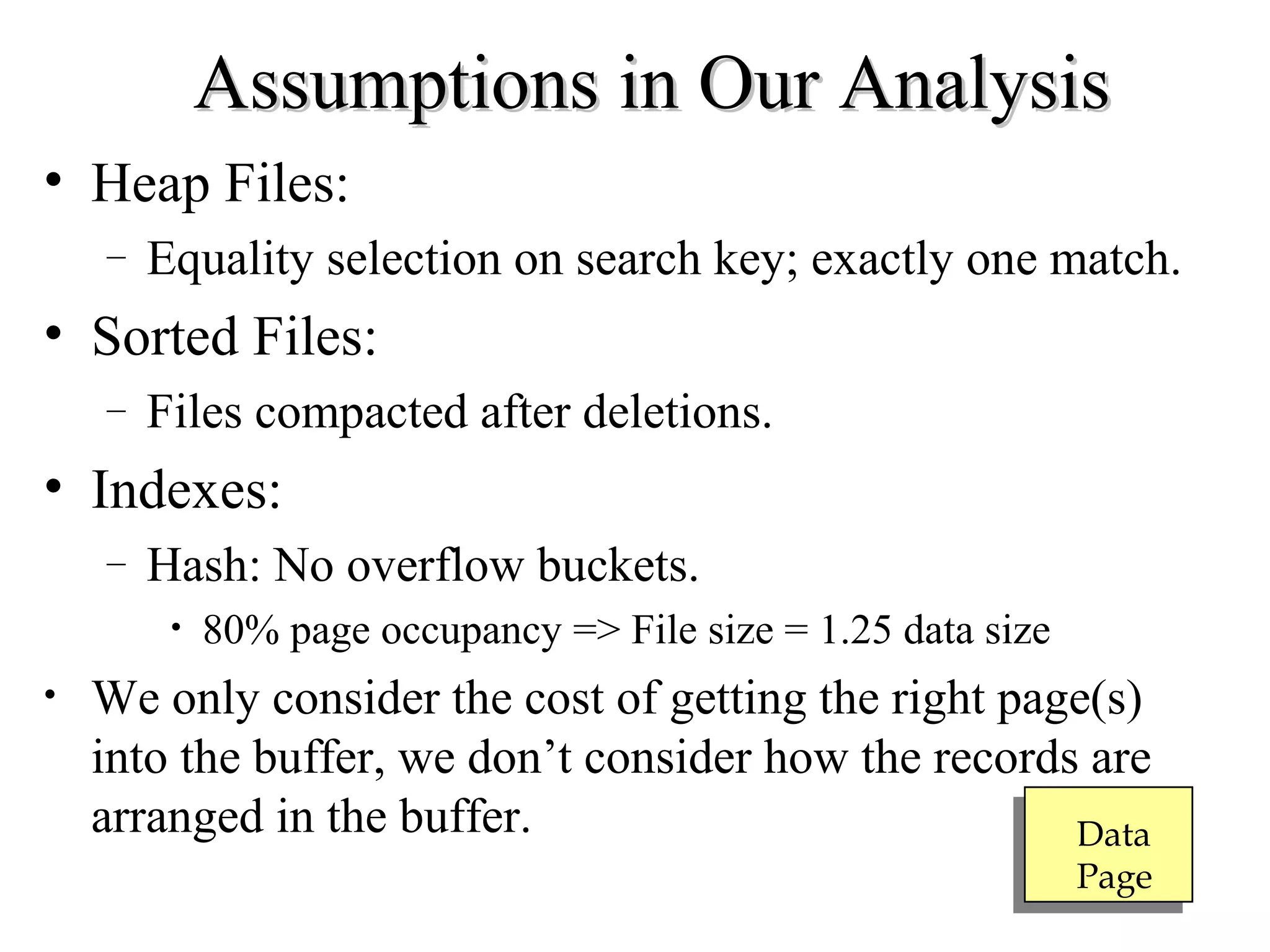

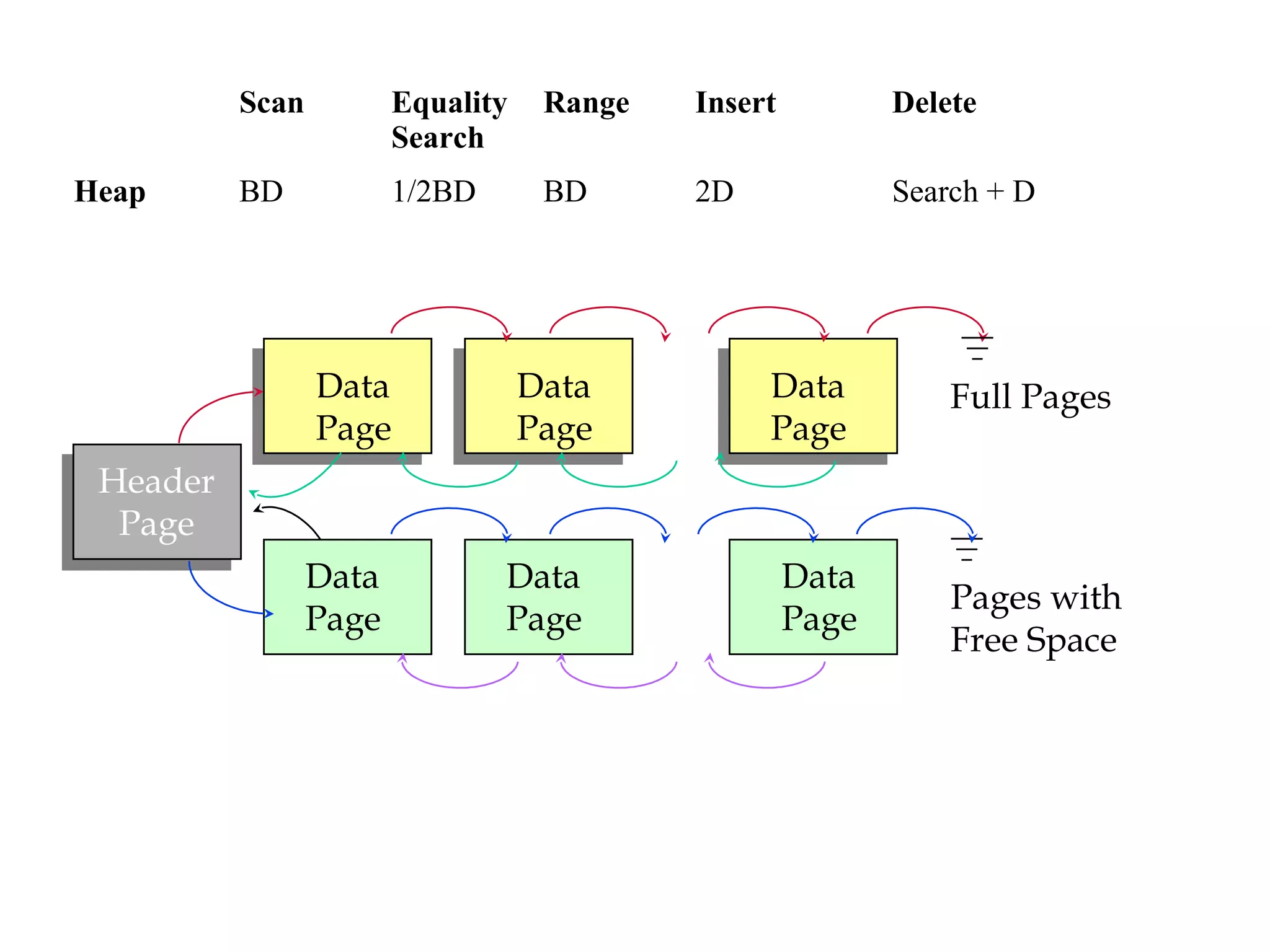

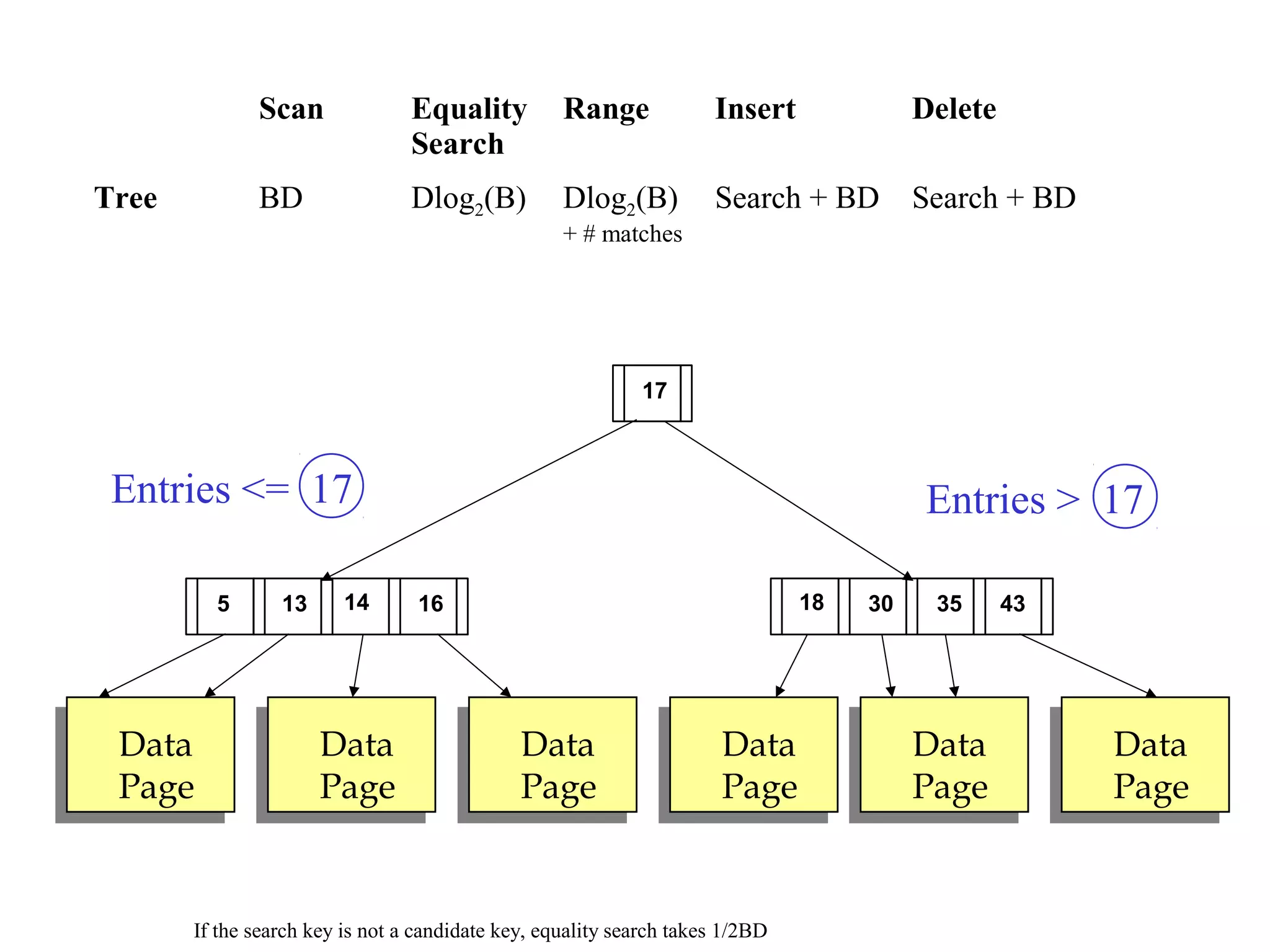

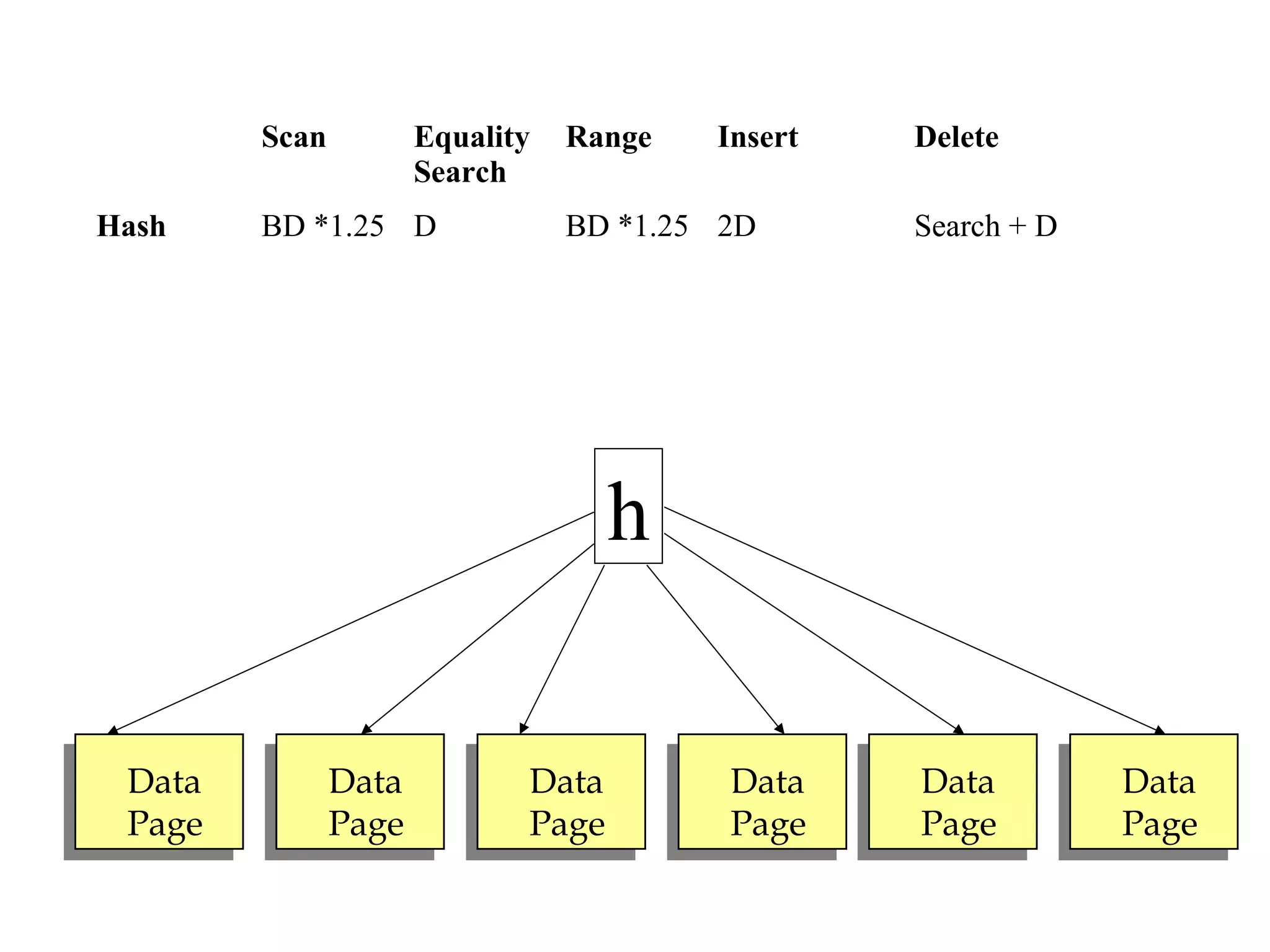

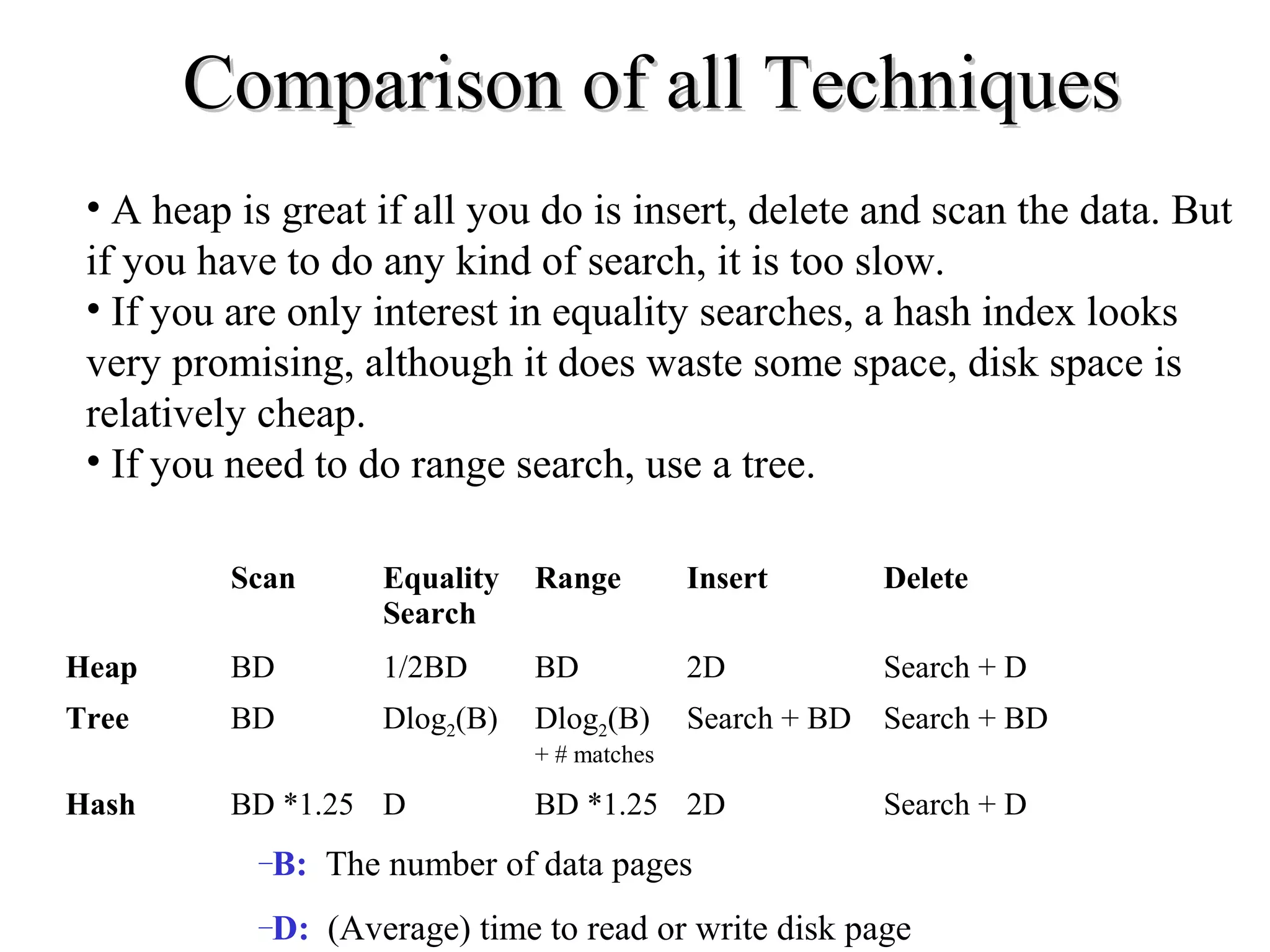

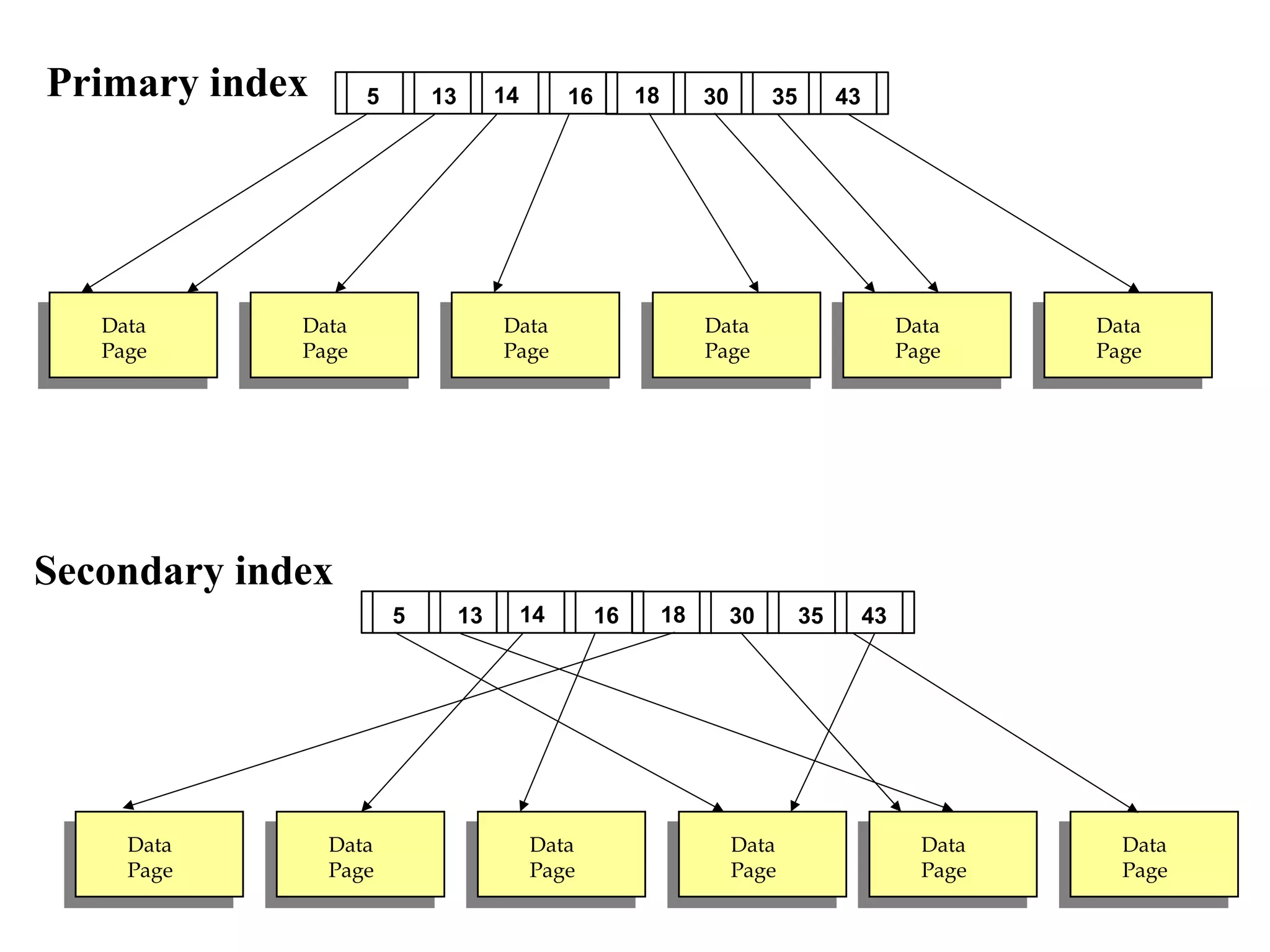

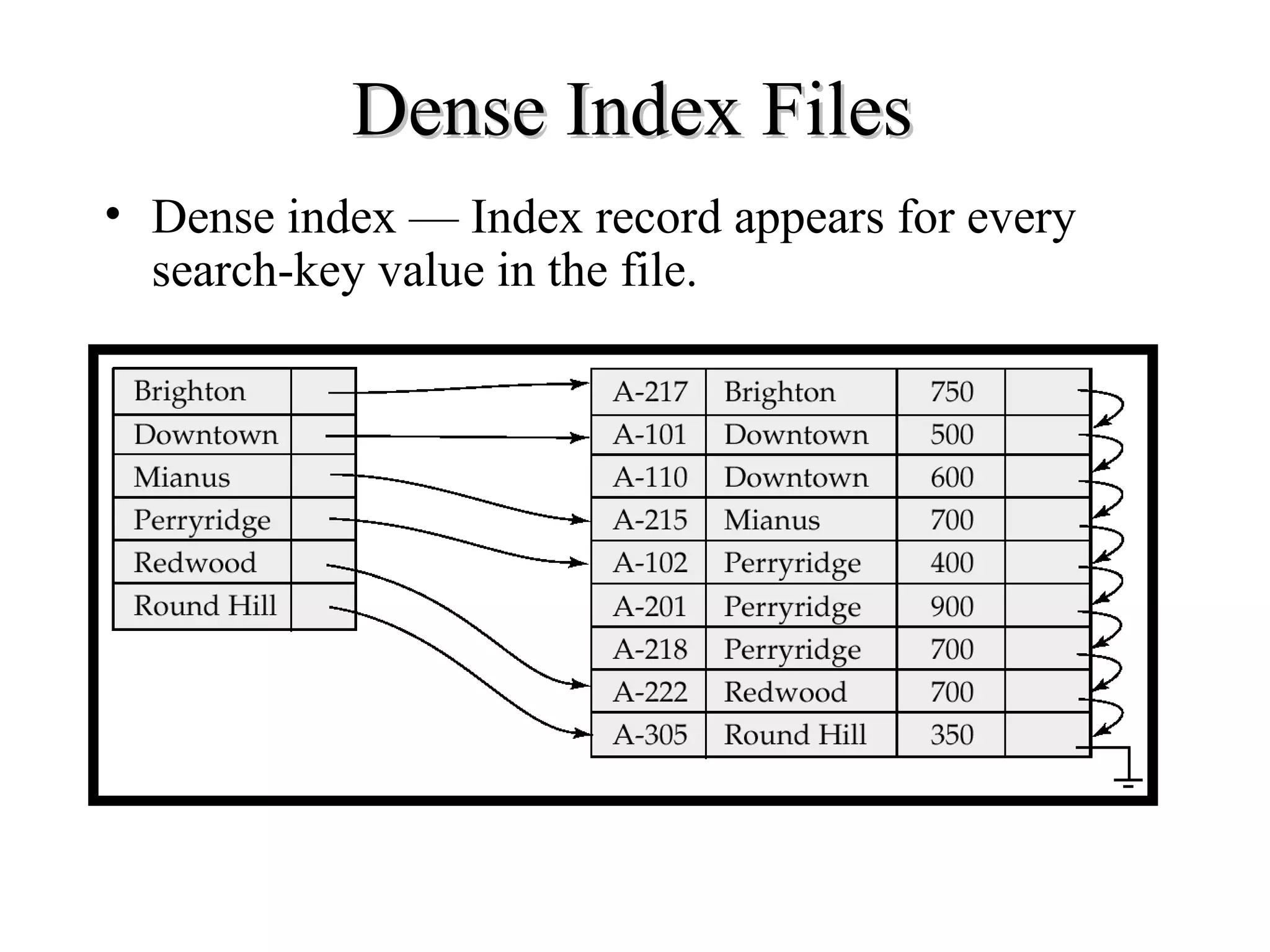

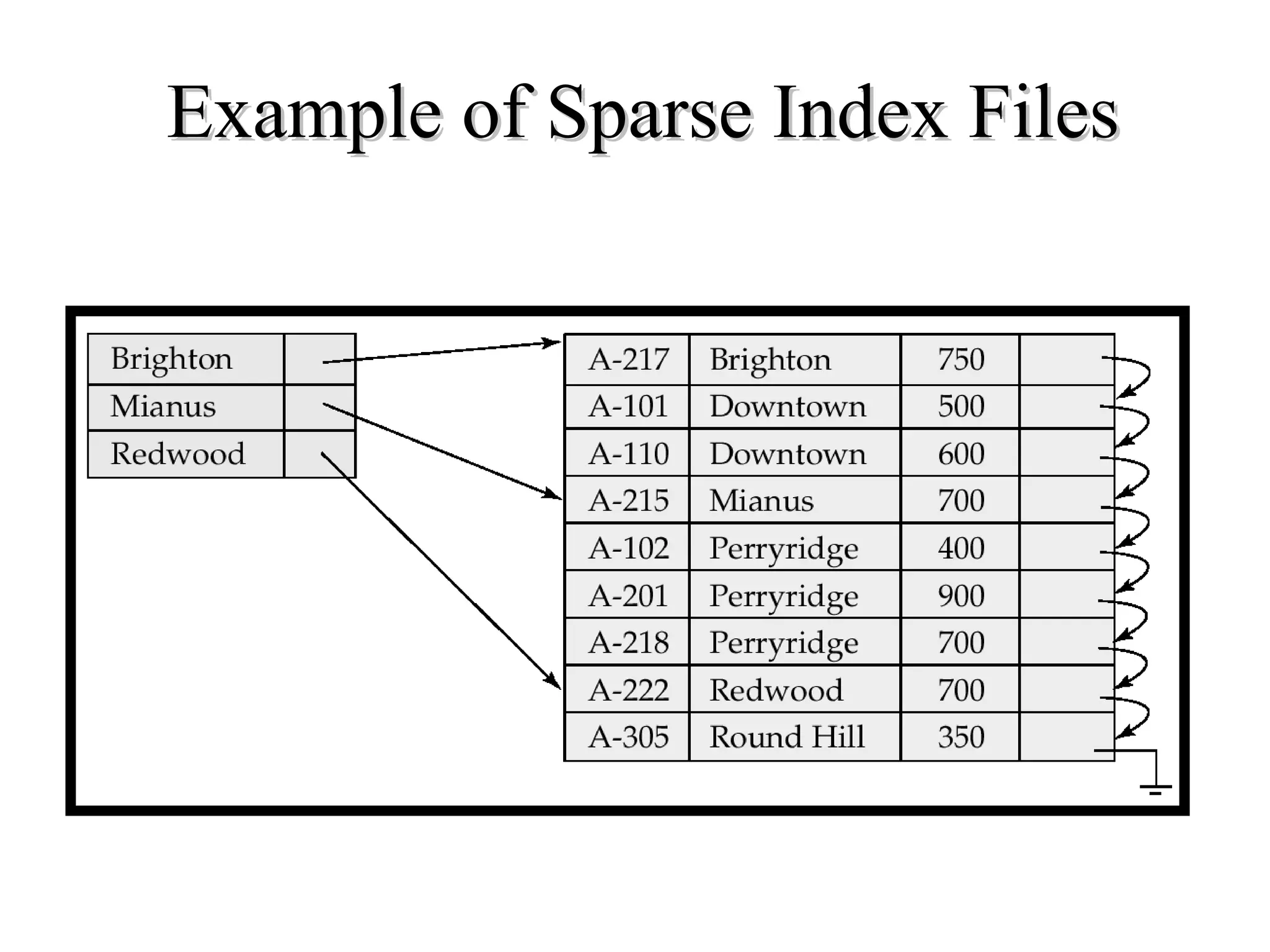

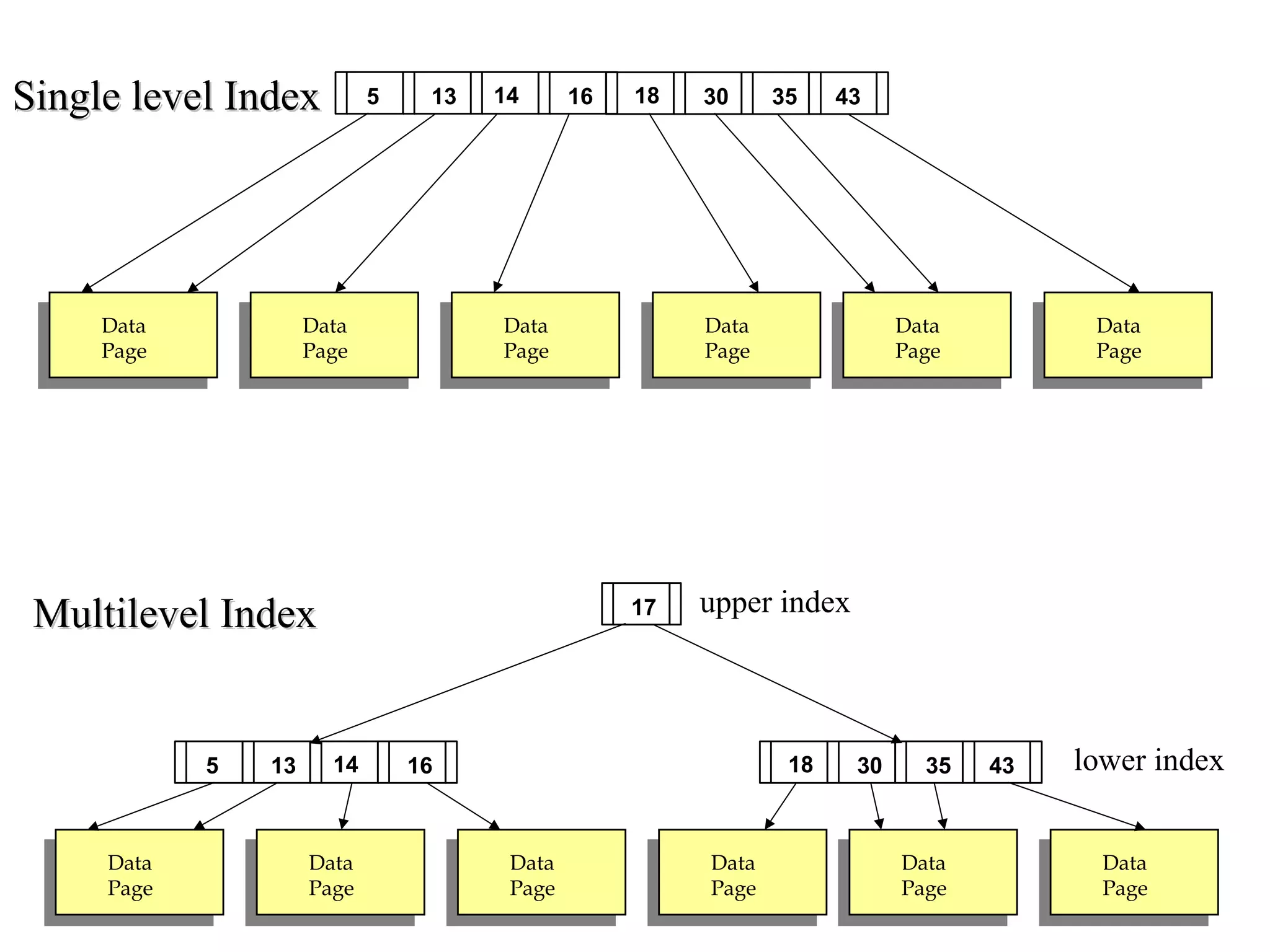

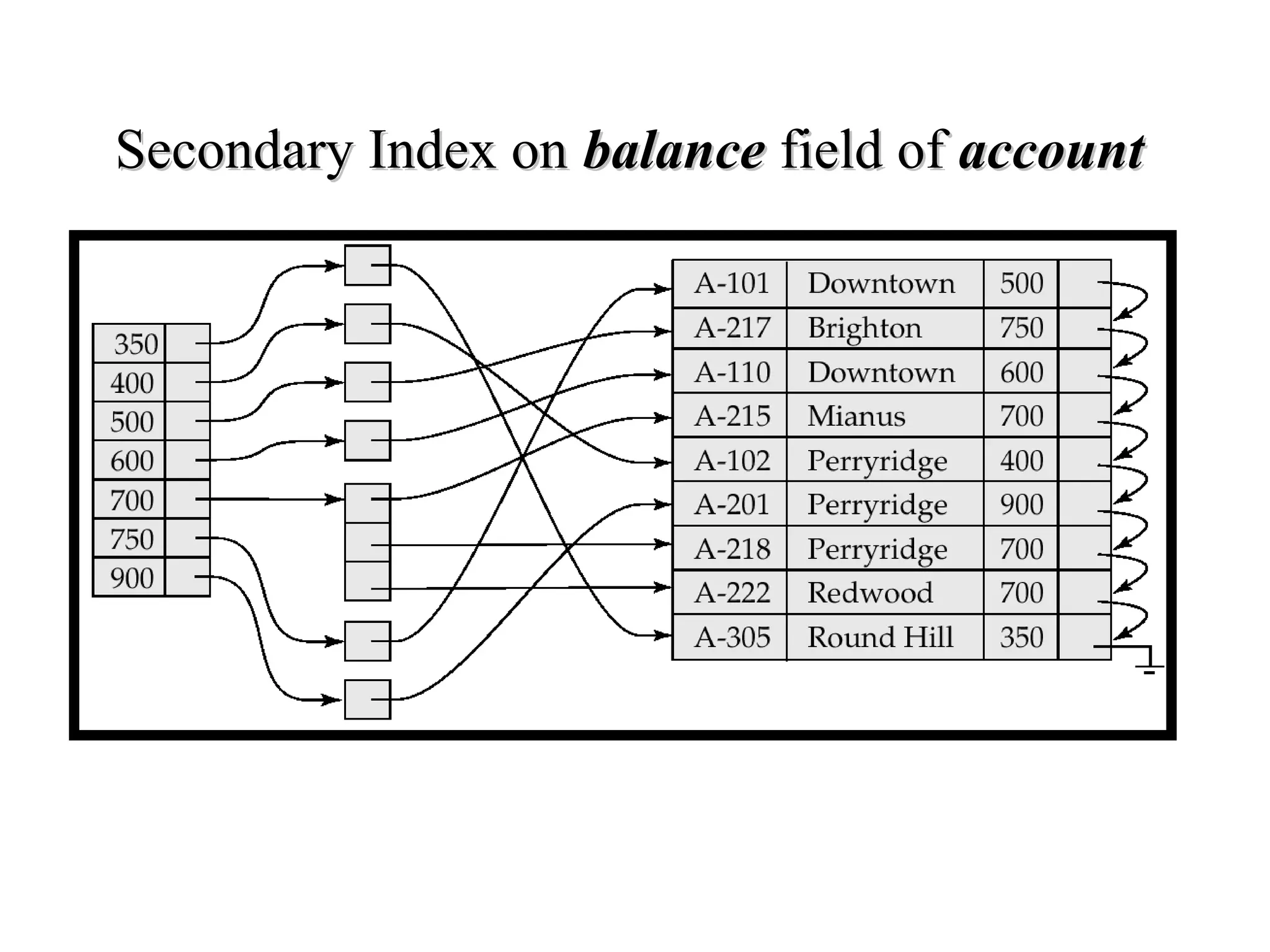

This document discusses file organization and indexing techniques for databases. It begins by describing the common operations: scans, searches, inserts, and deletes. It then outlines three main file organization techniques: heap files, sorted files using tree-based indexing, and hash-based indexing. For each technique, it gives the cost of each operation in disk I/Os. It also covers primary and secondary indices, dense versus sparse indexing, and multilevel indexing, with examples and diagrams to illustrate these concepts.
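
To make the dense versus sparse distinction concrete, here is a minimal sketch in Python. It is not taken from the slides: the page size, the key values, and all function names are hypothetical, and the "file" is just an in-memory list of pages holding sorted keys. A dense index keeps one entry per record, while a sparse index keeps one entry per page (here, the first key on each page) and relies on the file being sorted.

```python
from bisect import bisect_right

PAGE_SIZE = 3  # records per page; an assumed, deliberately tiny value


def build_pages(sorted_keys, page_size=PAGE_SIZE):
    """Split an already-sorted list of keys into fixed-size pages."""
    return [sorted_keys[i:i + page_size]
            for i in range(0, len(sorted_keys), page_size)]


def build_dense_index(pages):
    """Dense index: one entry per record, mapping key -> page number."""
    return {key: page_no
            for page_no, page in enumerate(pages)
            for key in page}


def build_sparse_index(pages):
    """Sparse index: one entry per page, holding the first key on that page."""
    return [page[0] for page in pages]


def dense_lookup(dense_index, key):
    """Return the page number holding key, or None if the key is absent."""
    return dense_index.get(key)


def sparse_lookup(sparse_index, pages, key):
    """Find the last page whose first key is <= key, then check that page."""
    page_no = bisect_right(sparse_index, key) - 1
    if page_no < 0:
        return None  # key is smaller than every key in the file
    return page_no if key in pages[page_no] else None


keys = [5, 10, 15, 20, 25, 30, 35]
pages = build_pages(keys)
dense = build_dense_index(pages)
sparse = build_sparse_index(pages)
```

In this sketch the sparse index has one entry per page and the dense index one entry per record, so the sparse index is smaller, but a sparse lookup must still read the candidate page to confirm the key is there. Building a second sparse index over the first one would give a simple picture of multilevel indexing.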