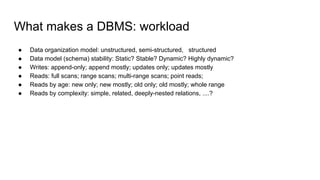

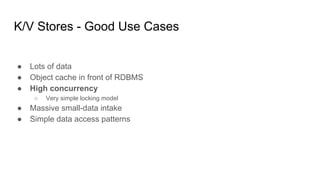

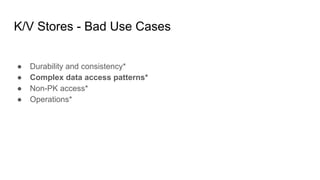

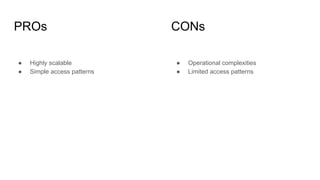

This document provides an overview of heterogeneous persistence and different database management systems (DBMS). It discusses why a single DBMS is often not sufficient and describes different types of DBMS including relational databases, key-value stores, and columnar databases. For each type, it outlines good and bad use cases, examples, considerations, and pros and cons. The document aims to help readers understand the different flavors of DBMS and how to choose the right ones for their specific data and access needs.

![Fulltext Search: Solr](https://image.slidesharecdn.com/heterogeneouspersistence-160422055632/85/Heterogenous-Persistence-194-320.jpg)

● Lucene based

● Quite cryptic query interface - Innovator’s Dilemma

● Support for SQL-based queries in 6.1

● Structured schema: data types need to be predefined

● Written in Java, so JVM limitations apply, e.g. GC pauses

● Near real-time indexing - DataImportHandler (DIH)

● Rich document handling - PDF, doc[x]

● SolrCloud support for sharding and replication
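To make the "cryptic query interface" point concrete, here is a minimal sketch of how a request to Solr's standard `/select` handler might be assembled. The helper name `build_solr_select_url`, the core name `articles`, and the field names `content` and `type` are assumptions made for illustration; they do not come from the slides. The sketch only builds the URL and does not contact a server.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_solr_select_url(base_url, core, text, field="content", filters=None, rows=10):
    """Build a URL for Solr's /select handler (hypothetical helper, illustration only)."""
    # Escape backslashes and double quotes so the text stays inside one phrase query.
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    params = [
        ("q", f'{field}:"{escaped}"'),  # main query: phrase match on a single field
        ("rows", str(rows)),            # maximum number of documents to return
        ("wt", "json"),                 # response writer: ask for JSON
    ]
    # Each filter query (fq) narrows the result set without affecting scoring.
    for fq in filters or []:
        params.append(("fq", fq))
    return f"{base_url.rstrip('/')}/solr/{core}/select?{urlencode(params)}"


if __name__ == "__main__":
    # Assumed local Solr instance on its default port, with an assumed core name.
    url = build_solr_select_url(
        "http://localhost:8983", "articles", "heterogeneous persistence",
        filters=["type:pdf"],
    )
    print(url)
    print(parse_qs(urlsplit(url).query))
```

Even this small request mixes Lucene field syntax (`field:"phrase"`) with handler parameters such as `fq`, `rows`, and `wt`, which is part of why the interface can feel opaque at first.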

![Fulltext Search: Sphinx Search](https://image.slidesharecdn.com/heterogeneouspersistence-160422055632/85/Heterogenous-Persistence-205-320.jpg)

● Structured data

● MySQL protocol - SphinxQL

● Durable indexes via binary logs

● Real-time indexes via MySQL queries

● Distributed index for scaling

● No native support for replication; index files are copied externally, e.g. via rsync

● Very good documentation

● Fastest full indexing/reindexing [?]
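Because Sphinx speaks the MySQL wire protocol, both searching and updating a real-time index can be expressed as SQL-like SphinxQL statements. The sketch below only builds such statements. The helper names, the index name `articles_rt`, and the field names are hypothetical, and the `%s` placeholders assume a Python MySQL driver that interpolates parameters on the client side. Sending the statements would require such a driver connected to searchd's SphinxQL listener (port 9306 by default).

```python
import re

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _check_identifier(name):
    """Reject index or field names that are not plain identifiers."""
    if not _IDENTIFIER.match(name):
        raise ValueError(f"invalid identifier: {name!r}")
    return name


def sphinxql_match(index, text, limit=10):
    """Return (sql, params) for a full-text search using SphinxQL's MATCH()."""
    _check_identifier(index)
    sql = f"SELECT id FROM {index} WHERE MATCH(%s) LIMIT {int(limit)}"
    return sql, (text,)


def sphinxql_insert(index, doc_id, fields):
    """Return (sql, params) for adding one document to a real-time index."""
    _check_identifier(index)
    columns = ["id"] + [_check_identifier(name) for name in fields]
    placeholders = ", ".join(["%s"] * len(columns))
    sql = f"INSERT INTO {index} ({', '.join(columns)}) VALUES ({placeholders})"
    return sql, (doc_id, *fields.values())


if __name__ == "__main__":
    # Hypothetical real-time index and fields, for illustration only.
    print(sphinxql_insert("articles_rt", 1, {"title": "Hello", "content": "World"}))
    print(sphinxql_match("articles_rt", "heterogeneous persistence", limit=5))
```

Returning the statement and its parameters separately keeps user-supplied text out of the SQL string, while index and field names are validated because they cannot be passed as parameters.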
