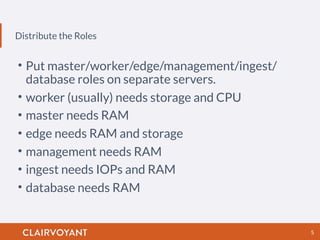

The document presents recommendations for optimizing Hadoop operations and highlights the speaker's expertise and experience. Key points include distributing server roles effectively, the importance of monitoring and automation, and strategies for performance tuning. It also emphasizes the need to understand application workloads and to avoid premature optimization.