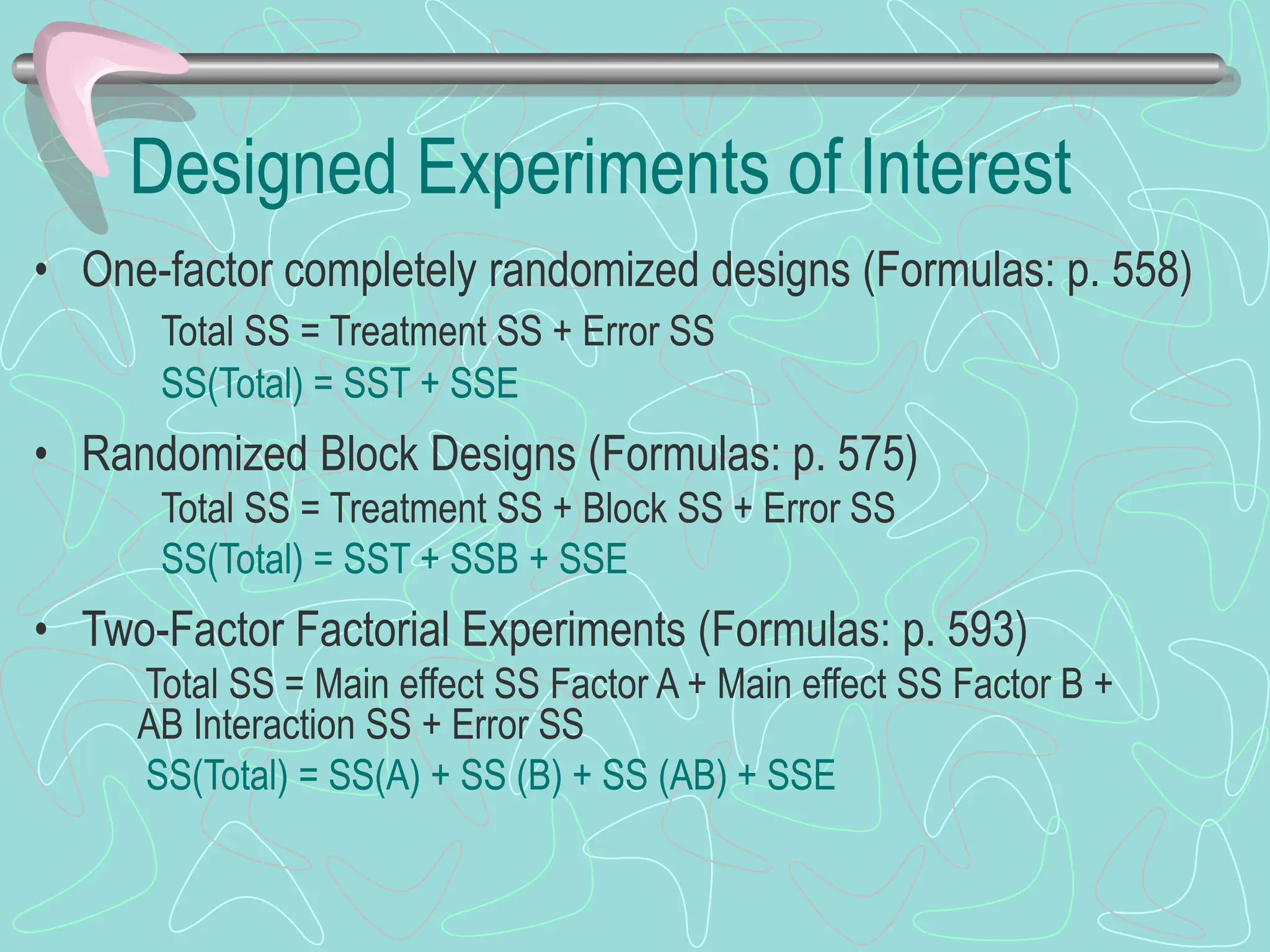

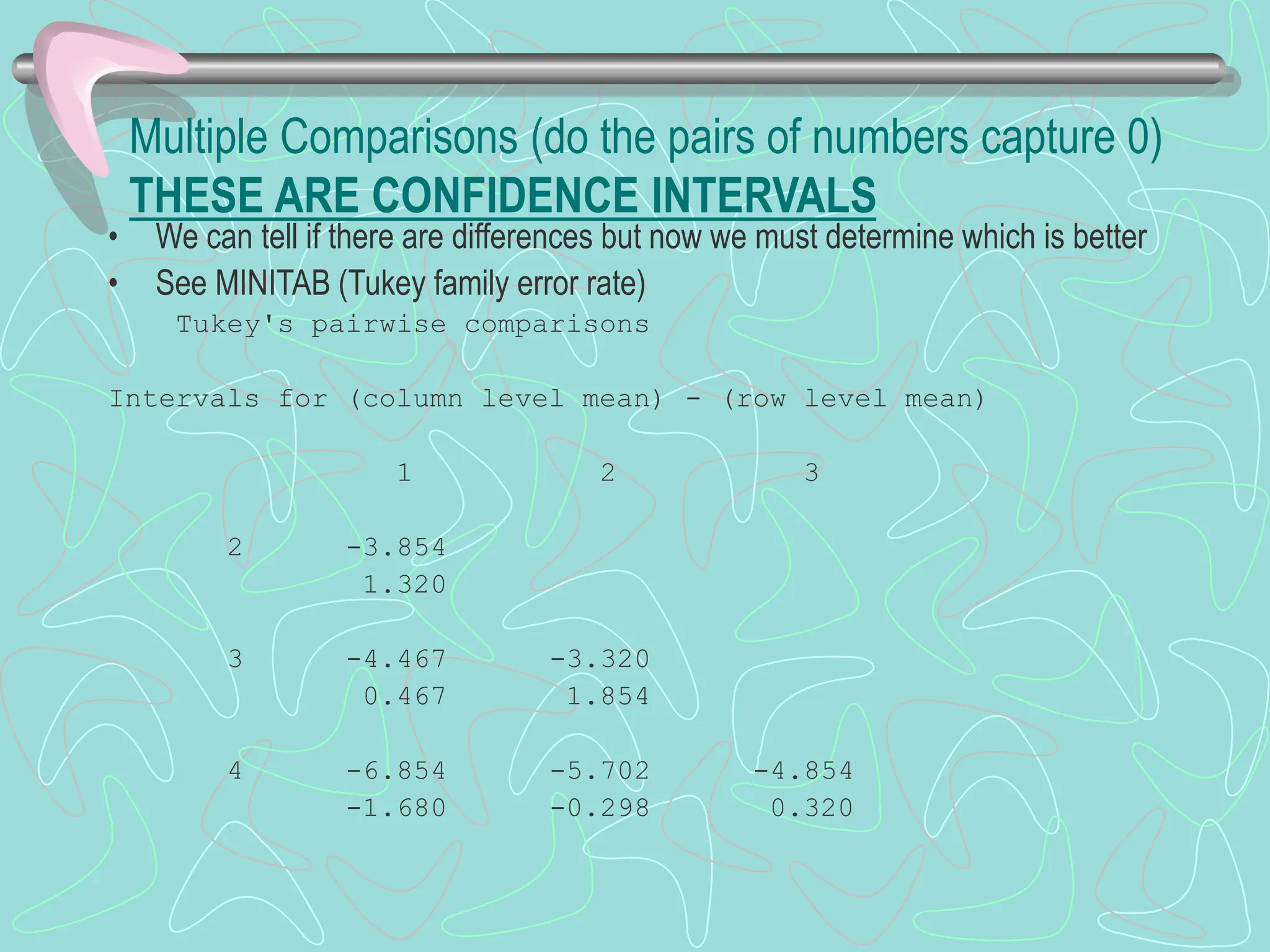

The document presents Analysis of Variance (ANOVA) as a method for comparing means across multiple groups in a single test, which avoids the inflated Type I error rate that comes from running multiple t-tests. It explains between-group and within-group variance, the null and alternative hypotheses, and the experimental designs to which ANOVA can be applied. It also emphasizes the variance ratio (the F statistic) as the basis for judging whether treatment effects are significant, and describes how to carry out multiple comparisons using confidence intervals.
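
To make the variance ratio concrete, here is a minimal sketch of a one-way ANOVA computed by hand, using only the Python standard library. The function names (`one_way_anova_f`, `familywise_error`) and the toy data are illustrative assumptions, not taken from the document. The second function shows why repeated t-tests are a problem: it computes the chance of at least one false positive across `m` tests, under the simplifying assumption that the tests are independent.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of samples (hypothetical helper)."""
    k = len(groups)                              # number of groups
    n_total = sum(len(g) for g in groups)        # total number of observations
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k

    ms_between = ss_between / df_between         # mean square between groups
    ms_within = ss_within / df_within            # mean square within groups
    return ms_between / ms_within, df_between, df_within


def familywise_error(alpha, m):
    """Probability of at least one Type I error in m tests, assuming independence."""
    return 1 - (1 - alpha) ** m


# Toy data, invented for illustration: three groups of three observations each.
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_stat, df_b, df_w = one_way_anova_f(groups)
print(f"F = {f_stat:.2f} on ({df_b}, {df_w}) degrees of freedom")

# Three groups give three pairwise t-tests at alpha = 0.05.
print(f"Family-wise error rate: {familywise_error(0.05, 3):.4f}")
```

For this toy data the between-group mean square is 13 and the within-group mean square is 1, so the ratio is 13. A large ratio like this suggests the group means differ by more than within-group noise would explain; the F distribution with the reported degrees of freedom is what turns the ratio into a p-value.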