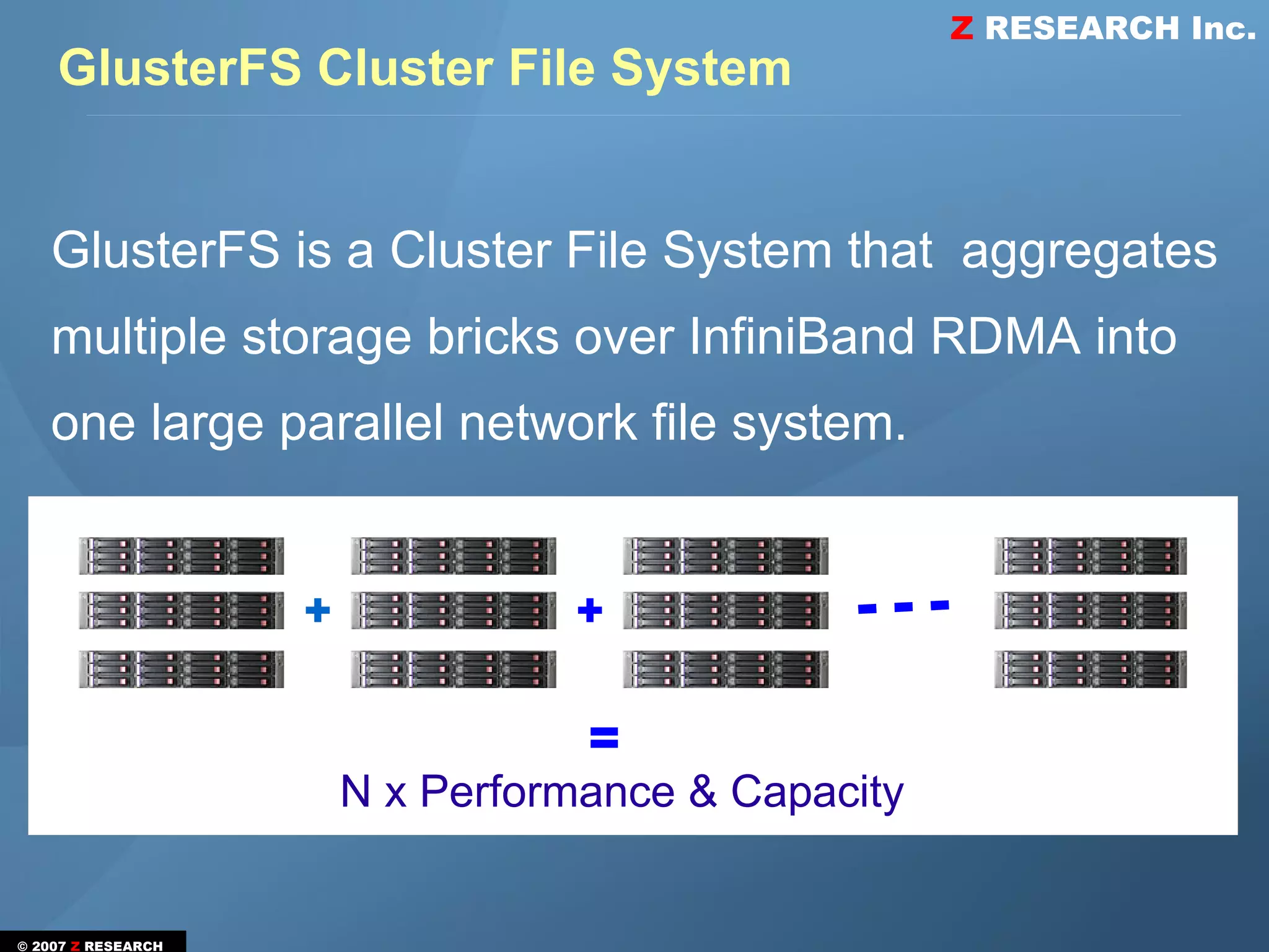

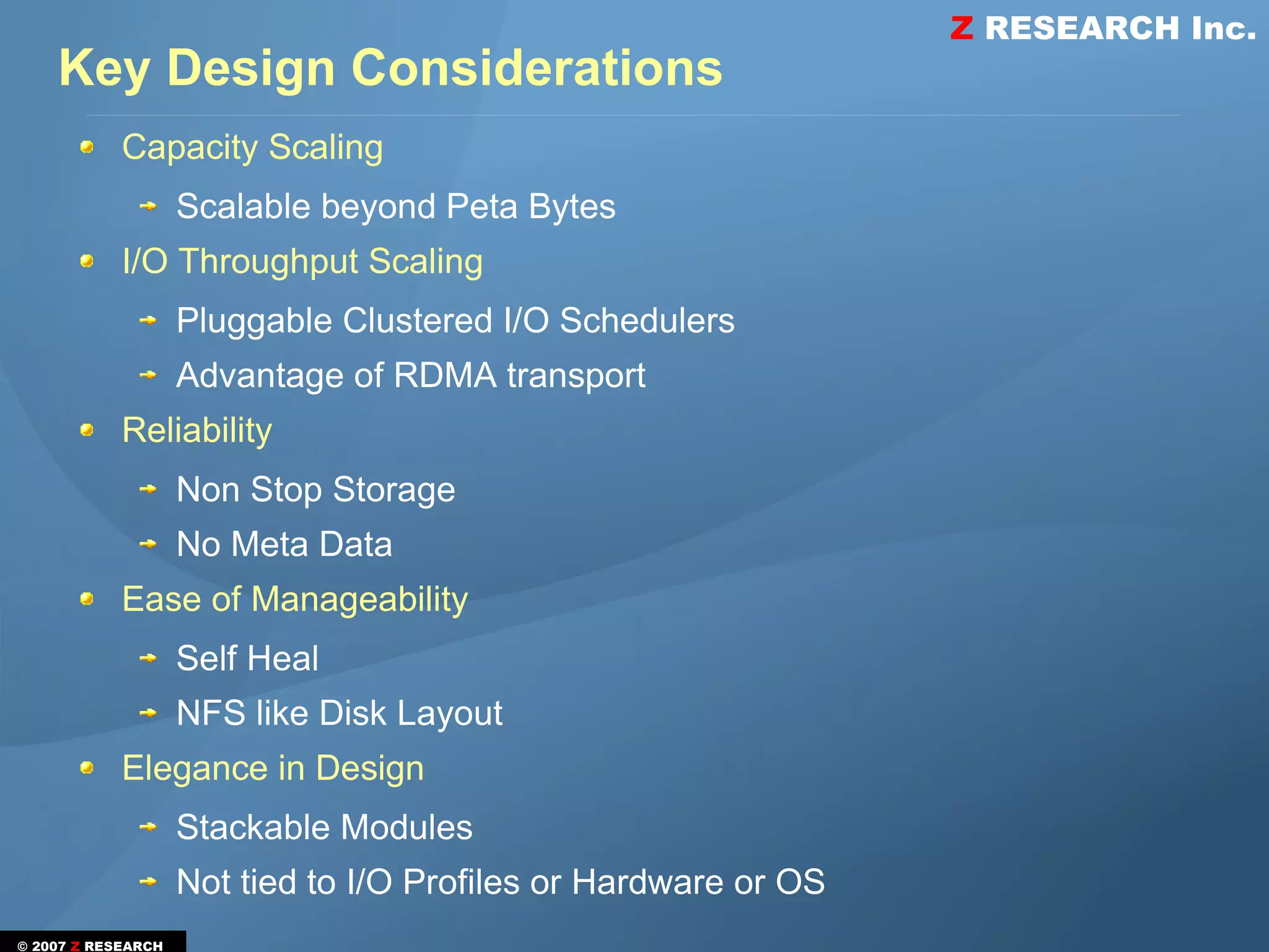

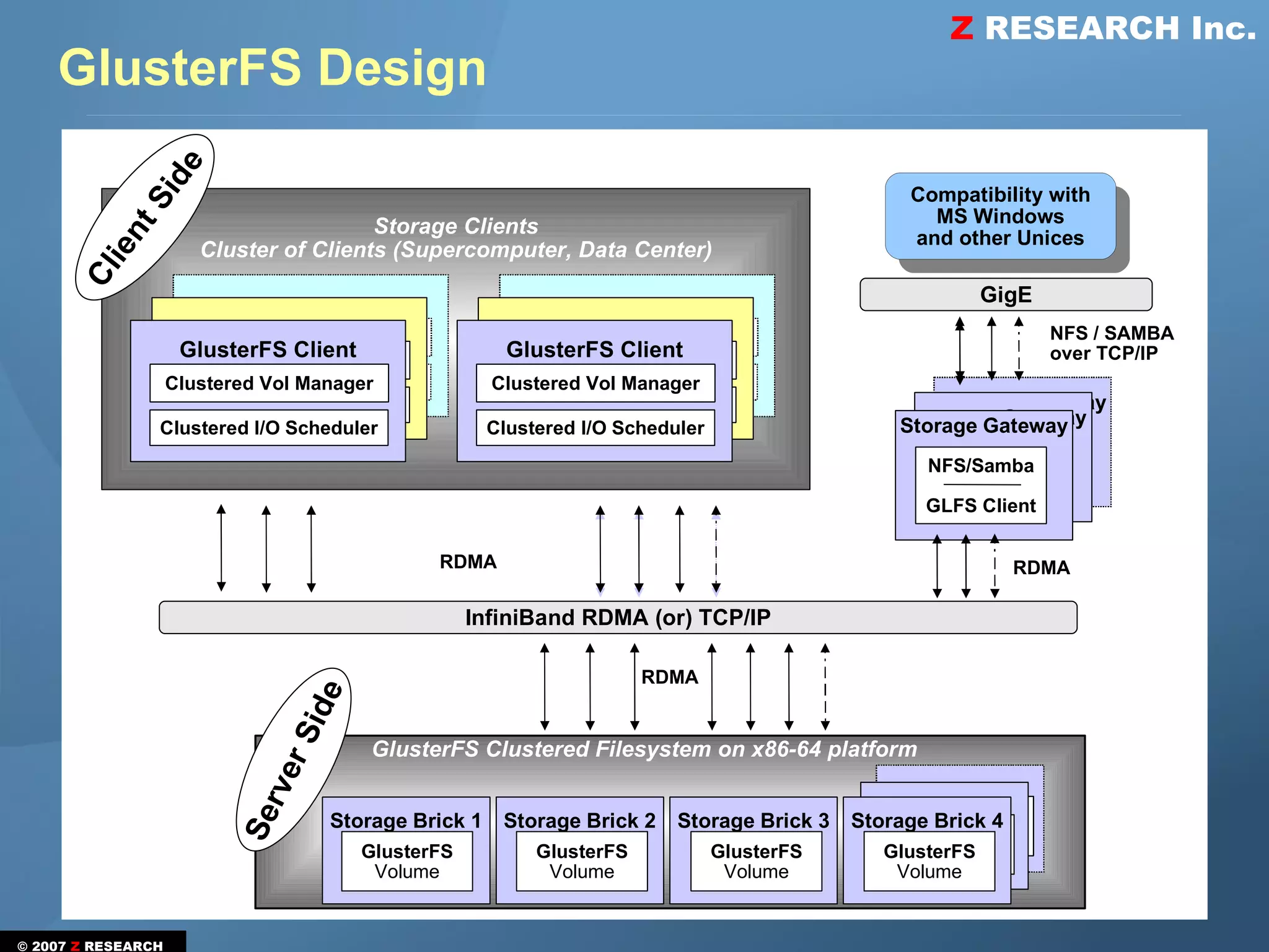

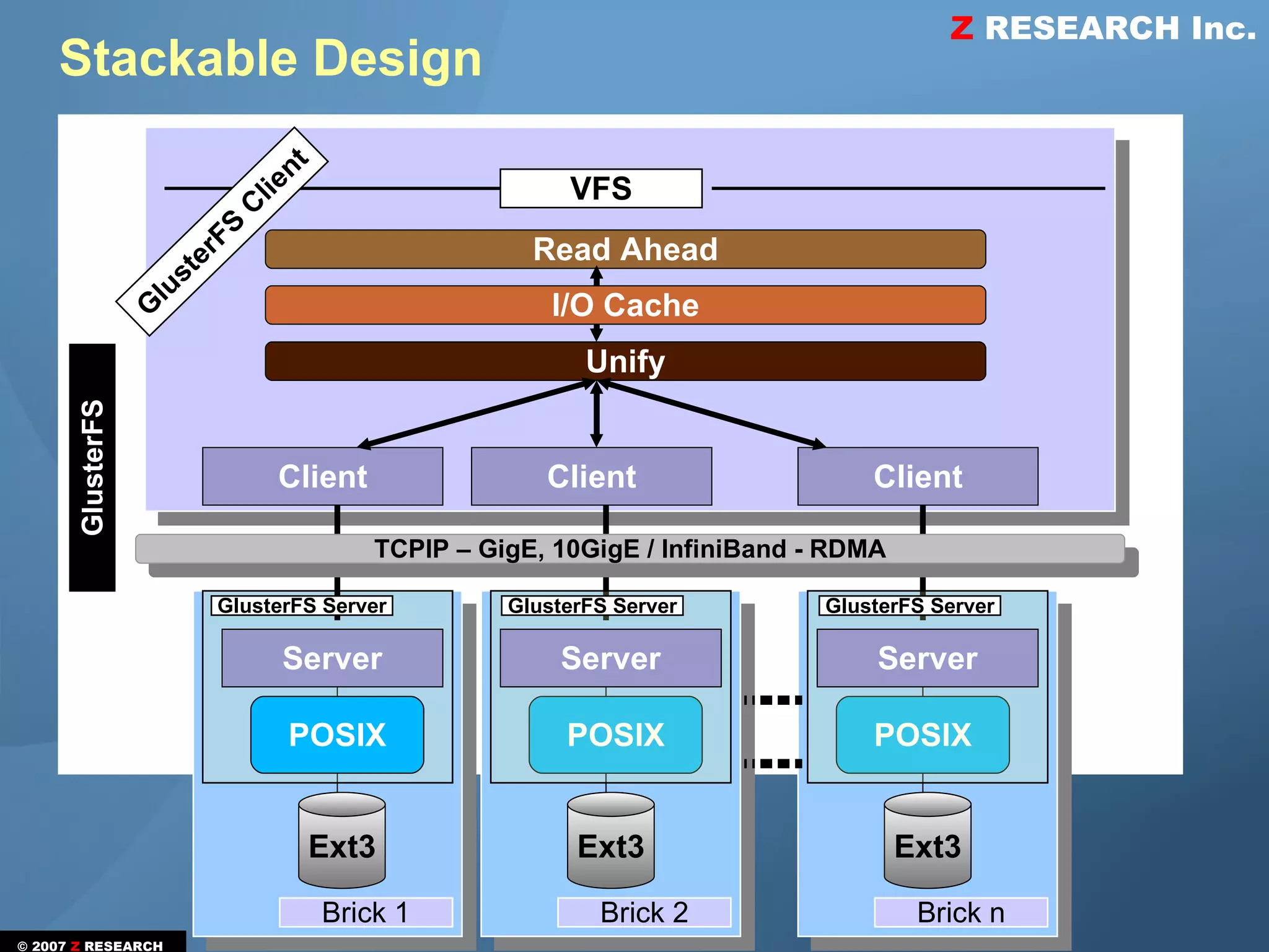

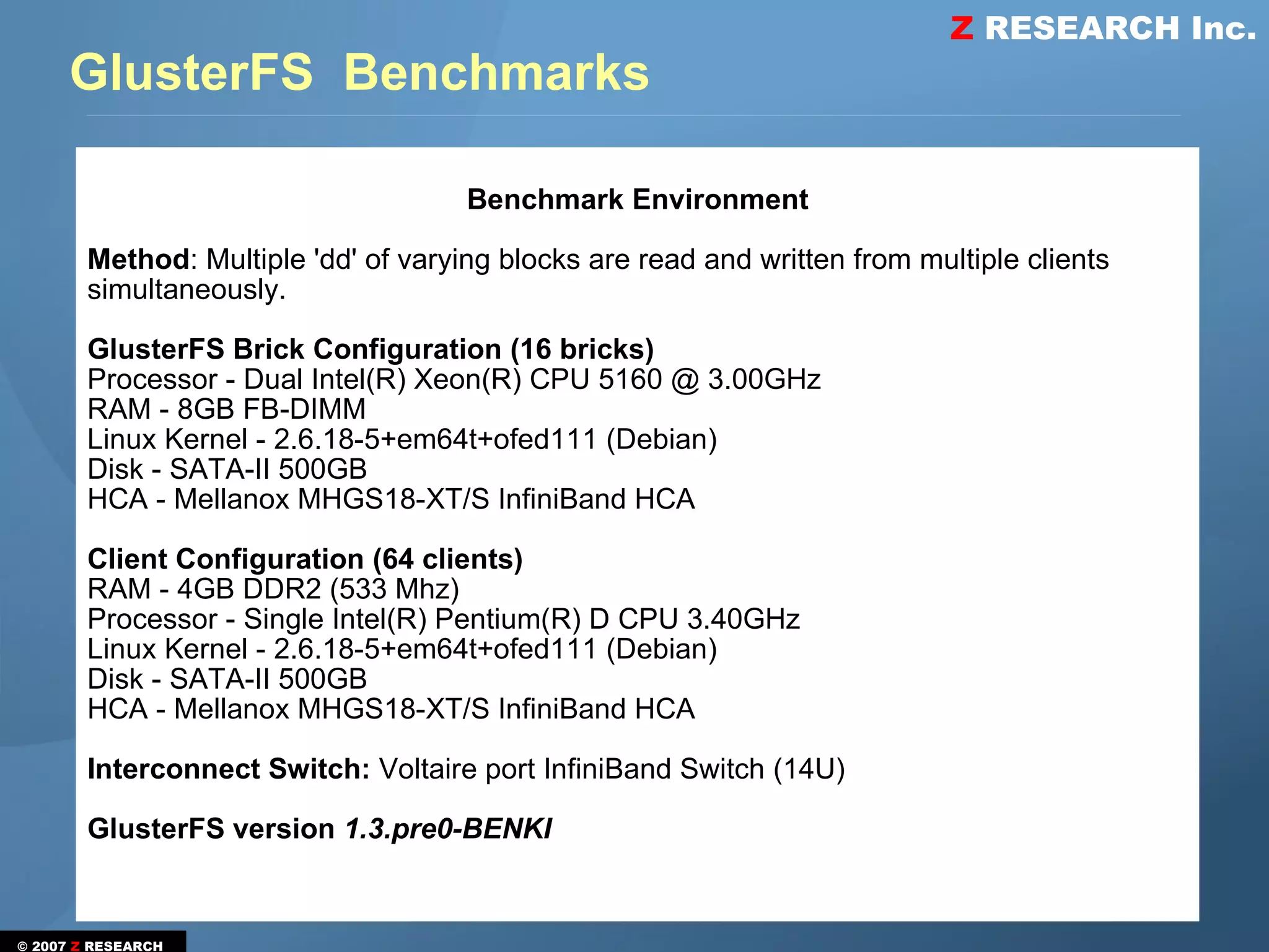

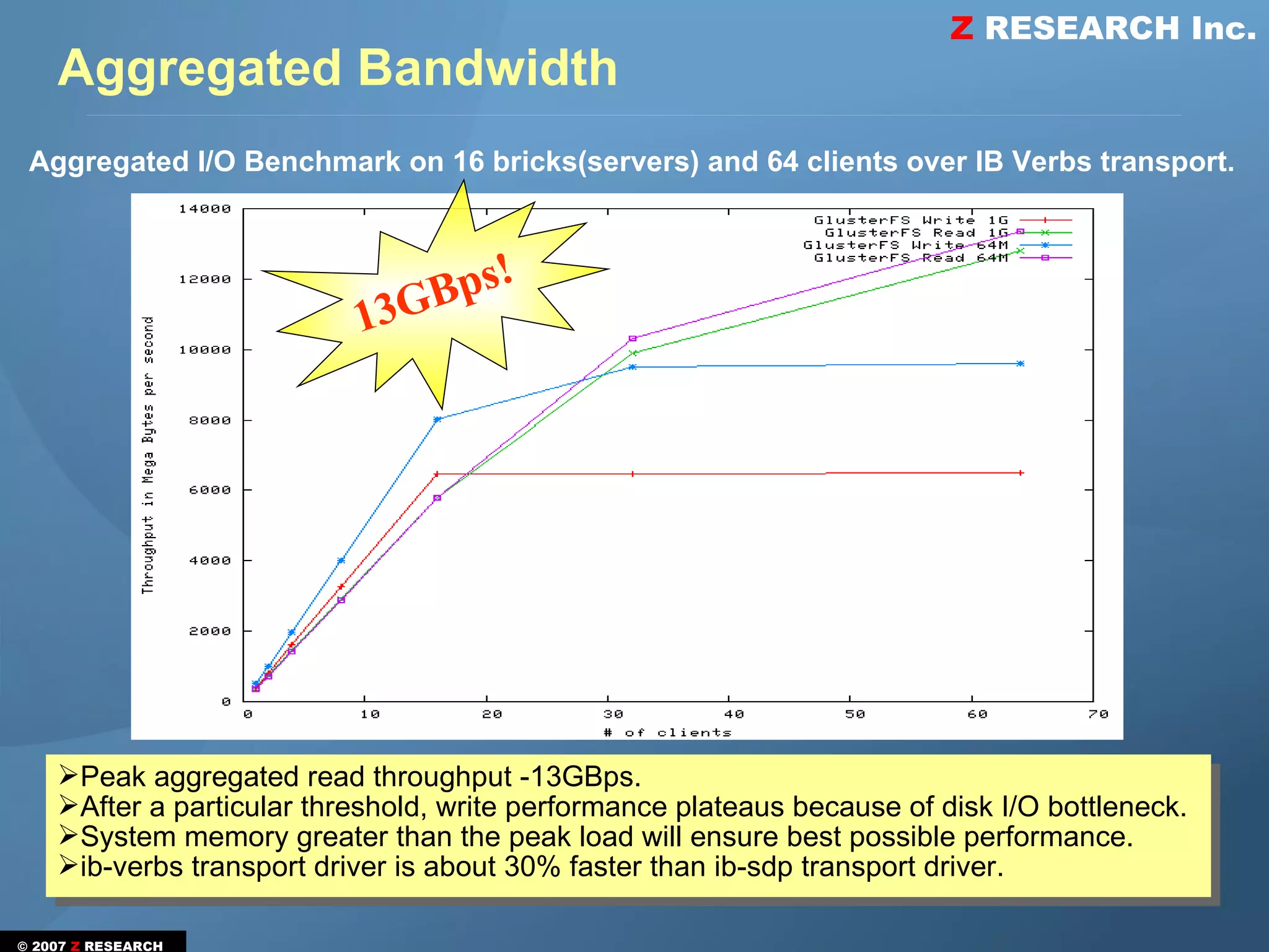

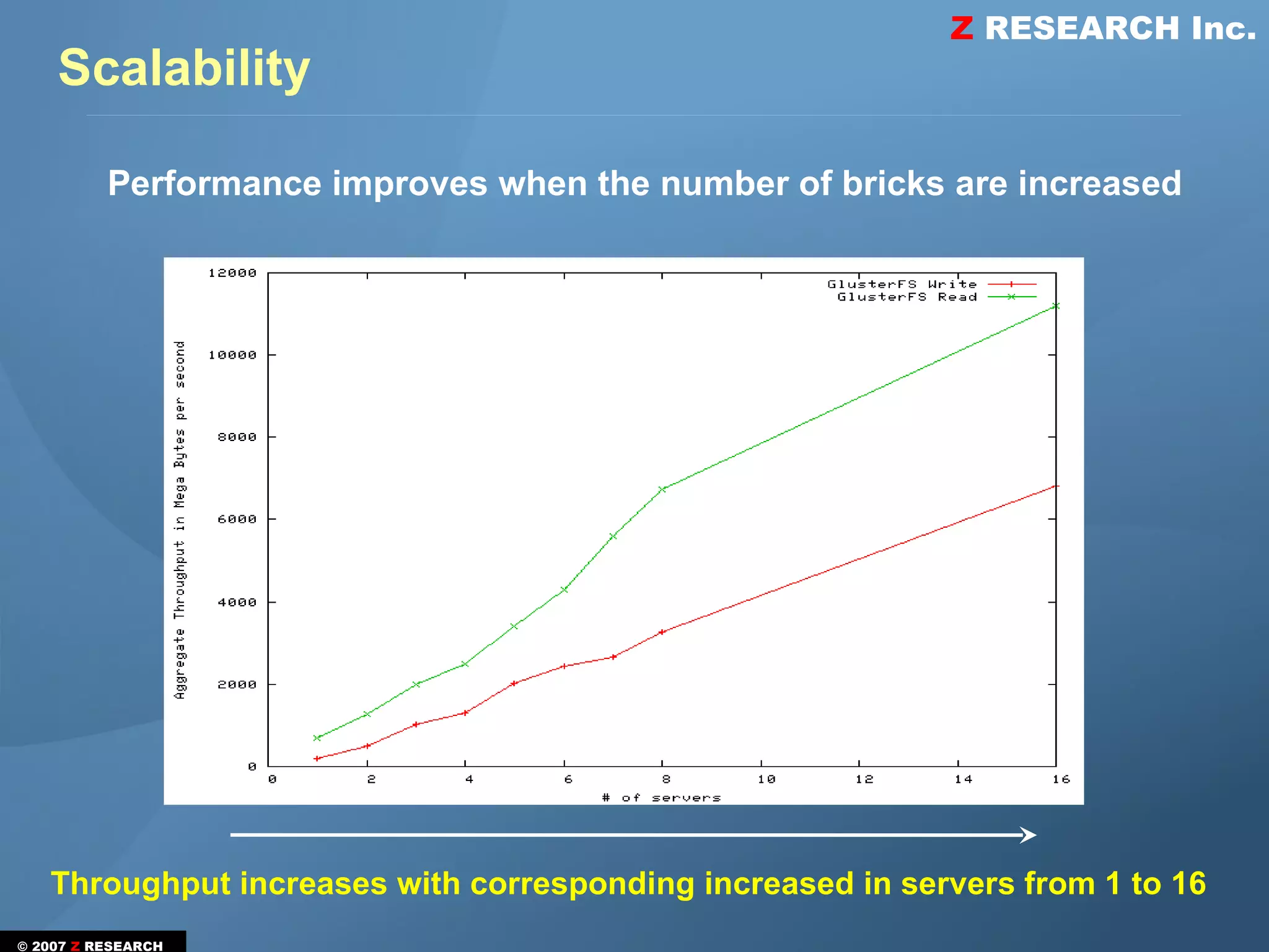

GlusterFS is a clustered file system that aggregates multiple storage servers over InfiniBand RDMA into one large parallel network file system. It was designed for three goals: scalable I/O throughput and capacity beyond petabytes, reliability through a non-stop storage architecture with no single point of failure, and ease of management through self-healing. In benchmark tests with 16 storage servers and 64 clients, it reached a peak aggregate read throughput of 13 GBps over the InfiniBand verbs transport.
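
To put the benchmark figure in per-node terms, the aggregate can be divided by the number of servers and clients. The sketch below is only a back-of-the-envelope calculation: it assumes "GBps" means gigabytes per second and that load was spread evenly across nodes, neither of which the benchmark description states. The function name `per_node_throughput` is a hypothetical helper, not part of GlusterFS.

```python
def per_node_throughput(aggregate_gbps: float, nodes: int) -> float:
    """Return the average share of the aggregate throughput per node, in GBps."""
    if nodes <= 0:
        raise ValueError("nodes must be positive")
    return aggregate_gbps / nodes


# Figures taken from the benchmark description above.
AGGREGATE_READ_GBPS = 13.0
STORAGE_SERVERS = 16
CLIENTS = 64

# Assumption: load is evenly distributed, so these are averages, not measurements.
per_server = per_node_throughput(AGGREGATE_READ_GBPS, STORAGE_SERVERS)
per_client = per_node_throughput(AGGREGATE_READ_GBPS, CLIENTS)

print(f"per server: {per_server:.4f} GBps")  # 13 / 16 = 0.8125
print(f"per client: {per_client:.4f} GBps")  # 13 / 64 = 0.2031
```

Under these assumptions, each storage server served roughly 0.81 GBps on average and each client read roughly 0.20 GBps at peak. These are averages derived from the aggregate, not per-node measurements.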