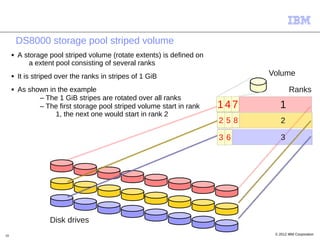

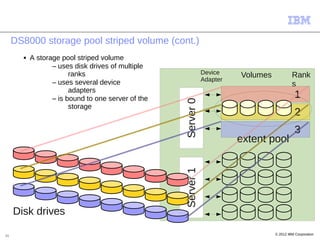

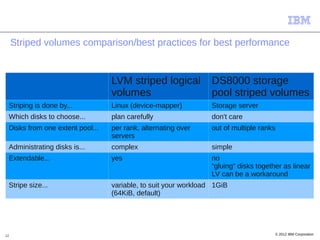

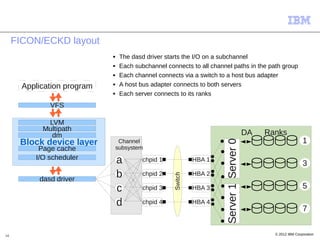

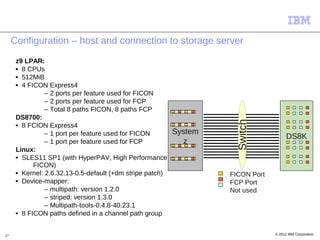

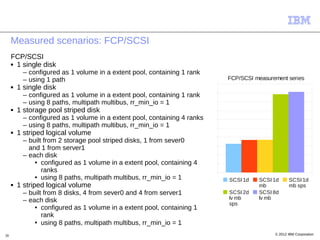

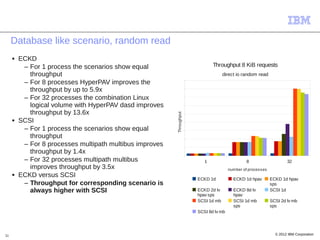

This document discusses disk I/O performance for Linux on System z, covering disk configurations and management options such as logical volumes, multipathing, and caching strategies. It contrasts the FICON/ECKD and FCP/SCSI protocols and presents best practices for optimizing performance and availability in a storage environment. It also outlines the key components involved in disk I/O processing, including the roles of the Linux kernel and device drivers.