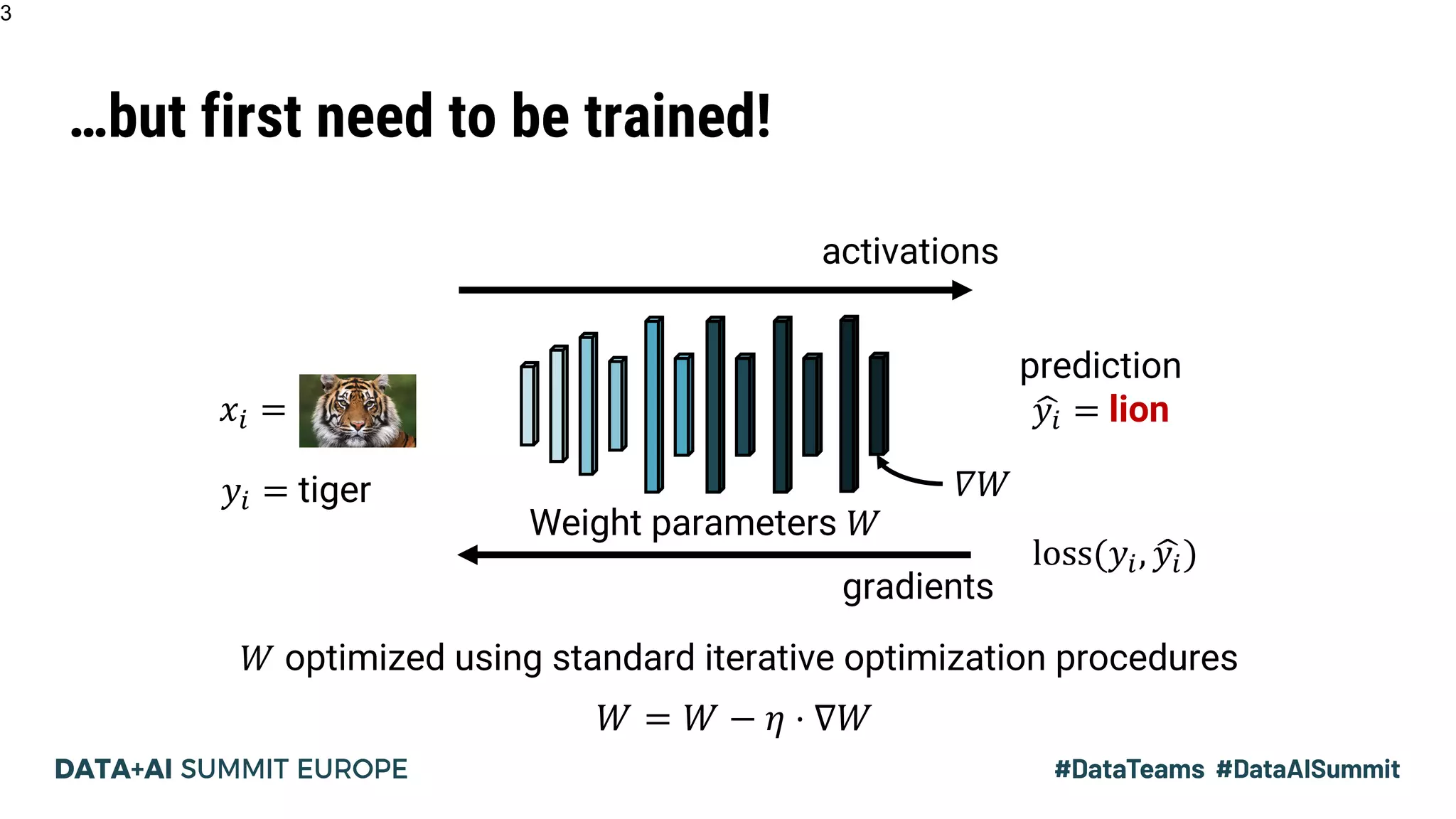

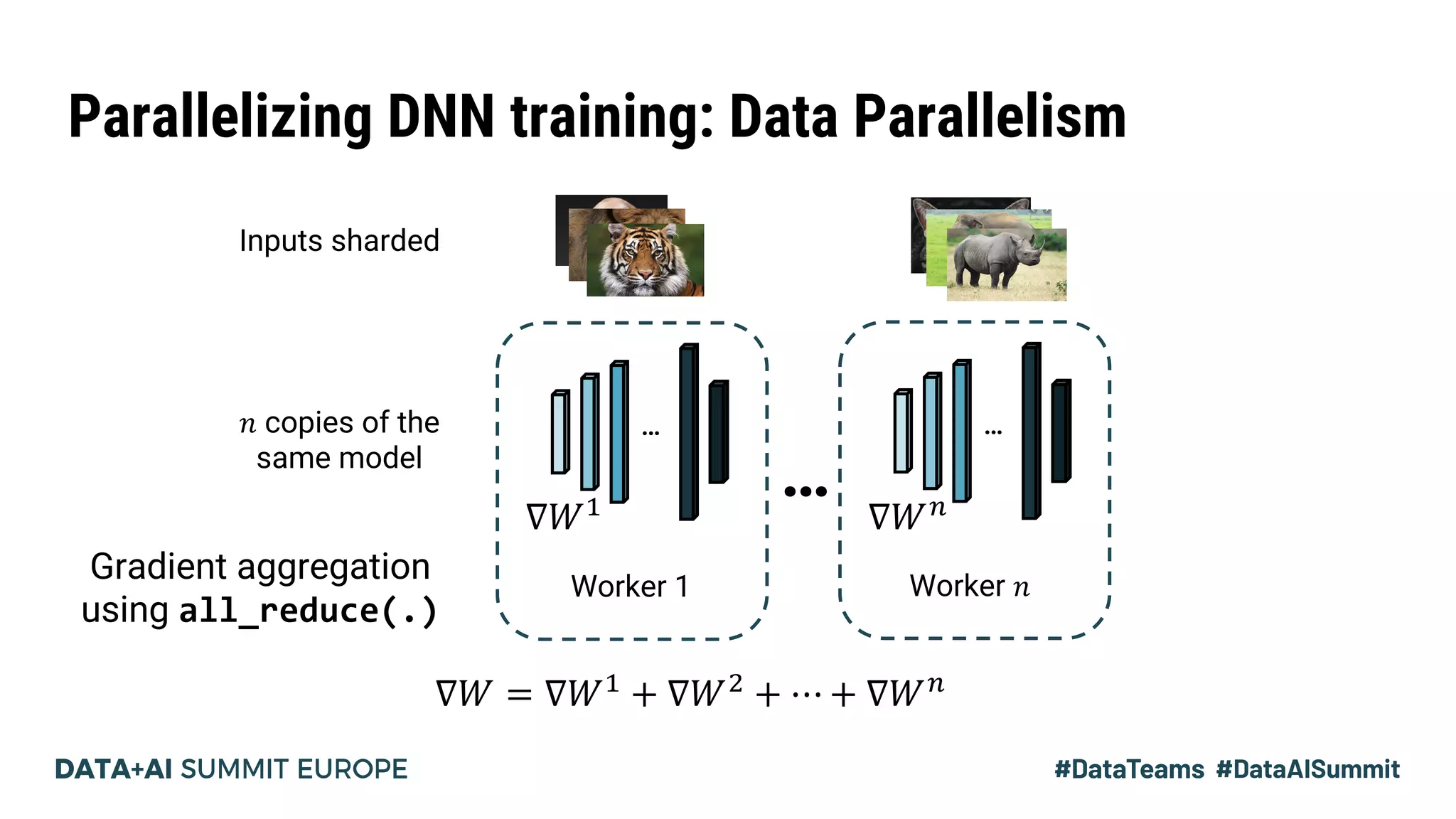

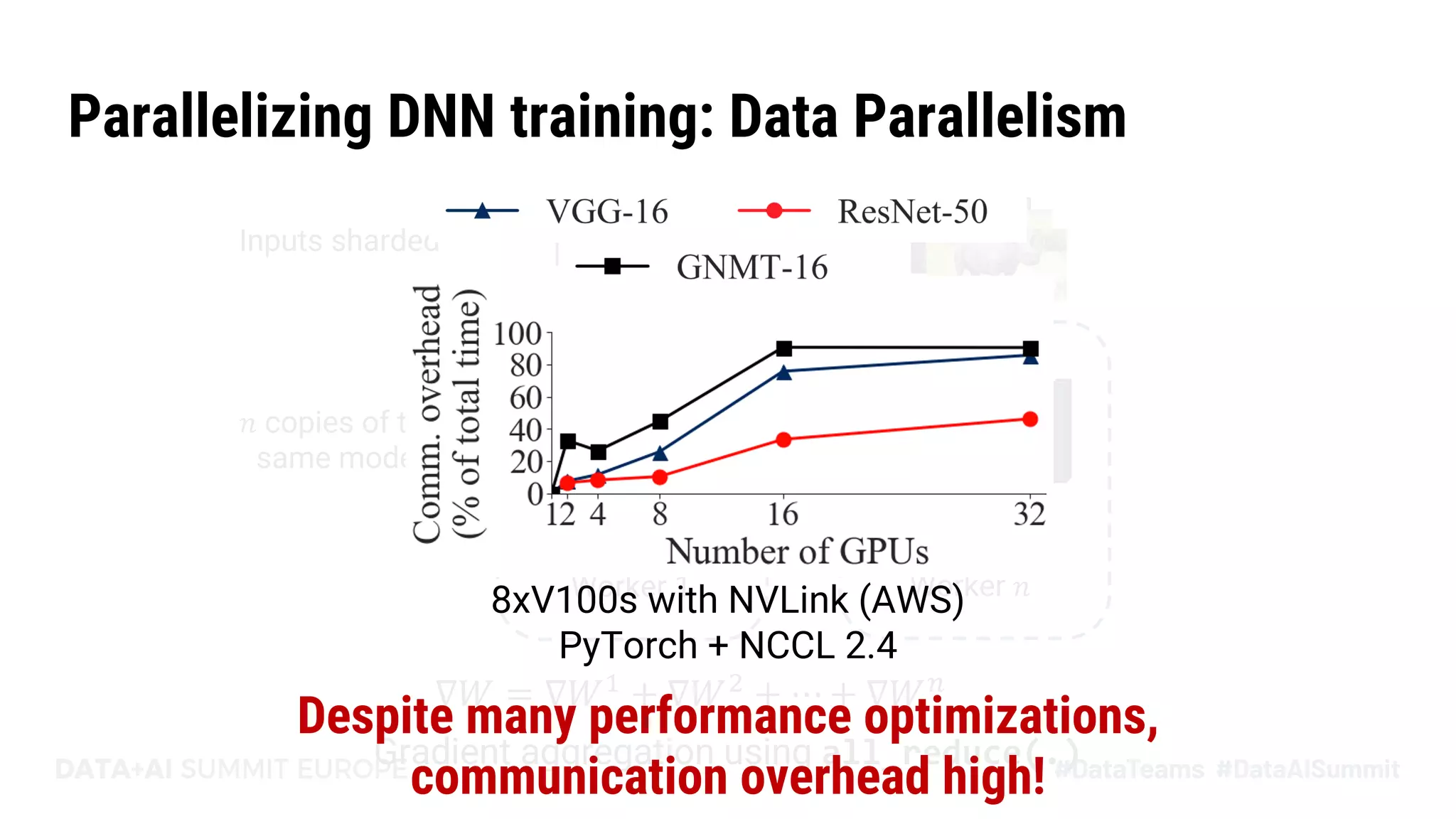

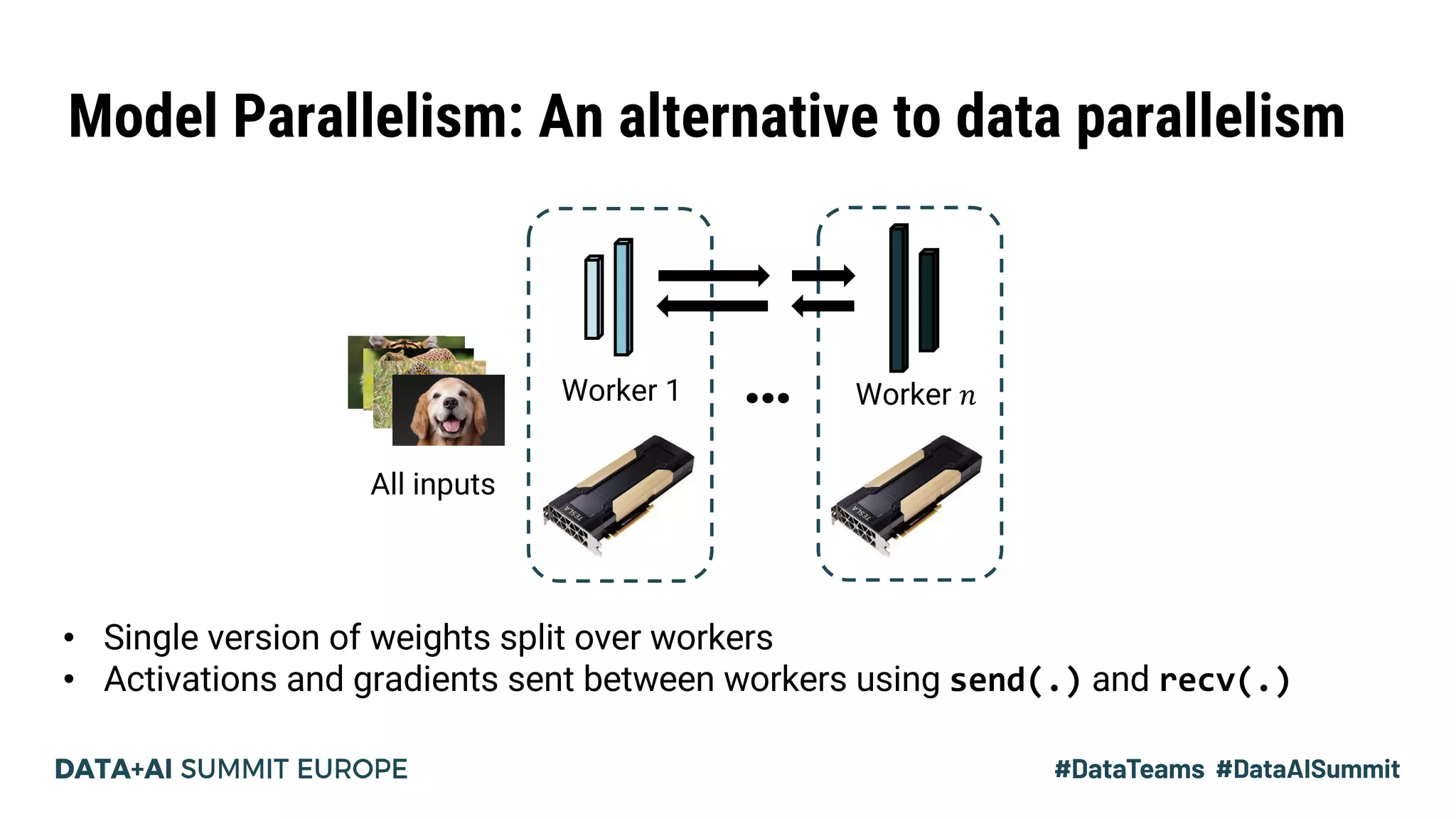

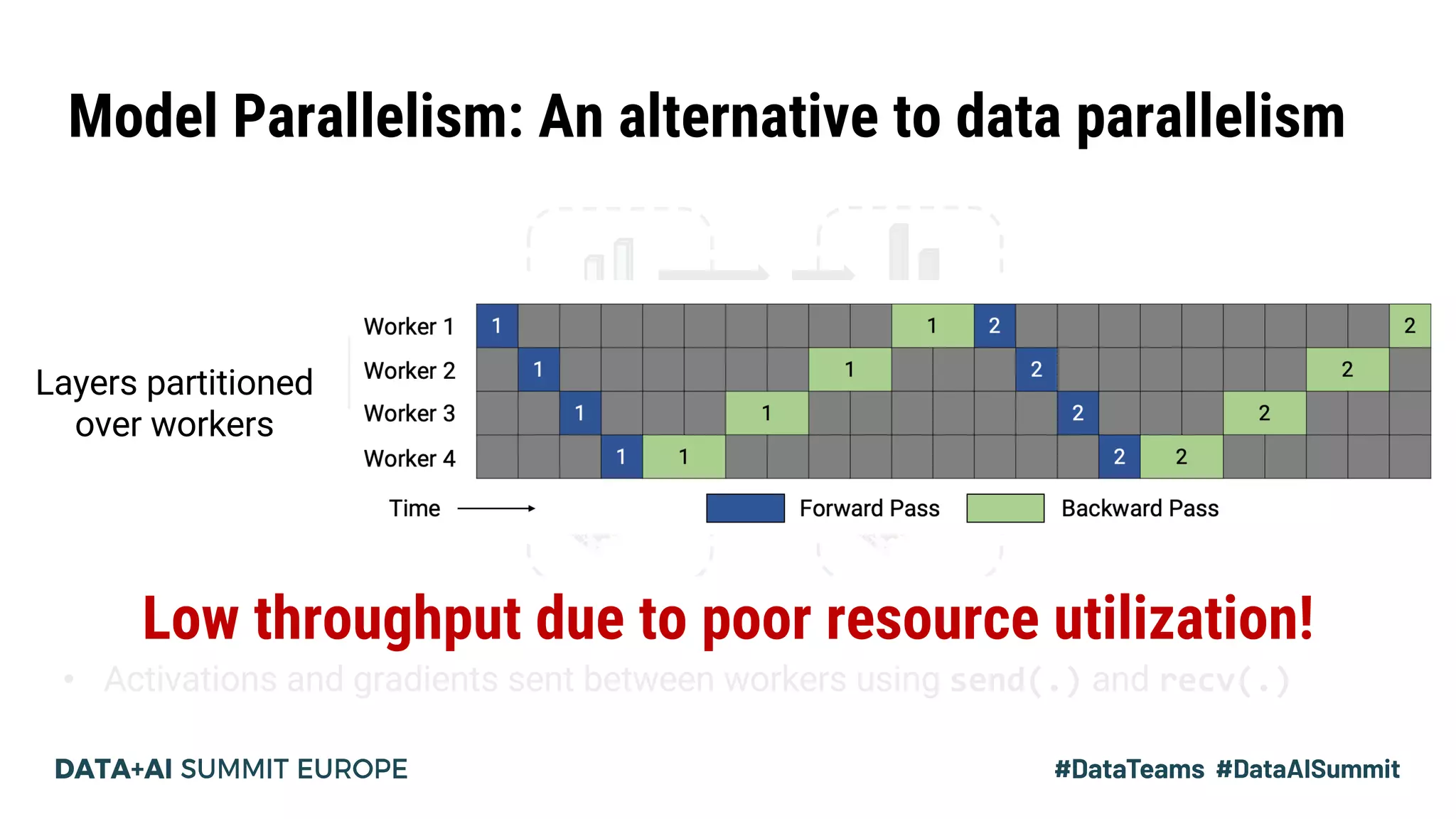

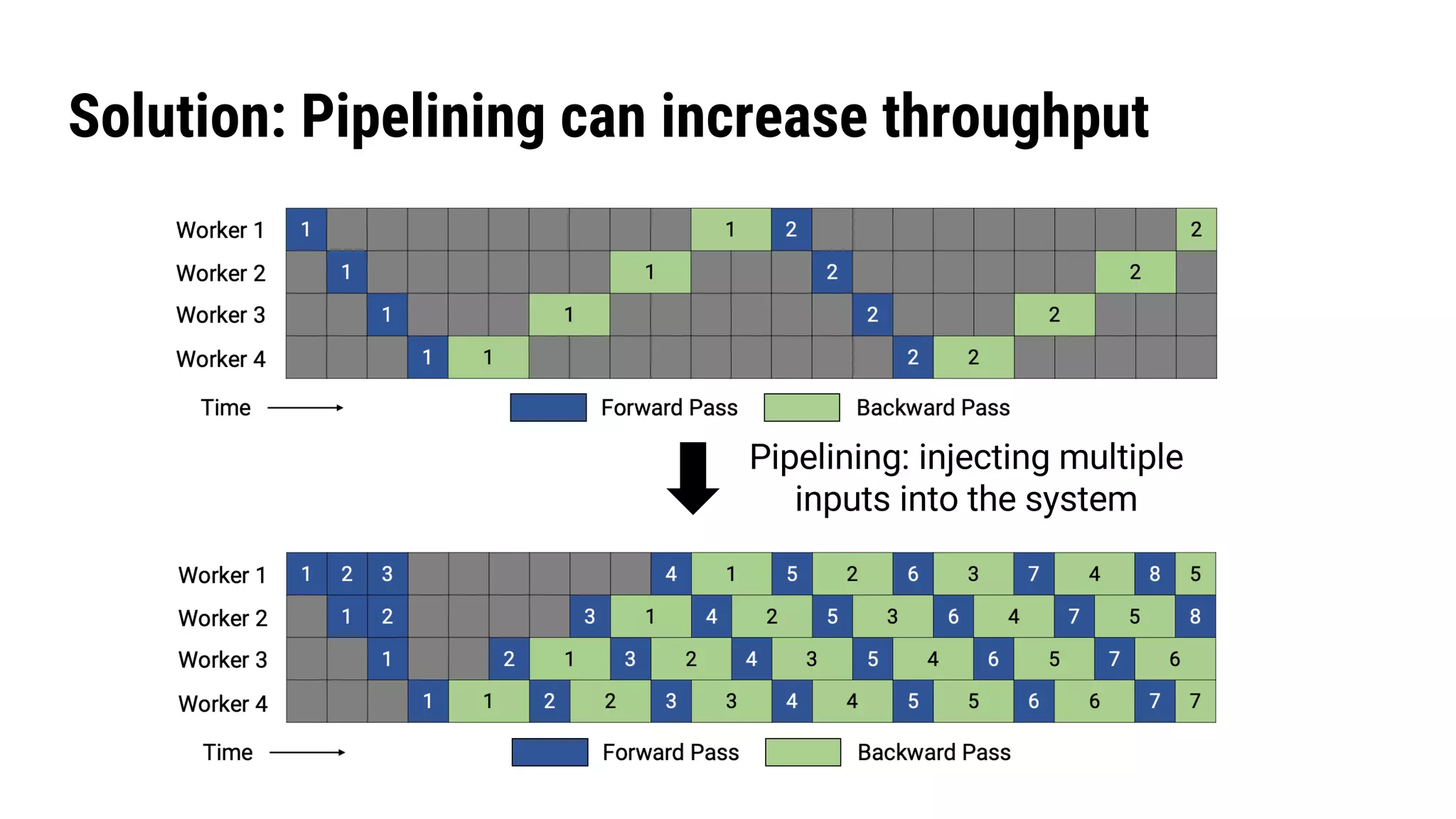

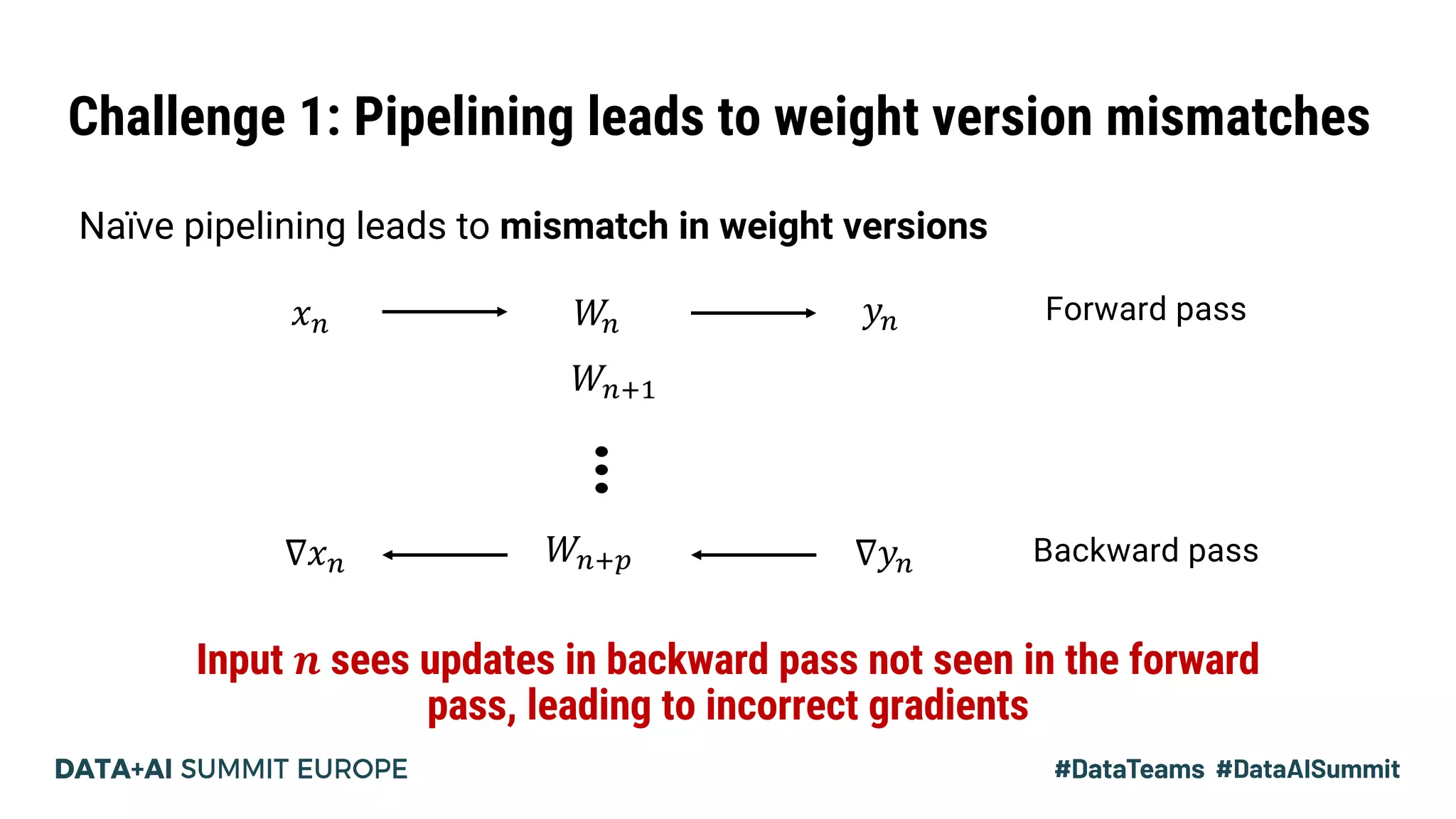

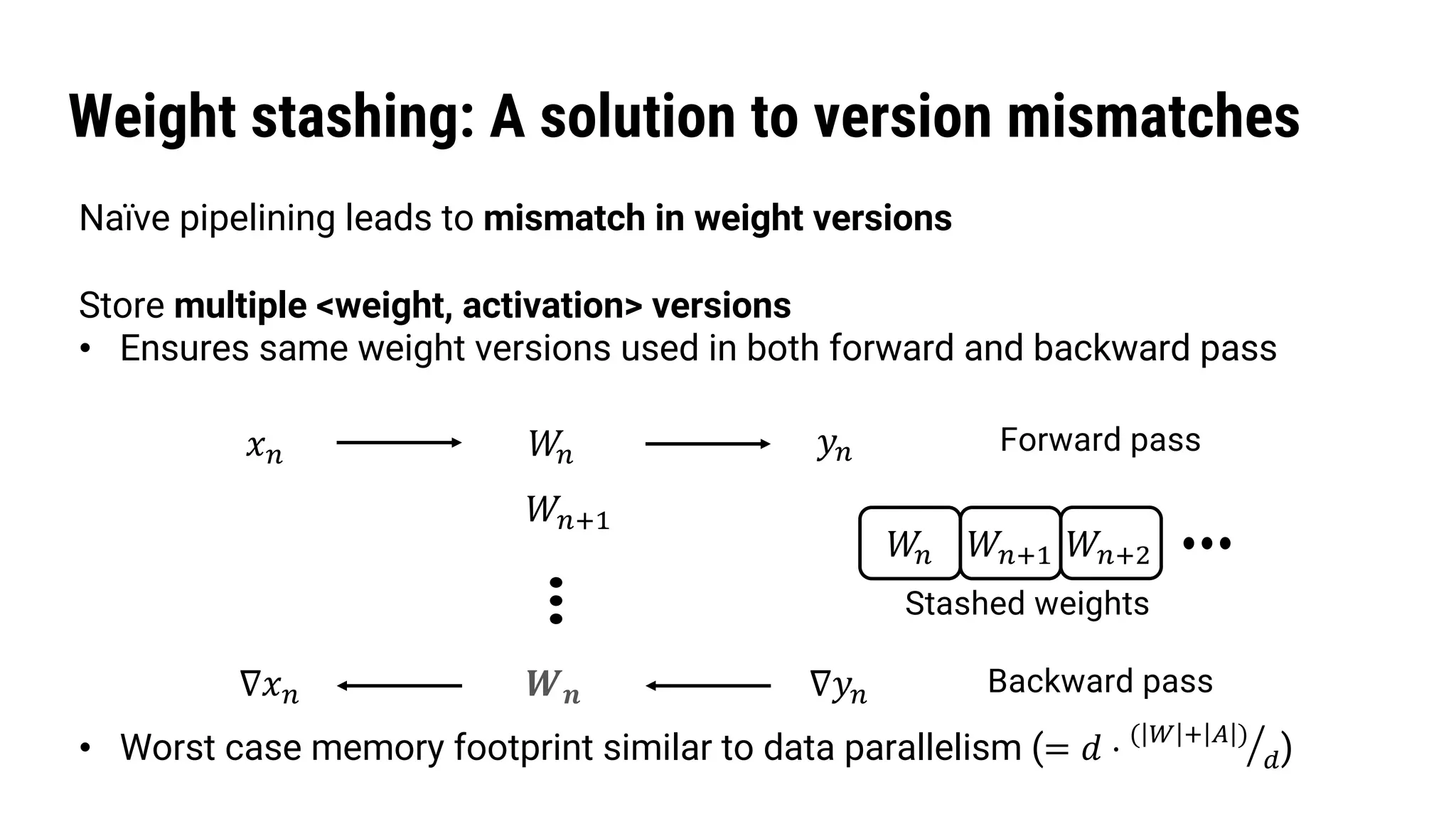

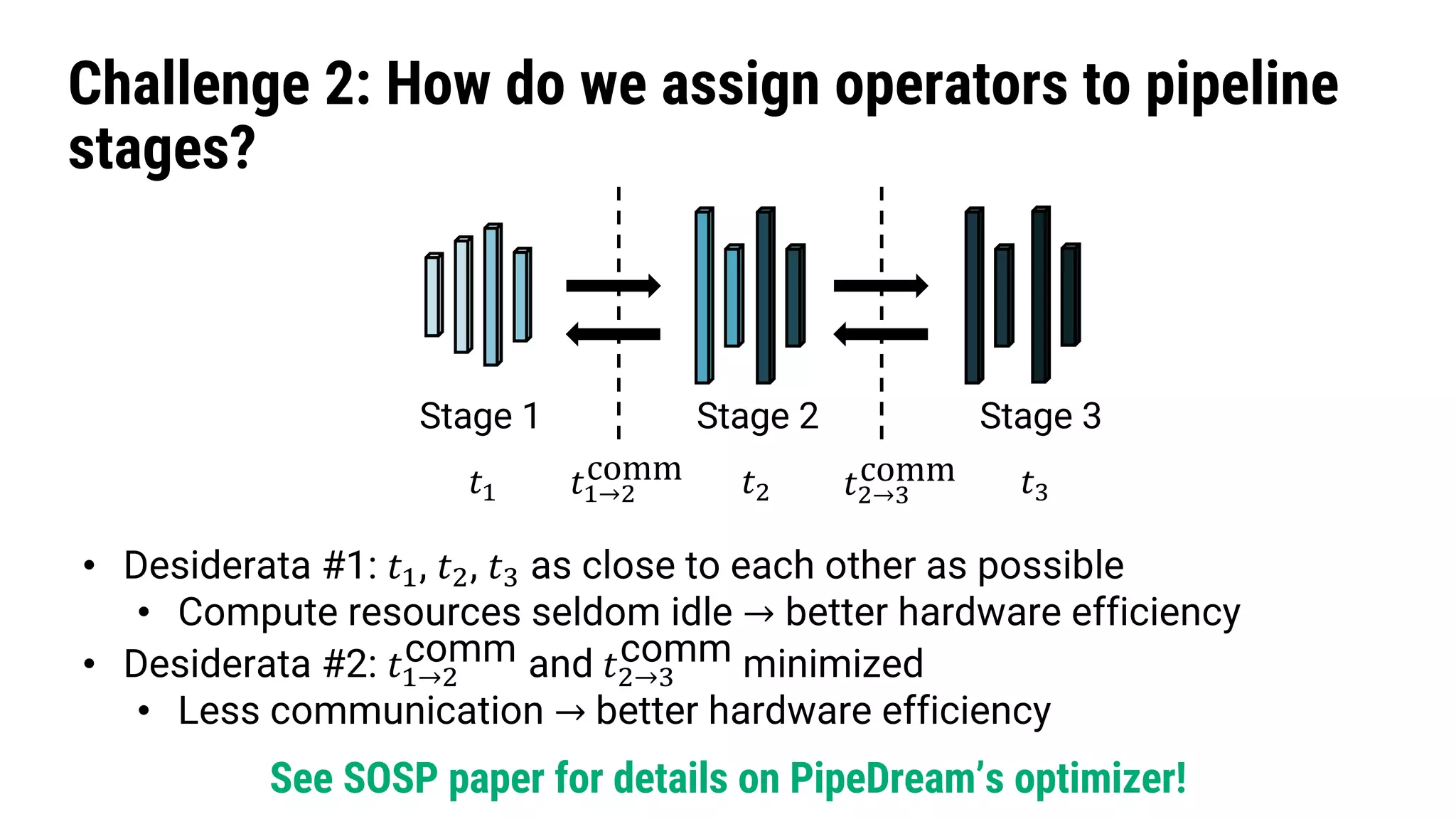

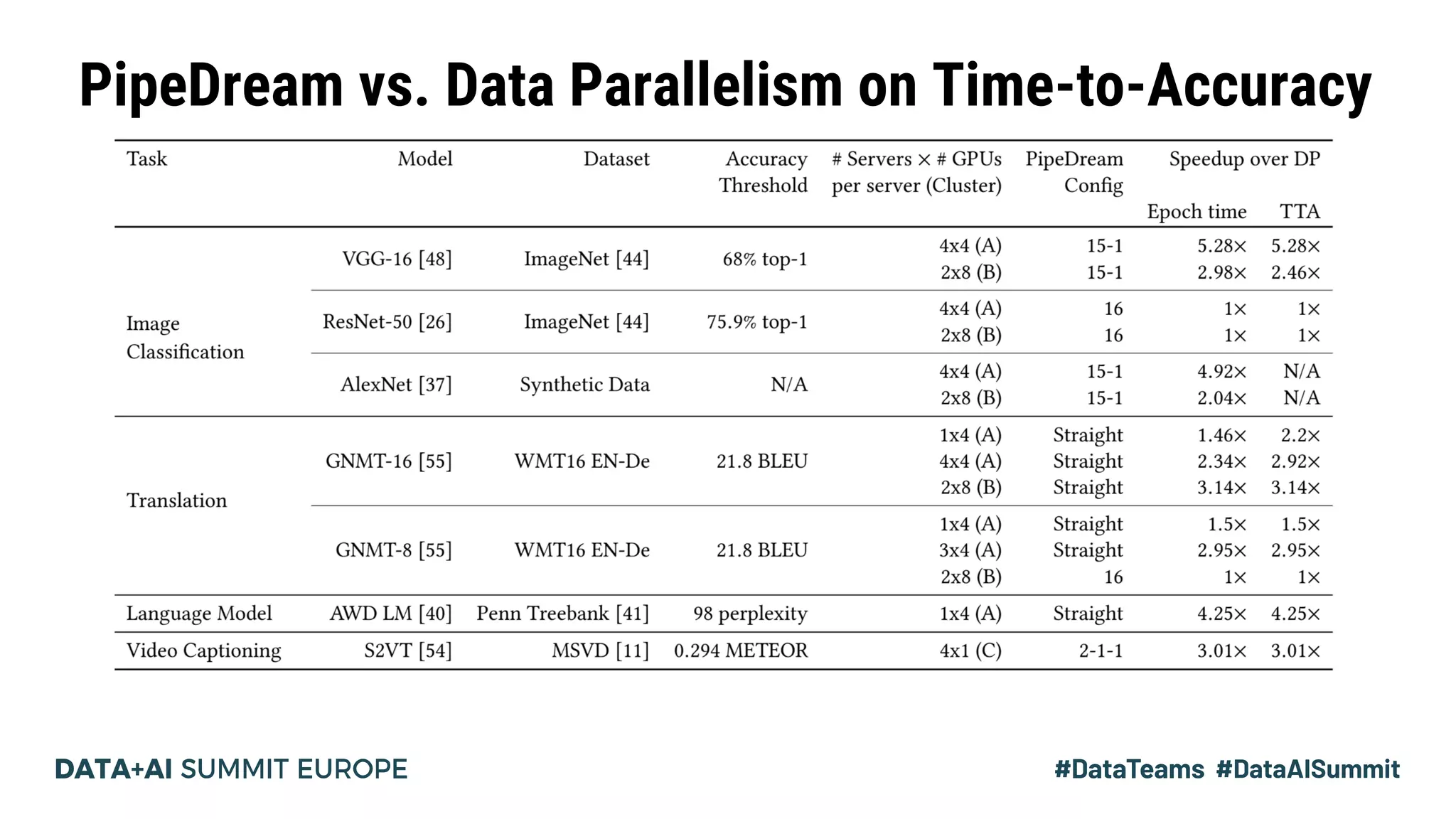

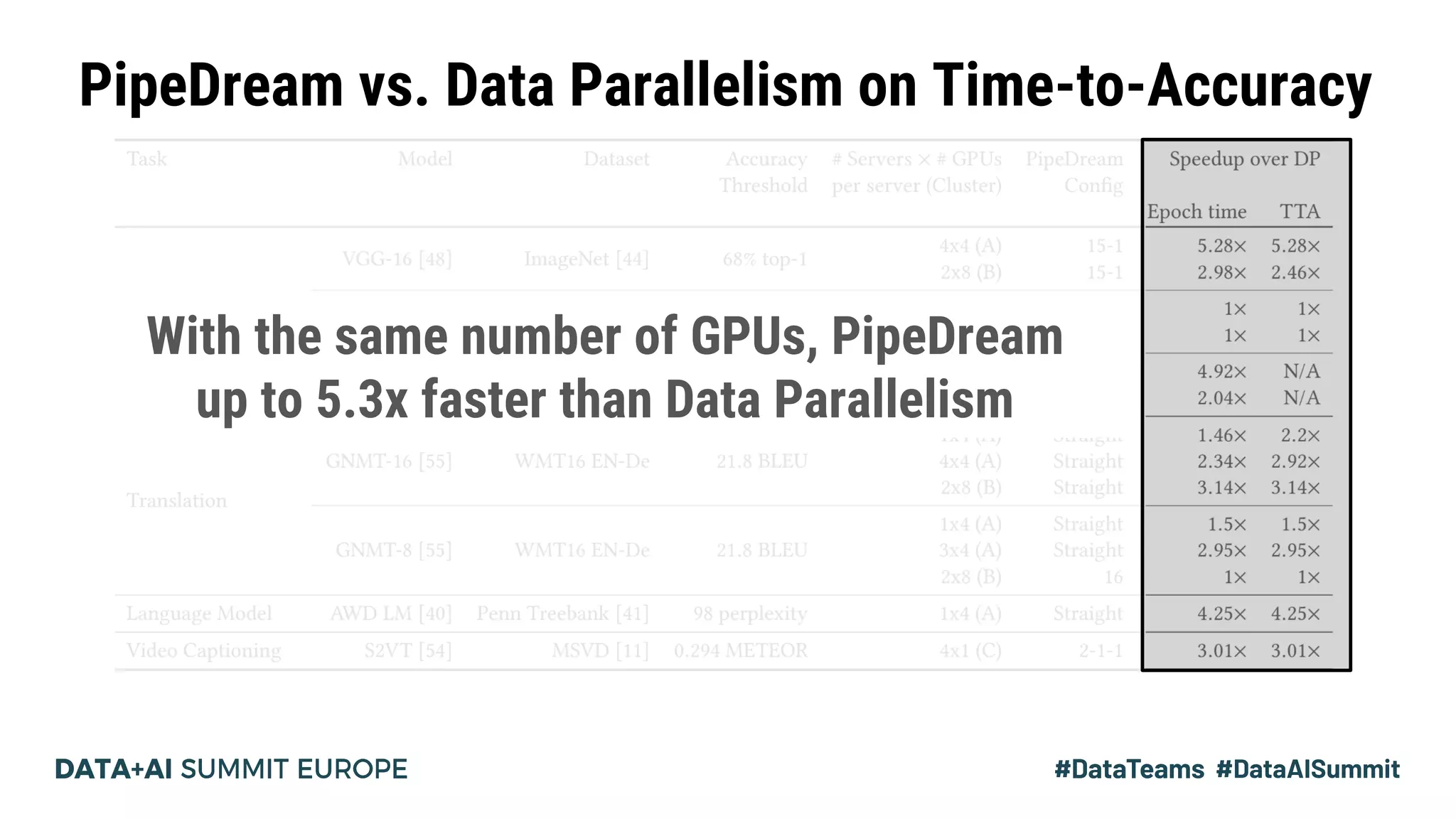

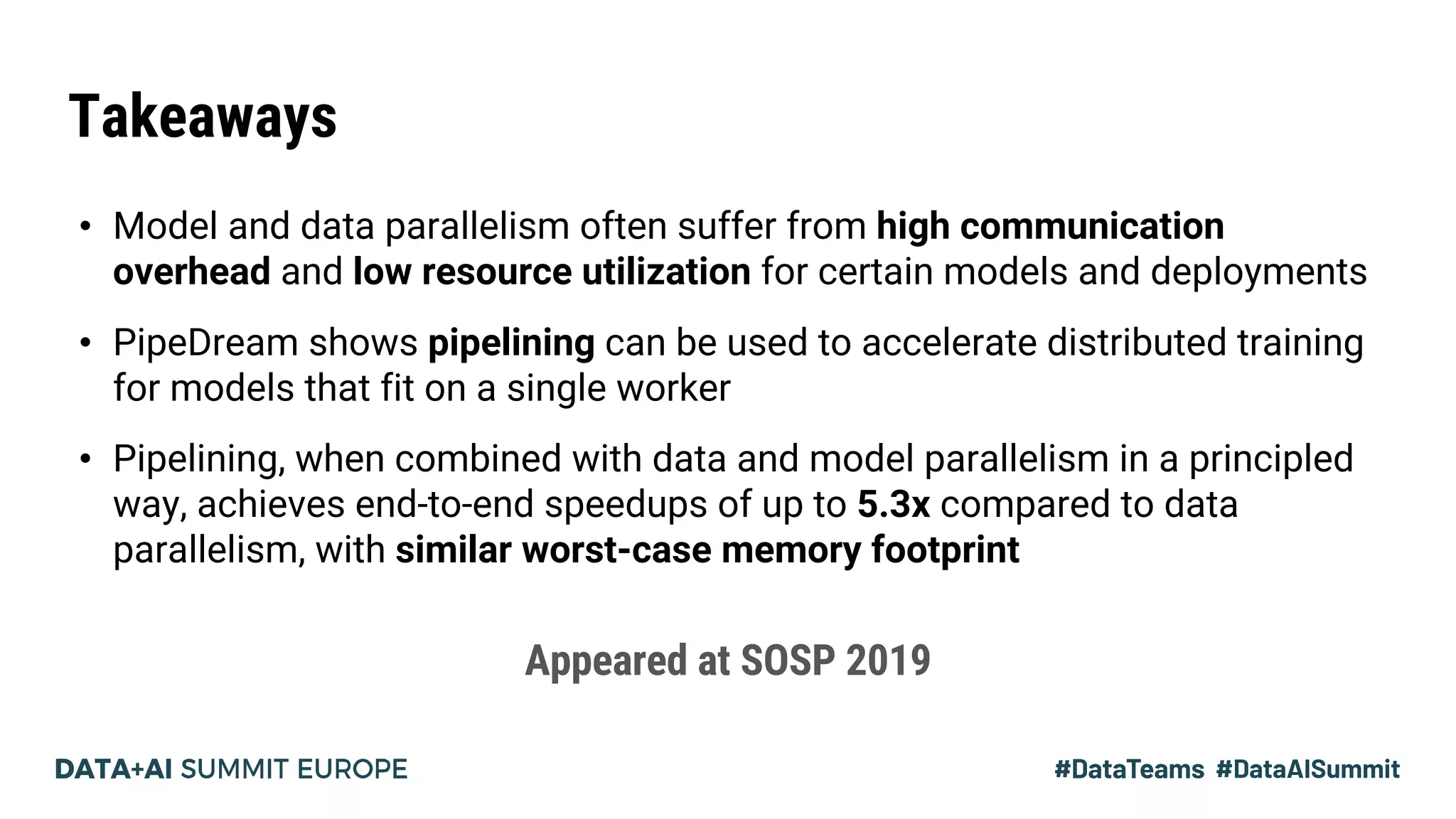

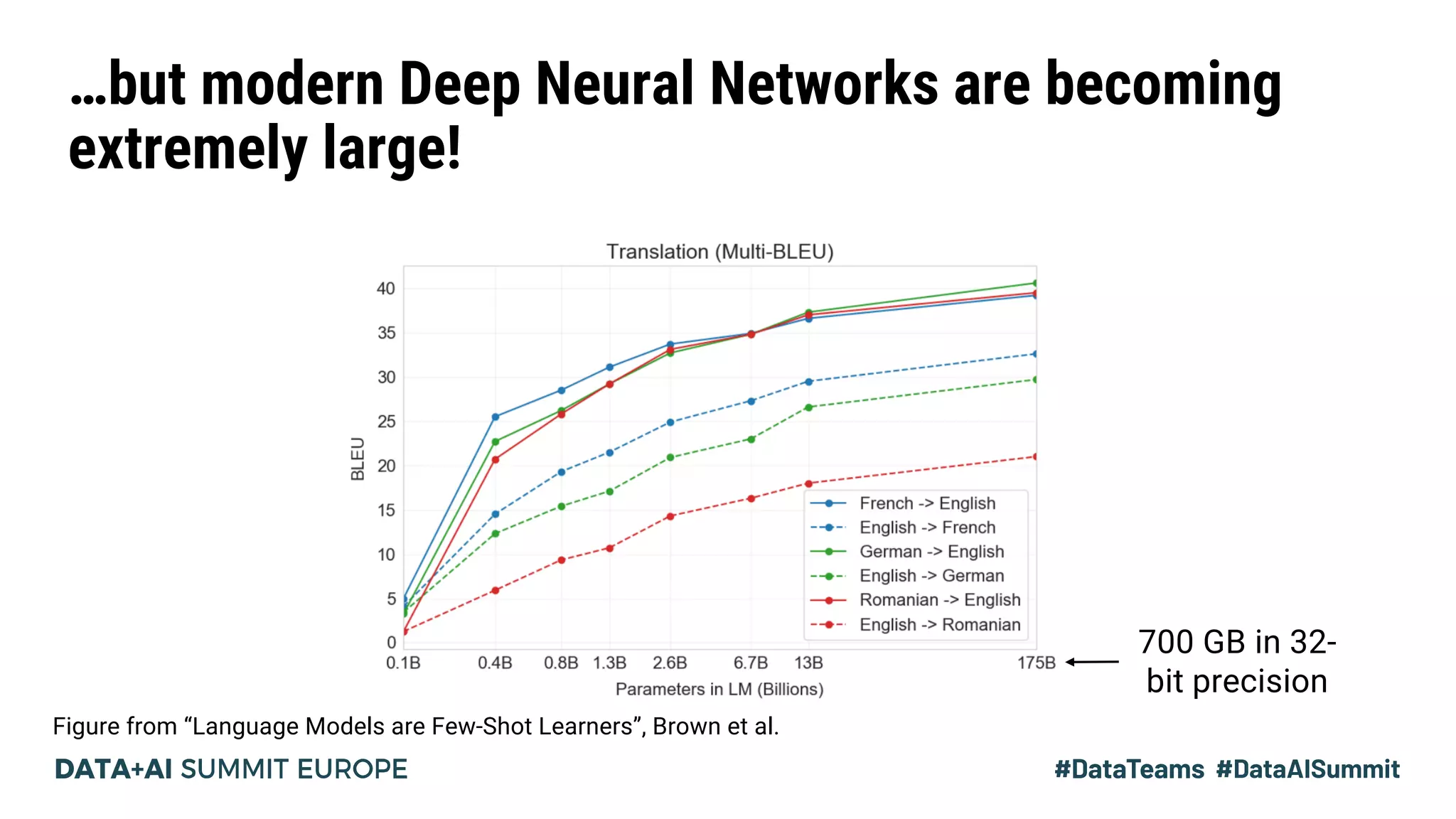

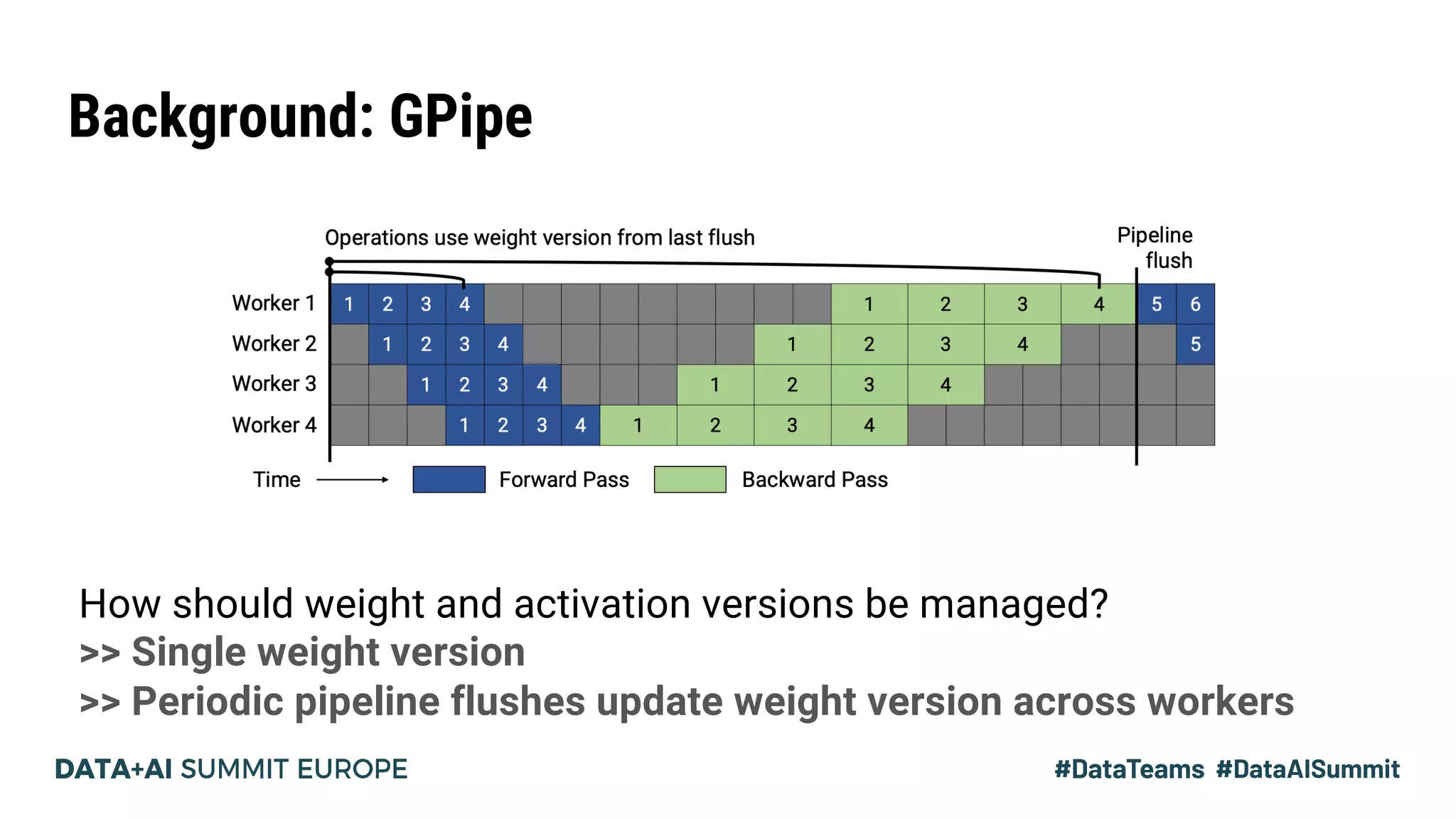

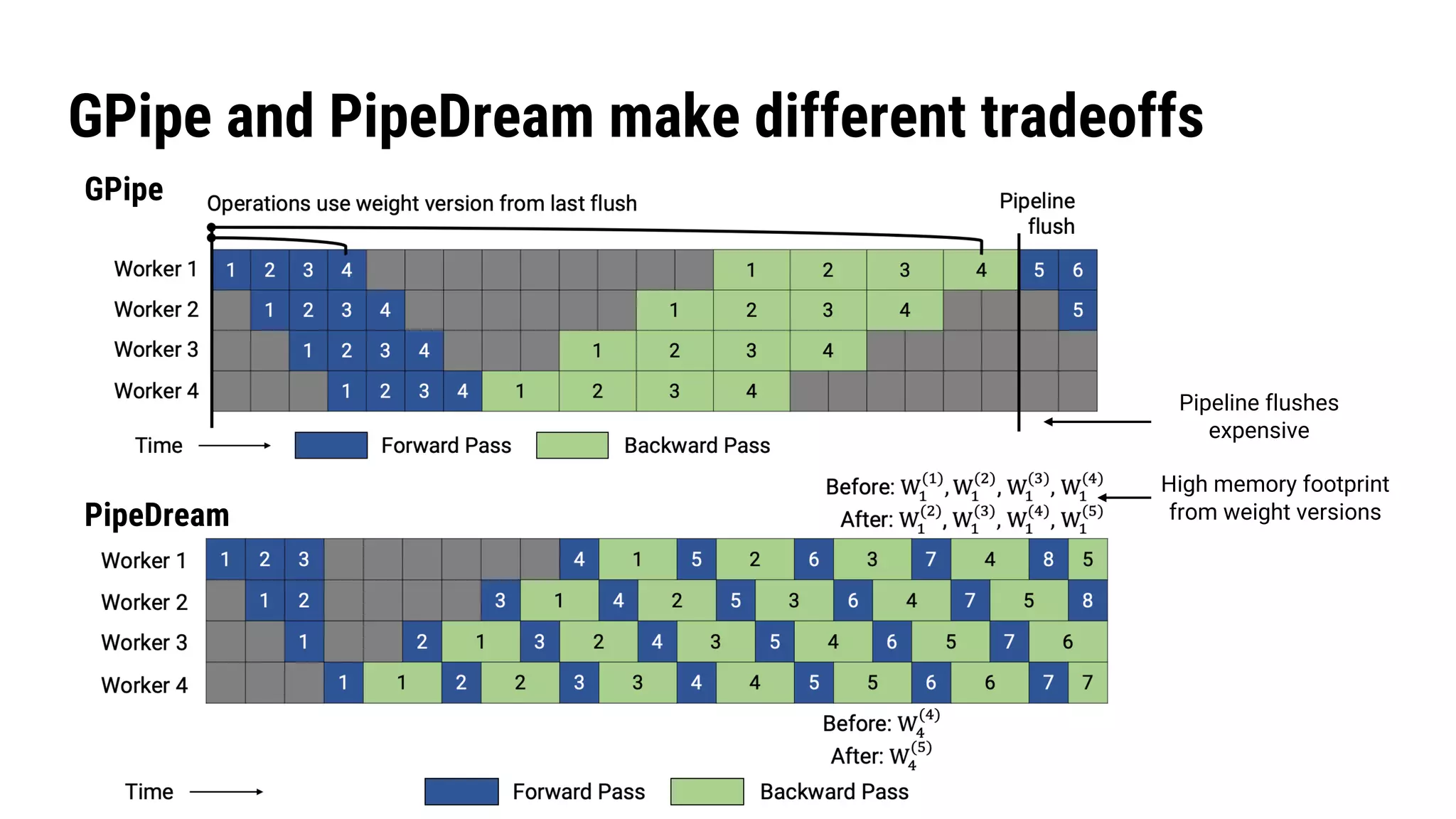

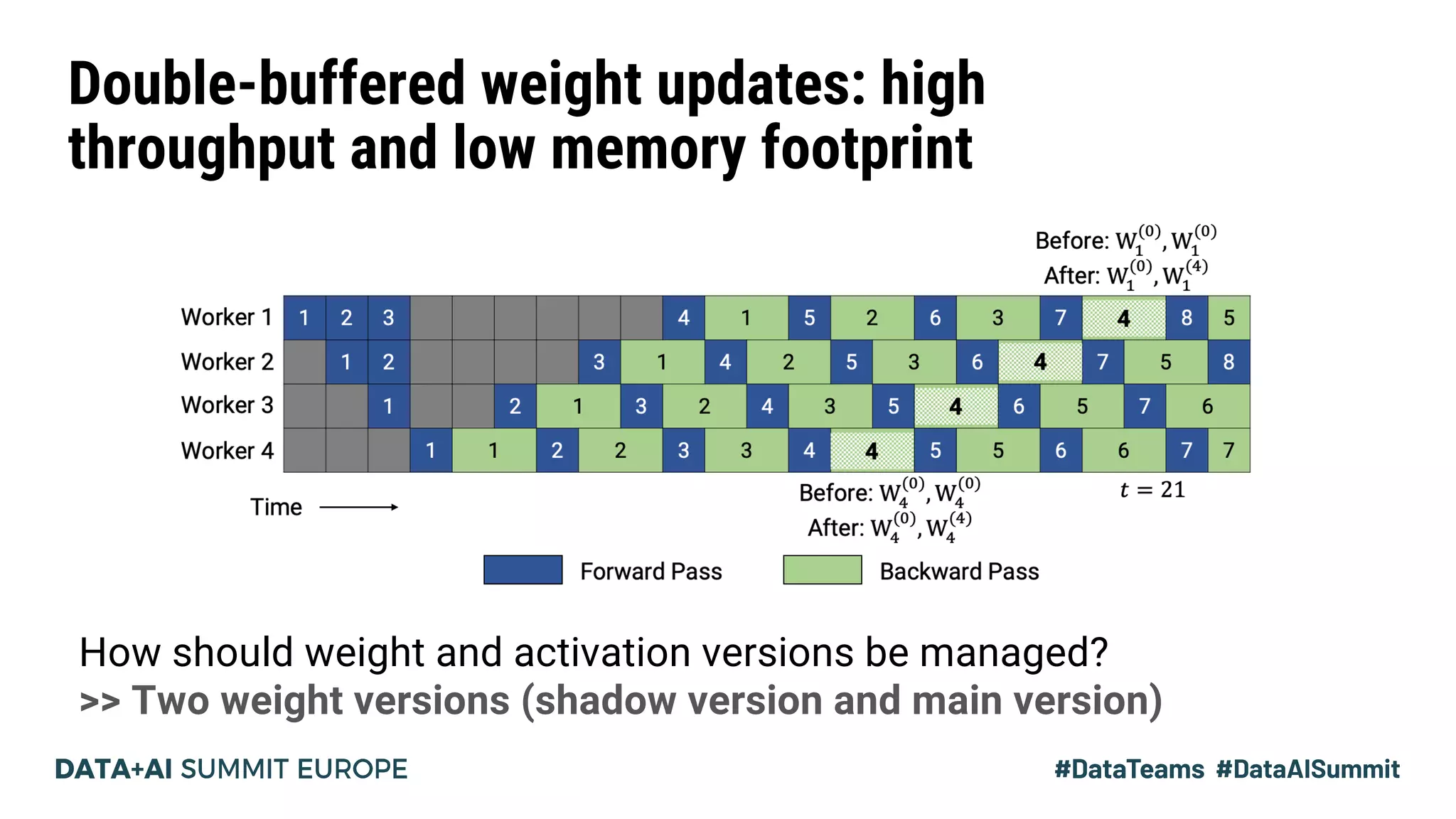

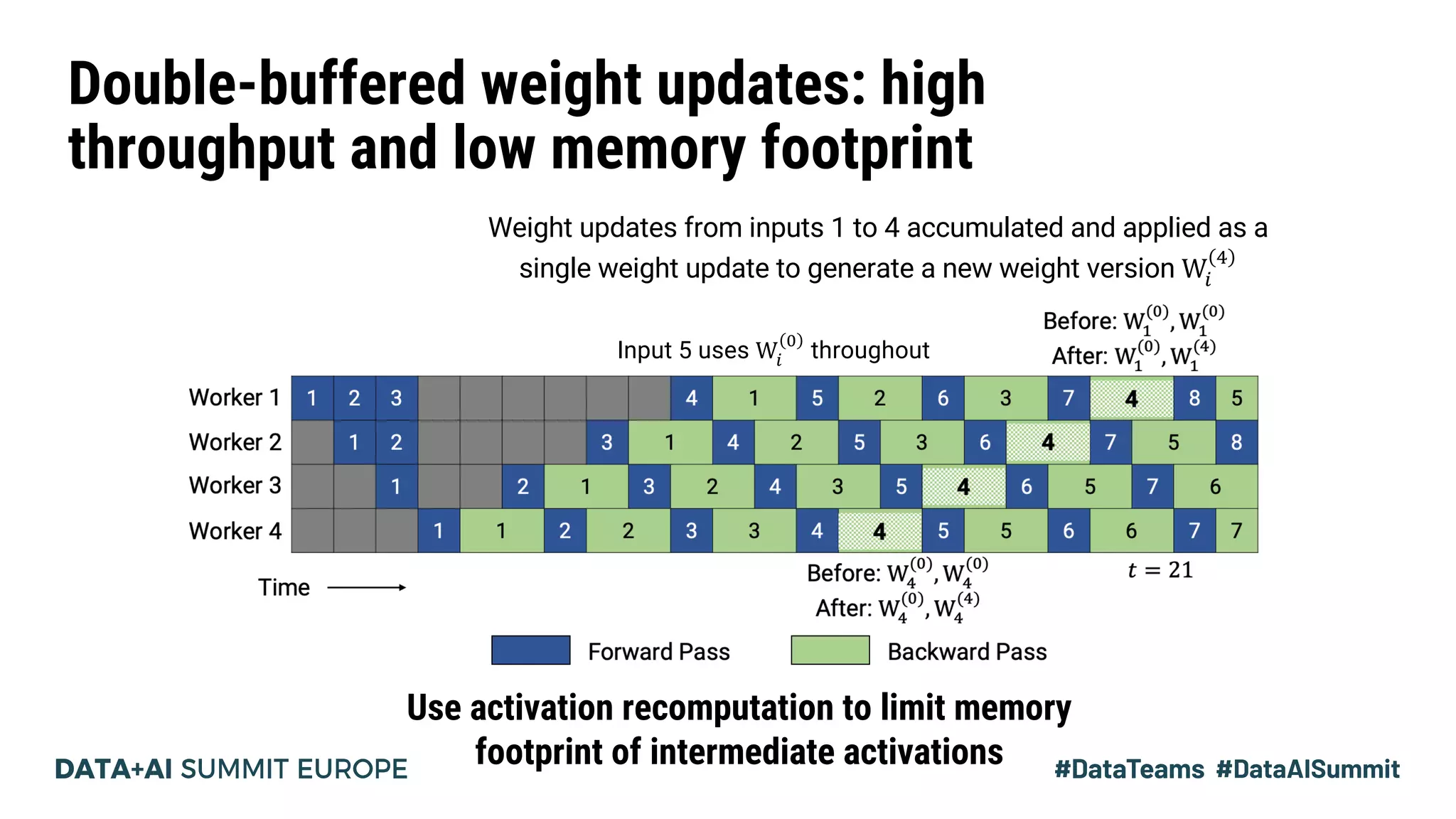

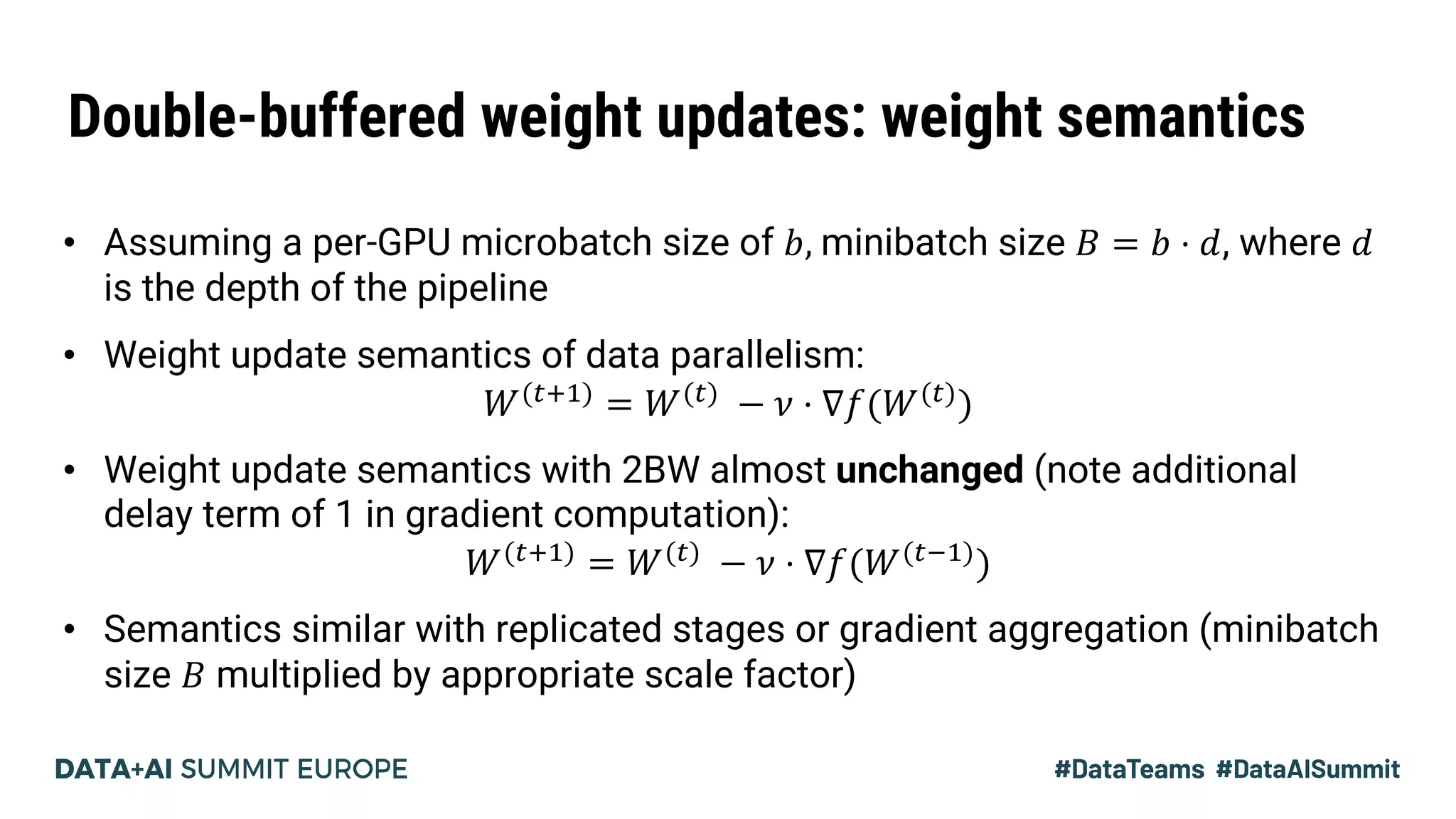

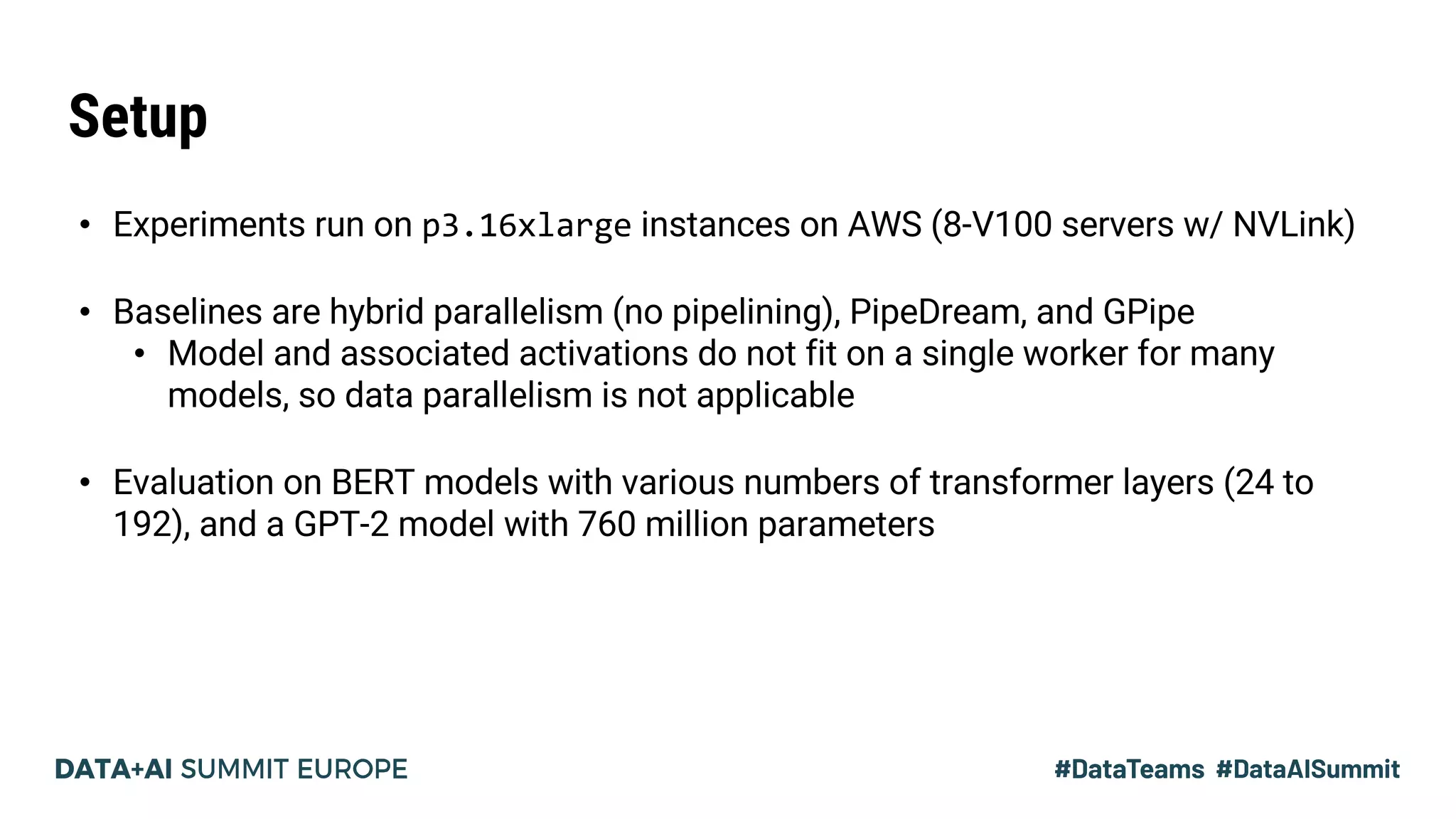

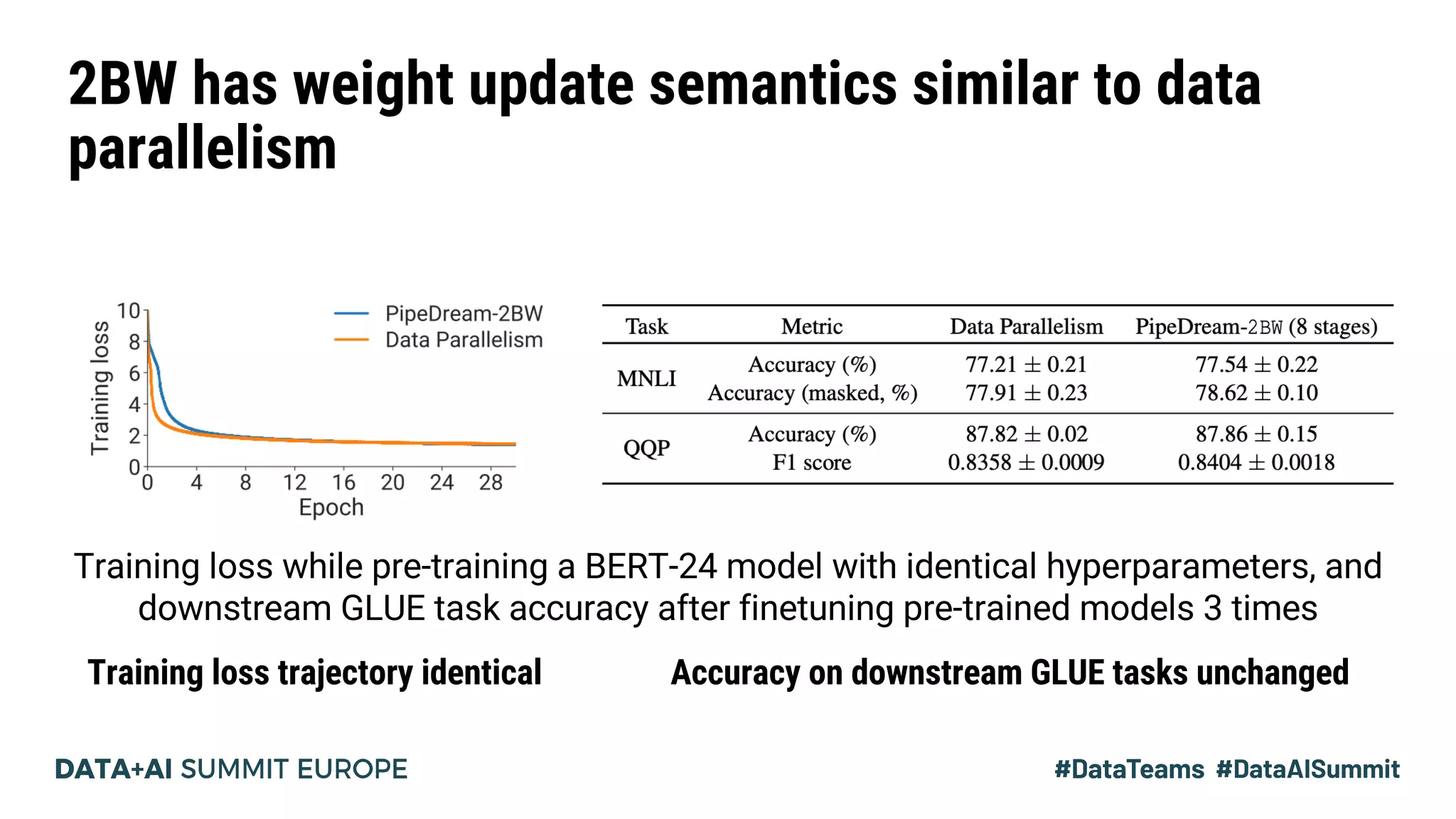

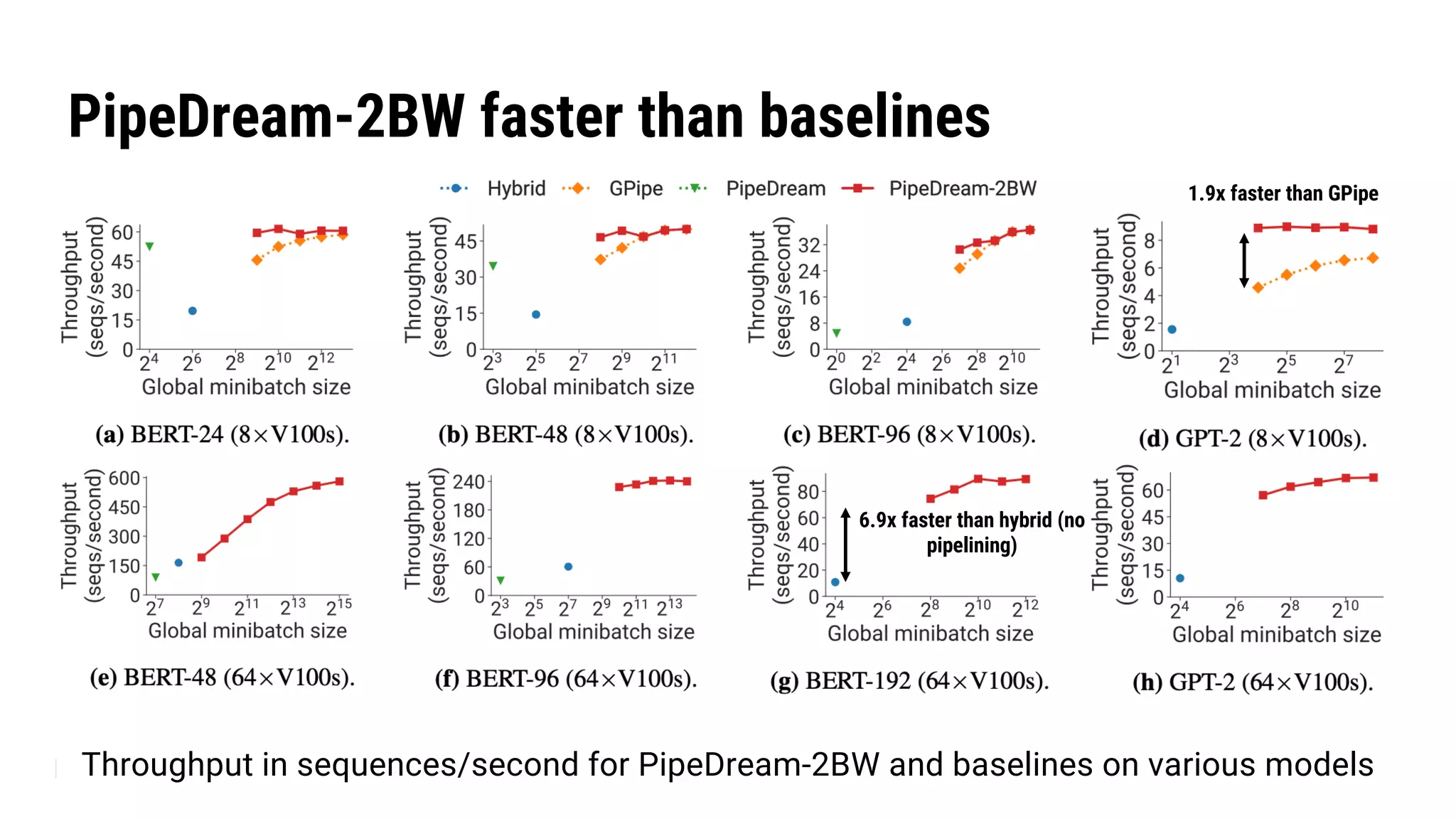

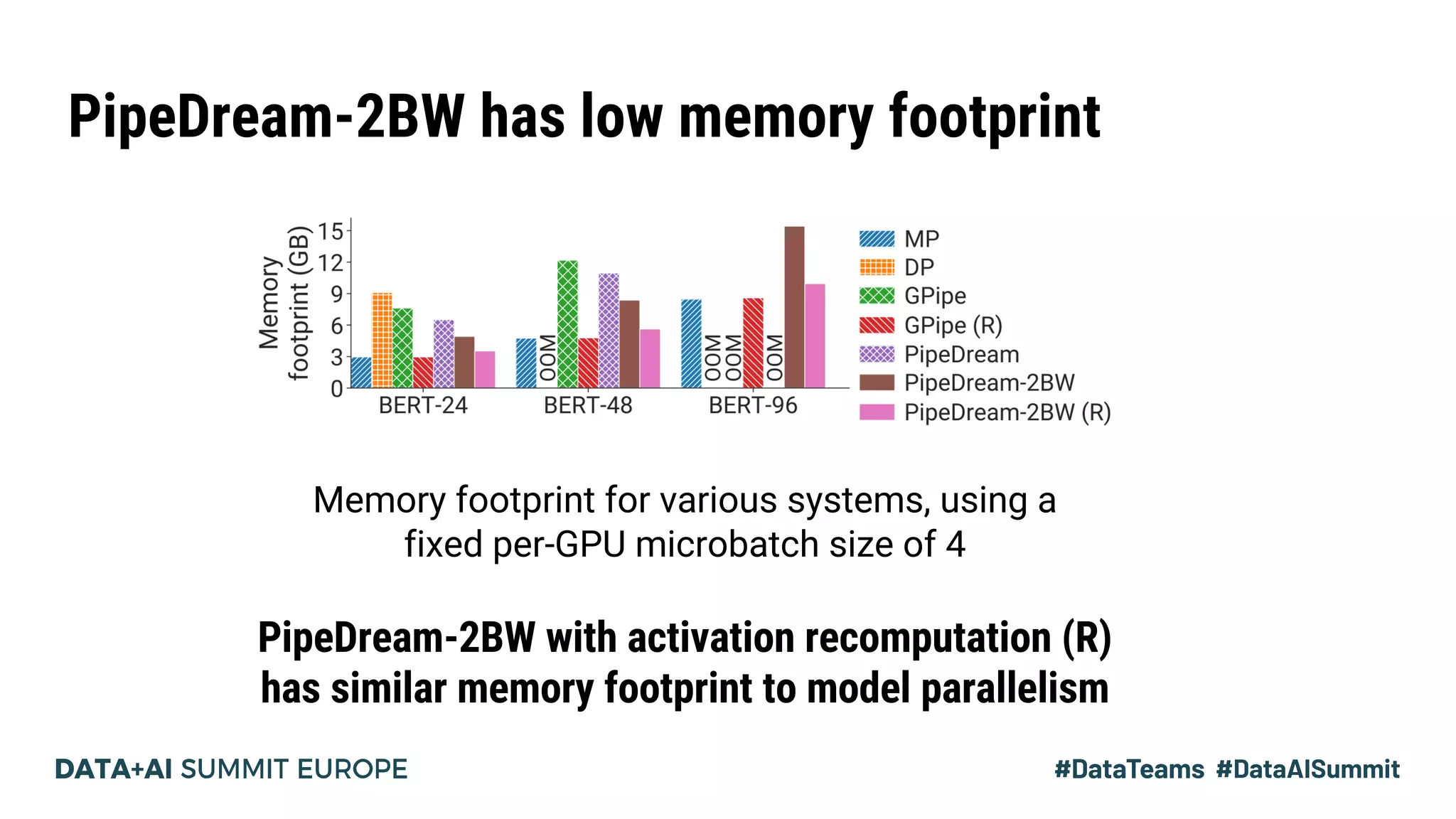

The document discusses PipeDream, a pipeline-parallel approach to deep neural network (DNN) training that targets the communication overhead and poor resource utilization of traditional data and model parallelism. It uses pipelining to raise training throughput and reports experimental results in which PipeDream trains up to 5.3x faster than data parallelism on various tasks. The paper concludes that PipeDream effectively accelerates distributed training, whether or not the model metadata fits on a single worker.
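To make the throughput argument concrete, here is a minimal toy sketch of why pipelining helps when a model is split into stages across workers. It is not PipeDream's scheduler: it assumes every stage takes one equal time step, models forward passes only, and ignores communication cost and weight updates. The function names (`sequential_steps`, `pipelined_steps`, `pipeline_schedule`) are hypothetical and chosen for illustration.

```python
from typing import List, Optional


def sequential_steps(num_stages: int, num_minibatches: int) -> int:
    """Time steps when only one stage is busy at a time (no pipelining)."""
    return num_stages * num_minibatches


def pipelined_steps(num_stages: int, num_minibatches: int) -> int:
    """Time steps when each stage starts a new minibatch as soon as it is free."""
    return num_stages + num_minibatches - 1


def pipeline_schedule(num_stages: int, num_minibatches: int) -> List[List[Optional[int]]]:
    """Return schedule[t][s]: the minibatch on stage s at step t, or None if idle.

    Toy assumption: minibatch m reaches stage s at step m + s.
    """
    schedule = []
    for t in range(pipelined_steps(num_stages, num_minibatches)):
        row = []
        for s in range(num_stages):
            mb = t - s
            row.append(mb if 0 <= mb < num_minibatches else None)
        schedule.append(row)
    return schedule


if __name__ == "__main__":
    stages, minibatches = 4, 8
    print("unpipelined steps:", sequential_steps(stages, minibatches))
    print("pipelined steps:  ", pipelined_steps(stages, minibatches))
    for t, row in enumerate(pipeline_schedule(stages, minibatches)):
        print(t, row)
```

In this idealized setting, once the pipeline is full every worker is busy at every step, which is the utilization gain that pipelining is after. Real systems also have to handle backward passes, unequal stage times, and communication between stages, none of which this sketch models.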