This document provides an overview of field-programmable gate arrays (FPGAs):

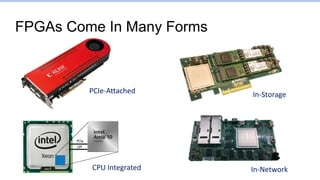

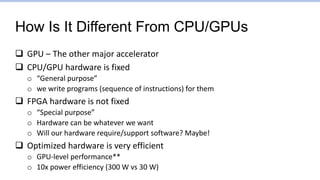

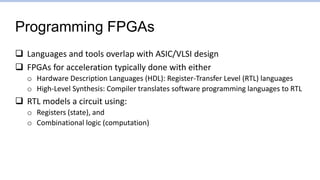

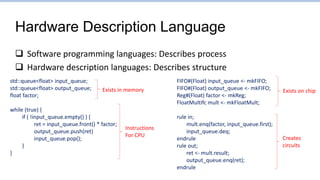

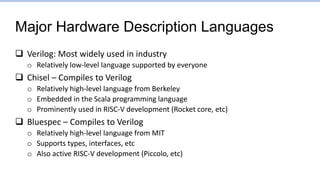

FPGAs can be configured to implement essentially any digital circuit that fits within their resources, and so can perform many computational tasks. Unlike CPUs and GPUs, which have fixed hardware, FPGAs have programmable hardware that can be optimized for specific applications. For workloads that suit it, this specialization can deliver much higher performance and efficiency than general-purpose processors. Programming an FPGA means either describing the circuit in a hardware description language or using a high-level synthesis tool to compile a software language into a hardware implementation.
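To make "programmable hardware" a little more concrete, here is a minimal C++ sketch of one common building block. It assumes the usual FPGA design in which logic is built from small lookup tables (LUTs), a detail the overview above does not spell out. The names `Lut4` and `configure` are hypothetical and chosen only for illustration. The idea is that a 4-input LUT is just a 16-entry truth table, so loading different configuration bits makes the same hardware compute a different Boolean function.

```cpp
#include <cstdint>

// Software model of a 4-input lookup table (LUT).
// Assumption: this mirrors the general idea of FPGA logic cells,
// not any specific vendor's architecture.
struct Lut4 {
    uint16_t config;  // bit i holds the output for input pattern i

    bool eval(bool a, bool b, bool c, bool d) const {
        // Pack the four inputs into an index 0..15 and look up the stored bit.
        unsigned index = (a ? 1u : 0u) | (b ? 2u : 0u) | (c ? 4u : 0u) | (d ? 8u : 0u);
        return ((config >> index) & 1u) != 0;
    }
};

// "Program" a LUT from any Boolean function of four inputs by
// enumerating all 16 input patterns and recording each output.
template <typename F>
Lut4 configure(F f) {
    uint16_t bits = 0;
    for (unsigned i = 0; i < 16; ++i) {
        bool out = f((i & 1u) != 0, (i & 2u) != 0, (i & 4u) != 0, (i & 8u) != 0);
        if (out) {
            bits = static_cast<uint16_t>(bits | (1u << i));
        }
    }
    return Lut4{bits};
}
```

For example, `configure([](bool a, bool b, bool c, bool d) { return a ^ b ^ c ^ d; })` yields a LUT that behaves as a 4-input parity gate, while a different lambda reconfigures the same structure into a different circuit. A real device wires many such elements together through programmable routing, which this sketch leaves out.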

![High-Level Synthesis](https://image.slidesharecdn.com/fpga1-whatis-220507122503-c44c9adf/85/fpga1-What-is-pptx-18-320.jpg)

High-Level Synthesis

Compiler translates software programming languages to RTL

High-Level Synthesis compiler from Xilinx, Altera/Intel

- Compiles C/C++, annotated with `#pragma`s, into RTL
- Theory/history behind it is a complex can of worms we won’t go into
- Personal experience: needs to be HEAVILY annotated to get performance
- Anecdote: Naïve RISC-V in Vivado HLS achieves IPC of 0.0002 [1], 0.04 after optimizations [2]

OpenCL

- Inherently parallel language more efficiently translated to hardware
- Stable software interface

[1] http://msyksphinz.hatenablog.com/entry/2019/02/20/040000

[2] http://msyksphinz.hatenablog.com/entry/2019/02/27/040000
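As a rough illustration of what "C/C++ annotated with `#pragma`s" looks like, here is a small sketch written in the style of Vivado/Vitis HLS. The kernel name `dot_product_kernel` and the size `N` are hypothetical, and the sketch assumes the tool accepts the `PIPELINE` directive in the form shown. An ordinary C++ compiler ignores the unknown pragma (possibly with a warning), so the same function can be run as plain software to check its behavior.

```cpp
#include <cstdint>

constexpr int N = 1024;  // hypothetical fixed problem size

// Dot product written as an HLS-style kernel. Fixed-size arrays and a
// simple counted loop give the synthesis tool a structure it can map
// to hardware; the pragma asks it to pipeline the loop.
void dot_product_kernel(const int32_t a[N], const int32_t b[N], int64_t* result) {
    int64_t acc = 0;
    for (int i = 0; i < N; ++i) {
// Request that a new loop iteration start every clock cycle
// (initiation interval of 1). Ignored by non-HLS compilers.
#pragma HLS PIPELINE II=1
        acc += static_cast<int64_t>(a[i]) * b[i];
    }
    *result = acc;
}
```

Without annotations like this, the tool is free to choose a much slower schedule, which is consistent with the slide's remark that code needs heavy annotation to perform well. Whether the requested interval is actually achieved depends on the tool and the target device.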