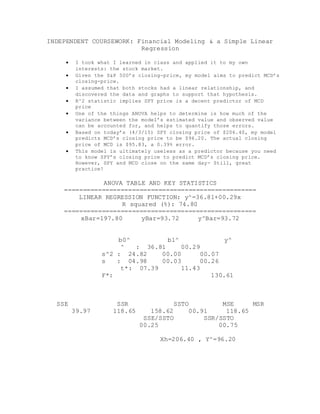

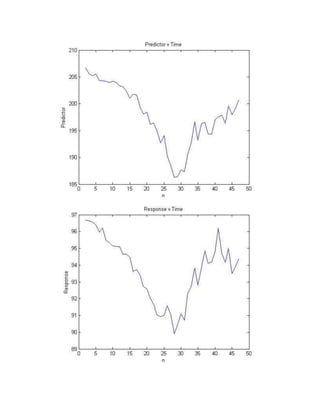

The student conducted an independent simple linear regression analysis of the relationship between the closing prices of the S&P 500 ETF (SPY) and McDonald's (MCD) stock. The model was fitted with MCD closing price as the response variable and SPY closing price as the predictor variable. It found a statistically significant linear relationship between the two, with SPY price explaining about 75% of the variation in MCD price (R² ≈ 0.75). When the model was used to predict MCD's closing price from a later actual SPY closing price, the prediction was within 0.4% of the actual MCD price.
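
For reference, the quantities behind this summary can be written out as follows. This is a sketch read off the comments and loops in the MATLAB listing, with $X_i$ the SPY closing prices, $Y_i$ the MCD closing prices, and $n$ the number of observations; the symbols $b_0$, $b_1$, $\hat{Y}_i$, $\bar{X}$, and $\bar{Y}$ mirror the variable names `b0`, `b1`, `Yhat`, `Xbar`, and `Ybar` in the code.

$$
\begin{aligned}
b_1 &= \frac{\sum_{i=1}^{n}(X_i-\bar{X})(Y_i-\bar{Y})}{\sum_{i=1}^{n}(X_i-\bar{X})^2}, &
b_0 &= \bar{Y}-b_1\bar{X}, &
\hat{Y}_i &= b_0+b_1X_i,\\
\mathrm{SSR} &= \sum_{i=1}^{n}(\hat{Y}_i-\bar{Y})^2, &
\mathrm{SSE} &= \sum_{i=1}^{n}(Y_i-\hat{Y}_i)^2, &
\mathrm{SSTO} &= \sum_{i=1}^{n}(Y_i-\bar{Y})^2=\mathrm{SSR}+\mathrm{SSE},\\
R^2 &= \frac{\mathrm{SSR}}{\mathrm{SSTO}}, &
\mathrm{MSE} &= \frac{\mathrm{SSE}}{n-2}, &
F &= \frac{\mathrm{SSR}/1}{\mathrm{MSE}}.
\end{aligned}
$$

The statement that SPY explains about 75% of the variation in MCD corresponds to $R^2 \approx 0.75$ in this notation.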

![MATLAB CODE FOR THE LINEAR REGRESSION MODEL:

clc

clear all

%SSR = SUM[(Yhat-Ybar)^2]

%SSE = SUM[(Y-Yhat)^2]

%SSTO = SUM[(Y-Ybar)^2]

%Yhat= B0hat+ B1hat*X

X=importdata('C:/SPYCLOSING.txt');

Y=importdata('C:/MCDCLOSING.txt');

%for i=1:numel(Y)

% X(i)=i;

%end

%X=[rand(1) rand(1) rand(1)];

%Y=[rand(1) rand(1) rand(1)];

%X=transpose(X);

%Y=transpose(Y);

i=0;

for i=1:numel(Y)

T(i)=i+1;

end

clc

fprintf('Last Value: X=%05.2f\n', X(numel(X)))

fprintf('Last Value: Y=%05.2f\n', Y(numel(Y)))

xPred=input('Predictor Value (X-Level): ');

n=numel(X);

Xbar=0;

Ybar=0;

b1Num=0;

b1Den=0;

for i=1:n

Xbar=Xbar+X(i);

end

for i=1:n

Ybar=Ybar+Y(i);

end

Xbar=Xbar/n;

Ybar=Ybar/n;](https://image.slidesharecdn.com/581eb286-412b-4930-b3a1-9cd84f53eb19-150404032750-conversion-gate01/85/Financialmodeling-4-320.jpg)

![%LEAST SQUARES PARAMETER ESTIMATES : b0, b1 and y^

for i=1:n

b1Num=b1Num+((X(i)-Xbar)*(Y(i)-Ybar));

b1Den=b1Den+((X(i)-Xbar)^2);

end

b1=b1Num/b1Den;

b0=Ybar-b1*Xbar;

Yhat=b0+b1.*X;

%SSR

SSR=0;

for i=1:n

SSR=SSR+(Yhat(i)-Ybar)^2;

errBar(i)=Yhat(i)-Ybar;

end

SSR;

%SSE

SSE=0;

for i=1:n

SSE=SSE+(Y(i)-Yhat(i)).^2;

errData(i)=Y(i)-Yhat(i);

end

SSE;

%YHATBAR

total=0; %named total so the built-in sum function is not shadowed

for i=1:numel(Yhat)

total=Yhat(i)+total;

end

yHatBar=total/numel(Yhat);

%SSTO

SSTO=SSE+SSR;

Rsquared=SSR/SSTO;

%MSE = s^2 = Sample Variance

MSE=SSE/(n-2); %2 degrees of freedom are lost in estimating b0 and b1

rootMSE=sqrt(MSE);

%MSR

MSR=SSR/1;

%s^2[Yhat_h]

x=0;](https://image.slidesharecdn.com/581eb286-412b-4930-b3a1-9cd84f53eb19-150404032750-conversion-gate01/85/Financialmodeling-5-320.jpg)

![Xh=xPred; %X Level

for i=1:n

x=(X(i)-Xbar).^2 + x;

end

sSquaredYh=MSE*( (1/n)+ ((Xh-Xbar).^2)/x);

sYhatH=sqrt(sSquaredYh);

yhatH=b0+b1*Xh;

%s^2[b0]

Ssquaredb0=0;

Ssquaredb0=MSE*((1/n)+ (Xbar^2)/(x));

sB0=sqrt(Ssquaredb0);

%s^2[b1]

varianceb1=1;

Ssquaredb1=MSE/(x);

sB1=sqrt(Ssquaredb1);

%MATRIX FORM

XM=ones(numel(X), 1);

XM=[XM zeros(size(XM,1),1)];

for i=1:n

XM(i,2)=X(i);

end

XM;

YM=Y;

XMtrans=transpose(XM);

XtransposeTimesX=XMtrans*XM;

XtransposeTimesY=XMtrans*YM;

InverseXXt=inv(XtransposeTimesX);

BETA=InverseXXt*XtransposeTimesY;

YhatM=XM*BETA;

ResidualM=YM-YhatM;

VarCov=MSE*InverseXXt;

%t statistic

tb1=b1/sB1;

tb0=b0/sB0;

%F statistic

F=MSR/MSE;

clc](https://image.slidesharecdn.com/581eb286-412b-4930-b3a1-9cd84f53eb19-150404032750-conversion-gate01/85/Financialmodeling-6-320.jpg)
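
The loop-based listing above can be cross-checked with a shorter vectorized version. The following is a minimal sketch, not the student's code: the data vectors are small made-up placeholders (not real SPY or MCD prices), `Sxx` is a name introduced here for the sum of squared deviations that the listing calls `x`, and the backslash solve is swapped in for the explicit `inv` used in the matrix-form section.

```matlab
% Placeholder data for illustration only; these are NOT real SPY/MCD prices.
X = [100; 102; 105; 107; 110];   % hypothetical predictor values
Y = [ 50;  52;  53;  55;  57];   % hypothetical response values
n = numel(X);

% Least-squares estimates, same formulas as the loop-based listing.
Xbar = mean(X);
Ybar = mean(Y);
Sxx  = sum((X - Xbar).^2);                 % the listing accumulates this as x
b1   = sum((X - Xbar).*(Y - Ybar)) / Sxx;
b0   = Ybar - b1*Xbar;
Yhat = b0 + b1*X;

% Sums of squares and goodness of fit.
SSE      = sum((Y - Yhat).^2);
SSTO     = sum((Y - Ybar).^2);
SSR      = SSTO - SSE;
Rsquared = SSR/SSTO;
MSE      = SSE/(n - 2);                    % 2 degrees of freedom lost to b0, b1

% Standard errors and test statistics.
sB1 = sqrt(MSE/Sxx);
sB0 = sqrt(MSE*(1/n + Xbar^2/Sxx));
tb1 = b1/sB1;
tb0 = b0/sB0;
F   = (SSR/1)/MSE;

% Matrix-form cross-check; backslash solves the least-squares problem
% without forming an explicit inverse.
XM   = [ones(n,1) X];
BETA = XM \ Y;

fprintf('b0=%.4f  b1=%.4f  R^2=%.4f\n', b0, b1, Rsquared);
```

With real data, `X` and `Y` would come from the `importdata` calls in the listing. Two quick sanity checks are that `BETA` matches `[b0; b1]` and that `F` equals `tb1^2`, which holds for any simple linear regression with one predictor.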