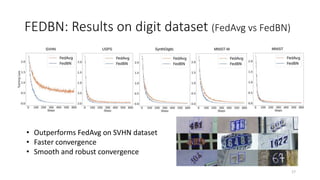

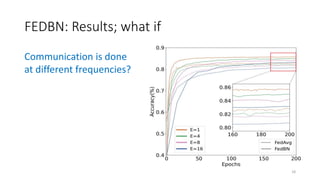

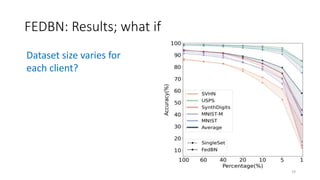

This paper proposes FedBN, a federated learning method that uses local batch normalization to address statistical heterogeneity, specifically feature shift, in non-IID data across clients. Each client trains its batch normalization (BN) parameters on its own data and keeps them local; only the remaining model parameters are sent to the server and averaged. This allows FedBN to converge faster and more smoothly than FedAvg on heterogeneous datasets such as SVHN. The paper shows that FedBN has theoretical convergence guarantees and improves privacy relative to baseline federated learning methods by keeping more client-specific information (the BN parameters) local.
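The aggregation rule described above can be illustrated with a minimal sketch. It is not the authors' implementation: plain Python lists stand in for tensors, clients are weighted equally, and the helper `is_bn_param` with its `"bn"` naming convention is a hypothetical way to identify BN parameters.

```python
def is_bn_param(name):
    # Assumption (hypothetical convention): BN parameters carry "bn" in their name.
    return "bn" in name


def fedbn_aggregate(client_states):
    """Average non-BN parameters across clients; each client keeps its own BN entries.

    client_states: list of dicts mapping parameter name -> list of floats.
    Returns a new list of per-client dicts.
    """
    n = len(client_states)
    shared_names = [k for k in client_states[0] if not is_bn_param(k)]

    # Equal-weight average of every shared (non-BN) parameter.
    averaged = {}
    for name in shared_names:
        size = len(client_states[0][name])
        averaged[name] = [
            sum(state[name][i] for state in client_states) / n for i in range(size)
        ]

    # Each client receives a copy of the averaged parameters and retains its local BN ones.
    new_states = []
    for state in client_states:
        merged = {k: list(v) for k, v in state.items()}
        for name, values in averaged.items():
            merged[name] = list(values)
        new_states.append(merged)
    return new_states


clients = [
    {"conv.weight": [1.0, 3.0], "bn.gamma": [1.0]},
    {"conv.weight": [3.0, 5.0], "bn.gamma": [2.0]},
]
updated = fedbn_aggregate(clients)
print(updated[0])
print(updated[1])
```

After one aggregation step both clients hold the same `conv.weight`, while their `bn.gamma` values remain different.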

![FEDBN: Federated learning[1]

Timeline axis: 2011 to 2021

[1] Jakub Konečný et al. Federated optimization: Distributed machine learning for on-device intelligence. 2016

Classical Machine Learning:

• Centralized data storage

• Training computations run on the central server

What if?

• Data stays distributed on remote devices

• Devices maintain control of their own data

• Training is done locally on remote devices

• One global model is learned via aggregation

Autonomous cars generate around 4 GB of data per hour of driving, on average.](https://image.slidesharecdn.com/paper-210601090122/85/FedBN-3-320.jpg)

![FEDBN: Federated learning

Applications [1]

• Transportation: self-driving cars

• Healthcare: predictions on patient data

• Cybersecurity: spam filtering

• Smart applications: voice recognition, next word prediction, etc.

[1] Read more: Priyanka et al. Federated Learning: Opportunities and Challenges, 2021

Challenges [1]

• Communication Overheads: presence of stragglers

• Heterogeneity: system and statistical (in contrast to distributed learning)

• Privacy concerns

](https://image.slidesharecdn.com/paper-210601090122/85/FedBN-4-320.jpg)

![FEDBN: Related work

• FedAvg [1]: Federated Averaging

[1] Brendan McMahan et al. Communication-efficient learning of deep networks from decentralized data. 2017.

At each communication round:

1. The server randomly selects a subset of K clients and sends them the current global model

2. Each selected client k updates this model on its local data via SGD, then sends the new local model back to the server

3. The server aggregates the local models to form a new global model

- Convergence is not guaranteed; in heterogeneous settings it can diverge [1]

](https://image.slidesharecdn.com/paper-210601090122/85/FedBN-7-320.jpg)
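The three steps of a FedAvg communication round listed on the slide above can be sketched as follows. This is a toy illustration under stated assumptions, not the reference implementation: the model is a linear predictor stored as a plain list, the local loss is squared error, and the server weights each client by its number of samples. The names `local_sgd` and `fedavg_round` are hypothetical.

```python
import random


def local_sgd(weights, data, lr=0.1, epochs=5):
    """Step 2: a client refines the global model on its own data with SGD.

    Assumed toy model: y = w . x with squared-error loss.
    """
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def fedavg_round(global_w, client_data, k, rng):
    """One communication round: select, train locally, aggregate."""
    # Step 1: the server randomly selects k clients and sends them the global model.
    selected = rng.sample(range(len(client_data)), k)

    # Step 2: each selected client trains locally and returns its model.
    updates = [(local_sgd(global_w, client_data[c]), len(client_data[c])) for c in selected]

    # Step 3: the server averages the local models, weighted by sample count (assumption).
    total = sum(n for _, n in updates)
    return [sum(w[i] * n for w, n in updates) / total for i in range(len(global_w))]


# Three clients whose data all follow y = 2 * x.
clients = [
    [([1.0], 2.0), ([2.0], 4.0)],
    [([0.5], 1.0)],
    [([1.5], 3.0), ([1.0], 2.0)],
]
rng = random.Random(0)
w = [0.0]
for _ in range(20):
    w = fedavg_round(w, clients, k=2, rng=rng)
print(w)
```

In this example every client's data agrees on the same underlying model, so averaging converges to it. The divergence risk noted on the slide arises when client data distributions differ.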

![FEDBN: Related work

• FedProx[1]: Federated Optimization in Heterogeneous Networks

[1] Tian Li et al. Federated Optimization in Heterogeneous Networks. In Conference on Machine Learning and Systems, 2020.

Slide credit: Tian Li, MLSys presentation.

+ Limits the impact of heterogeneous local updates

+ Safely incorporates partial work from stragglers

+ Generalizes FedAvg; allows any local solver

+ Provides theoretical convergence guarantees

](https://image.slidesharecdn.com/paper-210601090122/85/FedBN-8-320.jpg)
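The slide does not show the mechanism, but in the cited FedProx paper the impact of local updates is limited by adding a proximal term, (μ/2)·‖w − w_global‖², to each client's local objective. A minimal sketch of that idea follows, assuming the same toy linear model with squared-error loss; the function name `fedprox_local_sgd` and the default values are hypothetical.

```python
def fedprox_local_sgd(global_w, data, mu=0.1, lr=0.1, epochs=5):
    """Local SGD on loss(w) + (mu / 2) * ||w - global_w||^2.

    Assumed toy model: y = w . x with squared-error loss.
    With mu = 0 this reduces to plain local SGD as used in FedAvg.
    """
    w = list(global_w)
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            # Gradient of the data loss plus gradient of the proximal term.
            w = [
                wi - lr * (err * xi + mu * (wi - gi))
                for wi, xi, gi in zip(w, x, global_w)
            ]
    return w


data = [([1.0], 2.0)]
free = fedprox_local_sgd([0.0], data, mu=0.0)
prox = fedprox_local_sgd([0.0], data, mu=1.0)
print(free, prox)
```

With a larger `mu`, the local model stays closer to the global model it started from, which is how drift from heterogeneous clients is damped.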

![FEDBN: Related work

• SiloBN[1]: Siloed Federated Learning for Multi-Centric Histopathology Datasets

[1] Mathieu Andreux et al. Siloed federated learning for multi-centric histopathology datasets, pp. 129–139. Springer, 2020.

Slide credits: [1]

](https://image.slidesharecdn.com/paper-210601090122/85/FedBN-9-320.jpg)

![FEDBN: Batch Normalization

[1] Sergey Ioffe et al. Batch normalization: Accelerating deep network training by reducing internal covariate shift. 2015

Why do we use it?

To reduce internal covariate shift in neural networks [1].

How does it work?

γ and β are the only learnable parameters of a BN layer.](https://image.slidesharecdn.com/paper-210601090122/85/FedBN-10-320.jpg)
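The formula on the batch normalization slide did not survive extraction. As a reminder of how the layer works, here is a minimal sketch of the training-mode forward pass from the cited paper [1]: each feature is normalized with the mini-batch mean and variance, then scaled by γ and shifted by β. Plain Python lists stand in for tensors, and the function name `batch_norm` and the `eps` default are assumptions for illustration.

```python
import math


def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-mode BN forward pass over a mini-batch.

    batch: list of samples, each a list of feature values.
    gamma, beta: per-feature learnable scale and shift.
    """
    n = len(batch)
    dims = len(batch[0])
    out = [[0.0] * dims for _ in range(n)]
    for j in range(dims):
        # Mini-batch statistics for feature j.
        mean = sum(row[j] for row in batch) / n
        var = sum((row[j] - mean) ** 2 for row in batch) / n
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            # Normalize, then apply the learnable scale and shift.
            out[i][j] = gamma[j] * (batch[i][j] - mean) * inv_std + beta[j]
    return out


batch = [[1.0, 10.0], [3.0, 30.0]]
plain = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
scaled = batch_norm(batch, gamma=[2.0, 2.0], beta=[5.0, 5.0])
print(plain)
print(scaled)
```

Both features end up on the same scale despite very different input ranges. At inference time BN layers normally use running statistics instead of batch statistics, which this sketch omits.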