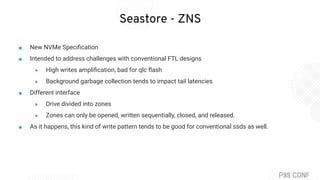

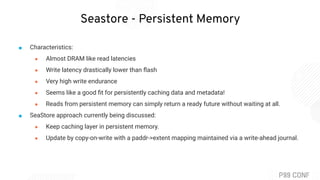

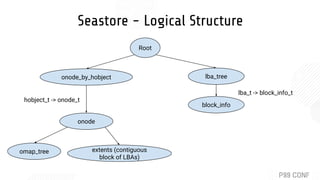

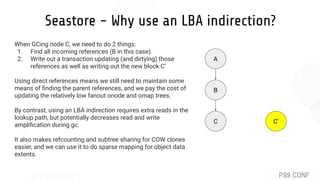

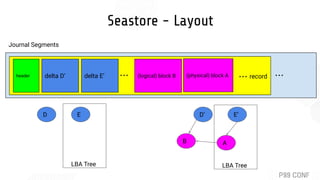

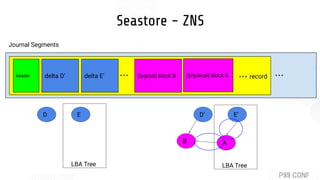

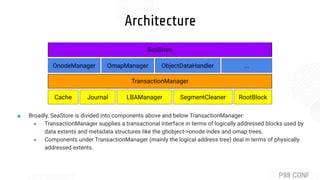

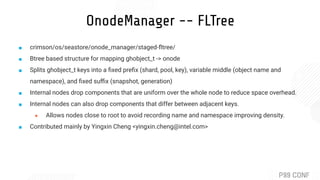

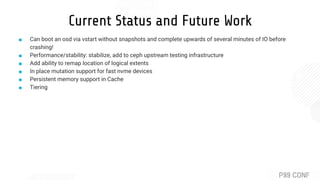

This document gives an overview of Seastore, a next-generation backing store for Ceph that redesigns the I/O path around the Seastar programming model to improve performance. It describes Seastore's transactional object store, its support for emerging storage technologies such as zoned namespaces (ZNS) and persistent memory, and its use of logical block addressing to make garbage collection and data management more efficient. It also covers the current status of the project and its future directions, including ongoing work on stability and performance.

![Classic vs Crimson Programming Model](https://image.slidesharecdn.com/sam-just-p99-seastore-rough1-211003052233/85/Seastore-Next-Generation-Backing-Store-for-Ceph-6-320.jpg)

```cpp
// Classic: blocking request loop
for (;;) {
    auto req = connection.read_request();
    auto result = handle_request(req);
    connection.send_response(result);
}

// Crimson: chained futures
repeat([conn, this] {
    return conn.read_request().then([this](auto req) {
        return handle_request(req);
    }).then([conn] (auto result) {
        return conn.send_response(result);
    });
});
```
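The continuation style in the second snippet of the slide can be tried without Seastar. The following is a minimal sketch, assuming C++17, that mimics the `read_request().then(...).then(...)` chain with a toy future that is always ready. `ReadyFuture`, `FakeConnection`, `serve_one`, and `serve_all` are hypothetical names introduced only for illustration; they are not Seastar or Crimson APIs, and a real Seastar future would resolve asynchronously on a reactor rather than immediately.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <type_traits>
#include <utility>
#include <vector>

// Toy stand-in for a future whose value is always available.
// Hypothetical: this is NOT seastar::future, only an illustration of chaining.
template <typename T> class ReadyFuture;
template <typename T> struct is_ready_future : std::false_type {};
template <typename T> struct is_ready_future<ReadyFuture<T>> : std::true_type {};

template <typename T>
class ReadyFuture {
public:
    explicit ReadyFuture(T value) : value_(std::move(value)) {}

    // Apply fn to the stored value. If fn returns a ReadyFuture, pass it
    // through unchanged (flattening); otherwise wrap the plain result.
    template <typename Fn>
    auto then(Fn&& fn) {
        using R = std::invoke_result_t<Fn, T>;
        if constexpr (is_ready_future<R>::value) {
            return fn(std::move(value_));
        } else {
            return ReadyFuture<R>(fn(std::move(value_)));
        }
    }

    T get() { return std::move(value_); }

private:
    T value_;
};

// Hypothetical in-memory connection: requests come from inbox,
// responses are appended to outbox.
struct FakeConnection {
    std::queue<std::string> inbox;
    std::vector<std::string> outbox;

    ReadyFuture<std::string> read_request() {
        assert(!inbox.empty());
        std::string req = std::move(inbox.front());
        inbox.pop();
        return ReadyFuture<std::string>(std::move(req));
    }

    ReadyFuture<bool> send_response(std::string result) {
        outbox.push_back(std::move(result));
        return ReadyFuture<bool>(true);
    }
};

inline std::string handle_request(const std::string& req) {
    return "ok:" + req;
}

// One iteration of the loop body, written as a chain of continuations.
inline ReadyFuture<bool> serve_one(FakeConnection& conn) {
    return conn.read_request().then([](std::string req) {
        return handle_request(req);
    }).then([&conn](std::string result) {
        return conn.send_response(std::move(result));
    });
}

// Drain the inbox; plays the role of repeat() in the slide.
inline std::vector<std::string> serve_all(FakeConnection& conn) {
    while (!conn.inbox.empty()) {
        serve_one(conn).get();
    }
    return conn.outbox;
}
```

Pushing `"a"` and `"b"` into `inbox` and calling `serve_all(conn)` leaves `"ok:a"` and `"ok:b"` in `outbox`. In this toy version every step completes immediately, so the chain behaves like the blocking loop; the point of the continuation style is that with a real asynchronous future each `.then` stage can be deferred without blocking the thread.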