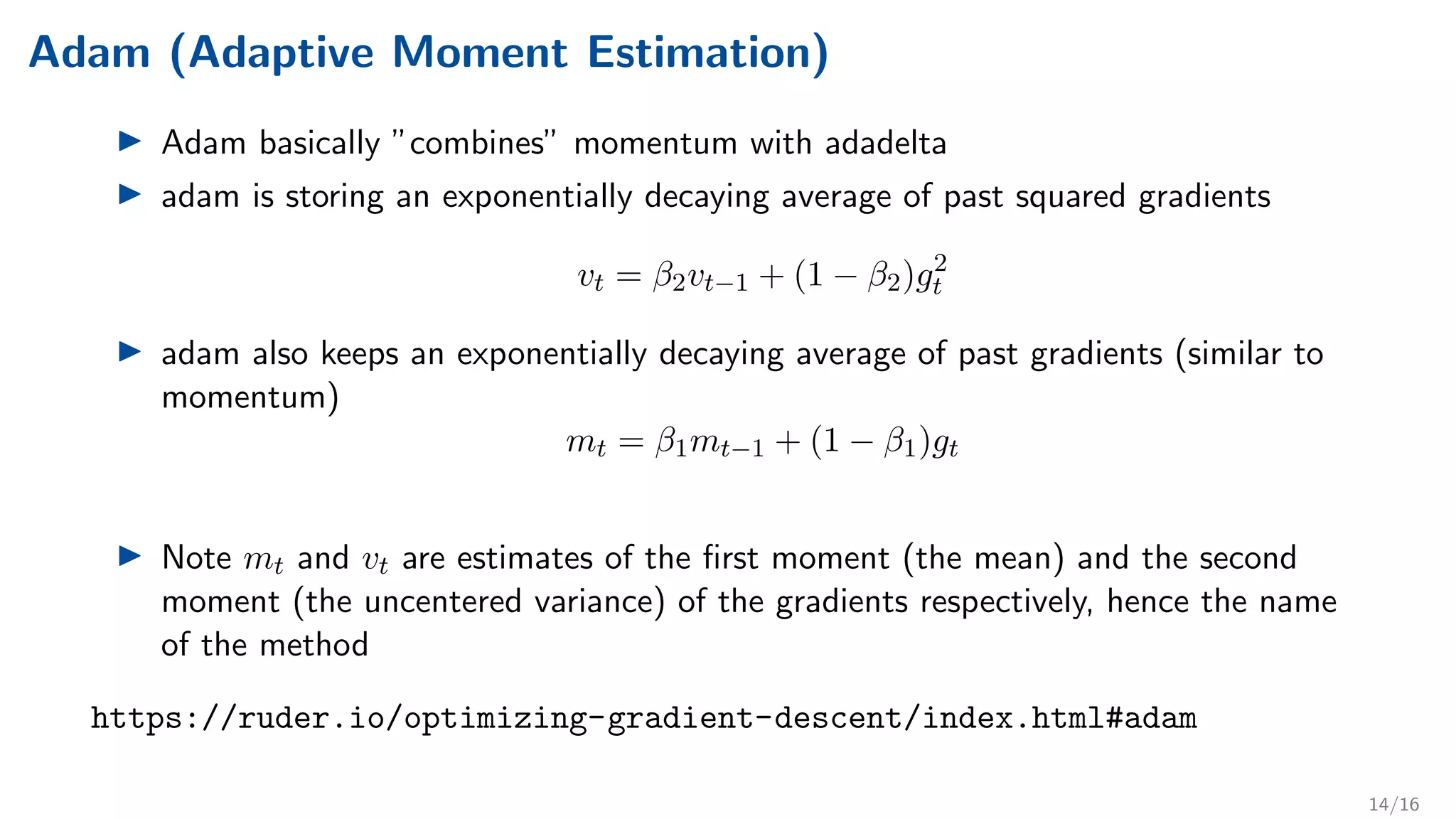

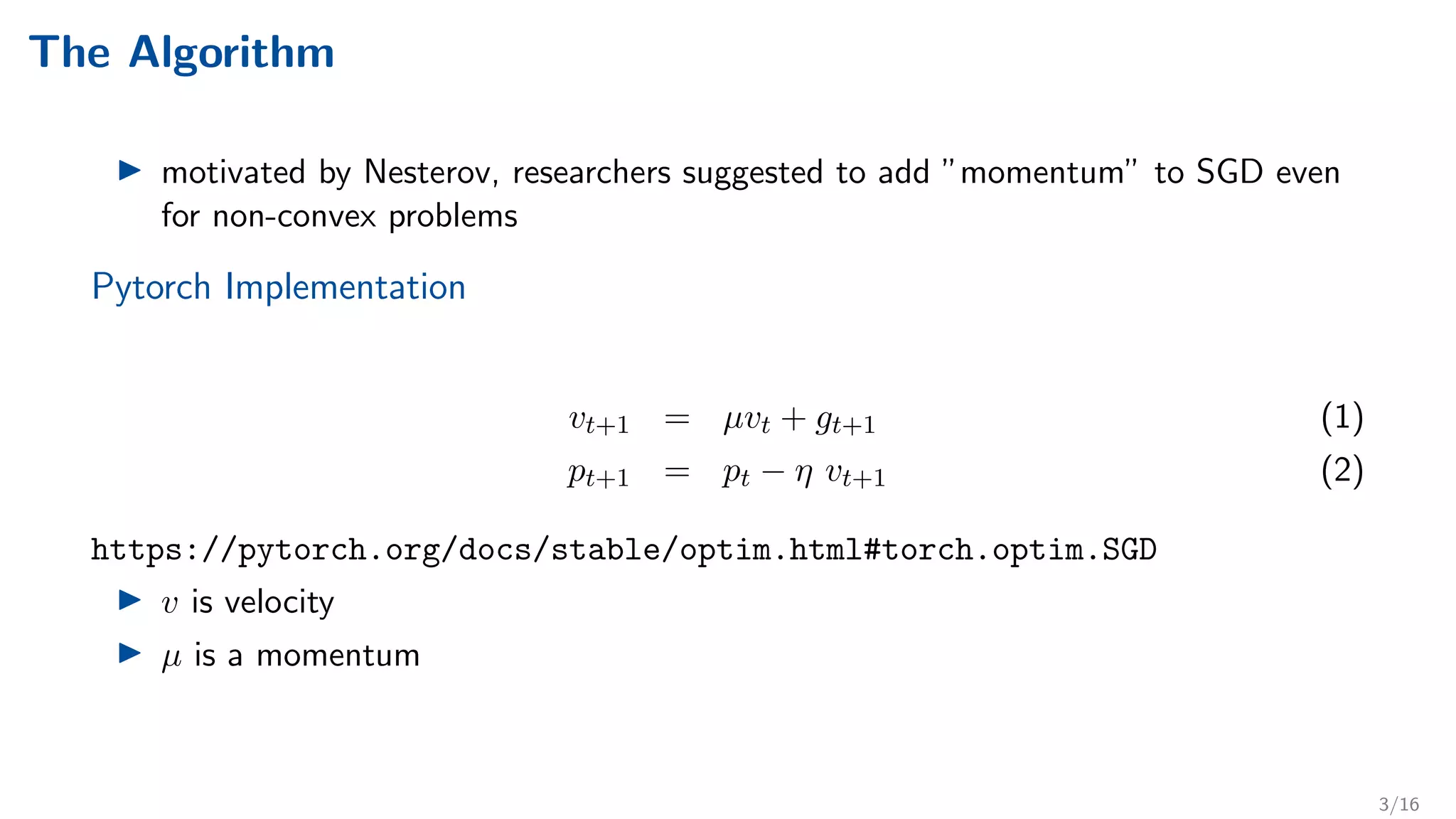

SGD with momentum adds a "momentum" term to SGD to smooth out noisy stochastic gradients. Adam combines momentum and RMSProp approaches, storing an exponentially decaying average of past gradients mt and past squared gradients vt. It uses biased-corrected estimates m̂t and v̂t in the update rule. Adadelta is an extension of Adagrad that restricts the window of past gradients to reduce its aggressive learning rate decay.

![Adagrad

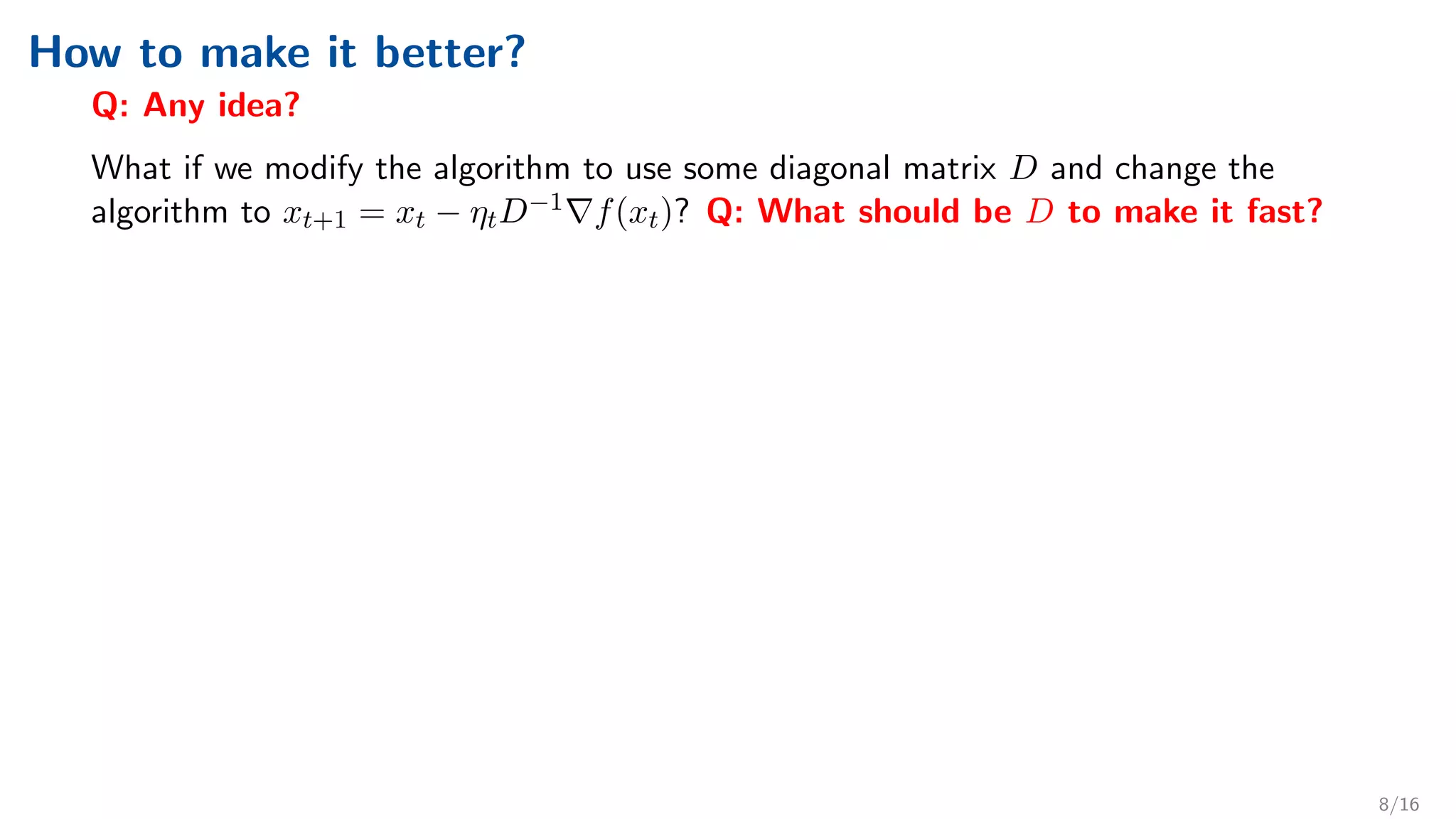

SGD update rule:

xi

k+1 = xi

k − η[gk]i

, ∀i ∈ {1, 2, . . . , d}

Adagrad:

xi

k+1 = xi

k − η

1

p

+ Gk,ii

[gk]i

, ∀i ∈ {1, 2, . . . , d}

Gk = diag(

k

X

l=0

gl gl)

is usually choosen as 10−8 or 10−10.

PS: we can write it in ”compact” way as: xk+1 = xk − η 1

√

+Gk

gk Q: What can be

BAD about this algorithm? Q: How could we do it better?

https://ruder.io/optimizing-gradient-descent/index.html#adagrad

10/16](https://image.slidesharecdn.com/06a-230516154553-6767231f/75/06_A-pdf-11-2048.jpg)