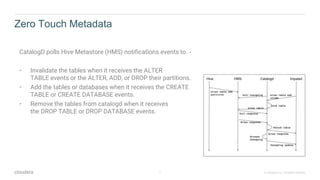

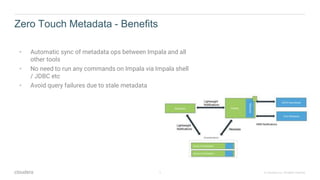

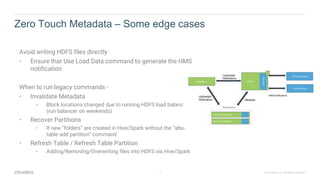

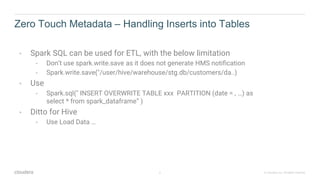

CatalogD polls Hive Metastore notifications to automatically sync metadata operations between Impala and other tools such as Hive and Spark. This avoids query failures caused by stale metadata. Some edge cases still require running a legacy Impala command such as INVALIDATE METADATA, for example when HDFS block locations change or when new partitions are added without an ALTER TABLE command. So that notifications are generated, Spark SQL and Hive loads should use INSERT OVERWRITE instead of writing files directly.
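The rule of thumb in the paragraph above can be sketched as a small decision helper. This is only an illustration under stated assumptions: the `Change` categories, the `impala_followup` function, and the table name `sales.orders` are hypothetical names invented for this example and are not part of Impala or Hive. The only real statement it emits is INVALIDATE METADATA, the legacy command named above.

```python
from enum import Enum, auto
from typing import Optional


class Change(Enum):
    """Hypothetical categories of changes made outside Impala."""
    INSERT_OVERWRITE = auto()         # load via Hive or Spark SQL INSERT OVERWRITE
    ALTER_TABLE = auto()              # partition added through ALTER TABLE
    DIRECT_FILE_WRITE = auto()        # files or partition directories written directly
    BLOCK_LOCATIONS_CHANGED = auto()  # HDFS block locations changed


# Changes assumed to produce a Hive Metastore notification that CatalogD picks up.
NOTIFYING = {Change.INSERT_OVERWRITE, Change.ALTER_TABLE}


def impala_followup(change: Change, table: str) -> Optional[str]:
    """Return the statement to run manually in Impala, or None if none is needed."""
    if change in NOTIFYING:
        return None  # the automatic sync is expected to cover this case
    # Edge case: no notification was generated, so fall back to the legacy command.
    return f"INVALIDATE METADATA {table}"


if __name__ == "__main__":
    for change in Change:
        print(change.name, "->", impala_followup(change, "sales.orders"))
```

Treating direct file writes and block-location changes as the only cases that need manual intervention mirrors the edge cases listed above; a real deployment may have others.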