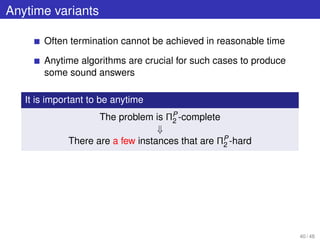

This document discusses efficient solving techniques for answer set programming (ASP). It begins with an introduction to ASP, a declarative programming paradigm based on the stable model semantics. It then describes computational tasks for ASP, such as model generation, optimum answer set search, and cautious reasoning, together with their complexities. The document outlines the architecture of an ASP solver, covering input preprocessing, propagation methods, and learning heuristics. It also summarizes model-guided and core-guided algorithms for optimum answer set search, as well as algorithms for cautious reasoning.

![Solving ground ASP programs

Computational tasks and applications

1. Model generation

Given a ground ASP program Π, find an answer set of Π

→ [Balduccini et al., LPNMR 2001; Gebser et al, TPLP 2011]

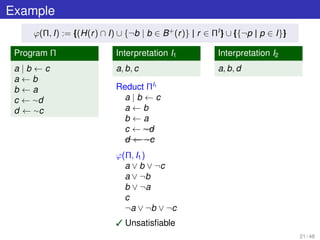

2. Optimum answer set search

Given a ground ASP program Π, find an answer set of Π

with the minimum cost

→ [Marra et al., JELIA 2014; Koponen et al., TPLP 2015]

3. Cautious reasoning

Given a ground ASP program Π and a ground atom a,

check whether a is true in all answer sets of Π

→ [Arenas et al., TPLP 2003; Eiter, LPNMR 2005]

7 / 48](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-8-320.jpg)
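To make the three tasks concrete, here is a brute-force sketch, assuming a ground *normal* program (no disjunction) encoded with a hypothetical `Rule` tuple, and weak constraints simplified to `{atom: weight}` (the cost of an answer set is the sum of the weights of its true atoms). It tests every interpretation against the Gelfond-Lifschitz reduct, which is exponential and only meant to illustrate the definitions; the rest of the deck is about doing this efficiently.

```python
from itertools import chain, combinations
from typing import FrozenSet, NamedTuple, Optional


class Rule(NamedTuple):
    head: Optional[str]   # None encodes a constraint "← body"
    pos: FrozenSet[str]   # positive body atoms
    neg: FrozenSet[str]   # atoms under default negation ("not")


def rule(head, pos=(), neg=()):
    return Rule(head, frozenset(pos), frozenset(neg))


def least_model(definite_rules):
    """Least model of a negation-free program, by naive fixpoint iteration."""
    model, changed = set(), True
    while changed:
        changed = False
        for r in definite_rules:
            if r.pos <= model and r.head not in model:
                model.add(r.head)
                changed = True
    return model


def is_answer_set(program, m):
    """m is an answer set iff it violates no constraint and is the least model of the reduct."""
    if any(r.head is None and r.pos <= m and not (r.neg & m) for r in program):
        return False
    # Gelfond-Lifschitz reduct: drop rules whose negative body intersects m;
    # least_model then ignores the remaining negative literals.
    reduct = [r for r in program if r.head is not None and not (r.neg & m)]
    return least_model(reduct) == m


def answer_sets(program):
    atoms = sorted({a for r in program for a in chain([r.head], r.pos, r.neg) if a})
    subsets = chain.from_iterable(combinations(atoms, k) for k in range(len(atoms) + 1))
    return [set(s) for s in subsets if is_answer_set(program, set(s))]


def cost(m, weak):
    """Cost of m under weak constraints given as {atom: weight}, i.e. ":~ atom [weight]"."""
    return sum(w for atom, w in weak.items() if atom in m)


# a ← not b.   b ← not a.   c ← a.   c ← b.
program = [rule("a", neg=["b"]), rule("b", neg=["a"]), rule("c", pos=["a"]), rule("c", pos=["b"])]
weak = {"a": 3, "b": 1}   # hypothetical weights

models = answer_sets(program)
if models:
    print("1. some answer set:", sorted(models[0]))
    print("2. optimum answer set:", sorted(min(models, key=lambda m: cost(m, weak))))
    print("3. cautious consequences:", sorted(set.intersection(*models)))
else:
    print("incoherent")
```

For this program the answer sets are {a, c} and {b, c}, so the optimum under the made-up weights is {b, c} and the only cautious consequence is c.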

![ASP encoding of TSP](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-18-320.jpg)

Example (Travelling Salesman Problem)

Input: a weighted, directed graph G = ⟨V, E, φ⟩ and a vertex s ∈ V

Goal: find a Hamiltonian cycle of minimum weight

% Guess: a cycle

in(x, y) ∨ out(x, y) ← ∀(x, y) ∈ E

% Check: each vertex is left exactly once and entered exactly once

← #count{1 : in(x, y) | y ∈ V} ≠ 1 ∀x ∈ V

← #count{1 : in(x, y) | x ∈ V} ≠ 1 ∀y ∈ V

% Check: all vertices must be reached

← not reached(x) ∀x ∈ V

% Aux. rules: reachability from s

reached(y) ← in(s, y) ∀y ∈ V

reached(y) ← reached(x), in(x, y) ∀(x, y) ∈ E

% Optimize: minimize the sum of distances (weak constraints)

:∼ in(x, y) [φ(x, y)] ∀(x, y) ∈ E
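The guess/check/optimize structure of the encoding can be mirrored in plain Python on a tiny instance. This is a brute-force sketch, not an ASP solver, and the graph, weights, and function names are made up for illustration: every subset of E is one guess of the `in(x, y)` atoms, `check` mirrors the two counting constraints and the reachability rules, and the cost is the sum of φ over the chosen edges, as in the weak constraints.

```python
from itertools import product

# Hypothetical instance: directed edges with weights φ(x, y)
V = ["a", "b", "c", "d"]
phi = {("a", "b"): 1, ("b", "c"): 1, ("c", "d"): 1, ("d", "a"): 1,
       ("a", "c"): 5, ("c", "a"): 1, ("b", "d"): 2, ("d", "b"): 1, ("b", "a"): 4}
s = "a"


def check(in_edges, vertices, start):
    """Mirror the Check part and the Aux. rules for a guessed set of in(x, y) atoms."""
    for v in vertices:
        # ← #count{... in(v, y) ...} ≠ 1   and   ← #count{... in(x, v) ...} ≠ 1
        if sum(1 for (x, _) in in_edges if x == v) != 1:
            return False
        if sum(1 for (_, y) in in_edges if y == v) != 1:
            return False
    # reached(y) ← in(s, y)   and   reached(y) ← reached(x), in(x, y)
    reached = {y for (x, y) in in_edges if x == start}
    changed = True
    while changed:
        changed = False
        for (x, y) in in_edges:
            if x in reached and y not in reached:
                reached.add(y)
                changed = True
    return all(v in reached for v in vertices)   # ← not reached(v)


def optimum_cycle(vertices, weights, start):
    """Guess in/out for every edge, keep guesses passing the checks, minimize the cost."""
    edges = sorted(weights)
    best = None
    for guess in product((False, True), repeat=len(edges)):   # in(x, y) ∨ out(x, y)
        in_edges = {e for e, chosen in zip(edges, guess) if chosen}
        if check(in_edges, vertices, start):
            cost = sum(weights[e] for e in in_edges)             # :∼ in(x, y) [φ(x, y)]
            if best is None or cost < best[0]:
                best = (cost, in_edges)
    return best


print(optimum_cycle(V, phi, s))
```

On this instance the optimum is the cycle a, b, c, d, a with weight 4. The two 2-cycles a, c, a and b, d, b satisfy both counting constraints but fail the reachability check, which is exactly what the `reached` rules are there to rule out.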

![Input preprocessing and simplifications](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-21-320.jpg)

Preprocessing of the input program:

- Deletion of duplicate rules (even more than 80% in some benchmarks)
- Deterministic inferences, such as the deletion of satisfied rules
- Clark's completion: constraints for discarding unsupported models

Simplifications in the style of SatELite [Eén and Biere, SAT 2005]:

- Subsumption, self-subsumption, and literal elimination
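The SatELite-style simplifications work on clauses, for instance those produced by Clark's completion. The sketch below assumes clauses are collections of non-zero integers (DIMACS-style, `-x` for the negation of `x`) and covers only duplicate removal, subsumption, and self-subsuming resolution; the `simplify` function is hypothetical and uses a naive quadratic loop rather than the occurrence lists of the real tool.

```python
def simplify(clauses):
    """Duplicate removal, subsumption and self-subsuming resolution, up to a fixpoint."""
    cls = {frozenset(c) for c in clauses}             # duplicates collapse in the set
    changed = True
    while changed:                                    # each change removes or shrinks a clause
        changed = False
        for c in sorted(cls, key=len):
            if c not in cls:
                continue
            for d in list(cls):
                if d == c or d not in cls:
                    continue
                if c <= d:                            # c subsumes d: d is redundant
                    cls.discard(d)
                    changed = True
                    continue
                for lit in c:
                    # self-subsumption: c = C ∨ l, d = D ∨ ¬l with C ⊆ D, so D ∨ ¬l shrinks to D
                    if -lit in d and (c - {lit}) <= d:
                        cls.discard(d)
                        cls.add(d - {-lit})
                        changed = True
                        break
    return cls


# (1 ∨ 2), a duplicate, (1 ∨ 2 ∨ 3), (¬1 ∨ 2 ∨ 4), (¬1 ∨ 2): everything reduces to the unit clause 2
print(simplify([[1, 2], [1, 2], [1, 2, 3], [-1, 2, 4], [-1, 2]]))
```

Strengthening (1 ∨ 2) against (¬1 ∨ 2) yields the unit clause 2, which then subsumes every other clause; the result is equivalent to the input formula.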

![Model Generator](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-22-320.jpg)

1. I := preprocessing()
2. I := propagation(I)
3. If I is inconsistent, call analyzeConflict(I) (learning):
   - [fail] the program is incoherent; stop.
   - [succeed] I := restoreConsistency(I) (backjumping); go to step 2.
4. If I is consistent and has no undefined literals: AnswerSet := I; stop.
5. Otherwise, I := chooseUndefinedLiteral(I); go to step 2.
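The propagate/choose loop can be sketched on a clause set, for instance the Clark's completion of a tight program, whose models coincide with its answer sets. This hypothetical sketch simplifies the flow: propagation is plain unit propagation, preprocessing is skipped, and instead of conflict analysis with learning and backjumping it backtracks chronologically through a stack of saved states. So it is DPLL rather than the learning-based loop of the slide. Literals are DIMACS-style integers.

```python
def propagate(clauses, assignment):
    """Unit propagation in place; returns False on conflict. assignment: var -> bool."""
    changed = True
    while changed:
        changed = False
        for clause in clauses:
            unassigned, satisfied = [], False
            for lit in clause:
                val = assignment.get(abs(lit))
                if val is None:
                    unassigned.append(lit)
                elif val == (lit > 0):
                    satisfied = True
                    break
            if satisfied:
                continue
            if not unassigned:
                return False                      # inconsistent: clause falsified
            if len(unassigned) == 1:
                lit = unassigned[0]
                assignment[abs(lit)] = lit > 0    # implied literal
                changed = True
    return True


def model_generator(clauses, num_vars):
    """Returns a model (dict var -> bool) or None if the clause set is incoherent."""
    stack = [{}]                                  # saved states for chronological backtracking
    while stack:
        assignment = stack.pop()
        if not propagate(clauses, assignment):
            continue                              # conflict: backtrack to the last choice
        undefined = [v for v in range(1, num_vars + 1) if v not in assignment]
        if not undefined:
            return assignment                     # no undefined literals: model found
        v = undefined[0]                          # naive chooseUndefinedLiteral
        stack.append({**assignment, v: False})    # explored second
        stack.append({**assignment, v: True})     # explored first
    return None


# Completion of "a ← not b. b ← not a." with a = 1, b = 2: (a ∨ b), (¬a ∨ ¬b)
print(model_generator([[1, 2], [-1, -2]], 2))     # {1: True, 2: False}
```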

![Unfounded-free check](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-26-320.jpg)

HCF (head-cycle-free) programs:

- The unfounded-free check can be done in polynomial time.
- Algorithm based on source pointers [Simons et al., Artif. Intell. 2002]

Non-HCF programs:

- The unfounded-free check is co-NP-complete.
- Algorithm based on calls to a SAT oracle [Koch et al., Artif. Intell. 2003]
  - Input: a program Π and an interpretation I
  - Output: a formula ϕ such that I is unfounded-free if and only if ϕ is unsatisfiable
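For normal (disjunction-free) programs, which are trivially HCF, the polynomial check can be illustrated with a simple fixpoint. This is not the source-pointer algorithm of Simons et al.; it is a hypothetical sketch assuming rules given as `(head, positive_body, negative_body)` triples. An atom of I is marked as founded when some rule for it has its negative body false in I and its positive body already founded. The unmarked atoms of I form an unfounded set, and I is unfounded-free exactly when none remain.

```python
def unfounded_atoms(program, interpretation):
    """Atoms of I without non-circular support; the result is empty iff I is unfounded-free.

    program: iterable of (head, pos_body, neg_body) for a ground normal program.
    interpretation: set of atoms true in I (all other atoms are false).
    """
    interpretation = set(interpretation)
    founded = set()
    changed = True
    while changed:
        changed = False
        for head, pos, neg in program:
            if (head in interpretation and head not in founded
                    and not (set(neg) & interpretation)   # negative body false in I
                    and set(pos) <= founded):             # positive body already founded
                founded.add(head)
                changed = True
    return interpretation - founded


# a ← b.   b ← a.   c ← not d.
prog = [("a", ["b"], []), ("b", ["a"], []), ("c", [], ["d"])]
print(sorted(unfounded_atoms(prog, {"a", "b", "c"})))   # ['a', 'b']: a and b only support each other
print(sorted(unfounded_atoms(prog, {"c"})))             # []: {c} is unfounded-free
```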

![Model-guided algorithms](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-37-320.jpg)

I need a solution! Give me any answer set.

1. Remove the weak constraints from the program.
2. Run the model generator.
3. [coherent] Update the upper bound, add the violated weak constraints to the program, and go to step 2.
4. [incoherent] Optimum found.
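A minimal sketch of the model-guided loop follows, under loudly stated assumptions: the model generator is a hypothetical brute-force oracle over n Boolean variables, hard constraints and weak-constraint bodies are Python predicates, and the "add to the program" step is realized as a single bound constraint "cost < upper bound". That is one common way to force a strictly better answer set, not necessarily the exact encoding used on the slide.

```python
from itertools import product


def model_generator(n, constraints):
    """Brute-force stand-in oracle over n Boolean variables (exponential, illustration only)."""
    for bits in product((False, True), repeat=n):
        if all(c(bits) for c in constraints):
            return bits
    return None


def cost(bits, weak):
    """Sum of the weights of the weak constraints whose body is true (i.e., violated)."""
    return sum(w for w, body in weak if body(bits))


def model_guided(n, hard, weak):
    best, upper = None, None
    bound = []                                      # constraint added after each answer set
    while True:
        model = model_generator(n, hard + bound)   # weak constraints are not passed
        if model is None:
            return best, upper                      # incoherent: the last model is optimal
        best, upper = model, cost(model, weak)      # update upper bound
        # From now on, only strictly cheaper answer sets are accepted
        bound = [lambda bits, ub=upper: cost(bits, weak) < ub]


# Hypothetical instance: hard x0 ∨ x1 and x0 → x2; weak :~ x1 [3], :~ x0 [1], :~ x2 [1]
hard = [lambda b: b[0] or b[1], lambda b: (not b[0]) or b[2]]
weak = [(3, lambda b: b[1]), (1, lambda b: b[0]), (1, lambda b: b[2])]
print(model_guided(3, hard, weak))   # ((True, False, True), 2)
```

The upper bound goes from 3 to 2, and the third call is incoherent, which proves that 2 is optimal. The first answer set arrives immediately, which is the appeal of this strategy when any solution is better than none.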

![Core-guided algorithms](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-38-320.jpg)

I feel lucky! Try to satisfy all weak constraints.

1. Consider the weak constraints as hard.
2. Run the model generator.
3. [incoherent] Analyze the unsatisfiable core, update the lower bound, and go to step 2.
4. [coherent] Optimum found.
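One concrete core-guided strategy, sketched here in the spirit of MSU3 (a technique swapped in for illustration, not necessarily the one on the slide), assumes unit-weight weak constraints and reuses a hypothetical brute-force oracle. Unrelaxed weak constraints are treated as hard; after each incoherent call, the core is minimized by deletion, its weak constraints are relaxed, and the lower bound grows by one, with a cardinality constraint allowing at most `lower` violations among the relaxed ones. The first coherent call is optimal.

```python
from itertools import product


def model_generator(n, constraints):
    """Brute-force stand-in oracle over n Boolean variables (exponential, illustration only)."""
    for bits in product((False, True), repeat=n):
        if all(c(bits) for c in constraints):
            return bits
    return None


def as_hard(body):
    """A weak constraint considered as hard: its body must be false."""
    return lambda b: not body(b)


def at_most(k, indexes, weak):
    """Cardinality constraint: at most k of the given weak constraints are violated."""
    return lambda b: sum(1 for i in indexes if weak[i](b)) <= k


def core_guided(n, hard, weak):
    """weak: list of unit-weight bodies (violated when true). Returns (model, optimum cost)."""
    if model_generator(n, hard) is None:
        return None, None                                 # the program itself is incoherent
    relaxed, lower = set(), 0
    while True:
        card = at_most(lower, frozenset(relaxed), weak)
        strict = {i: as_hard(body) for i, body in enumerate(weak) if i not in relaxed}
        model = model_generator(n, hard + [card] + list(strict.values()))
        if model is not None:
            return model, lower                           # coherent: optimum found
        core = set(strict)                                # incoherent: analyze the core
        for i in sorted(strict):                          # deletion-based minimization
            if model_generator(n, hard + [card] + [strict[j] for j in core - {i}]) is None:
                core.discard(i)
        relaxed |= core
        lower += 1                                        # update lower bound


# Hypothetical instance: hard x0 ∨ x1 and x0 → x2; unit weak constraints on x0, x1, x2
hard = [lambda b: b[0] or b[1], lambda b: (not b[0]) or b[2]]
weak = [lambda b: b[0], lambda b: b[1], lambda b: b[2]]
print(core_guided(3, hard, weak))   # ((False, True, False), 1)
```

Each incoherent call proves that one more violation is unavoidable, so the bound climbs from below and never reports a non-optimal answer set.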

![Stratification](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-40-320.jpg)

1. wₘₐₓ := +∞
2. wₘₐₓ := max{wᵢ | rᵢ ∈ weak(Π) ∧ wᵢ < wₘₐₓ} (taken as 0 when no such weight exists)
3. Consider the weak constraints in {rᵢ | wᵢ ≥ wₘₐₓ} as hard.
4. Run the model generator.
5. [incoherent] Analyze the unsatisfiable core and go to step 4.
6. [coherent] If wₘₐₓ = 0, the optimum has been found; otherwise (wₘₐₓ ≠ 0), go to step 2.
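The stratification rule only decides which weak constraints enter each pass. The sketch below, with hypothetical function names and assuming positive weights, computes the sequence of wₘₐₓ values and the indexes of the weak constraints treated as hard in each stratum; the core-guided loop that would run on each stratum is omitted.

```python
import math


def next_wmax(weights, wmax):
    """wmax := max{wi | wi < wmax}, taken as 0 when no smaller weight exists."""
    smaller = [w for w in weights if w < wmax]
    return max(smaller) if smaller else 0


def strata(weights):
    """Yield (wmax, indexes of weak constraints considered as hard) for each stratum."""
    wmax = math.inf
    while wmax != 0:
        wmax = next_wmax(weights, wmax)
        # The core-guided loop would run here on the weak constraints with weight >= wmax
        yield wmax, [i for i, w in enumerate(weights) if w >= wmax]


print(list(strata([1, 5, 5, 3])))
# [(5, [1, 2]), (3, [1, 2, 3]), (1, [0, 1, 2, 3]), (0, [0, 1, 2, 3])]
```

Heavy weak constraints are settled first, so early answer sets tend to have good costs; the final pass with wₘₐₓ = 0 considers every weak constraint, which is what guarantees optimality.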

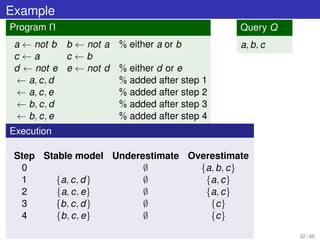

![Cautious reasoning by enumeration of models](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-43-320.jpg)

1. Answers := ∅; Candidates := Query
2. Run the model generator on Π.
3. [coherent] Candidates := Candidates ∩ AnswerSet; Π := Π ∪ Constraint(AnswerSet); go to step 2.
4. [incoherent] Answers := Candidates; return Answers.
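The enumeration loop can be sketched by standing in for the model generator with a hypothetical oracle over a precomputed list of answer sets, where adding a constraint to Π is modeled as filtering that list. `Constraint(AnswerSet)` is taken to exclude exactly the answer set just found.

```python
def model_generator(answer_sets, constraints):
    """Hypothetical stand-in: the first answer set of Π satisfying all added constraints."""
    for m in answer_sets:
        if all(c(m) for c in constraints):
            return m
    return None


def cautious_by_enumeration(answer_sets, query):
    candidates = set(query)                          # Candidates := Query
    constraints = []                                 # constraints added to Π
    while True:
        m = model_generator(answer_sets, constraints)
        if m is None:                                # incoherent
            return candidates                        # Answers := Candidates
        candidates &= m                              # Candidates := Candidates ∩ AnswerSet
        constraints.append(lambda x, m=m: x != m)    # Constraint(AnswerSet): block this one


answer_sets = [frozenset("abc"), frozenset("acd"), frozenset("ace")]
print(sorted(cautious_by_enumeration(answer_sets, {"a", "b", "c", "d"})))   # ['a', 'c']
```

This visits every answer set before it stops, so it needs one model generator call per answer set plus a final incoherent call.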

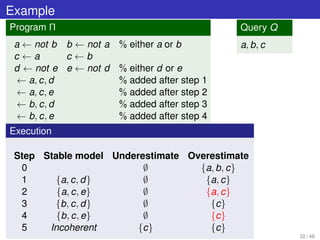

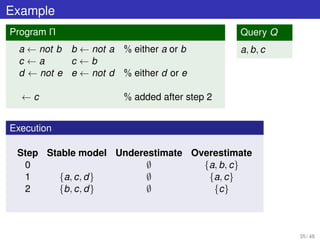

![Cautious reasoning by overestimate reduction](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-52-320.jpg)

1. Answers := ∅; Candidates := Query
2. Run the model generator on Π.
3. [coherent] Candidates := Candidates ∩ AnswerSet; Π := Π ∪ Constraint(Candidates); go to step 2.
4. [incoherent] Answers := Candidates; return Answers.
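The only change with respect to enumeration is the added constraint. In this sketch, again using a hypothetical oracle over a precomputed list of answer sets, `Constraint(Candidates)` is read as "← c₁, …, cₙ" over the current candidates, i.e., the next answer set must falsify at least one remaining candidate.

```python
def model_generator(answer_sets, constraints):
    """Hypothetical stand-in: the first answer set of Π satisfying all added constraints."""
    for m in answer_sets:
        if all(c(m) for c in constraints):
            return m
    return None


def cautious_by_overestimate_reduction(answer_sets, query):
    candidates = set(query)                          # Candidates := Query
    constraints = []
    while True:
        m = model_generator(answer_sets, constraints)
        if m is None:                                # incoherent
            return candidates                        # Answers := Candidates
        candidates &= m                              # Candidates := Candidates ∩ AnswerSet
        # Constraint(Candidates): some current candidate must be false
        constraints.append(lambda x, c=frozenset(candidates): not c <= x)


answer_sets = [frozenset("abc"), frozenset("acd"), frozenset("ace")]
print(sorted(cautious_by_overestimate_reduction(answer_sets, {"a", "b", "c", "d"})))   # ['a', 'c']
```

After the first call, every coherent call removes at least one candidate, so the number of calls is bounded by |Query| + 2 regardless of how many answer sets Π has.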

![Cautious reasoning by iterative coherence testing](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-59-320.jpg)

1. Answers := ∅; Candidates := Query
2. If Answers = Candidates, return Answers.
3. a := OneOf(Candidates \ Answers)
4. Run the model generator on Π ∪ {← a}.
5. [coherent] Candidates := Candidates ∩ AnswerSet; go to step 2.
6. [incoherent] Answers := Answers ∪ {a}; go to step 2.
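Iterative coherence testing asks one targeted question per candidate. In this sketch, with the same kind of hypothetical oracle over a precomputed list of answer sets, adding "← a" is modeled as requiring that a is false, and `OneOf` is made deterministic by picking the smallest remaining candidate.

```python
def model_generator(answer_sets, constraints):
    """Hypothetical stand-in: the first answer set of Π satisfying all added constraints."""
    for m in answer_sets:
        if all(c(m) for c in constraints):
            return m
    return None


def cautious_by_coherence_testing(answer_sets, query):
    answers, candidates = set(), set(query)         # Answers := ∅; Candidates := Query
    while answers != candidates:
        a = min(candidates - answers)               # OneOf(Candidates \ Answers)
        m = model_generator(answer_sets, [lambda x, a=a: a not in x])   # Π ∪ {← a}
        if m is None:
            answers.add(a)                          # incoherent: a is in every answer set
        else:
            candidates &= m                         # coherent: a (and maybe more) ruled out
    return answers


answer_sets = [frozenset("abc"), frozenset("acd"), frozenset("ace")]
print(sorted(cautious_by_coherence_testing(answer_sets, {"a", "b", "c", "d"})))   # ['a', 'c']
```

Each iteration either confirms a candidate or discards it, so at most |Query| calls are made; confirmed answers are never lost, because they belong to every answer set returned later.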

![Bibliography (1)](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-75-320.jpg)

- [Arenas et al., TPLP 2003] M. Arenas, L. E. Bertossi, J. Chomicki. Answer sets for consistent query answering in inconsistent databases. TPLP 3(4-5): 393-424 (2003).
- [Balduccini et al., LPNMR 2001] M. Balduccini, M. Gelfond, R. Watson, M. Nogueira. The USA-Advisor: A Case Study in Answer Set Planning. LPNMR 2001: 439-442.
- [Eén and Biere, SAT 2005] N. Eén, A. Biere. Effective Preprocessing in SAT Through Variable and Clause Elimination. SAT 2005: 61-75.
- [Eiter, LPNMR 2005] T. Eiter. Data Integration and Answer Set Programming. LPNMR 2005: 13-25.
- [Gebser et al., TPLP 2011] M. Gebser, T. Schaub, S. Thiele, P. Veber. Detecting inconsistencies in large biological networks with answer set programming. TPLP 11(2-3): 323-360 (2011).

![Bibliography (2)](https://image.slidesharecdn.com/slides-160531175605/85/Efficient-Solving-Techniques-for-Answer-Set-Programming-76-320.jpg)

- [Koch et al., Artif. Intell. 2003] C. Koch, N. Leone, G. Pfeifer. Enhancing disjunctive logic programming systems by SAT checkers. Artif. Intell. 151(1-2): 177-212 (2003).
- [Koponen et al., TPLP 2015] L. Koponen, E. Oikarinen, T. Janhunen, L. Säilä. Optimizing phylogenetic supertrees using answer set programming. TPLP 15(4-5): 604-619 (2015).
- [Marra et al., JELIA 2014] G. Marra, F. Ricca, G. Terracina, D. Ursino. Exploiting Answer Set Programming for Handling Information Diffusion in a Multi-Social-Network Scenario. JELIA 2014: 618-627.
- [Simons et al., Artif. Intell. 2002] P. Simons, I. Niemelä, T. Soininen. Extending and implementing the stable model semantics. Artif. Intell. 138(1-2): 181-234 (2002).