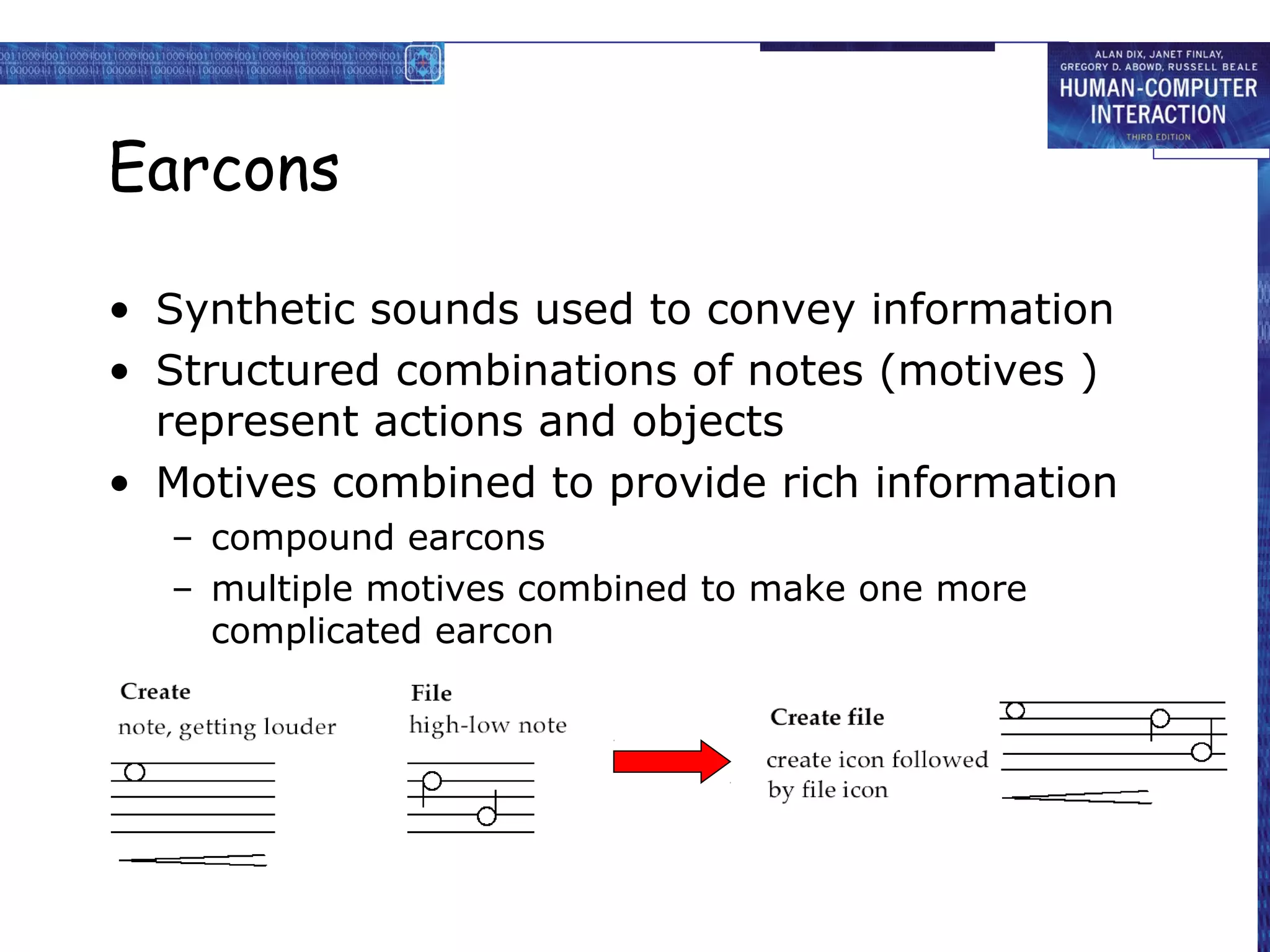

Universal design aims to make systems usable by all people, to the greatest extent possible, without the need for adaptation. Its seven principles are equitable use, flexibility in use, simple and intuitive use, perceptible information, tolerance for error, low physical effort, and size and space for approach and use. Multi-sensory systems interact through more than one sensory channel, such as vision, sound, and touch. Computers can use vision, sound, and sometimes touch, but they cannot yet use taste or smell. Different technologies are discussed that incorporate these senses in ways that are usable for all users.