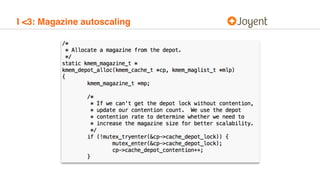

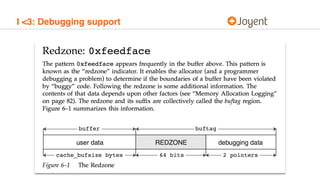

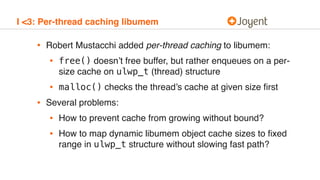

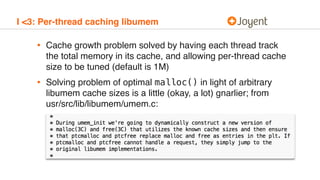

The document discusses the slab allocator, which Jeff Bonwick created two decades ago to manage kernel memory efficiently. The author describes several aspects of the slab allocator that they love: its object caching, its debugging support, its integration with tools such as MDB, and its port to userspace as libumem. libumem initially performed worse than other allocators for small allocations, so per-thread caching was later added to improve its performance. The slab allocator remains central to illumos and has stood the test of time thanks to its thoughtful design.
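The caching mentioned above is object caching. A cache hands out fixed-size objects that were constructed once, when their slab was carved up. A freed object goes back to the cache still in its constructed state, so the next allocation can skip initialization. The code below is a minimal sketch of that idea under stated assumptions. The names `obj_cache_t`, `obj_cache_create`, `obj_cache_alloc`, `obj_cache_free`, and `conn_t` are hypothetical. This is not the illumos `kmem_cache_*` or libumem `umem_cache_*` API. It leaves out destructors, magazines, per-CPU or per-thread layers, cache coloring, and memory reclaim.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

typedef void (*obj_ctor_t)(void *obj);

/* Hypothetical object cache (not the illumos kmem API). */
typedef struct obj_cache {
    size_t obj_size;    /* assumed to be a multiple of the object's alignment */
    size_t slab_objs;   /* objects carved from each slab */
    obj_ctor_t ctor;    /* run once per object, when its slab is created */
    void **free;        /* stack of free objects, all still constructed */
    size_t nfree, cap;  /* cap always equals the total objects in the cache */
    void **slabs;       /* every slab, so the cache can release them */
    size_t nslabs;
    size_t ctor_calls;  /* constructor invocations, for illustration */
} obj_cache_t;

obj_cache_t *obj_cache_create(size_t obj_size, size_t slab_objs, obj_ctor_t ctor)
{
    obj_cache_t *c = calloc(1, sizeof(*c));
    if (c != NULL) {
        c->obj_size = obj_size;
        c->slab_objs = slab_objs;
        c->ctor = ctor;
    }
    return c;
}

/* Allocate a new slab, construct each of its objects once, and push them as free. */
static int cache_grow(obj_cache_t *c)
{
    char *slab = malloc(c->obj_size * c->slab_objs);
    if (slab == NULL)
        return -1;
    void **slabs = realloc(c->slabs, (c->nslabs + 1) * sizeof(void *));
    if (slabs == NULL) {
        free(slab);
        return -1;
    }
    c->slabs = slabs;
    c->slabs[c->nslabs++] = slab;

    /* The free stack must be able to hold every object the cache owns. */
    size_t need = c->nslabs * c->slab_objs;
    void **f = realloc(c->free, need * sizeof(void *));
    if (f == NULL)
        return -1; /* the slab stays tracked and is released on destroy */
    c->free = f;
    c->cap = need;

    for (size_t i = 0; i < c->slab_objs; i++) {
        void *obj = slab + i * c->obj_size;
        if (c->ctor != NULL) {
            c->ctor(obj);
            c->ctor_calls++;
        }
        c->free[c->nfree++] = obj;
    }
    return 0;
}

/* Pop a constructed object; grow the cache only when no free object is left. */
void *obj_cache_alloc(obj_cache_t *c)
{
    if (c->nfree == 0 && cache_grow(c) != 0)
        return NULL;
    return c->free[--c->nfree];
}

/* The caller must return the object in its constructed state; nothing is re-run. */
void obj_cache_free(obj_cache_t *c, void *obj)
{
    assert(c->nfree < c->cap); /* more frees than objects means a caller bug */
    c->free[c->nfree++] = obj;
}

void obj_cache_destroy(obj_cache_t *c)
{
    for (size_t i = 0; i < c->nslabs; i++)
        free(c->slabs[i]);
    free(c->slabs);
    free(c->free);
    free(c);
}

/* Hypothetical example object with a constructor. */
typedef struct conn {
    int refcnt;
    char buf[64];
} conn_t;

static void conn_ctor(void *obj)
{
    conn_t *cp = obj;
    cp->refcnt = 0;
    memset(cp->buf, 0, sizeof(cp->buf));
}
```

Suppose a `conn_t` is allocated, freed, and allocated again. The second allocation gets the same buffer back, and the constructor count does not change. The cost of construction is paid only when a new slab is created. A LIFO free stack is used because it also tends to return recently used, cache-warm memory.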