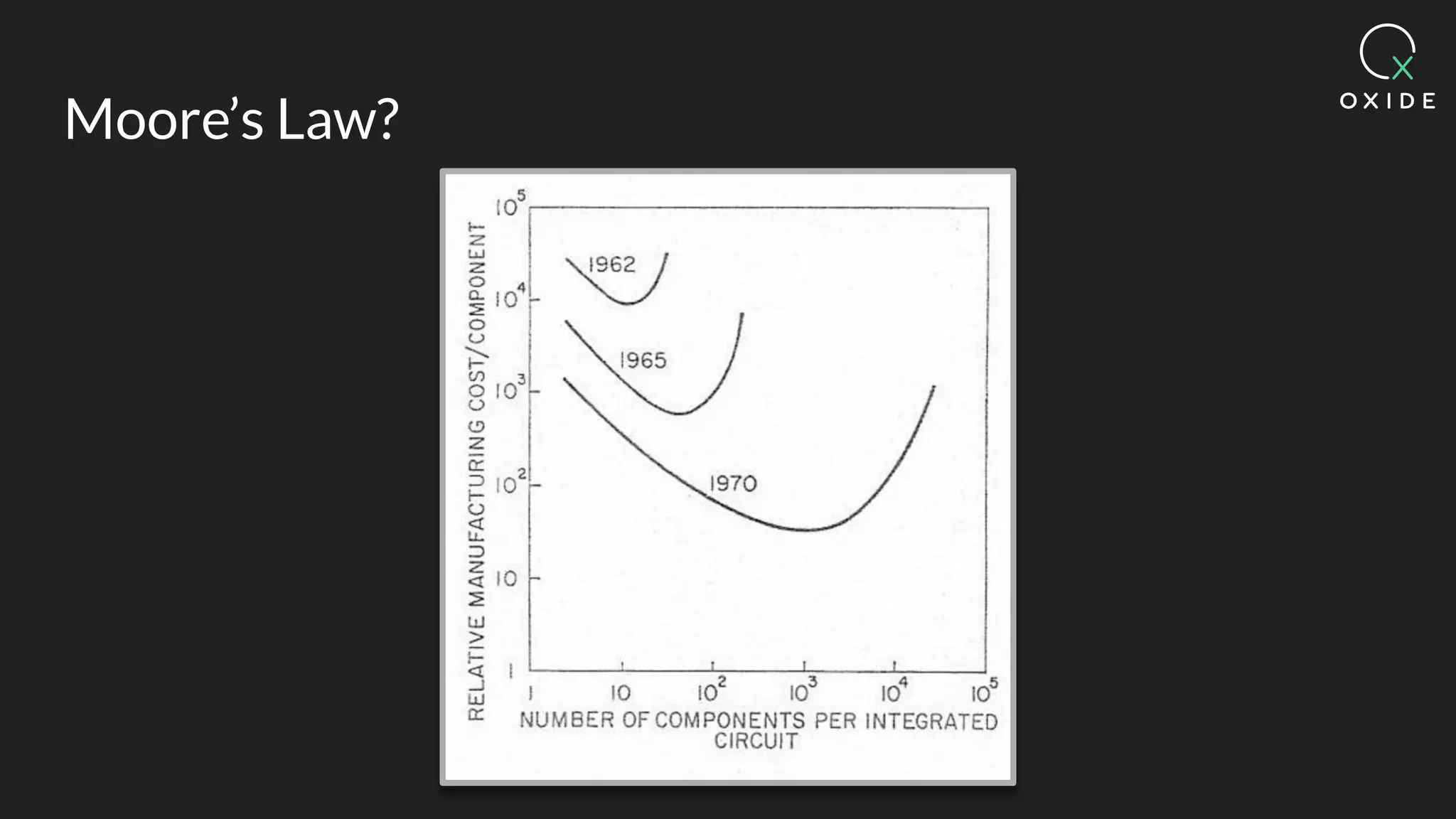

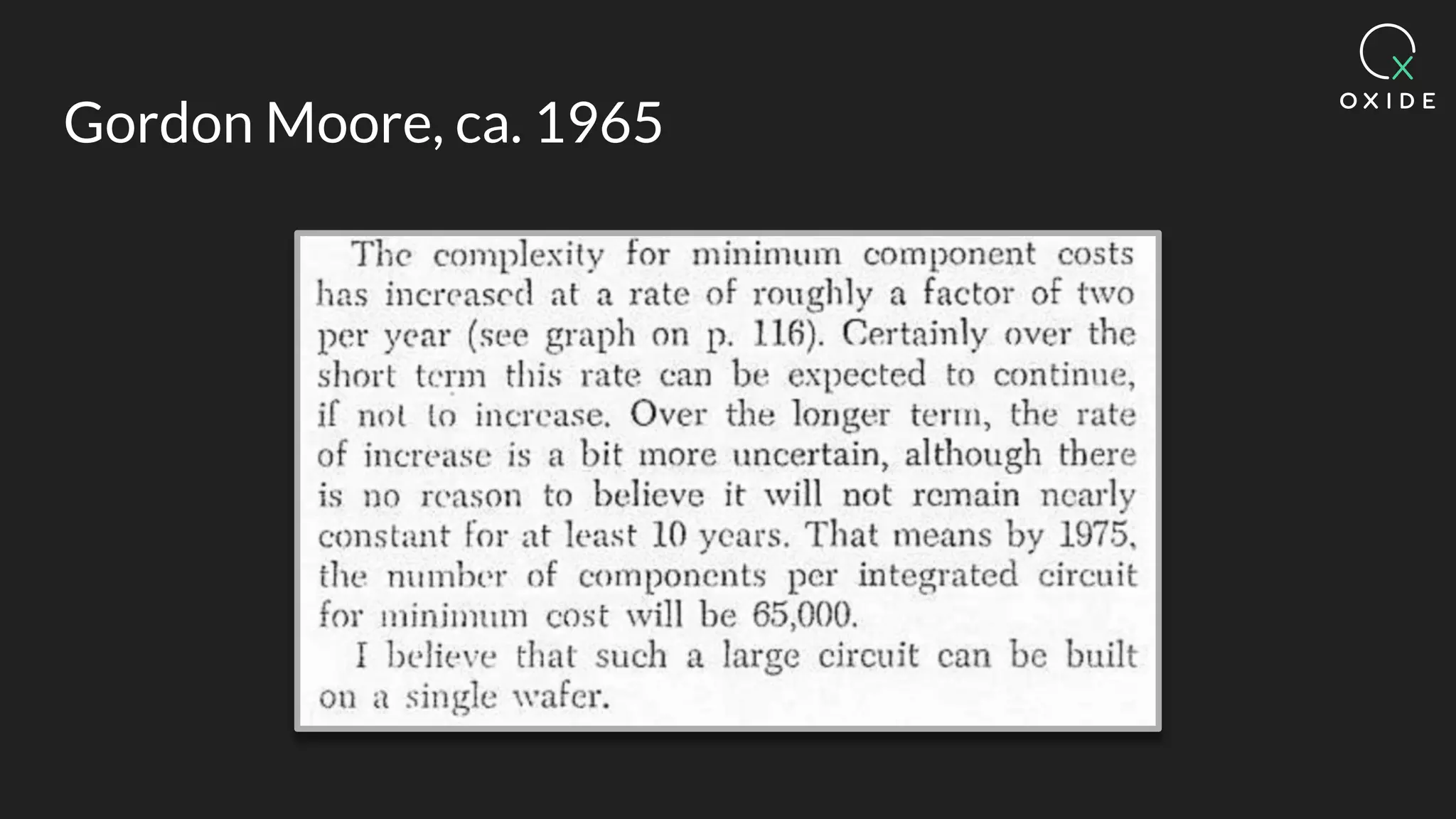

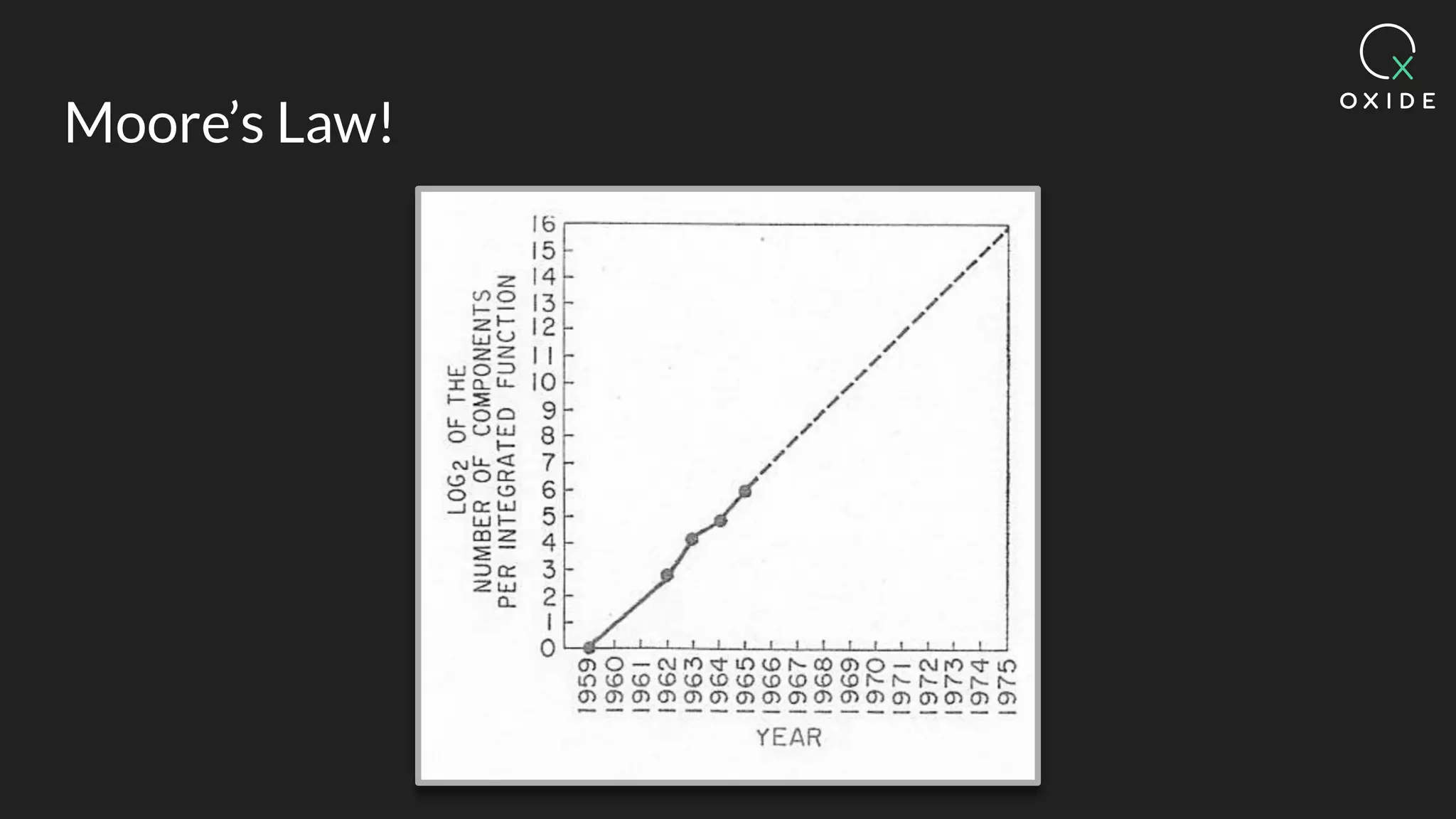

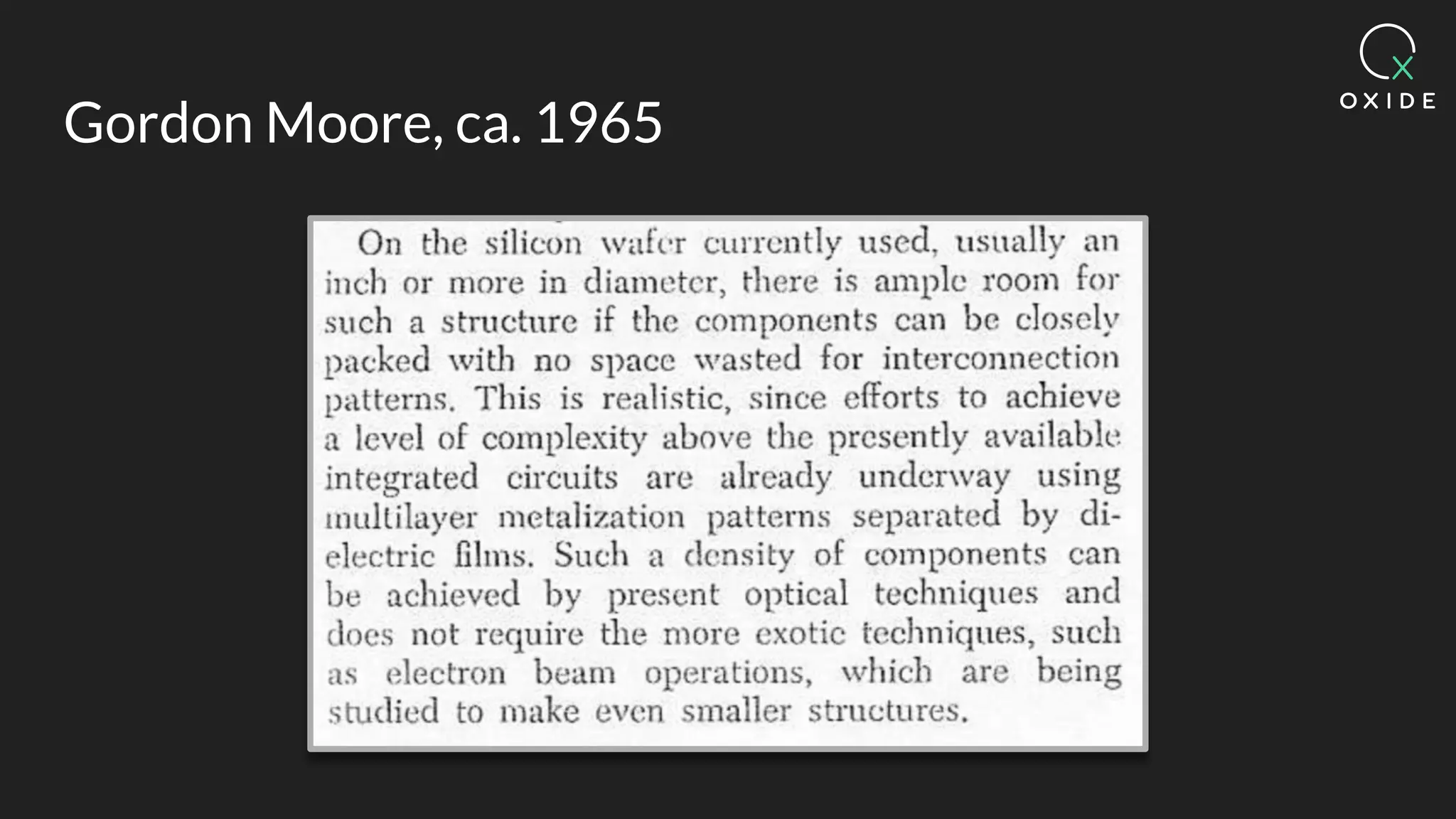

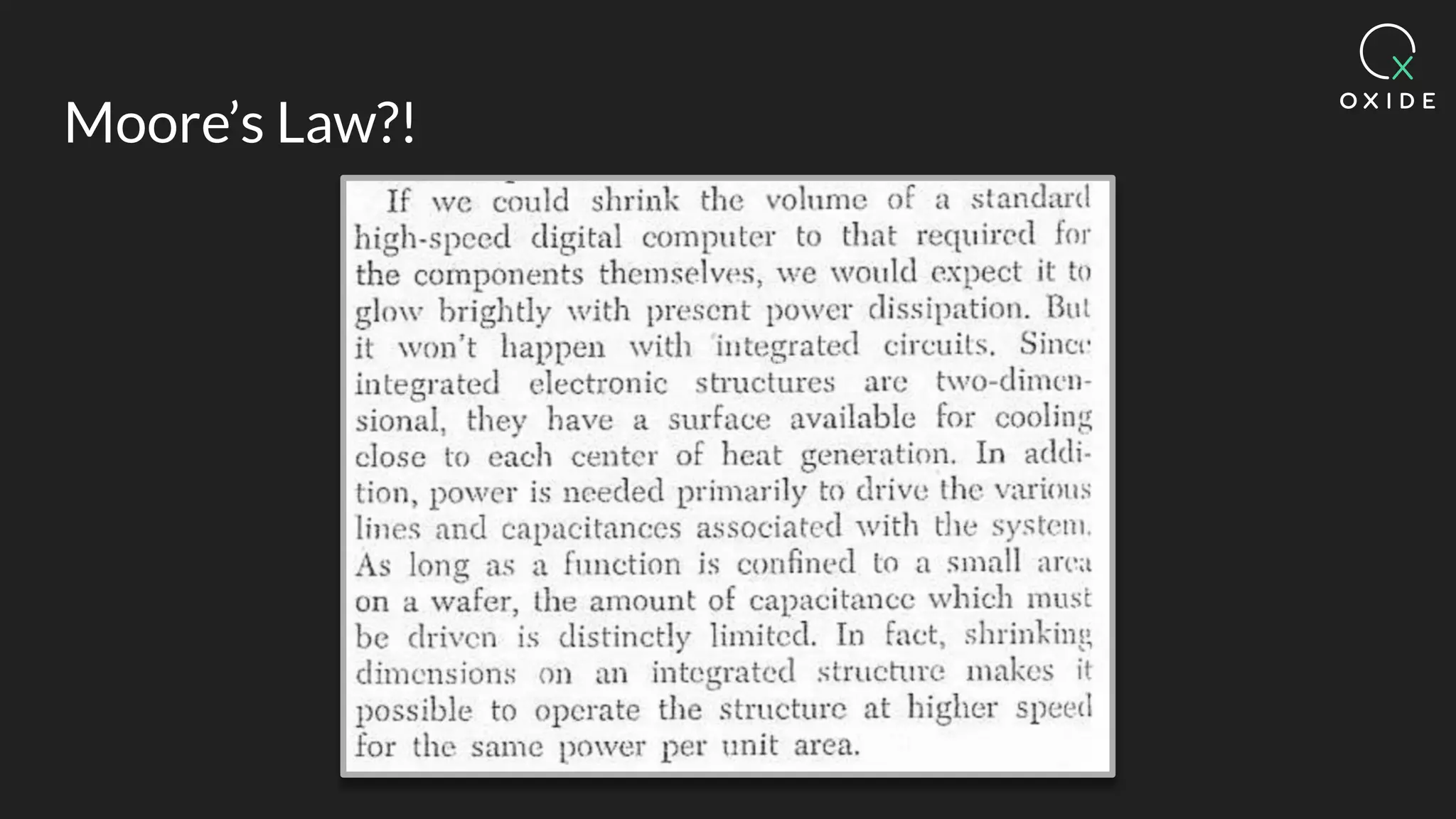

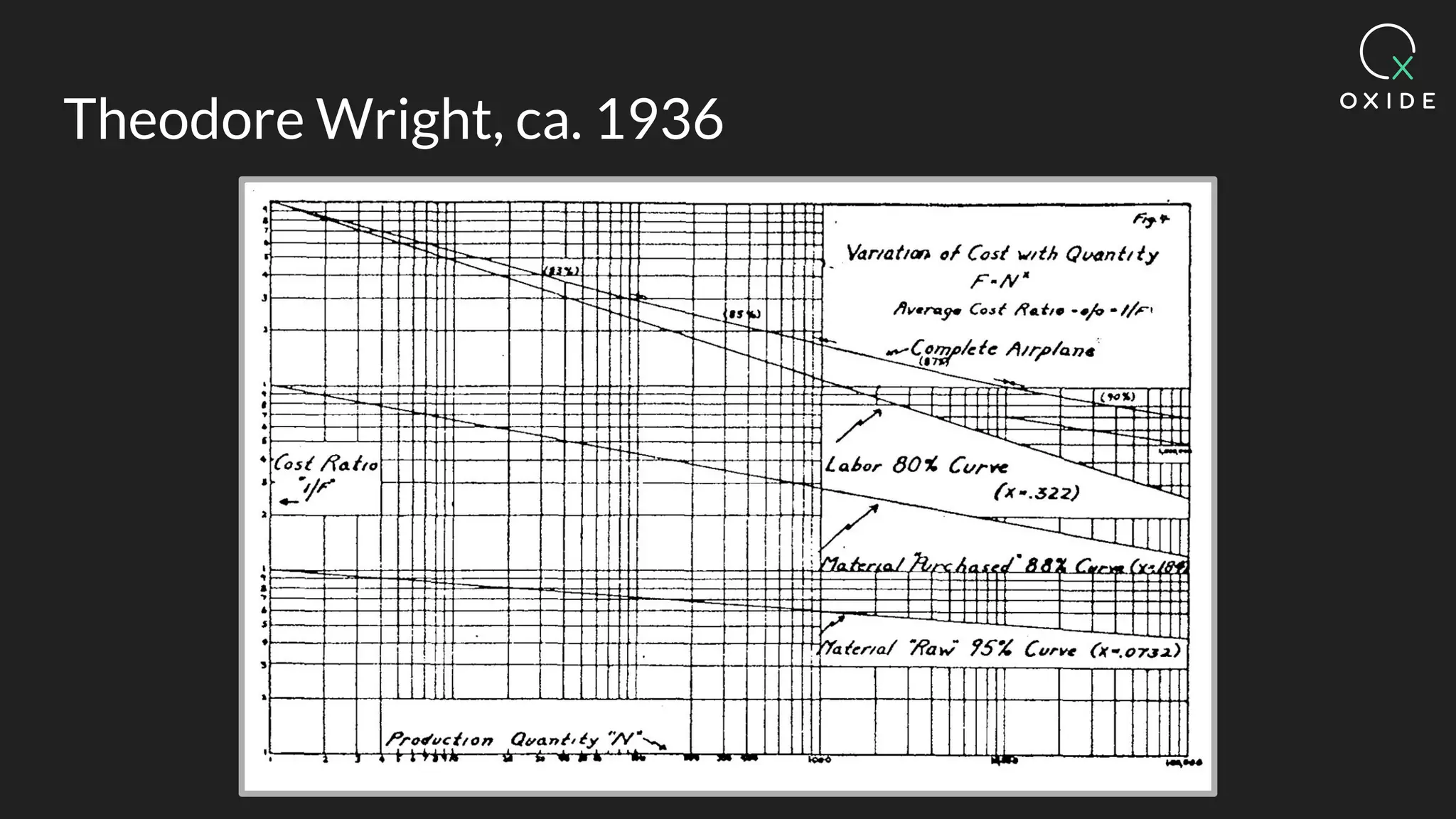

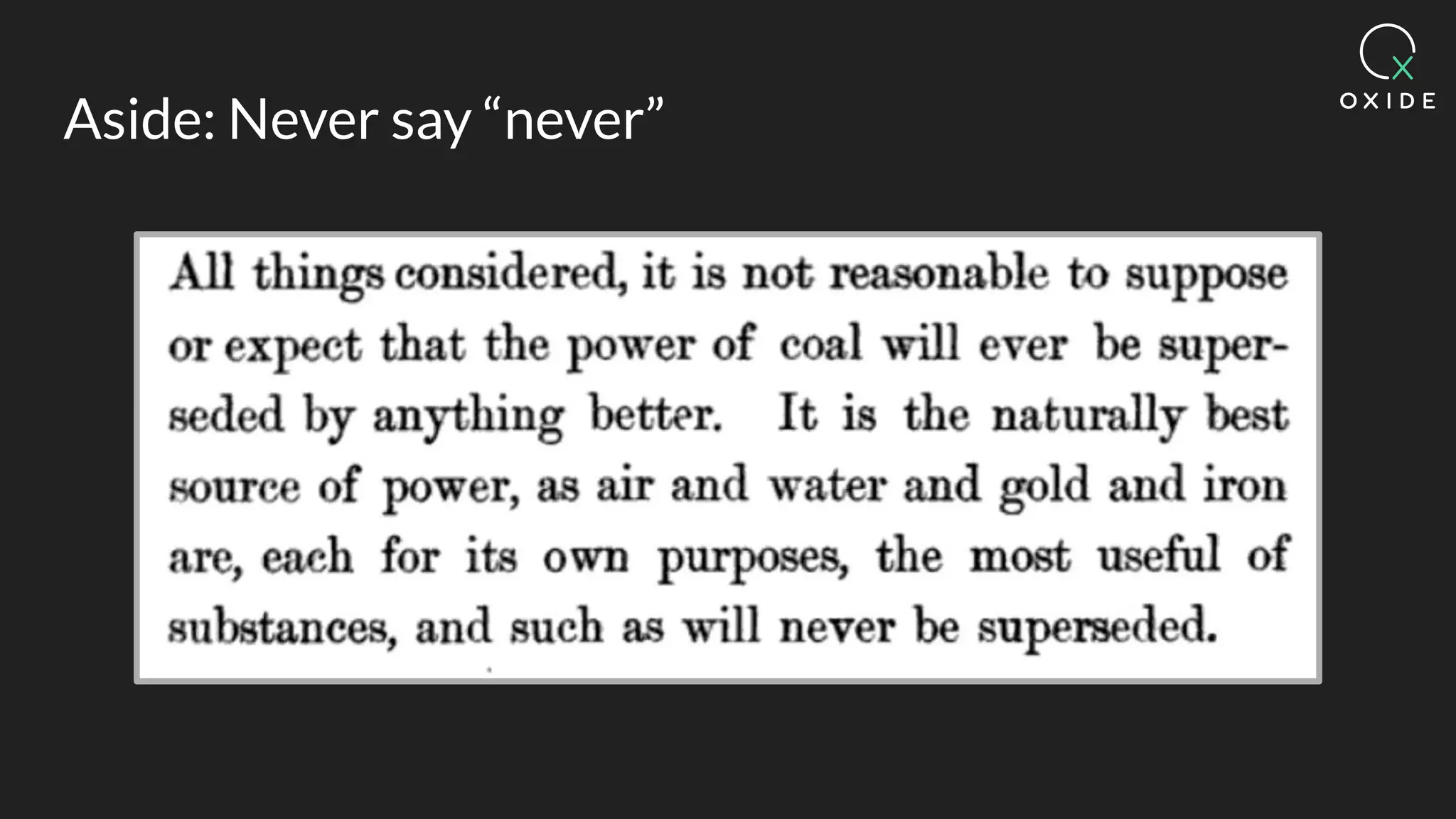

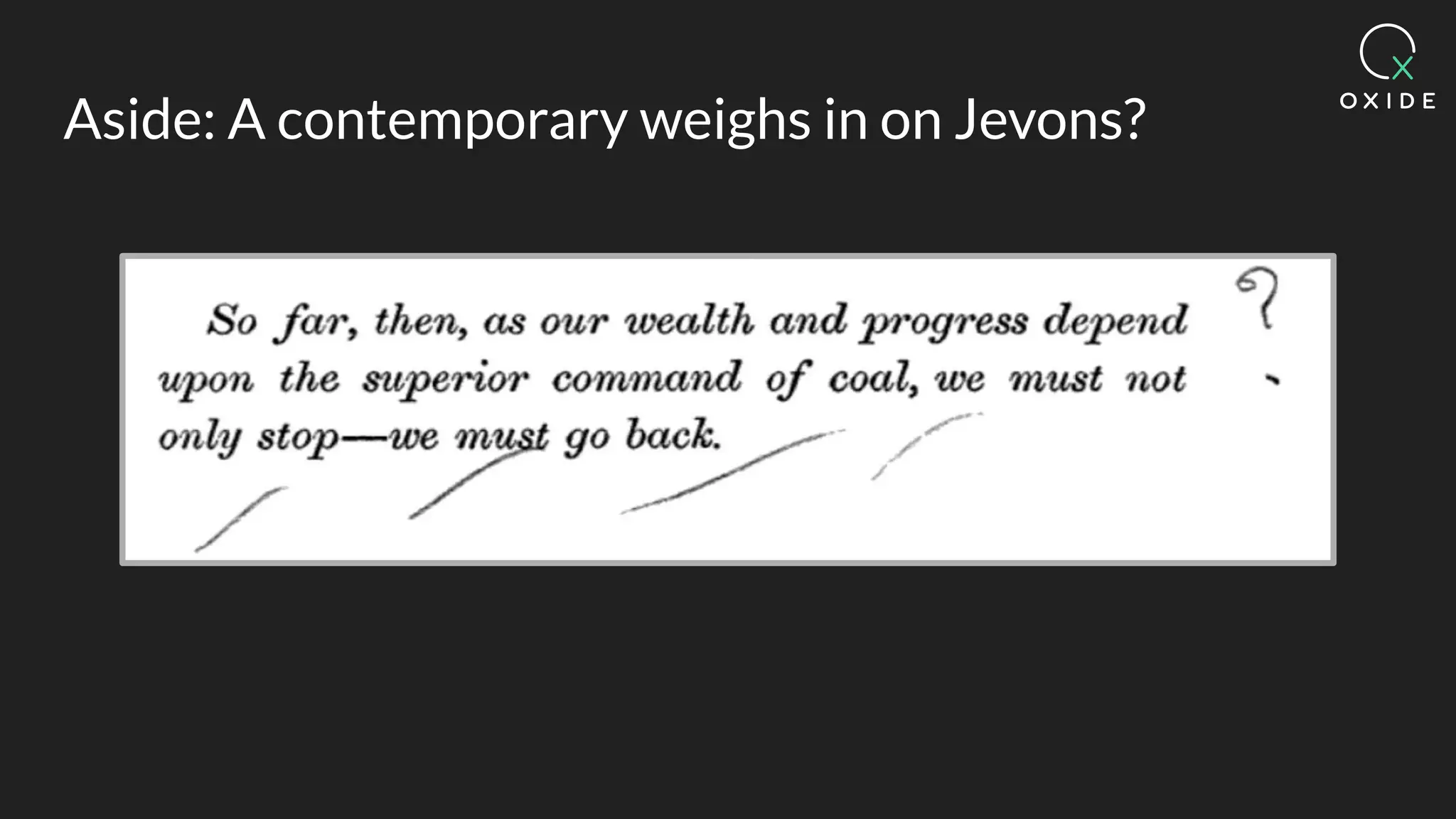

The document discusses the evolving landscape of hardware and software co-design and warns against emphasizing software so heavily that hardware is treated as irrelevant. It critiques Marc Andreessen's 2011 assertion that "software is eating the world", notes that Moore's Law is slowing, and considers Wright's Law, which ties falling unit cost to cumulative production volume, as an alternative predictor of transistor costs. It also emphasizes the importance of open-source practices and flexible hardware designs in developing future computing systems.
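
To make the Wright's Law reference concrete, here is a minimal sketch of its usual form: unit cost falls by a fixed fraction each time cumulative production doubles. The function name, parameter names, and the numbers in the usage note are illustrative assumptions, not figures from the document.

```python
import math


def wright_cost(first_unit_cost, cumulative_units, learning_rate):
    """Estimate unit cost after `cumulative_units` have been produced.

    Wright's Law: cost(n) = cost(1) * n ** (-b), where each doubling of
    cumulative output cuts unit cost by `learning_rate` (e.g. 0.2 = 20%).
    All inputs here are hypothetical; real learning rates are fitted
    to historical production data.
    """
    if cumulative_units < 1:
        raise ValueError("cumulative_units must be at least 1")
    if not 0.0 <= learning_rate < 1.0:
        raise ValueError("learning_rate must be in [0, 1)")
    # Convert the per-doubling cost reduction into the power-law exponent b.
    b = -math.log2(1.0 - learning_rate)
    return first_unit_cost * cumulative_units ** (-b)
```

With an assumed 20% learning rate and a first-unit cost of 100, `wright_cost(100.0, 2, 0.2)` gives about 80 and `wright_cost(100.0, 4, 0.2)` about 64. Unlike Moore's Law, which is indexed to calendar time, this prediction depends on how much has been produced.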