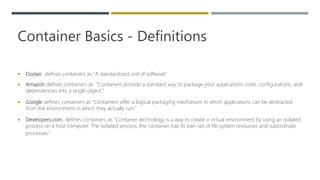

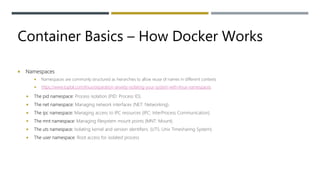

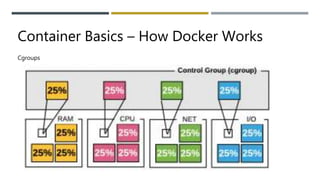

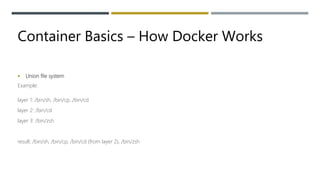

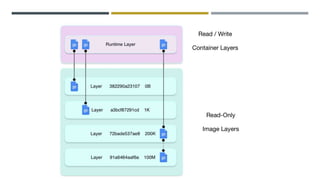

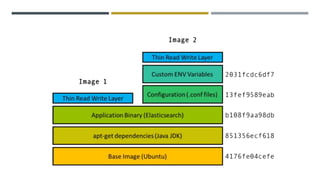

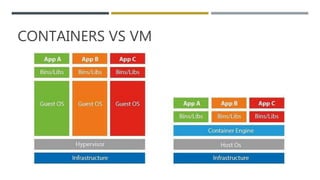

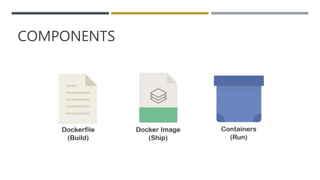

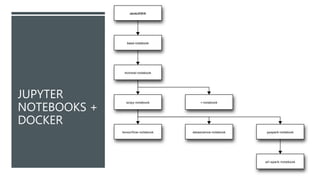

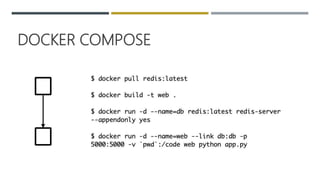

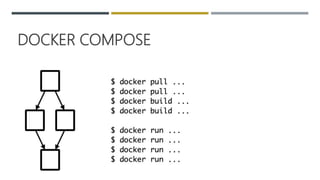

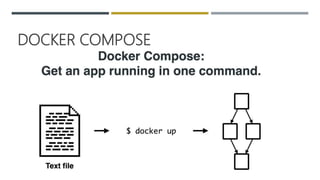

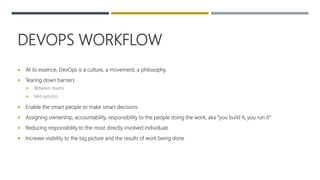

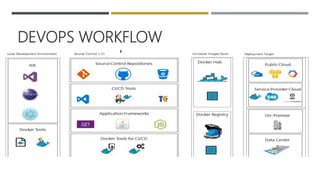

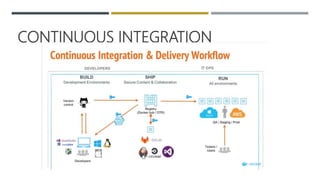

This document provides an overview of Docker and containers for data science. It begins by defining containers and discussing their history and benefits. It then explains how Docker containers work using namespaces, cgroups, and union file systems. Key Docker concepts such as Dockerfiles, images, containers, and the Docker architecture are introduced. Practical examples show how to build simple machine learning models and databases in containers. Advanced topics include Docker Compose, DevOps workflows, continuous delivery, and Kubernetes. The document is intended to give data scientists an introduction to using Docker in their work.