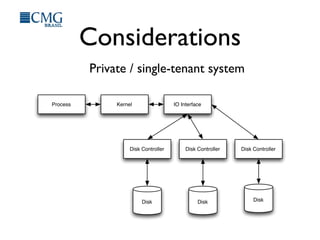

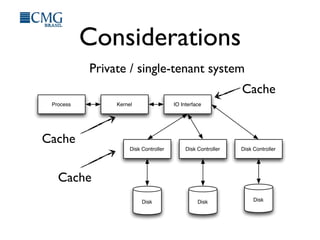

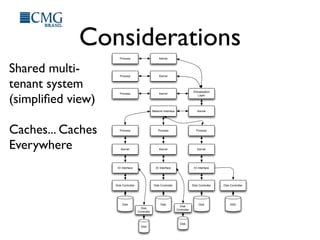

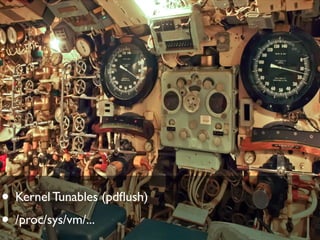

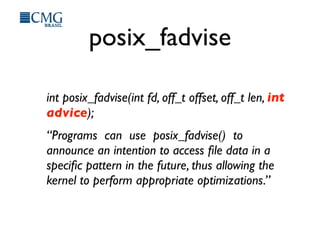

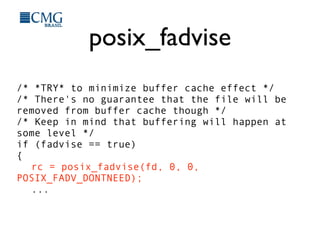

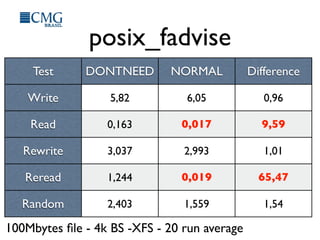

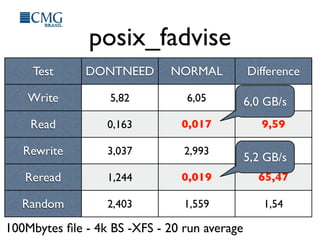

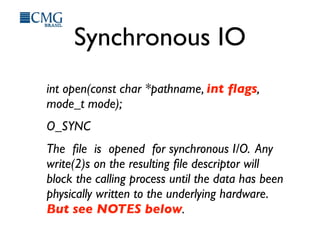

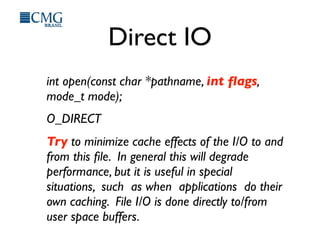

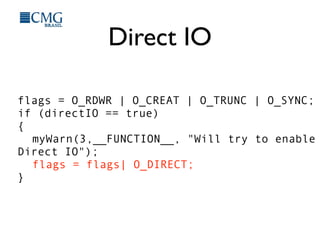

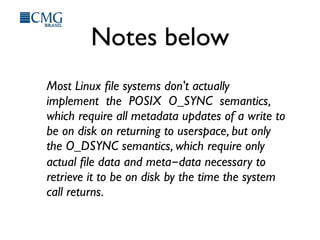

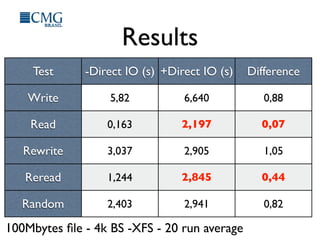

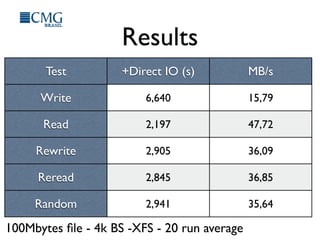

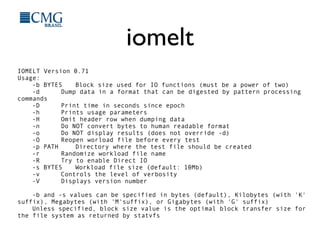

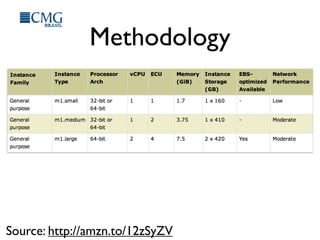

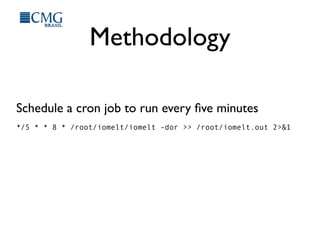

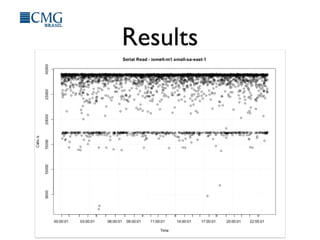

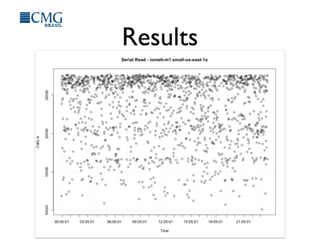

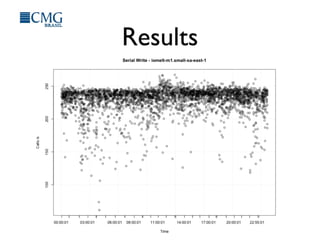

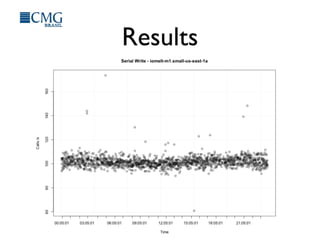

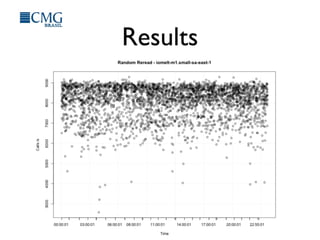

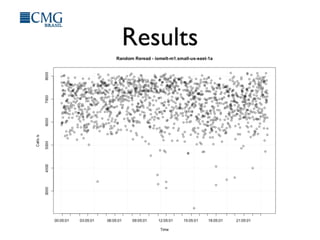

This document discusses benchmarking disk I/O performance in shared, multi-tenant cloud environments and the challenges that traditional tools and methodologies face there. It proposes a simple benchmarking tool, iomelt, which uses direct I/O and fadvise calls to minimize the effects of buffering. The results show that direct I/O can perform worse than buffered I/O. The document recommends benchmarking different instance types and regions regularly, over long periods, to analyze how consistent performance is in shared environments.
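
The two techniques named above, direct I/O and fadvise, can be illustrated with a minimal Python sketch. This is not iomelt's code: the function names `timed_write` and `compare`, the 4096-byte block size, and the scratch file name are all assumptions made for illustration. It assumes a Linux host, since `O_DIRECT` is Linux-specific and some filesystems reject it.

```python
import mmap
import os
import tempfile
import time

BLOCK_SIZE = 4096  # assumed block size; O_DIRECT needs aligned sizes and buffers


def timed_write(path, blocks, direct=False):
    """Write `blocks` blocks of BLOCK_SIZE bytes to `path`; return elapsed seconds."""
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC
    if direct:
        flags |= os.O_DIRECT  # bypass the page cache (raises AttributeError off Linux)
    fd = os.open(path, flags, 0o600)
    # An anonymous mmap is page-aligned, which O_DIRECT requires of the buffer.
    buf = mmap.mmap(-1, BLOCK_SIZE)
    try:
        buf.write(b"x" * BLOCK_SIZE)
        start = time.perf_counter()
        for _ in range(blocks):
            os.write(fd, buf)
        os.fsync(fd)  # include the flush to stable storage in the timing
        elapsed = time.perf_counter() - start
        if hasattr(os, "posix_fadvise"):
            # Hint that cached pages for this file are no longer needed, so a
            # later read test is less likely to be served from memory.
            os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)
    finally:
        buf.close()
        os.close(fd)
    return elapsed


def compare(directory, blocks=256):
    """Time buffered and direct writes in `directory`; direct is None if unsupported."""
    path = os.path.join(directory, "iobench.tmp")  # hypothetical scratch file name
    try:
        results = {"buffered": timed_write(path, blocks)}
        try:
            results["direct"] = timed_write(path, blocks, direct=True)
        except (OSError, AttributeError):
            results["direct"] = None  # filesystem or platform lacks O_DIRECT
    finally:
        if os.path.exists(path):
            os.remove(path)
    return results
```

Calling `compare(tempfile.mkdtemp())` returns a dictionary with the two timings in seconds. A single run says little in a shared environment, so the numbers only become meaningful when the same measurement is repeated over a long period, as the document recommends.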