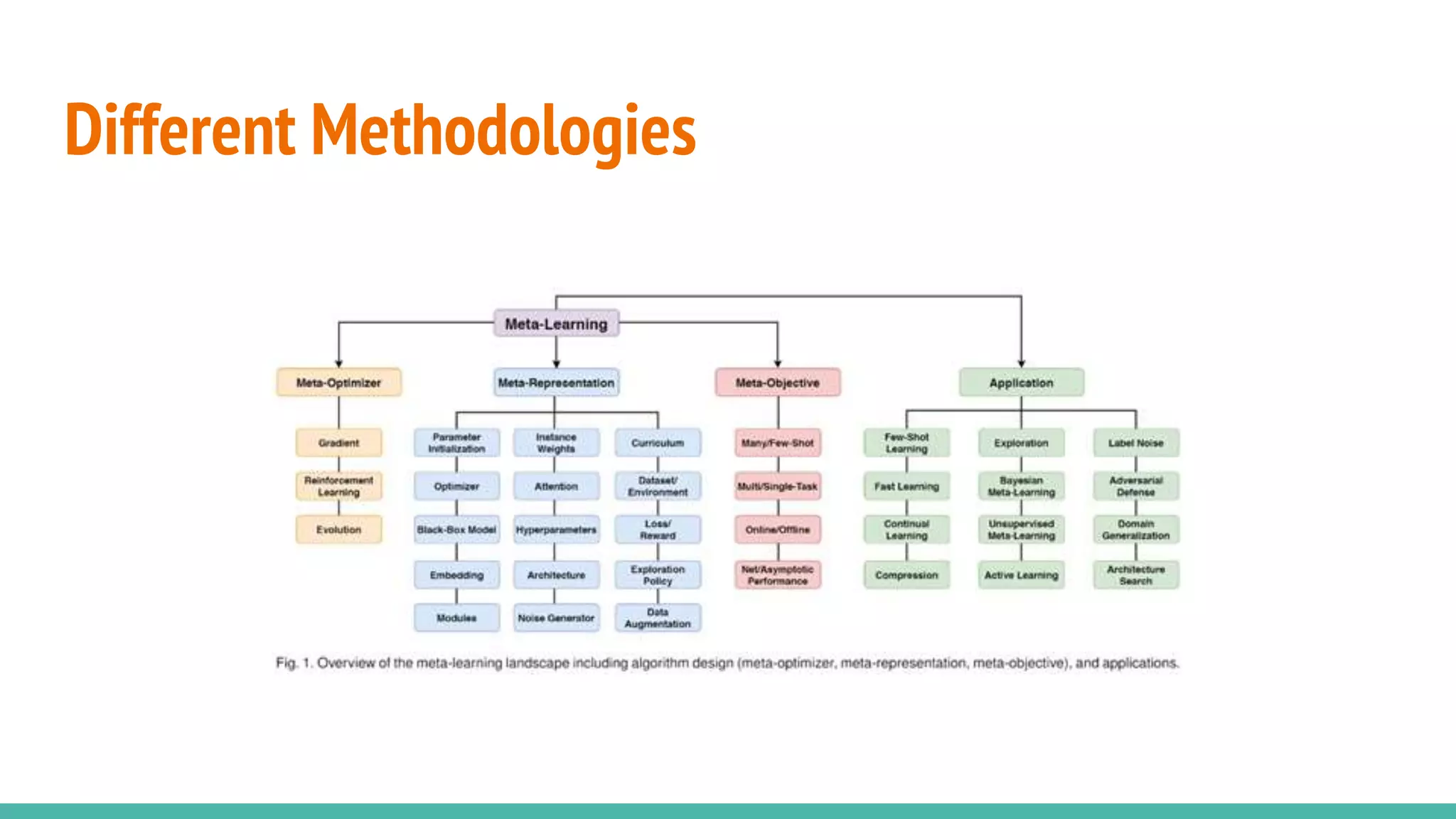

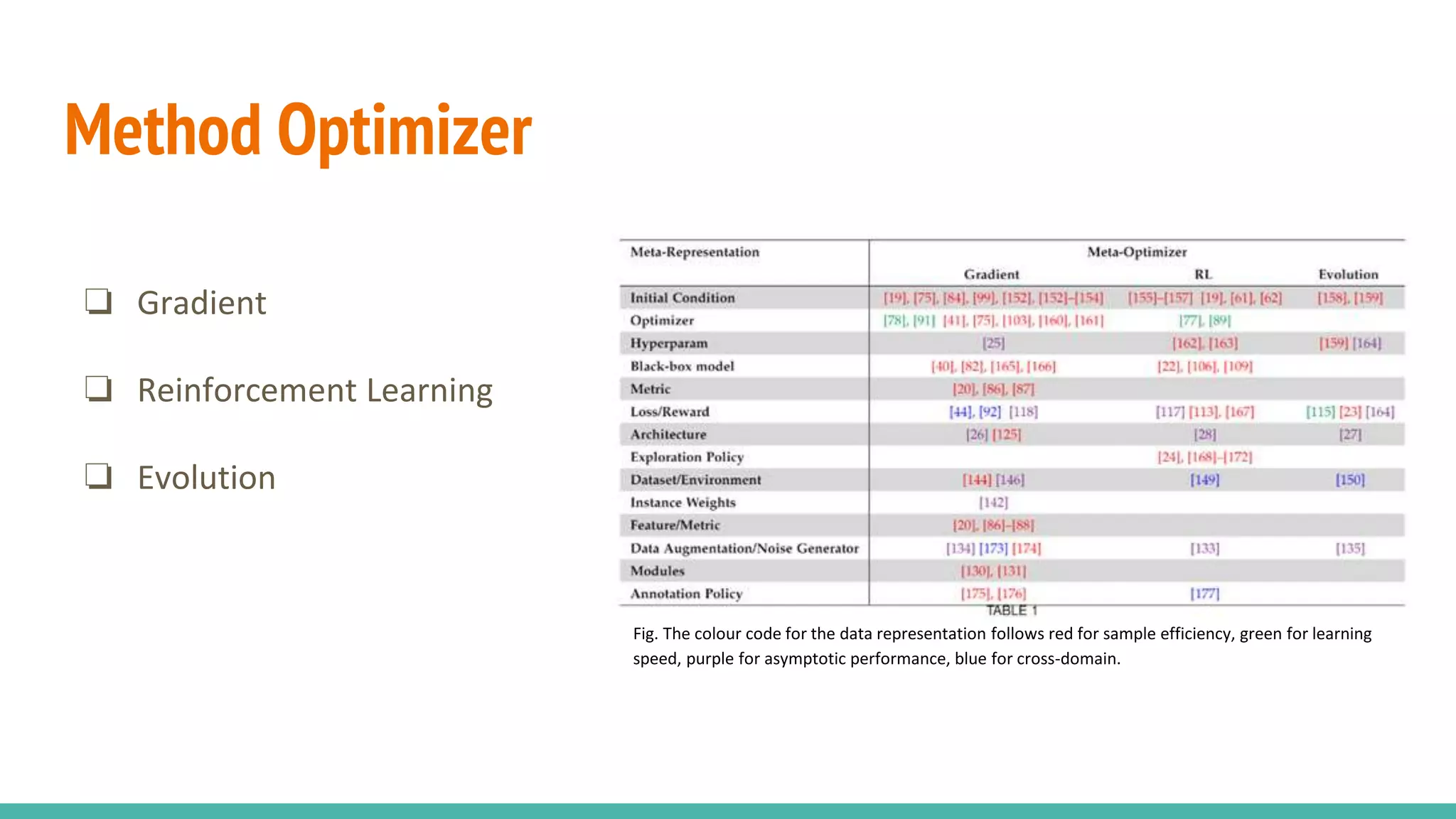

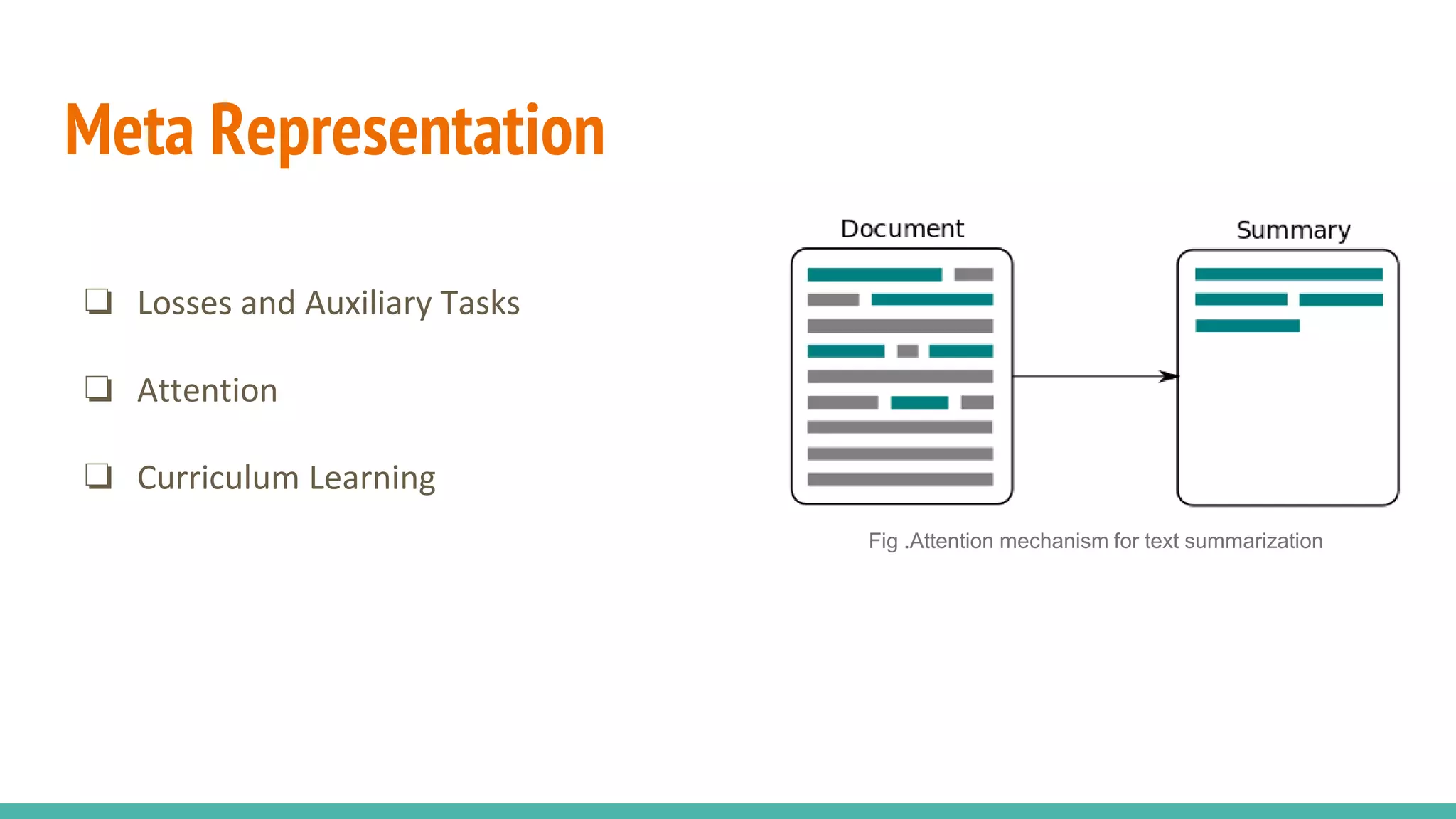

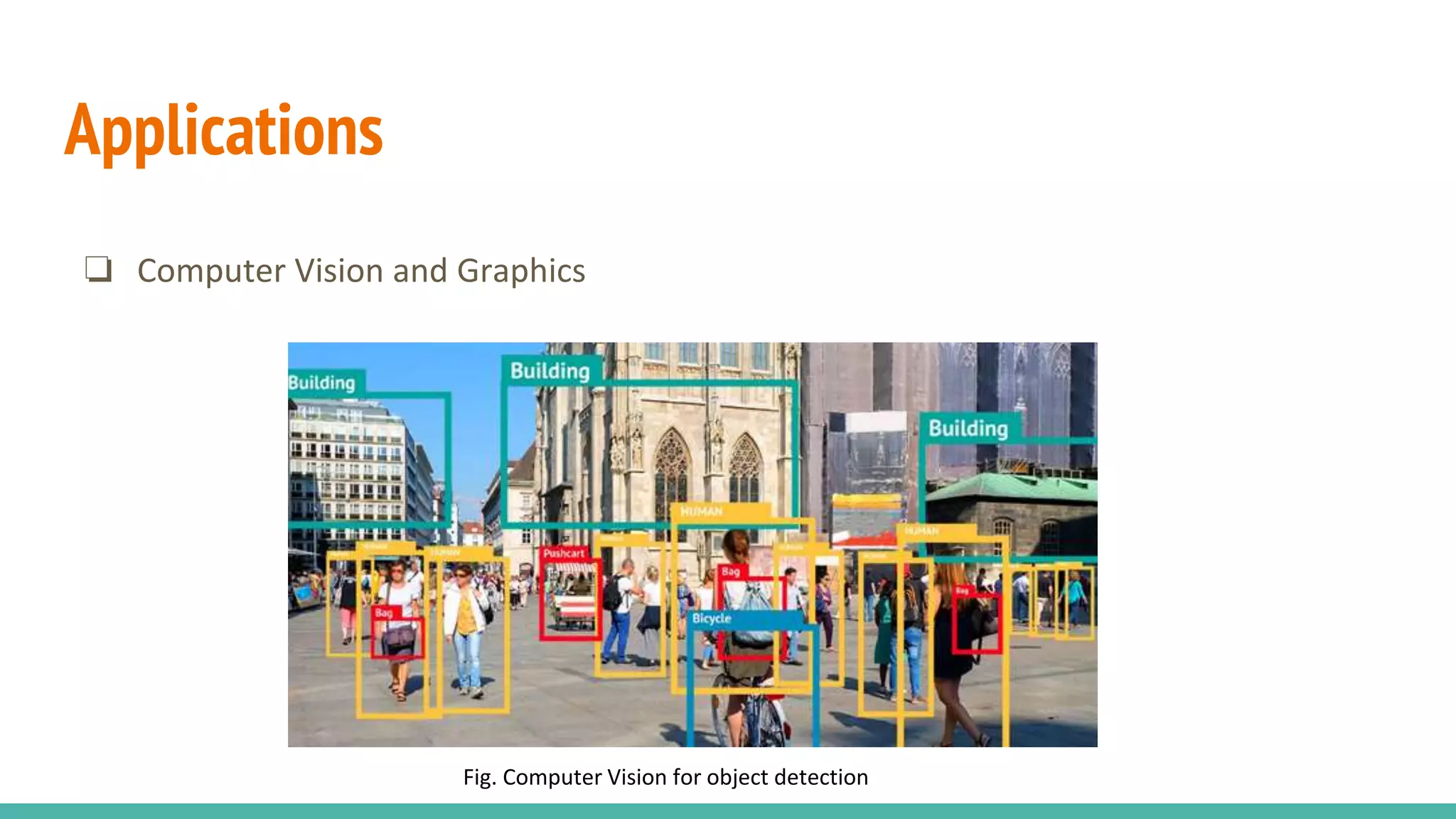

This document gives an overview of meta-learning techniques for designing neural networks. It defines meta-learning as learning from experience gathered across multiple tasks or learning episodes, with the aim of tackling challenges such as data and computation bottlenecks. It discusses fields related to meta-learning, including transfer learning, multi-task learning, and hyperparameter optimization, and proposes new taxonomies that categorize meta-learning methods by their representation, optimizer, and objective. It then surveys meta-learning methodologies, representations, objectives, and applications, with the goal of improving how neural networks learn and generalize.