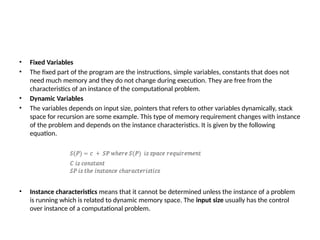

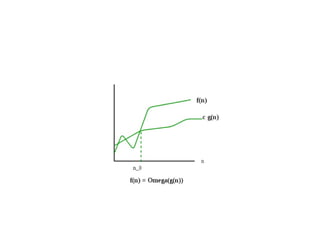

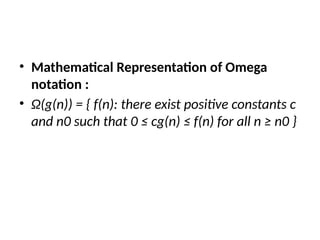

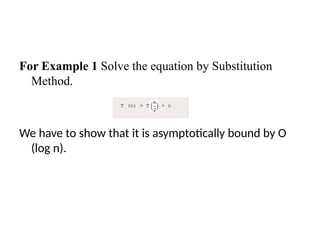

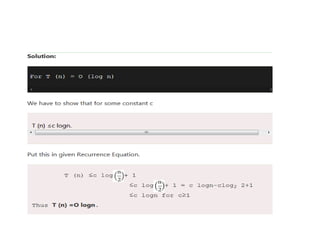

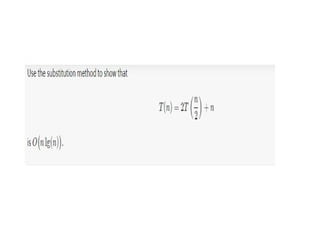

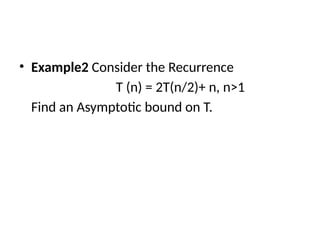

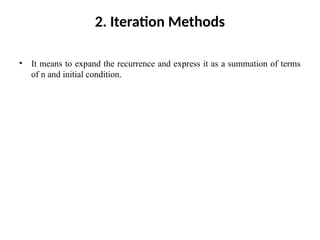

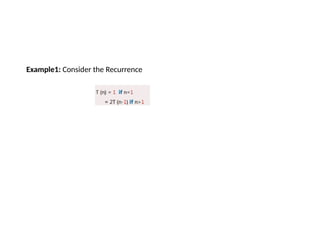

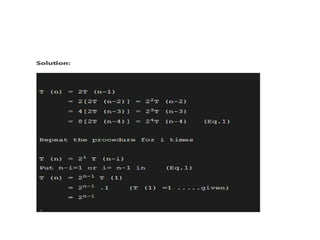

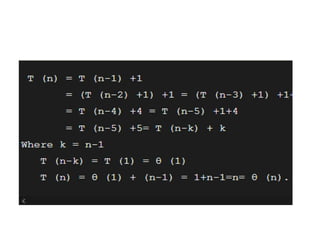

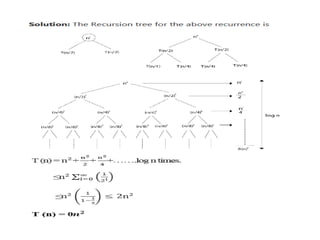

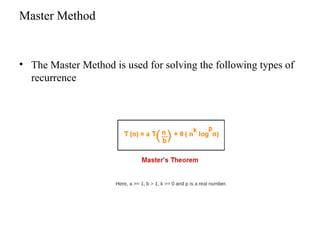

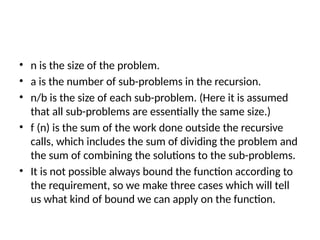

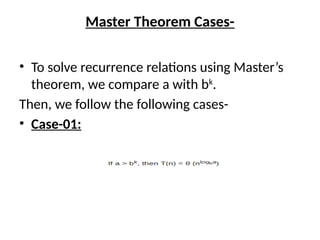

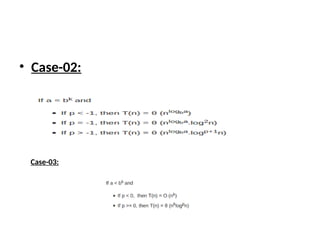

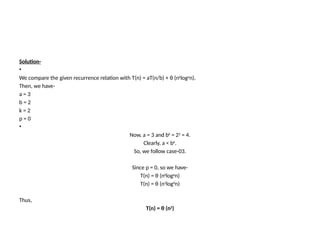

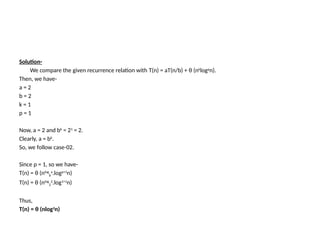

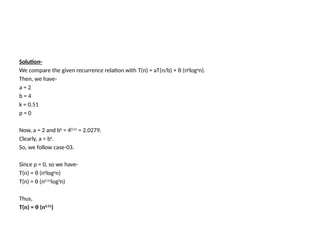

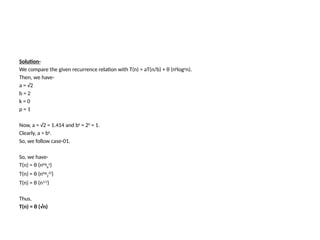

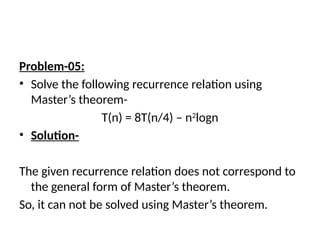

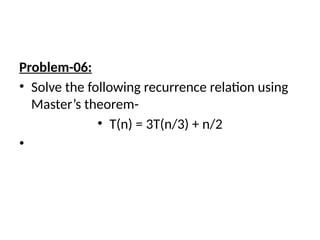

The document gives an in-depth overview of algorithms: their characteristics, efficiency analysis using asymptotic notation, and performance comparisons. It covers performance measures such as time and space complexity, as well as methods for analyzing recursive algorithms, including the substitution method, the recursion tree method, and the master theorem. Asymptotic analysis is emphasized as the central tool for evaluating algorithm efficiency, because it describes how an algorithm's cost grows with input size in the best, average, and worst cases.
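To make the master theorem concrete, here is a minimal sketch that classifies recurrences of the form T(n) = a·T(n/b) + Θ(n^d). It assumes the common simplified version of the theorem with a polynomial driving term n^d (a ≥ 1, b > 1, d ≥ 0), not the fully general statement. The function names `master_theorem` and `_poly` are hypothetical helpers written for illustration only.

```python
import math


def _poly(k: float) -> str:
    """Format n^k readably, snapping near-integer exponents (hypothetical helper)."""
    if abs(k - round(k)) < 1e-9:
        k = round(k)
    if k == 0:
        return "1"
    if k == 1:
        return "n"
    return f"n^{k:g}"


def master_theorem(a: float, b: float, d: float) -> str:
    """Asymptotic bound for T(n) = a*T(n/b) + Theta(n^d).

    Assumption: simplified master theorem with polynomial f(n) = n^d.
    For such f, the regularity condition of the third case holds
    automatically whenever d > log_b(a).
    """
    if a < 1 or b <= 1 or d < 0:
        raise ValueError("requires a >= 1, b > 1, d >= 0")

    # Critical exponent log_b(a): the growth rate of the leaves of the recursion tree.
    critical = math.log(a, b)

    if math.isclose(d, critical, abs_tol=1e-9):
        # Case 2: every level of the recursion tree does about the same work.
        return "Theta(log n)" if _poly(d) == "1" else f"Theta({_poly(d)} log n)"
    if d > critical:
        # Case 3: work at the root dominates.
        return f"Theta({_poly(d)})"
    # Case 1: work at the leaves dominates.
    return f"Theta({_poly(critical)})"
```

For example, merge sort's recurrence T(n) = 2T(n/2) + Θ(n) gives `master_theorem(2, 2, 1)`, which evaluates to `"Theta(n log n)"`. Binary search, T(n) = T(n/2) + Θ(1), gives `master_theorem(1, 2, 0)`, which evaluates to `"Theta(log n)"`. Comparing d against log_b(a) mirrors the recursion tree view: the comparison shows whether the root, the leaves, or all levels equally dominate the total work.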