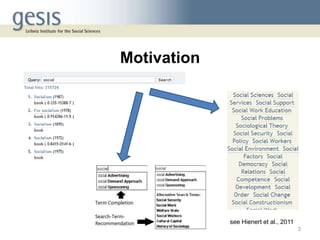

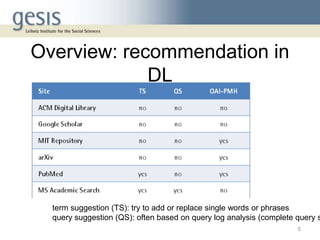

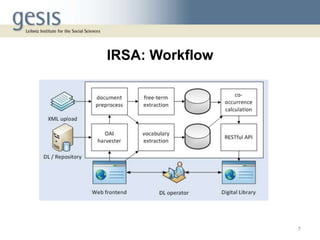

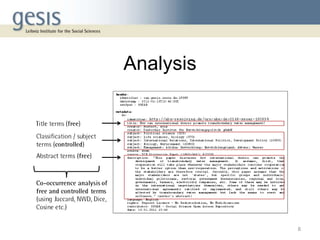

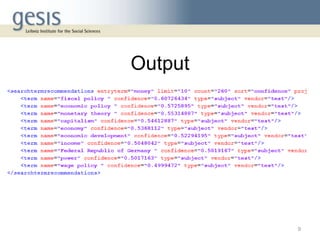

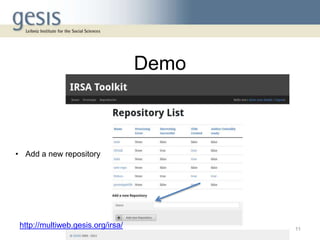

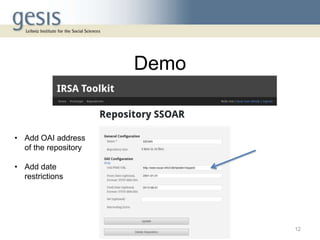

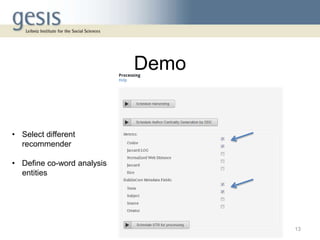

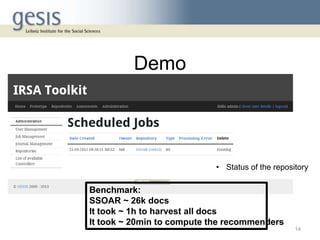

This document describes a framework for recommendation systems based on knowledge organization systems (KOS). It discusses two funded projects, IRM I and IRM II, which aimed to implement value-added information retrieval services for digital libraries by applying scholarly models. These services include term suggestion, query suggestion, and bibliometric analysis. The document outlines the Information Retrieval Service Assessment (IRSA) component, which calculates search term suggestions through co-occurrence analysis of controlled vocabulary terms in metadata harvested via OAI-PMH. It closes with a demonstration of the IRSA system, a discussion of its limitations, and a list of references.
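To illustrate the idea of co-occurrence-based term suggestion, here is a minimal sketch in Python. It is not the IRSA implementation: the function names (`build_cooccurrence`, `suggest_terms`), the toy records, and the choice of the Jaccard index as association measure are all assumptions made for this example. Each record stands in for the controlled vocabulary terms assigned to one harvested document.

```python
from collections import Counter
from itertools import combinations


def build_cooccurrence(records):
    """Count how often each term and each term pair occurs across records.

    `records` is an iterable of term lists, one list per document
    (hypothetical input format, assumed for this sketch).
    """
    term_freq = Counter()
    pair_freq = Counter()
    for terms in records:
        unique = sorted(set(terms))  # ignore duplicates within one record
        term_freq.update(unique)
        pair_freq.update(combinations(unique, 2))  # pairs in sorted order
    return term_freq, pair_freq


def suggest_terms(query, term_freq, pair_freq, top_n=5):
    """Rank terms co-occurring with `query` by the Jaccard index.

    Jaccard is an assumption here; the source does not name the measure.
    """
    scores = {}
    for (a, b), n_ab in pair_freq.items():
        if query == a:
            other = b
        elif query == b:
            other = a
        else:
            continue
        # Jaccard: shared records divided by records containing either term
        scores[other] = n_ab / (term_freq[a] + term_freq[b] - n_ab)
    # Highest score first; ties broken alphabetically for stable output
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]


# Toy data standing in for subject headings of harvested records
records = [
    ["unemployment", "labor market", "youth"],
    ["unemployment", "labor market", "policy"],
    ["unemployment", "youth"],
    ["migration", "policy"],
]
term_freq, pair_freq = build_cooccurrence(records)
suggestions = suggest_terms("unemployment", term_freq, pair_freq)
for term, score in suggestions:
    print(f"{term}: {score:.2f}")
```

In this toy data, "labor market" and "youth" each share two records with "unemployment" and rank above "policy", which shares only one. A production service would compute such statistics over the full harvested corpus and might prefer a different association measure.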