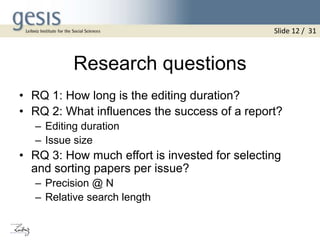

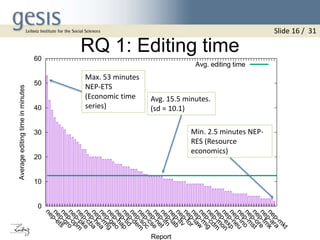

1) Creating a report took 15.5 minutes of editing time on average, with a minimum of 2.5 minutes and a maximum of 53 minutes.

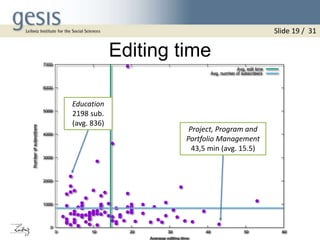

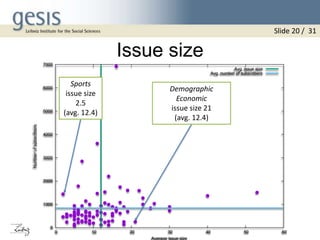

2) No correlation was found between issue size, editing time, and the success of a report; success is assumed to be driven mainly by topic and age.
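A correlation check of this kind can be sketched as follows. The source does not say which coefficient was used, so Pearson's r is an assumption here, and the function name and sample values are hypothetical illustrations, not data from the study.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric sequences.

    Assumption: the study's coefficient is not named in the source;
    Pearson's r is used here purely for illustration.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations (unnormalised covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Square roots of the summed squared deviations.
    dev_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    dev_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if dev_x == 0 or dev_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (dev_x * dev_y)


# Hypothetical per-report values (NOT from the study).
editing_minutes = [2.5, 10.0, 15.5, 30.0, 53.0]
report_success = [12, 40, 9, 33, 15]  # e.g. some usage count per report

r = pearson(editing_minutes, report_success)
print(f"r = {r:.2f}")
```

A value of r close to 0 would be consistent with the finding above. Comparing all three variables would mean running the same check on each pair.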

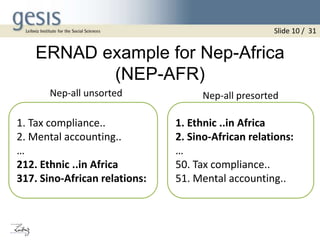

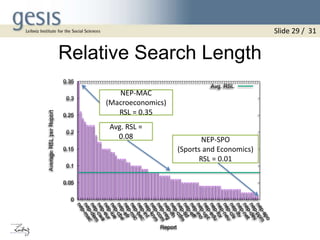

3) Editors worked comfortably with the document presorting: on average, 77-82% of the selected documents came from the top N presorted documents. The relative search length, a measure of how deep into the document list editors inspected, averaged 8% of the list, which suggests that editors selected from the upper part of the presorted list.
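The two measures in this finding can be illustrated with a minimal sketch. The definitions are assumptions, since the source gives no formulas: the top-N share is taken as the fraction of selected documents ranked within the first N positions, and the relative search length as the rank of the deepest selected document divided by the list length. The function names and sample ranks are hypothetical.

```python
def top_n_share(selected_ranks, n):
    """Fraction of selected documents whose 1-based rank is within the top n."""
    if not selected_ranks:
        raise ValueError("no documents selected")
    return sum(1 for rank in selected_ranks if rank <= n) / len(selected_ranks)


def relative_search_length(selected_ranks, list_length):
    """Deepest selected rank divided by the list length.

    Assumed definition: the source only describes this measure as
    "how deep editors inspected the document list".
    """
    if not selected_ranks:
        raise ValueError("no documents selected")
    if list_length <= 0:
        raise ValueError("list_length must be positive")
    return max(selected_ranks) / list_length


# Hypothetical example: ranks of the documents one editor picked
# from a presorted list of 200 candidates (NOT data from the study).
selected = [1, 2, 4, 7, 16]

print(top_n_share(selected, n=10))                         # 4 of 5 picks in the top 10
print(relative_search_length(selected, list_length=200))   # deepest pick at rank 16 of 200
```

Under these assumed definitions, a high top-N share combined with a small relative search length indicates that the presorting places the documents editors want near the top of the list.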

1. Introduction

• Current awareness in digital libraries

– To inform users / subscribers about new / relevant acquisitions in their libraries [1].

• Current awareness services allow subscribers to keep up to date with new additions in a certain area of research.

• Selection of relevant documents can be done (semi-)automatically or manually.

• For this work we focus on the intellectual editing process

• Aim of this work: How do editors work when creating a subject specific report in Digital Libraries (DL)?

![Slide 4 / 31](https://image.slidesharecdn.com/isifinal-150521101602-lva1-app6891/85/Assessing-a-human-mediated-current-awareness-service-4-320.jpg)